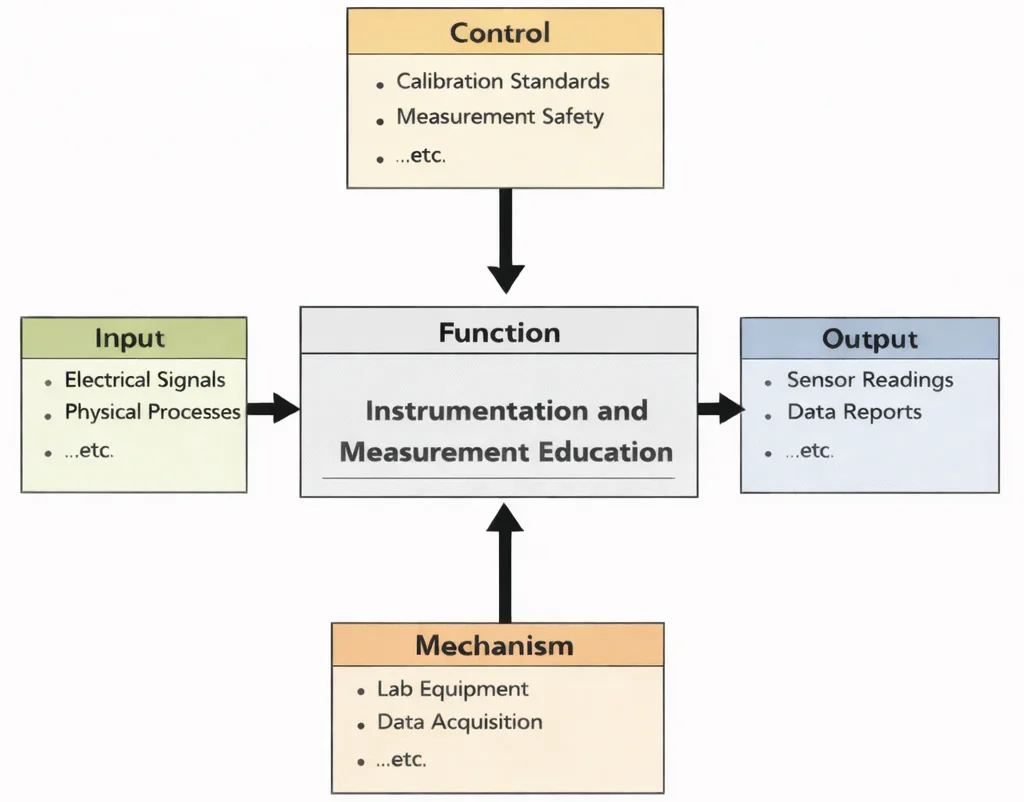

Instrumentation and Measurement Education is, at its heart, training the habit of trusting numbers only after earning that trust. The diagram shows the discipline as a pipeline: students begin with real signals and real measurement questions (Input), then learn to operate under the quiet “rules of truth” such as calibration, traceability, and safe practice (Control). With instruments, sensors, probes, and data-acquisition systems as their working hands (Mechanism), they practice turning messy reality into clean information. The outcome is not just graphs and reports (Output), but the ability to decide whether a reading is meaningful, what uncertainty it carries, and how to test a system so conclusions can stand up in engineering practice.

Instrumentation and Measurement serve as the foundational pillars for all scientific and engineering disciplines, providing the tools and techniques needed to observe, quantify, and analyze physical phenomena. In the context of Electrical and Electronic Engineering, these systems ensure accuracy, precision, and control in complex electronic environments. From measuring voltage in circuits to capturing biosignals in Biomedical Electronics, instrumentation technologies are essential to innovation and operational safety.

Accurate sensing and data acquisition are critical in Control Systems Engineering, where feedback loops depend on precise measurements. Similarly, the performance of advanced Communication Engineering systems hinges on real-time signal diagnostics and calibration tools. Understanding how signals behave also involves techniques studied in Signal Processing.

Measurement systems are integrated directly into Electronics Engineering devices and are embedded within microcontrollers in Embedded Systems and Microelectronics. As part of intelligent networks, they are also core components of the Internet of Things (IoT) and Smart Technologies, enabling devices to sense their environments and respond autonomously.

Instrumentation is equally vital in sustainable technologies, supporting system diagnostics in Renewable Energy and Energy Storage and performance evaluation in Power Systems Engineering. In emerging domains like Quantum Electronics, measurement sensitivity approaches quantum limits, making advanced instrumentation a research frontier.

As automation advances, Robotics and Automation in E&E rely on a fusion of sensors, actuators, and feedback mechanisms—each dependent on robust instrumentation. These applications extend into Environmental Engineering, where monitoring instruments play a crucial role in pollution control and regulatory compliance.

Specific environmental applications include monitoring air quality through Air Quality Engineering sensors and analyzing water systems in Water Resources Engineering. Broader data integration is supported by Environmental Monitoring and Data Analysis, linking instrumentation to policy outcomes in Environmental Policy and Management.

Instrumentation engineers also contribute to the development of Green Building and Sustainable Design by deploying smart sensors for lighting, occupancy, and energy control. In fields like Renewable Energy Systems Engineering and Industrial Ecology and Circular Economy, instrumentation ensures system optimization and accountability in sustainable development.

From factory automation in Industrial and Manufacturing Technologies to field devices in Waste Management Engineering, instrumentation spans diverse industries. Its importance also reaches into climate-oriented efforts, such as Climate Change Mitigation and Adaptation and ecosystem stewardship via Ecological Engineering.

Students entering this field develop skills that combine theory, practical design, and system-level thinking. Whether in healthcare, energy, manufacturing, or environmental protection, instrumentation and measurement form the backbone of reliable, efficient, and safe engineering systems in the modern world.

This image depicts a futuristic measurement laboratory where data quality is the central theme. In the foreground, an engineer carefully adjusts or probes a device on a glowing test surface, suggesting calibration and precise signal checking. Around the room, robotic arms and benchtop instruments represent automated testing, repeatable experiments, and controlled measurement setups. Large translucent dashboards show waveforms, dials, and trend charts, emphasizing how sensors and instruments convert real-world quantities (temperature, pressure, strain, voltage, flow, vibration) into readable signals. Overall, the scene communicates the heart of instrumentation and measurement: accuracy, repeatability, calibration, and interpreting data correctly so decisions in engineering and science can be trusted.

Instrumentation and Measurement – FAQ

- What is the difference between accuracy and precision in measurements?

- Accuracy describes how close a measured value is to the true value, while precision describes how repeatable the measurements are. A system can be precise but not accurate if it is consistently offset from the true value.

- What is a transducer, and why is it important in instrumentation?

- A transducer converts a physical quantity such as temperature, pressure, or displacement into an electrical signal. This conversion allows measurement systems and controllers to sense and process real-world phenomena electronically.

- How do systematic errors differ from random errors?

- Systematic errors are repeatable biases that shift all readings in one direction, often due to poor calibration or flawed procedures. Random errors arise from unpredictable influences such as noise or small environmental fluctuations, causing scatter around a mean value.

- Why is calibration essential for measurement instruments?

- Calibration compares an instrument’s output to known reference standards and corrects any consistent deviations. Regular calibration ensures that readings remain trustworthy over time, even as components age or conditions change.

- What is signal conditioning in a measurement system?

- Signal conditioning prepares sensor signals for acquisition or processing by amplifying weak signals, filtering noise, shifting levels, or providing isolation. Proper conditioning improves measurement accuracy and protects downstream electronics.

- How do ADCs and DACs link the analog world to digital systems?

- Analog-to-digital converters (ADCs) sample continuous analog signals and express them as digital numbers for processing by microcontrollers or computers. Digital-to-analog converters (DACs) perform the reverse conversion, generating analog voltages or currents from digital values for actuators or analog circuits.

- What is Nyquist frequency, and why does it matter in sampling?

- The Nyquist frequency is half the sampling rate. To avoid aliasing, the sampling rate must be more than twice the highest frequency present in the signal. Sampling too slowly folds high-frequency content into lower frequencies, distorting the recorded data.

- Which factors guide sensor selection for a measurement task?

- Engineers consider measurement range, required accuracy, sensitivity, response time, environmental limits, physical size, and how easily the sensor interfaces with the rest of the system. The chosen sensor must perform reliably under real operating conditions.

- How do temperature variations affect measurement equipment?

- Temperature changes can alter component values, shift sensor characteristics, and introduce drift in instrument readings. Designers use temperature-stable components, compensation circuits, and controlled enclosures to reduce these effects.

- How are wireless and IoT technologies changing instrumentation and measurement?

- Wireless and IoT solutions allow large numbers of distributed sensors to send data to central or cloud-based systems in real time. They enable remote monitoring, predictive maintenance, and richer data analytics, but also introduce challenges in power management, data reliability, and security.


Key Concepts in Instrumentation and Measurement

Instrumentation

Instrumentation is the multifaceted discipline dedicated to creating and deploying devices that can accurately measure, monitor, and control a wide array of physical and environmental parameters. Its applications span across scientific research, industrial automation, environmental monitoring, healthcare diagnostics, and aerospace engineering. Core components include:

- Sensors and Transducers: Fundamental to instrumentation, sensors detect physical or chemical quantities such as temperature, pressure, humidity, flow, acceleration, and pH, and convert them into electrical signals. More generally, a transducer is any device that converts energy from one form to another, so most sensors are transducers, as are actuators that work in the opposite direction. High-quality instrumentation leverages advanced sensors such as strain gauge pressure sensors, NTC/PTC thermistors, MEMS accelerometers, optical fiber sensors, and piezoelectric elements. Calibration and stability under varying conditions are critical for reliable performance in industrial-grade applications.

- Signal Conditioning Circuits: Raw sensor outputs often need modification before digitization or use in control systems. Signal conditioning includes amplification (using op‑amps or instrumentation amplifiers), filtering (low-pass, high-pass, band-pass, notch filters), isolation, and linearization. Modern systems integrate programmable gain amplifiers (PGAs) and digitally controlled filters to adjust to varying signal levels and noise characteristics, ensuring that the subsequent data acquisition stage receives signals within its optimal dynamic range.

- Data Acquisition Systems (DAQ): DAQ systems capture analog signals through analog-to-digital converters (ADCs), and often digital inputs as well, sampling at specified rates and storing the data in buffers or non-volatile memory for analysis. Complex DAQ setups include multiple synchronized channels, trigger mechanisms, real-time clocks, and interfaces like USB, Ethernet, or wireless connectivity. They may incorporate onboard microcontrollers or FPGA-based processing to enable edge analytics, data compression, and real-time visualization, turning raw signals into actionable intelligence.

Measurement

Measurement refers to the fundamental process of assigning numerical values to physical quantities with respect to defined units. This requires a rigorous approach to quantify how much of a parameter is present, how accurately it reflects reality, and how reliably it can be repeated. Essential attributes include:

- Accuracy: Accuracy indicates how closely a measured value matches the true or accepted standard. It is influenced by calibration, systematic errors, sensor non-linearity, and environmental factors. Highly accurate instrumentation typically relies on calibration traceable to national metrology standards and on regular verification to maintain conformity over time.

- Precision: Precision measures the consistency of repeated readings under unchanged conditions. High precision means results are tightly clustered, even if they are consistently offset. Precision relies on minimal noise, stable power supplies, thermal shielding, and consistent signal conditioning to reduce random errors and ensure repeatability in scientific or industrial experiments.

- Range and Sensitivity: A sensor’s range is the interval between its minimum and maximum measurable values, while sensitivity is the change in output per unit change in the measured quantity; the smallest change the system can distinguish is its resolution. For instance, microvolt-level resolution is critical for biomedical ECG front ends, while wide-range temperature measurements might require thermocouples capable of spanning from –200 °C to +1,200 °C. Trade-offs between range, sensitivity, and resolution must be carefully balanced to match application requirements.
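The distinction between accuracy and precision can be made concrete with a few lines of Python. The sketch below is only an illustration: the two sets of readings and the 10.000 V reference are invented values, and the function name `summarize_readings` is hypothetical. The mean offset from the reference stands in for accuracy, and the sample standard deviation stands in for precision.

```python
import statistics

def summarize_readings(readings, reference):
    """Return (bias, spread) for repeated readings of a known reference value."""
    mean = statistics.fmean(readings)
    bias = mean - reference               # systematic offset: relates to accuracy
    spread = statistics.stdev(readings)   # sample standard deviation: relates to precision
    return bias, spread

# Hypothetical readings of a 10.000 V reference from two voltmeters.
meter_a = [10.21, 10.20, 10.22, 10.21, 10.20]   # tightly clustered but offset
meter_b = [9.90, 10.12, 10.05, 9.93, 10.00]     # centered but scattered

for name, data in (("A", meter_a), ("B", meter_b)):
    bias, spread = summarize_readings(data, reference=10.0)
    print(f"meter {name}: bias = {bias:+.3f} V, spread = {spread:.3f} V")
```

Meter A is precise but not accurate, so a calibration offset would fix it; meter B is accurate on average but imprecise, so averaging or noise reduction is the more useful remedy.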

For comprehensive best practices, calibration techniques, and system design strategies in instrumentation and measurement, consult the ISA (International Society of Automation), which provides guidelines and technical papers for professionals in the automation and instrumentation field.

Components of Instrumentation Systems

Sensors and Transducers

Sensors and transducers are at the heart of any instrumentation system, serving as the front-line components that interface with the physical world. They are responsible for detecting environmental or system variables and converting them into readable electrical signals. The diversity of sensors available today supports applications across fields as varied as biomedical engineering, aerospace, manufacturing automation, and environmental monitoring. These devices must be carefully selected to suit operational ranges, accuracy requirements, and environmental tolerances.

- Temperature Sensors: These include thermocouples, which generate a voltage in response to temperature differences; resistance temperature detectors (RTDs), whose electrical resistance rises with temperature in a well-defined way; and thermistors, whose resistance changes steeply with temperature (falling for NTC types, rising for PTC types). Each type has distinct advantages and trade-offs, such as cost, sensitivity, and temperature range. Thermocouples are often used in high-temperature environments like furnaces, while RTDs are preferred for precision measurements in laboratory or industrial settings.

- Pressure Sensors: Pressure measurements are critical in hydraulic systems, weather instrumentation, and biomedical devices like blood pressure monitors. Strain gauges operate on the principle of resistance change under mechanical deformation, while piezoelectric sensors generate charge in response to stress, making them ideal for dynamic pressure changes. Capacitive sensors detect variations in pressure by measuring changes in capacitance, often used in applications requiring high sensitivity and durability.

- Flow Sensors: These are essential in fluid dynamics, chemical processing, and utility management. Ultrasonic flow meters use sound waves to measure flow rate without coming into contact with the fluid, ideal for clean or hazardous liquids. Electromagnetic flow meters work on Faraday’s Law and are suitable for conductive fluids, while turbine flow meters use mechanical rotation to determine flow velocity, making them simple and cost-effective.

- Position Sensors: Used in robotics, automotive controls, and industrial automation, these include potentiometers for linear or angular displacement, encoders for precision rotary measurement, and Hall effect sensors for detecting proximity or rotational position in magnetic fields. Modern robotics and CNC machinery heavily rely on encoders for accurate position feedback and closed-loop control.
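To show how a raw sensor quantity becomes an engineering value, here is a minimal sketch for one of the sensors above, the RTD. It assumes a standard platinum element (such as a Pt100) that follows the IEC 60751 quadratic for temperatures of 0 °C and above. The function names are hypothetical, and a real design would also handle sub-zero temperatures and lead-wire resistance.

```python
import math

# IEC 60751 coefficients for a standard platinum RTD, valid for 0 °C and above.
A = 3.9083e-3
B = -5.775e-7

def rtd_resistance(temp_c, r0=100.0):
    """Resistance in ohms of a platinum RTD at temp_c (>= 0 °C); r0 is the 0 °C value."""
    return r0 * (1.0 + A * temp_c + B * temp_c ** 2)

def rtd_temperature(resistance, r0=100.0):
    """Invert the quadratic to recover temperature in °C from measured resistance."""
    # Solve B*T^2 + A*T + (1 - R/R0) = 0 and keep the physically meaningful root.
    disc = A * A - 4.0 * B * (1.0 - resistance / r0)
    return (-A + math.sqrt(disc)) / (2.0 * B)

r = rtd_resistance(100.0)
print(f"Pt100 at 100 °C: {r:.2f} ohm, converted back: {rtd_temperature(r):.2f} °C")
```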

Signal Conditioning

Signal conditioning refers to the set of techniques and hardware used to manipulate a sensor’s output into a form suitable for processing. Since raw signals can be noisy, weak, or incompatible with downstream systems, signal conditioning ensures fidelity, safety, and accuracy. Without it, even the most accurate sensor can provide misleading or unreadable data. These circuits often appear as intermediary modules or integrated parts of data acquisition systems and are tailored to specific measurement needs.

- Amplification to boost weak sensor signals: Signal amplification involves using operational amplifiers or instrumentation amplifiers to increase the signal strength to a usable level without distorting its content. For example, strain gauge sensors may produce millivolt-level signals that require amplification to volts for digitization.

- Filtering to remove noise or unwanted frequencies: Filters may be analog (like RC, LC, or active filters) or digital, removing high-frequency electrical noise, power line interference, or irrelevant frequency bands. In ECG acquisition, for example, a high-pass filter suppresses slow baseline wander caused by motion and respiration, a low-pass filter removes high-frequency noise and electromagnetic interference, and a notch filter rejects power line pickup.

- Analog-to-Digital Conversion (ADC) to digitize signals for computer processing: ADCs convert continuous analog signals into discrete digital numbers using techniques such as successive approximation or sigma-delta modulation. Resolution (in bits) and sampling rate are key parameters in ADC selection. For a detailed explanation, see the overview of analog-to-digital conversion published by Analog Devices.
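The two ADC parameters named above, resolution and sampling rate, can be explored with a short sketch. It models an ideal unipolar converter, so real devices will show additional offset, gain, and linearity errors. All function names are hypothetical, and the 3.3 V reference and 12-bit width are example values only.

```python
def adc_lsb(v_ref, bits):
    """Size of one code step (volts) for an ideal ADC with full-scale span v_ref."""
    return v_ref / (2 ** bits)

def quantize(voltage, v_ref, bits):
    """Ideal unipolar ADC: map 0..v_ref to an integer code, clamped to the valid range."""
    code = int(voltage / adc_lsb(v_ref, bits))
    return max(0, min(2 ** bits - 1, code))

def ideal_snr_db(bits):
    """Theoretical SNR of a full-scale sine limited only by quantization noise."""
    return 6.02 * bits + 1.76

def alias_frequency(f_signal, f_sample):
    """Apparent frequency of a tone at f_signal after sampling at f_sample."""
    f = f_signal % f_sample
    return min(f, f_sample - f)

print(f"12-bit, 3.3 V: LSB = {adc_lsb(3.3, 12) * 1e6:.0f} uV, "
      f"ideal SNR = {ideal_snr_db(12):.1f} dB")
print(f"7 kHz tone sampled at 10 kHz appears at {alias_frequency(7000, 10000)} Hz")
```

The last line illustrates aliasing: a 7 kHz tone lies above the 5 kHz Nyquist frequency of a 10 kHz sampler, so it folds back into the measured band.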

Data Acquisition Systems

Data Acquisition Systems (DAQ) encompass both hardware and software used to collect, digitize, and store measurement data. They are crucial for transforming analog input from sensors into usable digital formats and are employed in research labs, industrial plants, environmental monitoring stations, and automotive testing facilities. High-end DAQs may support hundreds of input channels, high sampling speeds, and real-time processing with edge analytics capabilities.

- Devices and software for collecting and storing data, such as LabVIEW or MATLAB: LabVIEW provides a visual programming environment ideal for prototyping, while MATLAB offers extensive toolkits for signal analysis and machine learning integration. These tools also support real-time data streaming, model-based design, and system identification.

- Interfaces like USB, Ethernet, or wireless communication: Connectivity options define how DAQs communicate with host systems, remote servers, or cloud environments. USB and Ethernet are common in benchtop DAQs, while wireless interfaces (e.g., Wi-Fi, Zigbee) allow mobility and scalability in distributed sensor networks.

Output Devices

Output devices translate collected data into a human-readable or machine-interpretable format. They are vital for visualization, diagnostics, and automated control in closed-loop systems. These outputs may appear on simple analog dials or sophisticated touchscreen HMIs (Human-Machine Interfaces) with graphical data overlays. For automated systems, outputs also include control signals that activate actuators or other subsystems based on measurement data.

- Displays: Analog meters, digital readouts, and graphical screens all present measurements to operators. Analog meters are still useful in legacy systems or where visual trends matter, while digital displays provide unambiguous numeric readouts and integration with software dashboards. OLED and LCD graphical interfaces are common in handheld devices and industrial controllers.

- Controllers: Systems such as Programmable Logic Controllers (PLCs) use measurement data for feedback control. PLCs are central in manufacturing automation, reading sensor inputs, executing logic in real time, and adjusting actuators. Advanced PLCs integrate with SCADA systems and offer cloud connectivity and remote diagnostics for robust industrial control.

Applications of Instrumentation and Measurement

Industrial Automation

Instrumentation is a cornerstone of automated manufacturing and process control. In industrial settings such as chemical processing plants, food production lines, and refineries, instrumentation enables continuous monitoring of operational variables that are crucial to maintaining efficiency, safety, and product quality.

- Monitoring: Measuring temperature, pressure, flow, and level in industrial processes is critical for optimizing operations and avoiding system failures. Sensors gather real-time data which is displayed on supervisory systems or HMIs (Human-Machine Interfaces), helping operators make quick decisions.

- Control Systems: Using data from sensors to regulate machinery and processes ensures consistency and repeatability. Closed-loop systems automatically adjust input variables in response to deviations, reducing human intervention and increasing precision.

- Robotics: Position and force sensors give robots the precision and adaptability to perform complex, repetitive tasks such as welding, assembly, and material handling with high accuracy. Advanced feedback loops help robots adapt to variations in their environments.
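The closed-loop idea described under Control Systems can be sketched as a small simulation. Everything here is an assumption made for illustration: the process is a hypothetical first-order heater model, the controller is a basic PI law with a clamped output, and the gains were chosen only so that this toy example settles smoothly.

```python
def simulate_pi_loop(setpoint, steps=600, dt=0.1, kp=0.1, ki=0.05):
    """Simulate a PI controller driving a hypothetical first-order thermal process."""
    ambient, gain, tau = 20.0, 50.0, 5.0   # illustrative plant parameters
    temperature = ambient
    integral = 0.0
    history = []
    for _ in range(steps):
        error = setpoint - temperature             # deviation reported by the sensor
        candidate = integral + error * dt
        output = kp * error + ki * candidate
        clamped = max(0.0, min(1.0, output))       # actuator limited to 0..100 %
        if output == clamped:
            integral = candidate                   # integrate only when not saturated
        # First-order plant: the heater pulls the process toward ambient + gain * output.
        temperature += dt * (ambient + gain * clamped - temperature) / tau
        history.append(temperature)
    return history

trace = simulate_pi_loop(setpoint=40.0)
print(f"final temperature: {trace[-1]:.2f} °C, peak: {max(trace):.2f} °C")
```

The loop only works as well as the measurement feeding it: any sensor bias appears directly as a steady offset between the true process value and the setpoint.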

Medical Diagnostics

Instrumentation has transformed healthcare by enabling accurate diagnosis, continuous monitoring, and personalized treatment plans. From high-end imaging systems in hospitals to wearable monitors at home, instrumentation plays a vital role in patient care and medical research.

- Diagnostic Equipment: MRI machines, X-ray systems, and blood glucose monitors rely on highly calibrated sensors to detect minute biological changes. These devices convert physical parameters into electronic data for doctors to interpret, often with the aid of artificial intelligence algorithms.

- Patient Monitoring: Devices measuring heart rate, blood pressure, oxygen saturation, and EEG/ECG provide real-time data for clinical decisions. Such instruments are used in ICUs, during surgery, and in home-care setups to alert caregivers to critical changes in patient status.

- Wearable Health Devices: Fitness trackers and implantable monitors for continuous data collection are widely used in preventive care and chronic disease management. They offer insights into activity levels, sleep patterns, and overall health trends. See how [wearable medical technology](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8829816/) is advancing continuous care and real-time diagnostics.

Environmental Monitoring

Instrumentation ensures compliance with environmental regulations and promotes sustainability through continuous measurement of air, water, and soil quality.

- Air Quality Monitors: Measuring pollutants like CO₂, NOx, and particulate matter helps identify emission sources and assess health risks in urban and industrial zones. These instruments play a role in climate research and public health policy.

- Water Quality Sensors: Monitoring parameters like pH, turbidity, and dissolved oxygen is essential in water treatment plants, aquaculture, and environmental impact assessments. Portable sensors are also used in field surveys and disaster response.

- Climate Monitoring: Sensors for tracking temperature, humidity, and wind speed in meteorology feed data into forecasting models. They are deployed in weather stations, satellites, and ocean buoys to provide accurate, long-term environmental data.

Aerospace and Defense

Advanced instrumentation is critical in aerospace systems and military applications, where reliability, precision, and robustness under extreme conditions are non-negotiable.

- Avionics: Instruments for altitude, speed, and orientation in aircraft are vital for navigation and safety. Redundant sensor systems ensure continuous operation even in the event of component failures.

- Space Exploration: Sensors for analyzing atmospheric conditions, radiation, and planetary surfaces must operate reliably under vacuum, extreme temperatures, and high-radiation environments. These instruments enable scientific discoveries and mission-critical decisions.

- Weapon Systems: Target tracking and guidance systems depend on radar, infrared, and laser-based instrumentation. Real-time measurement enables precision strikes and adaptive response to threats in dynamic combat environments.

Energy and Power Systems

Instrumentation improves efficiency and safety in power generation and distribution, and plays a growing role in integrating renewable energy sources into the grid.

- Smart Grids: Monitoring voltage, current, and frequency in real-time allows utilities to respond dynamically to demand changes and prevent outages. Smart meters enable consumers to track their usage and adjust consumption habits.

- Renewable Energy: Sensors for optimizing solar panel angles and wind turbine operations increase energy yield. In solar farms and wind parks, automated tracking and adjustment systems rely on accurate instrumentation for optimal performance.

- Energy Audits: Measuring energy consumption and identifying inefficiencies supports sustainability initiatives in buildings, factories, and campuses. Portable meters and IoT-connected sensors allow for comprehensive energy profiling.

Research and Development

Accurate measurement is essential in scientific and engineering research, where new theories are validated through experimental data.

- Material Testing: Measuring mechanical properties like stress, strain, and hardness supports the development of new materials for construction, aerospace, and medical implants. These measurements must meet rigorous standards of repeatability and accuracy.

- Chemical Analysis: Instruments like spectrometers and chromatographs detect chemical compositions at micro or nano scales, supporting research in pharmaceuticals, food safety, and nanotechnology.

- Experimental Physics: High-precision tools for studying subatomic particles are at the core of discoveries in quantum mechanics and particle physics. These tools must operate in ultra-clean environments with extremely low tolerances for error.

Tools and Techniques in Instrumentation and Measurement

Measurement Devices

- Multimeters: Modern digital multimeters are essential for troubleshooting electrical systems, offering functions like auto-ranging, true RMS readings, and data logging. These instruments are widely used across electrical, electronic, and industrial fields to ensure operational integrity of circuits and devices.

- Oscilloscopes: Oscilloscopes display voltage signals over time, making them invaluable in evaluating signal quality, identifying noise, and troubleshooting faults in analog and digital circuits. They range from basic two-channel models to advanced mixed-signal oscilloscopes with integrated logic analyzers.

- Spectrum Analyzers: These tools break down complex signals into their component frequencies, helping engineers study bandwidth usage, identify interference sources, and validate RF communication performance. They are vital in fields like telecommunications, radio astronomy, and EMI/EMC testing.

In addition to handheld instruments, bench-top precision instruments are used in laboratories for high-accuracy tasks, often featuring advanced connectivity options for remote operation and automation. Specialized devices like impedance analyzers, signal generators, and LCR meters further expand the scope of measurement.
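The value of the true RMS function mentioned for multimeters shows up as soon as the waveform is not a sine. The sketch below compares a true RMS calculation with an average-responding estimate, which rectifies and averages the signal and then scales it on the assumption of a sine wave. The function names and the unit-amplitude test waveforms are illustrative only.

```python
import math

def true_rms(samples):
    """Root-mean-square value computed directly from the samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def average_responding(samples):
    """Reading of a meter that rectifies, averages, and scales assuming a sine wave."""
    form_factor = math.pi / (2.0 * math.sqrt(2.0))   # about 1.11 for a pure sine
    return form_factor * sum(abs(x) for x in samples) / len(samples)

n = 1000
sine = [math.sin(2.0 * math.pi * k / n) for k in range(n)]      # one full period
square = [1.0 if k < n // 2 else -1.0 for k in range(n)]        # one full period

for name, wave in (("sine", sine), ("square", square)):
    print(f"{name}: true RMS = {true_rms(wave):.3f}, "
          f"average-responding = {average_responding(wave):.3f}")
```

Both methods agree on the sine wave, but the average-responding estimate reads about 11 % high on the square wave.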

Calibration

Calibration involves comparing the readings of an instrument to a known reference or standard to verify accuracy. It’s an essential quality assurance process in sectors like aerospace, healthcare, and manufacturing. Calibration labs use traceable standards that conform to international measurement systems, ensuring consistency across facilities and jurisdictions.

National metrology institutes such as [NIST (National Institute of Standards and Technology)](https://www.nist.gov/) maintain the reference standards to which calibrations are traced, and those standards are in turn tied to the International System of Units (SI). Regular calibration helps catch and correct measurement drift over time, ensures compliance with regulations, and extends equipment life through proactive maintenance.

In many facilities, automated calibration systems are now employed, using programmable equipment to run tests, log results, and adjust instruments without human intervention. These systems help maintain high throughput in busy metrology labs while reducing operator errors.
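A common way to turn a calibration run into a correction is a straight-line fit between instrument readings and reference values. The sketch below uses an ordinary least-squares fit. The function names are hypothetical, the pressure data are invented, and a real procedure would also report the residuals and the uncertainty of the reference.

```python
def fit_linear_calibration(readings, references):
    """Least-squares gain and offset such that reference ~= gain * reading + offset."""
    n = len(readings)
    mean_x = sum(readings) / n
    mean_y = sum(references) / n
    sxx = sum((x - mean_x) ** 2 for x in readings)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(readings, references))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset

def apply_calibration(reading, gain, offset):
    """Correct a raw reading using the fitted gain and offset."""
    return gain * reading + offset

# Hypothetical calibration run: reference pressures (kPa) and what the sensor reported.
references = [0.0, 25.0, 50.0, 75.0, 100.0]
readings = [1.5, 26.0, 50.5, 75.0, 99.5]

gain, offset = fit_linear_calibration(readings, references)
print(f"gain = {gain:.4f}, offset = {offset:.4f} kPa")
print(f"raw 50.5 kPa corrects to {apply_calibration(50.5, gain, offset):.2f} kPa")
```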

Wireless Instrumentation

- IoT Integration: The emergence of the Internet of Things (IoT) has revolutionized instrumentation by enabling smart sensors that communicate wirelessly. These devices can transmit data via Wi-Fi, Bluetooth, Zigbee, or low-power networks like LoRaWAN, allowing for flexible deployment in complex environments.

- Remote Monitoring: In hazardous or inaccessible locations such as oil rigs, volcanoes, or high-voltage substations, wireless instrumentation allows for safe and efficient monitoring. Systems collect real-time data on environmental, structural, or process variables and send alerts when anomalies are detected.

Wireless systems reduce the need for extensive wiring, lowering installation costs and improving system scalability. The adoption of edge computing has further enhanced the capabilities of remote sensors by enabling localized data processing and decision-making without the need for constant cloud communication.

According to [All About Circuits](https://www.allaboutcircuits.com/technical-articles/wireless-instrumentation-iot-edge-computing-sensor-data/), advancements in battery technology and ultra-low-power microcontrollers are making it possible to operate wireless sensors for years without maintenance, thereby enabling wide-area deployment across industrial and smart city applications.

Data Analysis Tools

- Software such as MATLAB, Python, and R is integral to processing, visualizing, and interpreting data obtained from measurement instruments. MATLAB is widely used in academia and industry for signal processing and system modeling, while Python provides flexible libraries such as NumPy, SciPy, and Pandas for scientific computing.

- Machine learning algorithms are increasingly being employed for predictive maintenance, anomaly detection, and system optimization. By training on historical sensor data, models can anticipate failures, optimize performance, and adapt control parameters in real time.

Modern data acquisition systems often feature built-in analytics engines that can perform real-time statistical processing, frequency domain transformations, and alarm triggering. These tools not only simplify reporting but also empower engineers to make data-driven decisions that enhance efficiency and reliability.
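As one simple instance of the real-time statistical processing and alarm triggering described above, the sketch below flags samples that deviate strongly from a trailing window of recent values. It is a plain rolling z-score check rather than a machine learning model. The function name, window length, threshold, and test data are all assumptions made for the example.

```python
from collections import deque
import statistics

def detect_anomalies(samples, window=20, threshold=4.0):
    """Return indices of samples far from the trailing-window mean, in units of sigma."""
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(samples):
        if len(recent) == window:
            mean = statistics.fmean(recent)
            sigma = statistics.pstdev(recent)
            if sigma > 0 and abs(value - mean) > threshold * sigma:
                flagged.append(i)
                continue          # keep the outlier out of the baseline window
        recent.append(value)
    return flagged

# Hypothetical temperature log with small repeating ripple and one spike at index 40.
samples = [20.0 + 0.1 * ((k * 7) % 5 - 2) for k in range(60)]
samples[40] = 25.0
print("anomalous sample indices:", detect_anomalies(samples))
```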

Challenges in Instrumentation and Measurement

Accuracy and Precision

Instrumentation must achieve a delicate balance between high accuracy and cost-effectiveness. Achieving measurements that closely match true values often involves expensive components, meticulous calibration procedures, and tightly controlled environmental conditions. However, commercial applications, from consumer electronics to industrial automation, frequently require affordable yet reliable instruments. Over time, sensor elements can experience drift due to material degradation or mechanical wear, necessitating periodic recalibration and data correction to maintain accuracy. Addressing these issues requires monitoring long-term drift using reference standards, compensating for temperature-induced errors, and employing statistical methods to distinguish true signals from noise.
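One of the simplest statistical methods for separating a steady signal from random noise is averaging: the mean of n independent noisy samples has roughly 1/sqrt(n) of the original scatter. The sketch below demonstrates this on simulated data. The 2.5 V signal, the 10 mV Gaussian noise, and the block size of 16 are invented for illustration, and averaging does nothing to remove systematic errors.

```python
import random
import statistics

def block_average(samples, n):
    """Average consecutive groups of n samples (simple oversampling and decimation)."""
    return [statistics.fmean(samples[i:i + n])
            for i in range(0, len(samples) - n + 1, n)]

rng = random.Random(42)                       # fixed seed so the run is repeatable
true_value = 2.5                              # hypothetical sensor voltage
raw = [true_value + rng.gauss(0.0, 0.01) for _ in range(16000)]
averaged = block_average(raw, 16)

raw_noise = statistics.stdev(raw)
avg_noise = statistics.stdev(averaged)
print(f"raw noise {raw_noise * 1e3:.2f} mV, averaged {avg_noise * 1e3:.2f} mV, "
      f"ratio {raw_noise / avg_noise:.2f} (theory predicts about 4 for n = 16)")
```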

Noise and Interference

Precise measurements are often compromised by both environmental and electrical noise, which can obscure or distort signals. Instruments that measure low-level signals—such as thermocouples or strain gauges—are particularly vulnerable to electromagnetic interference (EMI) and radio-frequency interference (RFI) from nearby power lines or wireless transmissions. Shielding, twisted-pair cabling, and differential amplification are strategies used to mitigate this interference. Analog-to-digital converters must also be carefully selected, as converter aperture jitter and quantization noise impose limitations. For cutting-edge applications, refer to best practices outlined in IEEE standards on EMI control and signal integrity to ensure measurement fidelity.

Scalability

Designing instrumentation systems that can scale effectively to accommodate large IoT deployments presents significant challenges. As sensor networks expand to include hundreds or thousands of nodes, factors such as data aggregation, synchronization, and bandwidth become critical. Managing large volumes of data requires robust edge computing strategies and time-stamping techniques to preserve temporal precision. Additionally, engineers must track sensor health using over-the-air diagnostics and remote calibration procedures. System architecture should support modular expansion and firmware updates without disrupting ongoing operations. This is vital in applications such as smart agriculture and industrial Internet of Things monitoring.

Harsh Environments

Instrumentation deployed in environments with extreme temperatures, high pressures, or corrosive chemicals must be engineered for durability and resilience. Common industrial settings—such as oil refineries, geothermal sites, and chemical plants—expose sensors to thermal cycles, shock, and humidity. Designers use rugged enclosures, hermetic sealing, and robust materials like stainless steel, ceramics, or PTFE to protect sensitive electronics. Calibration in these environments is especially challenging because standard references may not be available on site, often requiring mobile labs or in-line calibration systems.

Integration with Emerging Technologies

To remain relevant, measurement systems must integrate seamlessly with modern tools like AI, big data analytics, and cloud platforms. This requires instruments to output data in standardized formats, support real-time streaming, and communicate securely via protocols such as MQTT or OPC UA. Embedding preliminary analytics at the edge enables early anomaly detection, reducing latency for critical decisions. Many systems now employ machine-learning-assisted calibration to adjust measurement parameters based on usage patterns. Online resources such as InstrumentationTools.com provide tutorials on merging instrumentation with AI-driven workflows.

Future Trends in Instrumentation and Measurement

- Smart Sensors

Future instrumentation will increasingly rely on smart sensors: devices that integrate sensing, processing, and communication in a single compact unit. These sensors can perform local data analysis, filtering out unimportant information and sending only essential insights upstream. For example, vibration sensors in industrial equipment may detect anomalies and trigger alerts without requiring operator intervention. In addition, smart sensors are becoming self-calibrating, using embedded algorithms and reference standards to adjust readings automatically over time. This capability reduces maintenance effort and downtime, making smart sensors well suited to remote installations and mission-critical systems.
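
The vibration example can be sketched as a small on-sensor routine that keeps a sliding window of samples and reports upstream only when the window RMS exceeds a multiple of a known baseline. The class name, window size, baseline, and alarm factor are all hypothetical choices for illustration.

```python
import math
from collections import deque


class VibrationMonitor:
    """Keeps a short window of samples and flags windows whose RMS is too high."""

    def __init__(self, window_size, baseline_rms, alarm_factor=2.0):
        self.window = deque(maxlen=window_size)
        self.threshold = baseline_rms * alarm_factor

    def add_sample(self, value):
        """Store one sample; return an alert dict if the window RMS is above threshold."""
        self.window.append(value)
        if len(self.window) < self.window.maxlen:
            return None  # not enough data yet
        rms = math.sqrt(sum(v * v for v in self.window) / len(self.window))
        if rms > self.threshold:
            return {"event": "vibration_alarm", "rms": round(rms, 3)}
        return None


monitor = VibrationMonitor(window_size=4, baseline_rms=0.5)
samples = [0.4, -0.5, 0.5, -0.4, 1.5, -1.6, 1.4, -1.5]
alerts = [a for a in (monitor.add_sample(s) for s in samples) if a]
print(alerts)
```

Only the alert records would be transmitted, which is the bandwidth saving the paragraph describes; the raw samples stay on the device.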

- Miniaturization

The trend toward miniaturization is revolutionizing instrumentation and expanding measurement possibilities into previously inaccessible domains. Compact and lightweight instruments—such as micro-electro-mechanical systems (MEMS) accelerometers and microfluidic pH sensors—are enabling wearable health monitors, portable diagnostics, and drones equipped with environmental sensors. Miniaturized designs reduce power consumption, increase mobility, and allow integration into everyday objects. As devices shrink, engineers must balance sensitivity, precision, thermal management, and durability within constrained form factors.

- Energy Harvesting

Energy harvesting solutions are critical in powering future instrumentation, particularly in remote or battery-less deployments. Advanced sensors can now be driven entirely by ambient energy sources such as mechanical vibrations, temperature differentials, radio-frequency waves, or solar exposure. These improvements enable maintenance-free operation for years, ideal for structural health monitoring of bridges or pipeline networks. To optimize performance, harvesting systems often integrate power conditioning units and ultra-low-power microcontrollers, ensuring reliable data acquisition under variable energy availability.

- Artificial Intelligence

Artificial intelligence is poised to transform measurement systems through intelligent data interpretation, predictive analytics, and adaptive calibration. AI-driven models can detect patterns in sensor data streams, flag potential failures before they occur, and suggest corrective actions. For instance, machine learning algorithms can distinguish between normal vibration signatures and those indicating bearing degradation in rotating machinery. These AI-enhanced systems can learn over time, improving their diagnostic accuracy and reducing false alarms—making them invaluable in industries such as manufacturing and aerospace.

- Quantum Measurement

Quantum measurement techniques are redefining the limits of precision. Leveraging principles such as quantum entanglement, superposition, and squeezed states, quantum-enhanced sensors can measure time, magnetic fields, and temperature with unprecedented sensitivity. Applications include atomic clocks for GPS systems, quantum magnetometers for medical diagnostics, and ultra-precise gravimeters for geological surveying. As quantum sensor technology matures, it will be integrated into broader instrumentation networks to enhance accuracy and resolution beyond classical limits.

- Integration with IoT

The integration of instrumentation systems with the Internet of Things (IoT) is transforming how measurement data is collected, analyzed, and acted upon. Connected sensors deliver real-time data to centralized dashboards, enabling remote monitoring and decision-making on the fly. This integration supports applications like smart farming, where readings from soil moisture and nutrient sensors are used to adjust irrigation or schedule drone deployments. High-level analytics platforms can correlate data across thousands of sensor nodes to identify patterns, optimize processes, and improve overall system intelligence.

Case Studies in Instrumentation and Measurement

Industrial Automation

General Electric’s Predix platform exemplifies the integration of advanced IoT-enabled instrumentation in the industrial sector, enabling real-time monitoring, predictive maintenance, and performance optimization across large-scale facilities. Through a network of connected sensors, Predix collects data on temperature, pressure, vibration, and equipment usage, transmitting it to centralized analytics engines that detect inefficiencies or early signs of failure. These insights help industries reduce unplanned downtime, extend the lifespan of critical machinery, and enhance energy management. In sectors such as power generation and aviation, this capability enables better asset utilization and ensures consistent operational standards, minimizing human intervention while boosting productivity. The platform’s cloud-based architecture allows secure access from multiple locations, enabling global coordination of operations. Its scalable design is suitable for small manufacturers and multinational conglomerates alike, adapting dynamically to industry-specific instrumentation requirements.

Medical Diagnostics

The development of continuous glucose monitoring (CGM) systems such as Dexcom represents a transformative leap in diabetes care, combining wearable biosensors, real-time data transmission, and cloud-based analytics. Unlike traditional finger-prick methods, CGMs use minimally invasive sensors placed under the skin to monitor glucose levels every few minutes. These readings are transmitted wirelessly to smartphones or insulin pumps, enabling dynamic insulin adjustment and reducing the risk of hypo- or hyperglycemia. Moreover, many systems now employ predictive algorithms that alert users before dangerous trends occur, allowing preemptive intervention. Beyond patient benefits, healthcare providers gain long-term insights from data logs, facilitating personalized treatment plans and enhancing patient-doctor collaboration. In addition, CGMs have become integral to closed-loop insulin delivery systems, often called “artificial pancreas” devices, that automatically regulate insulin based on sensor data, offering improved glycemic control and quality of life.

Environmental Monitoring

NASA’s Earth Observing System (EOS) serves as a pioneering framework for global-scale environmental instrumentation and satellite-based data acquisition. Utilizing an array of sophisticated instruments aboard satellites like Terra, Aqua, and Aura, EOS measures key environmental parameters including atmospheric temperature, ocean salinity, vegetation density, and polar ice coverage. These data streams feed into predictive climate models and contribute to our understanding of long-term environmental trends and human impact. The system’s use of spectroradiometers, radar altimeters, and thermal infrared sensors allows for precise measurements of subtle environmental changes over time. For example, the Moderate Resolution Imaging Spectroradiometer (MODIS) aboard Terra and Aqua captures high-resolution data across 36 spectral bands, offering insights into deforestation, water vapor concentration, and urban heat islands. The EOS database is accessible to scientists and policymakers worldwide, providing essential data for climate mitigation strategies, biodiversity preservation, and disaster response planning.

Why Study Instrumentation and Measurement

Accurate Data Acquisition for Engineering Systems

Instrumentation and measurement are foundational to monitoring and controlling engineering systems. Students learn how to select sensors, configure instruments, and calibrate measurement devices. This ensures reliable data collection in laboratories and industrial environments.

Principles of Sensor Technology

Students study the principles behind various sensors such as strain gauges, thermocouples, and photodetectors. They learn how sensors convert physical phenomena into electrical signals. This knowledge is critical for integrating sensors into automated systems.

Signal Conditioning and Processing

Measurement systems require filtering, amplification, and analog-to-digital conversion. Students explore these signal conditioning techniques to ensure accurate data. These skills are essential for high-performance instrumentation.

Applications in Industry and Research

Instrumentation is used in fields such as robotics, aerospace, medical diagnostics, and environmental monitoring. Students gain hands-on experience with real-world measurement systems. This prepares them for technical roles across industries.

Standards, Accuracy, and Calibration

Students learn about measurement accuracy, precision, and uncertainty. They study calibration procedures, international standards such as those published by ISO, and traceability to national metrology institutes such as NIST. This ensures instrumentation systems meet regulatory and quality requirements.

Instrumentation and Measurement: Conclusion

Instrumentation and measurement form the critical infrastructure behind virtually every scientific and technological breakthrough in the modern world. They provide the means to observe, quantify, and manipulate physical phenomena with precision, enabling informed decision-making and technological innovation. In the medical field, for instance, accurate instrumentation is key to early diagnosis, real-time patient monitoring, and effective treatment planning. Devices like ECG monitors, blood analyzers, and MRI scanners depend on sophisticated measurement systems that convert physiological signals into interpretable data.

In manufacturing, the importance of measurement and control systems cannot be overstated. From ensuring the dimensional accuracy of components to maintaining optimal conditions in process industries, instrumentation enhances quality, efficiency, and safety. Robotics, CNC machines, and automated assembly lines rely heavily on sensors, actuators, and feedback loops. Similarly, in aerospace and defense, precise measurement systems underpin everything from flight control and navigation to propulsion monitoring and mission-critical telemetry.

Environmental sustainability also owes much to advancements in instrumentation. Modern climate science is rooted in data acquired through networks of sensors measuring air and water quality, greenhouse gas levels, ocean currents, and solar radiation. The application of advanced sensing technologies enables governments and researchers to monitor environmental change, enforce regulations, and respond to natural disasters with real-time insight. These tools are essential for protecting ecosystems and ensuring the long-term health of the planet.

As we move deeper into the era of smart systems, the convergence of instrumentation with Artificial Intelligence (AI), Internet of Things (IoT), and cyber-physical systems is revolutionizing how measurements are captured, interpreted, and applied. Smart sensors that self-calibrate and process data locally (edge computing) are increasingly common, reducing latency and enhancing real-time control. Furthermore, AI-powered analytics enable predictive maintenance and anomaly detection, transforming passive monitoring systems into proactive intelligence hubs.

The future of instrumentation and measurement lies in further miniaturization, integration, and adaptability. Innovations in nanomaterials and MEMS (Micro-Electro-Mechanical Systems) are enabling the development of ultra-sensitive, low-power sensors for wearable health monitors, implantable devices, and environmental microdetectors. With quantum sensing on the horizon, we may soon achieve measurement accuracies previously thought unattainable, opening new frontiers in particle physics, metrology, and navigation systems independent of GPS.

Ultimately, instrumentation and measurement are not just technical fields—they are enablers of progress across disciplines. By providing the tools to observe and understand complex systems, they empower innovation in everything from biomedical engineering to smart infrastructure. As these tools continue to evolve in tandem with emerging technologies, their role in shaping a safer, smarter, and more sustainable world will only grow more central.

Instrumentation and Measurement – Frequently Asked Questions (FAQ)

1. Why do engineers care about both accuracy and precision in measurements?

Accuracy tells you how close your measured value is to the true value, while precision tells you how consistent your readings are when you repeat the same measurement. A system that is accurate only on average, with widely scattered readings, is hard to rely on for any single measurement, and one that is only precise may repeatedly give the same wrong answer. Reliable instrumentation aims for both: measurements that are tightly clustered and centred on the true value.
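
One simple way to put numbers on the two ideas is to compare repeated readings of a known reference: the mean error indicates accuracy (bias) and the sample standard deviation indicates precision (spread). The sketch below uses made-up readings from two hypothetical scales.

```python
import statistics


def accuracy_and_precision(readings, true_value):
    """Return (bias, spread): mean error vs. the reference, and sample standard deviation."""
    bias = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return bias, spread


true_mass = 100.00  # grams, hypothetical reference weight
scale_a = [100.9, 101.0, 101.1, 101.0]   # tightly clustered but offset
scale_b = [99.0, 101.2, 100.3, 99.5]     # centred but scattered

for name, data in (("A", scale_a), ("B", scale_b)):
    bias, spread = accuracy_and_precision(data, true_mass)
    print(f"scale {name}: bias = {bias:+.2f} g, spread = {spread:.2f} g")
```

Scale A is precise but not accurate; scale B is accurate on average but not precise.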

2. What exactly does a transducer do in a measurement system?

Most physical quantities cannot be fed directly into a digital instrument. A transducer serves as the bridge, turning temperature, pressure, light, force, or motion into an electrical signal that can be amplified, filtered, digitised, and analysed. Choosing the right transducer is the first step in building a meaningful measurement chain.

3. How can I tell whether my measurement errors are systematic or random?

If your readings are consistently too high or too low across many trials, you probably have systematic error, often linked to calibration issues or a biased method. If your measurements scatter around a mean value in an unpredictable way, random error is dominant. Calibrating against standards reduces systematic error, whereas averaging and careful experimental design help reduce random error.
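
The effect of averaging can be stated compactly. Assume the random errors in N repeated readings are independent with standard deviation σ, and write any constant systematic offset as b (both symbols are introduced here only for illustration). The mean reading then behaves as:

```latex
\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad
\mathrm{E}[\bar{x}] = x_{\text{true}} + b, \qquad
\sigma_{\bar{x}} = \frac{\sigma}{\sqrt{N}}
```

Averaging 100 readings therefore cuts the random scatter by a factor of 10 but leaves b untouched, which is why averaging cannot replace calibration.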

4. What happens during calibration, and how often should it be done?

Calibration compares the instrument’s output with the response of a trusted reference under controlled conditions. Any consistent offsets or gain errors are noted and corrected, either in the hardware or in software. The frequency of calibration depends on how critical the measurements are, how harsh the operating environment is, and how quickly components are likely to drift.
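
For an instrument whose error is well described by a gain and an offset, a two-point check against references is enough to compute a software correction. This is a minimal sketch under that linearity assumption; the function name and the thermometer readings are hypothetical.

```python
def two_point_calibration(raw_low, raw_high, ref_low, ref_high):
    """Return (gain, offset) such that corrected = gain * raw + offset."""
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset


# Hypothetical thermometer checked in an ice bath (0 °C) and in boiling
# water (nominally 100 °C at standard pressure).
gain, offset = two_point_calibration(raw_low=1.5, raw_high=99.5,
                                     ref_low=0.0, ref_high=100.0)

raw_reading = 50.5
corrected = gain * raw_reading + offset
print(f"gain = {gain:.4f}, offset = {offset:.3f}, corrected = {corrected:.2f} °C")
```

Instruments with noticeable nonlinearity need more calibration points and a curve fit or lookup table instead of a single straight line.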

5. Why do measurement systems need signal conditioning?

Raw sensor signals are rarely ready to be digitised directly. They may be too small, buried in noise, or centred at the wrong voltage. Signal conditioning amplifies or attenuates the signal, filters out unwanted frequencies, isolates circuits for safety, and matches impedance so that the data acquisition hardware sees a clean, usable input. Good conditioning is often the difference between noisy, unreliable readings and high-quality measurements.

6. How do ADCs and DACs connect the physical world to digital electronics?

An ADC samples an analog signal at regular intervals and converts each sample into a numeric value that a microcontroller or computer can process. A DAC takes numeric values and reconstructs an analog signal, for example to drive a speaker or control a valve. Together, they let digital systems sense and act on real-world quantities with fine control and flexibility.
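
The conversion in each direction can be illustrated with an idealised model. The sketch assumes a unipolar converter with a 3.3 V reference and 10-bit resolution; both values, and the function names, are chosen only for the example.

```python
def adc_convert(voltage, v_ref, bits):
    """Ideal unipolar ADC: map 0..v_ref to an integer code, clamping out-of-range inputs."""
    max_code = (1 << bits) - 1
    code = int(voltage / v_ref * (1 << bits))
    return max(0, min(max_code, code))


def dac_convert(code, v_ref, bits):
    """Ideal DAC: map an integer code back to a voltage."""
    return code * v_ref / (1 << bits)


V_REF = 3.3   # volts (assumed)
BITS = 10

code = adc_convert(1.65, V_REF, BITS)
print(code, dac_convert(code, V_REF, BITS))
```

Real converters add offset, gain, and nonlinearity errors on top of this ideal staircase, which is part of what calibration corrects.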

7. What is Nyquist frequency, and why can undersampling be dangerous?

The Nyquist frequency is half of the sampling rate. To capture a signal without aliasing, the sampling rate must be more than twice the highest frequency present, so that every component lies below the Nyquist frequency. If you sample too slowly, high-frequency content is misinterpreted as lower frequencies (aliasing), which can completely mislead your analysis or control decisions. Anti-aliasing filters and appropriate sampling rates are therefore essential.
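
For a single sine tone, the frequency that appears after sampling can be predicted by folding the tone around the nearest multiple of the sampling rate. The helper below is an illustrative sketch with an assumed 10 kHz sampling rate.

```python
def alias_frequency(signal_hz, sample_rate_hz):
    """Apparent frequency of a sine tone after sampling at sample_rate_hz."""
    nearest_multiple = round(signal_hz / sample_rate_hz) * sample_rate_hz
    return abs(signal_hz - nearest_multiple)


fs = 10_000  # samples per second (assumed)
for tone in (3_000, 7_000, 12_000):
    print(f"{tone} Hz tone appears at {alias_frequency(tone, fs)} Hz")
```

The 3 kHz tone is below the 5 kHz Nyquist frequency and is preserved, while the 7 kHz and 12 kHz tones show up at 3 kHz and 2 kHz and cannot be told apart from genuine low-frequency content once sampled.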

8. How do engineers choose the “right” sensor for a job?

They start by matching the sensor’s measurement range and accuracy to the application’s needs, then consider response time, environmental limits, size, cost, and how easily the sensor integrates with the electronics. A good choice will operate reliably where it is installed and provide signals that can be conditioned and digitised without difficulty.

9. In what ways does temperature affect instruments and sensors?

Temperature shifts can change resistance values, alter semiconductor behaviour, and cause mechanical parts to expand or contract. These effects show up as drift in readings or changes in sensitivity. To combat this, designers use components with low temperature coefficients, add compensation circuits or algorithms, and, when necessary, stabilise the physical environment around the measurement system.
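
A compensation algorithm can be as simple as dividing out a known sensitivity change. The sketch assumes the sensor's sensitivity varies linearly with temperature about a 25 °C reference; the coefficient and readings are hypothetical and would normally come from characterising the actual device.

```python
def compensate_temperature(raw_value, temp_c, ref_temp_c=25.0, temp_coeff=0.0):
    """Undo a linear sensitivity change of temp_coeff (fraction per °C) about ref_temp_c."""
    return raw_value / (1.0 + temp_coeff * (temp_c - ref_temp_c))


# Hypothetical pressure sensor whose sensitivity rises 0.02 % per °C.
TEMP_COEFF = 0.0002

raw = 101.0          # kPa as reported at 75 °C
corrected = compensate_temperature(raw, temp_c=75.0, temp_coeff=TEMP_COEFF)
print(f"corrected pressure: {corrected:.2f} kPa")
```

This only works if the temperature is measured close to the sensing element at the same time as the reading.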

10. How are wireless and IoT technologies reshaping measurement and instrumentation?

Instead of relying on long cables and centralised hardware, modern systems can deploy many wireless sensors that report data to gateways or cloud platforms. This makes it easier to monitor remote, moving, or hard-to-access assets. At the same time, engineers must manage power budgets, choose appropriate wireless protocols, and design robust security so that the data flowing through these networks remains trustworthy.

Instrumentation and Measurement: Review Questions with Answers

Instrumentation and measurement provide the quantitative backbone for science, engineering, and modern industry. These review questions explore how sensors, transducers, signal conditioning, and data acquisition work together to turn real-world phenomena into reliable numbers that decision-makers and control systems can trust.

- Why are accuracy and precision both important in measurement, and how do they differ?

Answer: Accuracy describes how close a measurement is to the true or accepted value, while precision describes how tightly clustered repeated measurements are under the same conditions. An instrument can be precise but not accurate if all readings are consistently offset from the true value, and it can be accurate on average but imprecise if readings scatter widely. In practice, engineers aim for high accuracy and high precision so that results are both correct and repeatable, which is crucial in areas such as healthcare diagnostics, quality control, and scientific experimentation.

- What role do transducers play in instrumentation systems, and why are they essential?

Answer: Transducers convert physical quantities, such as force, pressure, temperature, or light intensity, into electrical signals that measurement circuits can process. For example, a strain gauge converts mechanical deformation into a change in resistance, a thermocouple converts temperature differences into a voltage, and a photodiode converts light into current. Without transducers, electronic instruments would have no way to sense the physical environment. Selecting and configuring the right transducer ensures that the system captures the key variable of interest with sufficient sensitivity, stability, and robustness.

- How do systematic and random errors differ, and what strategies can reduce their impact?

Answer: Systematic errors are consistent biases that push all measurements in the same direction, often caused by miscalibration, incorrect reference values, or flawed experimental setups. They can usually be identified by comparison with standards and corrected through calibration or improved procedures. Random errors arise from unpredictable influences like electrical noise, small environmental fluctuations, or operator variability, causing measurements to fluctuate around a mean value. Engineers reduce random errors by averaging repeated measurements, improving shielding and grounding, controlling environmental conditions, and using better instruments. Distinguishing between these error types is essential for interpreting data correctly.

- Why is regular calibration necessary for reliable instruments, and what does a typical calibration process involve?

Answer: Over time, components drift, sensors age, and mechanical parts wear, causing instruments to deviate from their original behaviour. Calibration restores confidence in the readings by checking the instrument against known reference standards. A typical calibration sequence exposes the instrument to a series of well-defined input values, records the outputs, and compares them with expected results. Any systematic differences are documented and corrected by adjusting internal settings or applying software correction factors. Establishing a suitable calibration interval and keeping traceable records are vital in regulated industries where measurement accuracy underpins safety, compliance, and product quality.

- What is signal conditioning, and how does it improve the quality of measurement data?

Answer: Signal conditioning encompasses all the processing applied to a raw sensor signal before it is digitised or used by other circuitry. Common functions include amplification of weak signals, attenuation of signals that are too large, filtering to remove unwanted noise or interference, level shifting to match the input range of an ADC, and electrical isolation for safety or noise reduction. Without proper conditioning, important details can be lost in noise, or the signal might saturate the acquisition system. By shaping the signal into a clean, well-scaled form, signal conditioning lays the foundation for accurate and stable measurements.

- How do ADCs and DACs bridge the gap between analog signals and digital processing?

Answer: An analog-to-digital converter (ADC) samples a continuous-time, continuous-amplitude signal and represents each sample as a digital value with finite resolution. This allows microcontrollers, digital signal processors, and computers to store, analyse, and manipulate real-world data such as temperature trends or vibration spectra. A digital-to-analog converter (DAC) performs the opposite function, turning digital numbers into analog voltages or currents that can drive actuators, generate test signals, or reconstruct audio. Together, ADCs and DACs enable sophisticated control, monitoring, and communication systems that rely on digital computation but must interact with the analog world.

- What is the relationship between sampling rate, Nyquist frequency, and aliasing in data acquisition?

Answer: The sampling rate is how often an analog signal is measured per second, and the Nyquist frequency is half of that rate. According to the Nyquist–Shannon sampling theorem, to represent a signal faithfully without aliasing, the sampling rate must be more than twice the highest frequency component present in the signal. If the sampling rate is too low, higher-frequency components masquerade as lower frequencies in the sampled data, creating aliasing that distorts analysis or control decisions. To prevent this, engineers select an appropriate sampling rate and use analog anti-aliasing filters to remove components above the Nyquist frequency before sampling.

- Which criteria guide the selection of a sensor for a specific measurement task?

Answer: Sensor selection begins with matching the measurable range and required accuracy to the application’s needs. Engineers then consider sensitivity, resolution, response time, and long-term stability. Environmental factors such as temperature, humidity, vibration, and chemical exposure must also be taken into account, as well as the sensor’s physical size, mounting options, and ease of integration with electronics and software. A carefully chosen sensor will perform reliably under real operating conditions and deliver signals that can be conditioned and digitised without excessive complexity.

- How do temperature variations influence the behaviour of measurement equipment, and how can these effects be mitigated?

Answer: Temperature changes can alter resistor values, shift semiconductor characteristics, and expand or contract mechanical elements, all of which can introduce drift, offset, or gain changes in instruments and sensors. To mitigate these effects, designers may select components with low temperature coefficients, include temperature compensation networks, or apply software correction based on simultaneous temperature measurements. In critical applications, instruments may be housed in temperature-controlled enclosures or calibrated across the expected temperature range to maintain accuracy over varying environmental conditions.

- How are wireless technologies and the Internet of Things (IoT) transforming instrumentation and measurement?

Answer: Wireless and IoT technologies enable large populations of sensors to be deployed over wide areas, sending data to local gateways or cloud platforms without extensive cabling. This opens up new possibilities for remote monitoring, predictive maintenance, environmental sensing, and smart infrastructure. At the same time, designers must address challenges such as limited battery capacity, radio interference, secure data transmission, and network management. When these issues are handled well, wireless instrumentation and IoT architectures provide rich, real-time data streams that can significantly improve decision-making and system efficiency.

Instrumentation and Measurement: Thought-Provoking Questions

1. How do measurement resolution and sensor dynamic range interact to influence the overall quality of data collection?

Answer:

Resolution determines the smallest change in input a sensor can detect, whereas dynamic range defines the limits—minimum to maximum—over which it can measure accurately. A high resolution within a narrow range might deliver very detailed readings but risk clipping or saturating if inputs exceed that range. Conversely, a broad dynamic range can capture larger variations but may reduce granularity in finer measurements. Designers balance these factors based on their application’s needs: for example, a biomedical sensor might favor high resolution over a narrower range, while an industrial sensor for pressure could require a broad range to handle extreme fluctuations.
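
The trade-off becomes concrete once the digitiser is included. For an ideal N-bit converter the smallest resolvable step is the input span divided by 2^N, so the same converter gives finer steps over a narrow span and coarser steps over a wide one. The spans and bit depth below are assumed values for illustration.

```python
import math


def lsb_size(full_scale_span, bits):
    """Smallest input step an ideal N-bit converter can resolve over the given span."""
    return full_scale_span / (2 ** bits)


def dynamic_range_db(bits):
    """Ratio of full scale to one step, in decibels."""
    return 20.0 * math.log10(2 ** bits)


BITS = 12
for span_v in (0.1, 10.0):   # narrow vs wide input range (assumed values)
    step_uv = lsb_size(span_v, BITS) * 1e6
    print(f"span {span_v:>5} V -> step {step_uv:.1f} uV")
print(f"dynamic range: {dynamic_range_db(BITS):.1f} dB")
```

The ratio of span to step stays fixed at about 72 dB for 12 bits; the designer chooses where on the input axis to spend it.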

2. In what ways can advanced signal processing techniques enhance instrumentation reliability, and when could simpler approaches suffice?

Answer:

Signal processing methods—like filtering, averaging, and spectral analysis—clean up noisy or low-level signals, uncover hidden patterns, and improve repeatability. By applying algorithms such as moving averages or Kalman filters, engineers reduce random fluctuations or systematic interference. More sophisticated transforms (like wavelet analysis) might reveal momentary spikes that simpler methods miss. However, these techniques add computational overhead and complexity. For straightforward applications (e.g., a stable temperature measurement in controlled conditions), a simple low-pass filter may suffice. The ultimate choice hinges on performance demands, resource limits, and whether the added complexity delivers tangible gains.
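
A moving average is about the simplest of the filters mentioned above. The sketch below (function name and data are illustrative) smooths a noisy temperature trace that contains one brief spike.

```python
from collections import deque


def moving_average(samples, window):
    """Return the mean of each full window of the most recent `window` samples."""
    buf = deque(maxlen=window)
    out = []
    for x in samples:
        buf.append(x)
        if len(buf) == window:
            out.append(sum(buf) / window)
    return out


noisy = [20.1, 19.9, 20.2, 19.8, 20.0, 25.0, 20.1, 19.9]
smoothed = moving_average(noisy, window=4)
print([round(v, 2) for v in smoothed])
```

The small fluctuations are averaged away, but the 25.0 spike is also flattened to roughly 21.25 and smeared across several outputs. That is the limitation noted above: simple smoothing can hide the very transients that a more sophisticated method would reveal.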

3. Why must engineers carefully balance sampling rate, storage capacity, and data integrity in long-term measurements?

Answer:

Capturing high-speed events often demands fast sampling, but this generates substantial data, increasing storage requirements and processing demands. Overly frequent sampling can also collect redundant information. Conversely, undersampling risks losing critical transient phenomena that might inform failure analysis or system health. Finding a proper balance means selecting a rate that captures essential signal dynamics without burdening memory or missing subtle but significant anomalies. Techniques like event-triggered logging, data compression, or dynamic sampling (adapting rate based on observed signal changes) help manage this trade-off effectively.
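
Event-triggered logging can be sketched with a deadband rule: a sample is stored only when it differs from the last stored value by more than a threshold. The function name, threshold, and readings are hypothetical.

```python
def deadband_log(samples, threshold):
    """Keep an (index, value) pair only when the value moved by more than
    `threshold` since the last stored point. The first sample is always stored."""
    logged = []
    last = None
    for i, value in enumerate(samples):
        if last is None or abs(value - last) > threshold:
            logged.append((i, value))
            last = value
    return logged


readings = [20.0, 20.1, 20.0, 20.1, 22.5, 22.6, 22.5, 20.0]
kept = deadband_log(readings, threshold=0.5)
print(kept)
```

Eight samples reduce to three stored points while both step changes are preserved. The sensor must still sample fast enough to see the events; only the storage is reduced.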

4. When measuring phenomena in harsh or remote environments, how do engineers juggle sensor durability, cost, and measurement fidelity?

Answer:

Rugged sensors are designed to withstand extreme temperatures, pressures, or corrosive media, but these specialized materials and housings can raise costs. The quest for durability may also constrain sensor sensitivity or introduce additional signal conditioning. For instance, a robust enclosure can shield the sensor from physical damage but create thermal or pressure lags. Thus, designers must weigh environmental demands (like shock or chemical exposure) against the required precision and budget. They often employ redundancy—multiple sensors or protective layers—to ensure continued operation if one sensor fails, balancing cost with reliability over the system’s lifetime.
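
One common way to use redundant sensors is median voting: with three sensors on the same quantity, the median ignores a single failed unit, and any sensor far from the median can be flagged for maintenance. This is a minimal sketch with made-up values and a hypothetical deviation limit.

```python
import statistics


def voted_reading(sensor_values, max_deviation):
    """Return (median, suspects): the median of the redundant sensors and the
    indices of sensors that disagree with it by more than max_deviation."""
    median = statistics.median(sensor_values)
    suspects = [i for i, v in enumerate(sensor_values)
                if abs(v - median) > max_deviation]
    return median, suspects


# Three hypothetical pressure transmitters on the same line; the third has failed high.
value, suspects = voted_reading([10.1, 10.2, 14.9], max_deviation=0.5)
print(value, suspects)
```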

5. Why is calibration not a one-time event, and how do standards and traceability maintain measurement confidence over time?

Answer:

Components age, environmental conditions change, and routine wear can shift an instrument’s baseline. Regular calibration against known standards realigns the instrument with traceable benchmarks, correcting systematic drifts before they compromise data. Traceability means each calibration links back to recognized national or international standards, ensuring universal consistency. This process reinforces trust in measurements, particularly in regulated industries like healthcare, aviation, or pharmaceuticals, where errors can have serious consequences. A calibration schedule, coupled with thorough documentation, sustains accurate and verifiable results.

6. How can repeatability be ensured across varied operating conditions and the natural aging of measurement equipment?

Answer:

Repeatability implies that identical conditions produce the same measurement result over time. Changes in temperature, humidity, or vibration can undermine that consistency, as can component drift from aging. Engineers mitigate these issues with robust enclosures, temperature compensation circuits, or environmental control in labs. Selecting higher-grade components reduces long-term drift. Additionally, frequent recalibration or periodic performance tests catch and correct deviations before they accumulate. By controlling both external influences and hardware stability, engineers maintain consistent performance despite changing environments and equipment wear.

7. In what ways does real-time analysis of measurement data empower predictive maintenance and proactive decision-making?

Answer:

Real-time data streaming allows continuous monitoring of equipment health, detecting anomalies or trends before they escalate into failures. Algorithms can recognize patterns—like slight increases in vibration or temperature—that signal early-stage malfunctions. This predictive capability lets operators schedule maintenance at optimal times, minimizing downtime and avoiding catastrophic breakdowns. By integrating sensors, data analytics, and alert systems, organizations transition from reactive to proactive maintenance strategies, lowering operational costs and improving overall reliability in industries from manufacturing to energy production.

8. How do multi-parameter measurement systems address cross-sensitivity issues to ensure each sensor’s accuracy?

Answer:

When a system measures multiple parameters (e.g., temperature, humidity, pressure), a sensor may respond to quantities other than the one it is meant to measure. A high-humidity environment might alter how a temperature sensor behaves, or dust could degrade optical sensors. Engineers design sensor arrays with careful spacing, shielding, and calibration that accounts for interdependent effects. By applying compensation algorithms, such as using temperature data to correct humidity readings, they keep each parameter close to the accuracy it would have in isolation. This compensation is essential in climate monitoring stations, environmental chambers, and integrated industrial processes where multiple variables must be tracked simultaneously.
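
The temperature-to-humidity correction mentioned above might look like the following sketch. It assumes the humidity sensor's temperature-induced error is linear around a reference temperature. The coefficient of 0.15 %RH per degree Celsius and the 25 degC reference are hypothetical and would normally come from characterising the specific sensor.

```python
def compensate_humidity(rh_raw, temp_c, temp_ref_c=25.0, coeff_per_c=0.15):
    """Remove a linear temperature-induced error from a humidity reading.

    rh_raw      : raw relative humidity in %RH
    temp_c      : temperature measured by the companion sensor, in degC
    temp_ref_c  : temperature at which the humidity sensor was calibrated
                  (assumed value)
    coeff_per_c : cross-sensitivity in %RH per degC (assumed value)
    """
    corrected = rh_raw - coeff_per_c * (temp_c - temp_ref_c)
    # Relative humidity is physically bounded to 0..100 %RH.
    return min(100.0, max(0.0, corrected))


# Hypothetical reading: 52.25 %RH raw at 40 degC.
rh = compensate_humidity(52.25, temp_c=40.0)
print(f"compensated humidity: {rh:.2f} %RH")
```

When several sensors influence one another, the same idea generalises to a matrix of cross-sensitivity coefficients determined during calibration.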

9. Why might an engineer choose to emphasize high sensitivity over a broad measurement range, and what are the trade-offs?

Answer:

High sensitivity allows detecting minute changes or very small signals, which is crucial in fields like biomedical sensing (measuring subtle physiological changes) or environmental monitoring (detecting trace contaminants). However, it generally narrows the sensor’s operational range and may increase susceptibility to noise. Conversely, a broad range accommodates extremes but can dilute resolution, making fine distinctions harder to detect. Designers weigh two risks against the nature of the target measurement: missing small signals when the range is too broad, and saturating when inputs exceed a narrow range. The decision depends on the end goal: capturing subtle phenomena or handling diverse, large-scale readings.
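
The trade-off can be made concrete with an idealised analog-to-digital converter, where the smallest resolvable step is the input span divided by the number of codes. The sketch below assumes an ideal 12-bit converter with no noise. The two input ranges are examples, not values from any particular device.

```python
def lsb_size(span, bits):
    """Smallest resolvable step of an ideal converter: span / 2**bits."""
    return span / (2 ** bits)


def quantize(value, low, high, bits):
    """Ideal ADC: returns (output code, saturated flag)."""
    levels = 2 ** bits
    saturated = value < low or value > high
    clipped = min(max(value, low), high)
    code = min(levels - 1, int((clipped - low) / (high - low) * levels))
    return code, saturated


BITS = 12
wide_step = lsb_size(20.0, BITS)    # +/-10 V range: about 4.88 mV per code
narrow_step = lsb_size(0.2, BITS)   # +/-0.1 V range: about 48.8 uV per code

# A 0.5 V input fits the wide range but saturates the narrow one.
wide_code, wide_sat = quantize(0.5, -10.0, 10.0, BITS)
narrow_code, narrow_sat = quantize(0.5, -0.1, 0.1, BITS)
print(wide_step, narrow_step, wide_sat, narrow_sat)
```

With the same number of bits, the narrow range resolves steps 100 times smaller but loses any input beyond 0.1 V, which is the compromise the answer describes.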

10. What design considerations ensure user trust and clarity in measurement results, especially when the process itself is invisible or abstract?

Answer:

Measurement systems may work behind the scenes or sense phenomena not directly observable (like radiation levels or chemical traces). Clear user interfaces, transparent calibration certificates, and documented error margins instill confidence. Visual and audible cues, such as bar graphs, traffic-light indicators, or alarm tones, make intangible data more intuitive. Regular self-checks or diagnostic modes reassure users that the system remains healthy. By blending accurate readings with clear, well-explained output, designers close the gap between complex measurement processes and user comprehension, fostering trust in devices from medical monitors to industrial sensors.

11. How do emerging sensor technologies, such as MEMS and optical sensors, expand measurement possibilities, and what complexities accompany them?

Answer:

Micro-Electro-Mechanical Systems (MEMS) offer ultra-compact sensors with low power usage and rapid response, enabling devices like smartphones to measure acceleration or rotation. Optical sensors leverage light interactions for contactless measurements (e.g., detecting gases or measuring blood oxygen saturation). These capabilities open new applications: smarter wearables, advanced robotics, and precise environmental probes. However, miniaturization can amplify noise or drift, requiring advanced calibration and packaging. Optical sensors may need carefully managed light paths or environmental controls to prevent contamination. Balancing these complexities with the benefits of small form factor, sensitivity, and speed extends instrumentation into realms once deemed too small or too remote to measure accurately.

12. How does adaptive or closed-loop measurement feed into broader control systems, and why does this blur the line between sensor and actuator?

Answer:

Adaptive measurement systems adjust their parameters—like sampling rate or gain—based on real-time feedback about signal conditions. This allows the instrument to enhance accuracy under changing conditions or filter out extraneous noise dynamically. When integrated into closed-loop control, sensor data immediately informs actuators to maintain a target state (e.g., adjusting valves in a chemical process). The sensor itself may be embedded in an actuator assembly (e.g., a smart motor with built-in current and position sensors), merging sensing and actuation into a single module. This tight coupling improves responsiveness and precision, transforming sensors from passive measurement tools into integral components of self-regulating, intelligent systems.
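
Adaptive gain adjustment can be sketched as a small auto-ranging loop: raise the gain while the signal uses too little of the input range, and lower it when the signal approaches full scale. The gain steps and the 0.9 and 0.4 thresholds below are hypothetical. They are chosen so that doubling the gain from just under the lower threshold stays below the upper one, which avoids oscillating between two settings.

```python
GAINS = (1, 2, 4, 8, 16, 32)  # hypothetical programmable-gain steps


def next_gain_index(index, peak_fraction, high=0.9, low=0.4):
    """Choose the next gain step from the observed peak level.

    peak_fraction is the peak amplitude divided by full scale at the current
    gain. Values above `high` risk clipping; values below `low` waste
    resolution.
    """
    if peak_fraction > high and index > 0:
        return index - 1
    if peak_fraction < low and index < len(GAINS) - 1:
        return index + 1
    return index


def settle(amplitude, index=0, max_steps=10):
    """Iterate until the gain stops changing for a steady input amplitude
    (amplitude is expressed as a fraction of full scale at gain 1)."""
    for _ in range(max_steps):
        new_index = next_gain_index(index, amplitude * GAINS[index])
        if new_index == index:
            break
        index = new_index
    return index


quiet = settle(0.03)              # small signal: gain steps up
loud = settle(0.1, index=quiet)   # signal grows: gain backs off
print(GAINS[quiet], GAINS[loud])
```

In real hardware the measured peak would clip at full scale instead of exceeding it, but a clipped reading still sits above the upper threshold, so the same rule applies.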