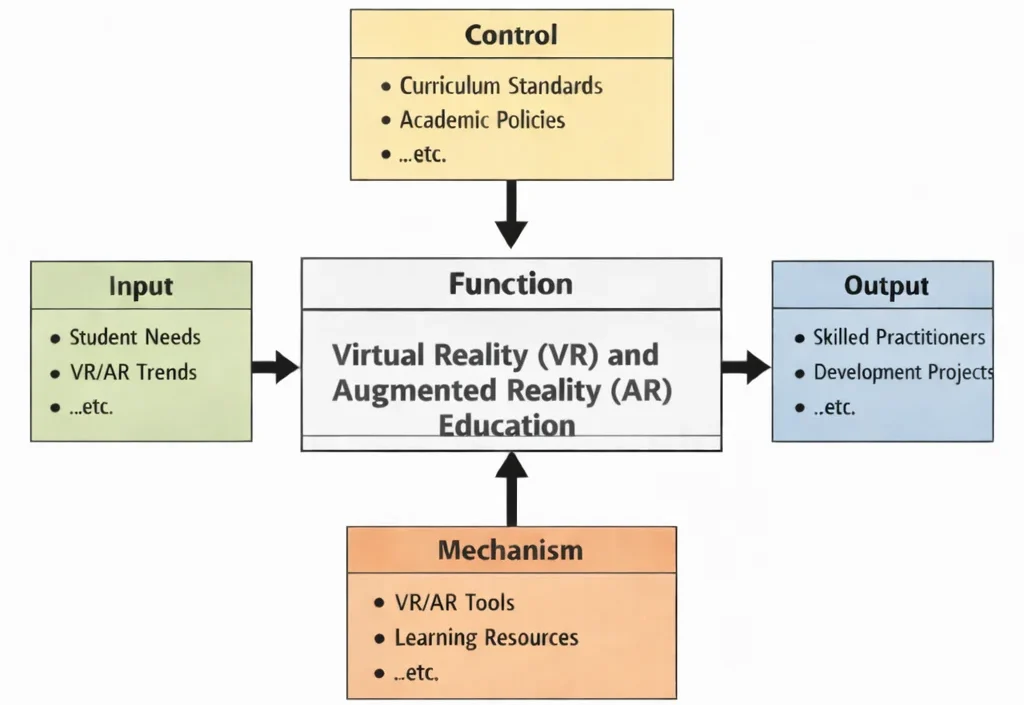

Virtual Reality (VR) and Augmented Reality (AR) Education is, at its core, the art of teaching people to design presence—that peculiar feeling that a digital experience is not merely viewed, but inhabited. The diagram helps clarify what must come together for that to happen. Students arrive with different goals: some want to build simulations for engineering or healthcare, some want interactive storytelling, some are drawn to training, therapy, or industrial design. At the same time, the field moves quickly, and today’s headsets, interaction patterns, and deployment platforms rarely stand still for long. These inputs keep the learning grounded in both human motivation and technical reality.

Controls matter because immersive technology has consequences. Curriculum standards and academic policies do more than organise lessons; they set boundaries for quality and responsibility—comfort, accessibility, safety, ethics, user privacy, and the discipline of evaluation. In VR/AR, a design that looks brilliant on paper can fail in minutes if users feel disoriented, overwhelmed, or excluded. The controls ensure that students learn to measure experience, not just to produce visuals.

Mechanisms then supply the “workbench”: hardware, software, development environments, asset pipelines, testing methods, and carefully chosen learning resources. With these in place, students can prototype rapidly, observe how real users respond, and refine interaction until it feels natural. When the function succeeds, the outputs are unmistakable—practitioners who understand both the technical stack and the psychology of immersion, and projects that demonstrate usable, meaningful experiences rather than mere novelty.

This IDEF0 diagram models “Virtual Reality (VR) and Augmented Reality (AR) Education” as an organized transformation process. Inputs such as student needs and VR/AR trends enter from the left, while controls—curriculum standards and academic policies—shape objectives, safety considerations, assessment, and scope from above. The central function produces outputs on the right, including skilled practitioners and completed development projects. Supporting mechanisms from below—VR/AR tools and learning resources—provide the practical foundation for building immersive experiences, testing interactions, refining usability, and learning through iterative prototyping.

Virtual Reality (VR) and Augmented Reality (AR) have redefined the boundaries of digital interaction, transforming how users perceive, engage with, and respond to digital environments. While game development remains one of the most exciting applications of VR and AR, their influence now extends into fields like education, healthcare, architecture, and cybersecurity. Developers working in this space require a deep understanding of 3D modeling, sensory feedback, real-time rendering, and the immersive integration of narratives and mechanics.

One foundation of immersive design is narrative design, where AR/VR environments are crafted to respond dynamically to user decisions. Coupling that with artificial intelligence in games creates responsive virtual characters and environments that adapt in real time. This is particularly potent when layered with procedural storytelling systems within game engine development.

In these immersive contexts, data collection and storage become vital. From tracking user gaze and gesture to logging real-time biometric data, AR/VR applications must analyze complex sensory inputs. Insights come from data analysis, supported by data cleaning and preprocessing routines that ensure accuracy. These datasets fuel improvements in design, usability, and personalized experiences.
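
As a rough illustration of the cleaning and analysis steps described above, the following Python sketch filters unreliable gaze samples and then totals how long the user looked at each target. The sample fields (`t`, `target`, `confidence`), the thresholds, and both function names are hypothetical choices made for this example, not part of any particular headset SDK.

```python
from collections import defaultdict

def clean_gaze_samples(samples, min_confidence=0.6):
    """Drop samples with no target or low tracker confidence, then sort by time."""
    valid = [s for s in samples
             if s["target"] is not None and s["confidence"] >= min_confidence]
    return sorted(valid, key=lambda s: s["t"])

def dwell_time_per_target(samples, max_gap=0.1):
    """Sum the time between consecutive samples that stay on the same target.

    Gaps longer than max_gap seconds are ignored, since the tracker
    probably lost the eye in between.
    """
    totals = defaultdict(float)
    for prev, cur in zip(samples, samples[1:]):
        gap = cur["t"] - prev["t"]
        if prev["target"] == cur["target"] and gap <= max_gap:
            totals[cur["target"]] += gap
    return dict(totals)

# Hypothetical raw log: timestamps in seconds, confidence between 0 and 1.
raw = [
    {"t": 0.00, "target": "menu", "confidence": 0.9},
    {"t": 0.05, "target": "menu", "confidence": 0.8},
    {"t": 0.10, "target": "menu", "confidence": 0.2},  # low confidence, dropped
    {"t": 0.15, "target": "door", "confidence": 0.9},
    {"t": 0.20, "target": "door", "confidence": 0.9},
    {"t": 0.25, "target": None, "confidence": 0.9},    # no target, dropped
]
dwell = dwell_time_per_target(clean_gaze_samples(raw))
print(dwell)
```

A real pipeline would likely add smoothing and outlier handling, but the two-stage shape (clean first, then aggregate) stays the same.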

The technical underpinnings of AR/VR are closely tied to tools and technologies in data science, enabling simulation of physics, real-time feedback systems, and adaptive scene rendering. Data visualization plays a central role, helping developers interpret user interaction patterns and optimize spatial layout and object responsiveness.

In safety-critical applications, immersive systems must incorporate protocols from network security and endpoint security to prevent breaches. Protection of user data, especially in sensitive AR environments like remote surgery or military simulations, demands robust systems for cybersecurity policy, identity and access management, and cryptography.

Immersive simulations also benefit from integration with big data analytics and domain-specific analytics, especially when deployed at scale. Whether in training modules, virtual classrooms, or collaborative workspaces, these tools help tailor content to individual preferences and performance metrics. Such insights also contribute to the ethical and social aspects of immersive technology design.

As VR/AR hardware becomes more integrated into public and private sectors, new challenges arise in emerging areas of cybersecurity, such as deepfakes, spoofed sensor input, and virtual surveillance. Tools from incident response and forensics are crucial for diagnosing vulnerabilities, while ethical hacking allows developers to test and improve security through simulated attacks.

Moreover, projects that integrate real-world operations with mixed-reality environments, such as smart factories or infrastructure monitoring, must comply with protocols found in CPS security and operational technology (OT) security. Monitoring and modeling such environments with threat intelligence tools helps keep the infrastructure secure and resilient.

As this field grows, VR and AR will continue to depend on cross-disciplinary knowledge, blending security protocols, data pipelines, narrative craft, and game mechanics. Students and professionals entering this space are encouraged to explore data science and analytics holistically and pursue ethical, user-centered, and innovative applications of these transformative technologies.

This artwork portrays Virtual Reality and Augmented Reality as a blend of human experience, 3D computing, and collaborative engineering. A prominent VR headset figure symbolizes full immersion—stepping into a simulated environment where perception is shaped by software and sensors. Below, multiple creators sit at workstations, suggesting the teamwork behind immersive systems: designers, programmers, and 3D artists building scenes, interactions, and user interfaces. At the center, a miniature city-like model and scaffolding hint at spatial computing—constructing digital objects that can be navigated, manipulated, and scaled like real structures. Surrounding icons, glowing geometric shapes, and interface overlays represent tracking, rendering pipelines, depth mapping, and real-time feedback. The overall composition captures the core idea: VR creates an entirely digital world you enter, while AR layers digital content onto the physical world, turning spaces into interactive environments for learning, design, training, and entertainment.

Definition of Virtual Reality and Augmented Reality

Virtual Reality (VR):

A fully immersive digital environment that isolates users from the real world, allowing them to interact with virtual objects and scenarios through devices like headsets and motion controllers.

Augmented Reality (AR):

A technology that overlays digital elements, such as images, sounds, and data, onto the real world using devices like smartphones, AR glasses, or headsets.

Core Objectives of VR/AR Development:

Immersion:

Create realistic and engaging experiences that captivate users.

Interactivity:

Enable intuitive and meaningful interactions with digital content.

Accessibility:

Optimize performance across diverse devices and platforms.

Innovation:

Continuously push the technological limits to enhance user experiences.

Key Components of VR/AR Development

Hardware Integration

- Definition:

Ensuring seamless compatibility between the application and VR/AR hardware.

- Key Devices:

- VR Headsets: Meta Quest (formerly Oculus Quest), HTC Vive, PlayStation VR.

- AR Platforms: Microsoft HoloLens, Magic Leap, ARKit (Apple), and ARCore (Google).

- Input Devices: Motion controllers, hand-tracking systems, and haptic feedback devices.

- Applications:

- Providing real-time feedback to enhance immersion.

- Utilizing sensors for accurate spatial tracking.

3D Modeling and Environment Design

- Definition:

Creating detailed, interactive, and realistic virtual environments for VR and AR.

- Key Techniques:

- Low-Poly Optimization: Balancing detail with performance for real-time rendering.

- Texturing and Lighting: Adding realism through advanced shaders and dynamic lighting.

- 360-Degree Spaces: Designing environments that fully surround the user.

- Applications:

- Crafting lifelike worlds for VR games.

- Designing AR overlays for interior design or architecture.

Spatial Computing and Tracking

- Definition:

Utilizing spatial awareness to map and interact with real-world or virtual environments.

- Key Features:

- SLAM (Simultaneous Localization and Mapping): Mapping physical spaces for AR interactions.

- Positional Tracking: Enabling movement within a VR environment.

- Object Recognition: Identifying and interacting with real-world objects in AR.

- Applications:

- AR apps for furniture placement, like IKEA Place.

- Room-scale VR experiences in games like Beat Saber.
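
To make the tracking ideas above more concrete, here is a minimal Python sketch of one small step an AR app performs once a surface has been mapped: converting a point defined relative to an anchor into world coordinates. The function name `anchor_to_world`, the y-up axis convention, and the yaw-only rotation are simplifying assumptions for illustration; production SDKs work with full 3D poses.

```python
import math

def anchor_to_world(local_point, anchor_position, anchor_yaw_deg):
    """Transform a point from anchor-local space into world space.

    Assumes a y-up coordinate system and an anchor that is only rotated
    about the vertical axis (yaw). The point is rotated first, then
    translated by the anchor position.
    """
    x, y, z = local_point
    yaw = math.radians(anchor_yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    # Standard rotation about the y axis.
    rotated_x = c * x + s * z
    rotated_z = -s * x + c * z
    ax, ay, az = anchor_position
    return (rotated_x + ax, y + ay, rotated_z + az)

# A virtual chair placed 1 m along the local z axis of an anchor
# that sits at (2, 0, 3) and is rotated 90 degrees about the vertical axis.
print(anchor_to_world((0.0, 0.0, 1.0), (2.0, 0.0, 3.0), 90.0))
```

Keeping content in anchor-local coordinates means the virtual object stays attached to the real surface even when the tracking system later refines the anchor's estimated pose.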

User Experience (UX) and Interface Design

- Definition:

Designing intuitive and engaging interfaces that align with VR/AR interactivity.

- Key Techniques:

- Gaze-Based Navigation: Allowing users to control menus by looking at options.

- Gesture Controls: Leveraging hand tracking for interaction.

- Minimizing Motion Sickness: Ensuring smooth transitions and reducing disorientation.

- Applications:

- Creating interfaces for VR training simulations.

- Developing AR HUDs (Heads-Up Displays) for smart glasses.
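
Gaze-based navigation, listed above, is often implemented with a dwell timer: an option is selected once the user has looked at it continuously for a set time. The sketch below shows that logic in Python, assuming a hypothetical `DwellSelector` class that is fed the currently gazed item once per frame. The one-second default is an illustrative value, not a recommendation.

```python
class DwellSelector:
    """Select a menu item after the user gazes at it for dwell_seconds."""

    def __init__(self, dwell_seconds=1.0):
        self.dwell_seconds = dwell_seconds
        self.current = None   # item currently under the gaze ray
        self.elapsed = 0.0    # seconds spent on the current item

    def update(self, gazed_item, dt):
        """Call once per frame; returns the selected item or None."""
        if gazed_item != self.current:
            # Gaze moved to a different item (or away): restart the timer.
            self.current = gazed_item
            self.elapsed = 0.0
            return None
        if gazed_item is None:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_seconds:
            self.elapsed = 0.0  # reset so the item is not re-selected every frame
            return gazed_item
        return None

selector = DwellSelector(dwell_seconds=0.5)
for frame in range(4):
    print(frame, selector.update("play", 0.2))
```

In practice the timer is usually paired with a visual progress indicator so users can see a selection coming and look away to cancel it.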

Performance Optimization

- Definition:

Ensuring smooth performance and responsiveness across VR/AR devices.

- Key Strategies:

- Frame Rate Optimization: Maintaining a stable frame rate (e.g., 90 FPS for VR, which leaves roughly 11 ms to render each frame) to prevent lag.

- Efficient Rendering: Using techniques like culling and level-of-detail (LOD) adjustments.

- Battery Management: Optimizing AR apps for mobile devices.

- Applications:

- Delivering seamless gameplay in VR titles.

- Extending the usability of AR apps on smartphones.
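
The frame rate and level-of-detail strategies above can be sketched in a few lines of Python. The first function converts a target frame rate into a per-frame time budget, and the second picks a mesh detail level from the viewer's distance. The distance thresholds and function names are hypothetical; engines such as Unity and Unreal provide their own LOD systems with different controls.

```python
def frame_budget_ms(target_fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / target_fps

def select_lod(distance_m, thresholds=(5.0, 15.0, 40.0)):
    """Pick a level of detail from the distance to the viewer.

    Returns 0 for the most detailed mesh; higher numbers mean coarser
    meshes. Objects beyond the last threshold get the coarsest level.
    """
    for level, limit in enumerate(thresholds):
        if distance_m < limit:
            return level
    return len(thresholds)

print(round(frame_budget_ms(90), 2))                   # budget at 90 FPS
print([select_lod(d) for d in (2.0, 10.0, 25.0, 100.0)])
```

The budget makes the trade-off explicit: everything the application does in a frame, including simulation and rendering for both eyes, has to fit inside that window.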

Applications of VR/AR Development

Gaming and Entertainment

- VR: Immersive games like Half-Life: Alyx or The Walking Dead: Saints & Sinners.

- AR: Mobile games like Pokémon GO and interactive museum exhibits.

Education and Training

- VR: Simulated environments for training pilots, surgeons, or engineers.

- AR: Augmented anatomy lessons or historical site visualizations for students.

Healthcare and Therapy

- VR: Pain management through distraction-based therapy or phobia exposure treatments.

- AR: Real-time assistance for surgeons using augmented overlays during operations.

Retail and E-Commerce

- VR: Virtual shopping experiences where users explore stores digitally.

- AR: Try-before-you-buy experiences, like virtual fitting rooms for clothing or makeup.

Architecture and Real Estate

- VR: Virtual walkthroughs of unbuilt properties or designs.

- AR: Overlaying furniture and decor in real-world spaces.

Challenges in VR/AR Development

Hardware Limitations

- VR requires high-performance devices, which can be cost-prohibitive.

- AR faces constraints like limited field-of-view in headsets and mobile battery life.

Motion Sickness

- Poor frame rates or lag can cause discomfort in VR users.

- Developers must design motion systems that minimize nausea.

Content Accessibility

- Ensuring experiences are inclusive and accessible to users with disabilities.

Cross-Platform Development

- Creating applications compatible with diverse devices and ecosystems.

High Development Costs

- Developing VR/AR experiences demands skilled professionals and cutting-edge tools, increasing production costs.

Emerging Trends in VR/AR Development

Mixed Reality (MR):

- Combining VR and AR for seamless transitions between virtual and physical worlds.

- Examples: Microsoft HoloLens projects blending real and digital environments.

AI-Driven Enhancements:

- Integrating AI for adaptive environments, real-time translation, and procedural content generation.

- Examples: Virtual tutors in VR educational platforms.

Tactile Feedback and Haptics:

- Enhancing immersion through gloves, suits, and other devices that simulate touch.

- Examples: Haptic feedback in gloves for realistic object interactions.

Social VR/AR Experiences:

- Creating collaborative spaces for socializing, gaming, or working.

- Examples: VR meeting platforms like Horizon Workrooms and the former AltspaceVR (shut down in 2023).

5G Integration:

- Leveraging 5G networks for real-time streaming and enhanced AR/VR performance.

Future of VR/AR Development

The future of VR and AR lies in deeper integration into daily life, offering solutions for education, healthcare, remote work, and entertainment. As hardware becomes more affordable and accessible, the potential for large-scale adoption grows. Innovations in AI, machine learning, and cloud computing will further enhance the sophistication of VR/AR experiences, enabling more realistic interactions, procedural worlds, and collaborative applications.

Why Study Virtual Reality and Augmented Reality

Immersive Technology for Real-World Applications

Studying Virtual Reality (VR) and Augmented Reality (AR) enables students to design fully immersive environments or overlay digital information onto the real world. These technologies are widely used in fields like healthcare (surgical training), architecture (virtual walkthroughs), education (interactive simulations), and entertainment.

Interdisciplinary Skill Development

VR and AR development combines computer graphics, 3D modeling, spatial computing, user interface design, and human perception. Students learn to create experiences that are not only technically sound but also psychologically engaging.

Future-Ready Career Paths

As demand for immersive content rises, skills in VR/AR design are crucial for careers in game development, remote training, product design, and virtual collaboration tools.

Virtual Reality and Augmented Reality: Conclusion

Virtual Reality and Augmented Reality are revolutionizing how we perceive and interact with the world. By merging cutting-edge technology with creativity, VR/AR developers are crafting immersive experiences that transcend traditional boundaries of storytelling, education, and innovation. While challenges like hardware constraints and motion sickness remain, the industry’s rapid advancements signal a transformative future where virtual and augmented realities become integral to human life.

Frequently Asked Questions about Virtual and Augmented Reality in Game Development

What is the difference between virtual reality (VR) and augmented reality (AR) in games?

Virtual reality (VR) places the player inside a fully digital environment using a headset that blocks out the physical world, while augmented reality (AR) overlays digital objects and information on top of the real world through a phone, tablet, or headset. In games, VR focuses on immersion and presence, making you feel as if you are inside the game world, whereas AR blends game elements with your surroundings so you can still see and move within real spaces.

Do I need powerful hardware to start learning VR and AR game development?

High-end hardware is helpful for advanced VR and AR development, but it is not always necessary to begin learning. Many introductory projects can run on mid-range PCs, laptops, and even smartphones using affordable headsets or simple AR toolkits. At the pre-university level, you can start by learning the basics of 3D graphics, interaction design, and user interfaces, and then gradually move on to more demanding projects as you gain access to better hardware and university facilities.

What skills should I develop in secondary school if I am interested in VR and AR for games?

If you are interested in VR and AR for games, it is useful to build a foundation in programming, 3D thinking, and human–computer interaction. Learning a language such as C#, C++, or Python will help you understand game logic and interaction. Mathematics, especially algebra, trigonometry, and vectors, will support your understanding of 3D spaces and movement. Art, design, and media studies can also help you think about visual composition, storytelling, and user experience, which are all important for immersive environments.
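
As a small example of why vectors and trigonometry matter, the Python sketch below uses a dot product to decide whether an object falls inside the player's field of view. The function names, the 90-degree default, and the simple cone-shaped view are assumptions for illustration; real headsets have more complex view volumes.

```python
import math

def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)

def angle_between_deg(a, b):
    """Angle between two vectors, from the dot product of their unit vectors."""
    a, b = normalize(a), normalize(b)
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))

def in_field_of_view(head_pos, forward, obj_pos, fov_deg=90.0):
    """True if the object lies within half the field of view of the forward direction."""
    to_object = tuple(o - h for o, h in zip(obj_pos, head_pos))
    return angle_between_deg(forward, to_object) <= fov_deg / 2

head, forward = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
print(in_field_of_view(head, forward, (0.0, 0.0, 5.0)))  # straight ahead
print(in_field_of_view(head, forward, (5.0, 0.0, 0.0)))  # directly to the side
```

The same few operations (subtracting positions, normalizing, taking a dot product) appear throughout 3D programming, from aiming and lighting to deciding which objects need to be drawn.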

Which tools and engines are commonly used for VR and AR game development?

Unity and Unreal Engine are two of the most widely used engines for VR and AR game development, offering built-in support for popular headsets and AR platforms. Developers often work with SDKs and APIs such as the OpenXR standard, Meta Quest tools, SteamVR, ARCore, or ARKit, depending on their target devices. At pre-university and undergraduate levels, many students start with Unity plus a beginner-friendly VR or AR toolkit, then move to more advanced features as they learn about performance, interaction, and cross-platform deployment.

How is designing for VR and AR different from designing traditional screen-based games?

Designing for VR and AR is different because the player’s body, surroundings, and sense of presence become part of the experience. You must consider comfort, motion sickness, field of view, and the fact that players may look and move in any direction. Interaction often relies on head movement, hand tracking, and spatial gestures rather than just buttons and joysticks. Good VR and AR design respects the player’s physical space, provides clear visual and audio cues, and avoids sudden, uncontrolled camera motions that can cause discomfort.

What are some non-entertainment applications of VR and AR that students should be aware of?

Beyond entertainment, VR and AR are used in education, healthcare, architecture, engineering, tourism, and training simulations. Examples include virtual laboratories for science learning, surgical training environments, architectural walkthroughs, AR manuals for equipment maintenance, and safety training for hazardous tasks. Understanding these applications can help students see how VR and AR skills connect to wider careers in technology, design, and professional training, not only to game studios.

How can I build a pre-university portfolio that shows my interest in VR and AR game development?

To build a pre-university portfolio for VR and AR, start with small, focused projects that demonstrate how you handle interaction, immersion, and user comfort. You might create a simple VR exploration scene, an AR scavenger hunt that recognises real-world objects, or a prototype that visualises data in 3D space. Record short videos or screenshots, and include brief write-ups explaining your design goals, technical approach, and what you learned about user experience. Admissions teams value evidence that you can finish projects and reflect critically on them.

What university majors and careers can grow from an interest in VR and AR for games?

An interest in VR and AR for games can lead to university majors such as computer science, software engineering, interactive media, game design and development, human–computer interaction, or digital arts. Career paths include VR or AR developer, interaction designer, technical artist, experience designer, and simulation engineer. The same skills are also useful in fields such as training and simulation, healthcare technology, architecture and urban planning, and cultural heritage, where immersive experiences are increasingly important.

Virtual Reality and Augmented Reality: Review Questions and Answers

1. What is virtual reality (VR) in game development and how does it enhance gameplay?

Answer: Virtual reality (VR) in game development is a technology that immerses players in a fully digital environment using specialized headsets and motion tracking devices. It enhances gameplay by creating a sense of presence and interactivity that traditional games cannot match. VR enables players to experience the game world in a more visceral and engaging manner, as every movement and gesture is translated into the digital realm. This immersive quality not only increases player engagement but also opens up innovative possibilities for interactive storytelling and dynamic game mechanics.

2. What is augmented reality (AR) and how is it used in interactive game experiences?

Answer: Augmented reality (AR) overlays digital elements such as images, animations, or information onto the real world using devices like smartphones or AR glasses. In interactive game experiences, AR blends virtual content with the physical environment, allowing players to engage with game elements in real time. This technology creates a hybrid experience where digital characters and objects interact with real-world settings, enriching the gameplay with contextual and situational dynamics. As a result, AR provides a unique platform for innovative game mechanics and personalized experiences that extend beyond traditional screen-based interactions.

3. How do VR and AR technologies differ in terms of immersion and user interaction?

Answer: VR and AR technologies differ primarily in the level of immersion and the mode of user interaction they offer. VR completely replaces the real world with a virtual environment, fully immersing the player in a digital space that simulates real-world experiences. In contrast, AR enhances the real world by overlaying digital information on top of it, allowing for a blend of physical and virtual interactions. This fundamental difference means that VR typically provides a more immersive experience, while AR offers a more integrated and context-aware interaction with the real world. Both technologies, however, are designed to elevate user engagement and interactivity in distinct ways.

4. What are the key hardware components required for effective VR game development?

Answer: Effective VR game development requires several key hardware components, including high-resolution head-mounted displays (HMDs), motion tracking sensors, powerful graphics processing units (GPUs), and specialized controllers or input devices. These components work together to create a seamless and immersive virtual experience by accurately tracking the user’s movements and rendering high-quality visuals in real time. The HMD provides the visual interface, while motion sensors capture player movements, and controllers enable interactive gameplay. Ensuring compatibility and performance across these hardware elements is crucial for delivering a smooth and engaging VR experience.

5. How does motion tracking technology contribute to VR gaming experiences?

Answer: Motion tracking technology is fundamental to VR gaming experiences because it captures the player’s physical movements and translates them into the virtual environment. It uses cameras and inertial sensors such as accelerometers and gyroscopes to monitor head, hand, and body movements, allowing for natural and intuitive interactions within the game. Accurate motion tracking enhances immersion by ensuring that the digital world responds realistically to the player’s actions, creating a more engaging and believable experience. Additionally, low-latency tracking keeps the VR system responsive, which is critical for maintaining user comfort and preventing motion sickness.
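
One classic way to combine inertial sensor readings is a complementary filter, sketched below in Python for a single angle (pitch). It is a simplified teaching example, not how any specific headset works: the axis convention, the `alpha` value, and the function names are assumptions, and commercial systems use more sophisticated fusion that also draws on camera data.

```python
import math

def accel_pitch_deg(ax, ay, az):
    """Estimate pitch from the direction of gravity in accelerometer readings.

    Assumes the device is not accelerating apart from gravity and that
    z points up when the device lies flat.
    """
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))

def complementary_filter(pitch_deg, gyro_rate_dps, accel, dt, alpha=0.98):
    """Blend a gyroscope-integrated angle with an accelerometer angle.

    The gyroscope is smooth but drifts over time; the accelerometer is
    noisy but does not drift. alpha sets how much the gyroscope is trusted.
    """
    gyro_estimate = pitch_deg + gyro_rate_dps * dt
    return alpha * gyro_estimate + (1 - alpha) * accel_pitch_deg(*accel)

# Start with a 10-degree error while the device actually lies flat and still.
pitch = 10.0
for _ in range(500):
    pitch = complementary_filter(pitch, 0.0, (0.0, 0.0, 9.81), dt=0.01)
print(round(pitch, 3))  # the error decays toward zero
```

The loop shows the filter's main benefit: an accumulated drift error is gradually pulled back toward the gravity-based estimate without the jitter of using the accelerometer alone.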

6. What challenges do developers face when integrating VR and AR into game development?

Answer: Developers face numerous challenges when integrating VR and AR into game development, including high hardware costs, performance optimization, and ensuring a seamless user experience across diverse platforms. Creating immersive environments requires significant computational power, which can lead to issues with latency and rendering performance. Additionally, developers must address user comfort, as prolonged use of VR headsets can cause fatigue or motion sickness. Balancing technical complexity with creative design and maintaining cross-platform compatibility further complicate the development process, requiring innovative solutions and rigorous testing.

7. How do VR and AR enhance narrative design and storytelling in games?

Answer: VR and AR enhance narrative design and storytelling by immersing players in interactive environments where the story unfolds around them. In VR, players experience a fully immersive world that can convey a deep sense of place and atmosphere, while AR enriches the real world with narrative elements that provide context and depth. These technologies allow for dynamic, branching storylines that react to player choices, making the narrative more engaging and personalized. By integrating visual, auditory, and interactive elements, VR and AR create a multi-sensory storytelling experience that enhances emotional connection and narrative impact.
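
A branching storyline of the kind described here can be modeled as a graph of scenes connected by player choices. The Python sketch below is one minimal way to represent it; the scene names, actions, and the `STORY` structure are invented for illustration.

```python
# Each scene lists the actions available there and the scene each one leads to.
STORY = {
    "lobby": {"text": "A locked lab door and a blinking alarm panel.",
              "choices": {"open_door": "lab", "wait": "alarm"}},
    "lab":   {"text": "A keycard lies on the bench.",
              "choices": {"take_keycard": "exit"}},
    "alarm": {"text": "Sirens start. The corridor fills with smoke.",
              "choices": {"run": "exit"}},
    "exit":  {"text": "You step outside.", "choices": {}},
}

def advance(scene, action):
    """Return the next scene, or stay in place if the action is not valid here."""
    return STORY[scene]["choices"].get(action, scene)

def play(start, actions):
    """Apply a sequence of player actions and return the scenes visited."""
    path = [start]
    scene = start
    for action in actions:
        scene = advance(scene, action)
        path.append(scene)
    return path

print(play("lobby", ["open_door", "take_keycard"]))
print(play("lobby", ["wait", "run"]))
```

In a VR or AR title the actions would come from physical interactions, such as grabbing an object or walking to a location, rather than from menu choices, but the underlying graph can stay the same.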

8. What role do specialized software tools play in developing VR and AR games?

Answer: Specialized software tools are critical in developing VR and AR games as they provide the frameworks and libraries needed to create, test, and optimize immersive experiences. Game engines like Unity and Unreal Engine offer built-in support for VR and AR development, including features for rendering, physics simulation, and user input handling. These tools enable developers to efficiently design complex environments and interactive elements that are essential for immersive gameplay. Additionally, software tools facilitate collaboration among teams, streamline the development process, and help ensure that the final product meets high standards of performance and visual quality.

9. How can game developers optimize performance for VR and AR applications?

Answer: Game developers can optimize performance for VR and AR applications by employing techniques such as efficient rendering, level-of-detail (LOD) management, and performance profiling. Optimization ensures that games run smoothly even on hardware with limited resources, reducing latency and maintaining high frame rates essential for immersive experiences. Developers may also use cloud-based solutions and distributed computing to handle computationally intensive tasks, further improving performance. Continuous testing and iterative refinements help identify bottlenecks and ensure that the application delivers a seamless, engaging experience to users.
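
Performance profiling often starts with something as simple as summarizing recorded frame times. The following Python sketch computes the mean, an approximate 99th percentile, and the number of frames that missed the budget. The `frame_stats` function and the sample data are hypothetical; engine profilers report far more detail.

```python
def frame_stats(frame_times_ms, target_fps=90):
    """Summarize frame times against the budget implied by target_fps."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    budget = 1000.0 / target_fps
    ordered = sorted(frame_times_ms)
    p99_index = min(n - 1, (99 * n) // 100)  # simple nearest-rank style estimate
    missed = sum(1 for t in frame_times_ms if t > budget)
    return {
        "budget_ms": budget,
        "mean_ms": sum(frame_times_ms) / n,
        "p99_ms": ordered[p99_index],
        "missed_frames": missed,
        "missed_ratio": missed / n,
    }

# Hypothetical capture: mostly 10 ms frames with two slow spikes.
times = [10.0] * 98 + [20.0, 30.0]
for name, value in frame_stats(times).items():
    print(name, round(value, 2))
```

The example shows why averages can mislead: the mean sits comfortably under the budget while the slowest frames, which are the ones users notice, are well over it.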

10. What future trends in VR and AR are expected to shape the evolution of game development?

Answer: Future trends in VR and AR that are expected to shape the evolution of game development include advancements in AI-driven interactions, improvements in hardware performance, and increased integration of haptic feedback technologies. These trends will lead to more immersive and responsive game experiences, where AI enhances interactivity and realism. Additionally, the development of lighter, more comfortable headsets and better motion tracking systems will broaden the accessibility of VR and AR technologies. As these trends converge, they will drive innovation in game design and create new opportunities for interactive storytelling and personalized gameplay.

Virtual Reality and Augmented Reality: Thought-Provoking Questions and Answers

1. How will the integration of advanced AI techniques further transform VR and AR game experiences?

Answer: Advanced AI techniques have the potential to revolutionize VR and AR game experiences by enabling more realistic and dynamic interactions within the virtual environment. AI can drive adaptive behaviors for non-player characters (NPCs), create procedurally generated content, and respond intelligently to player actions in real time. This integration will lead to more immersive and personalized experiences, where the game world evolves based on individual player behavior and preferences.

The convergence of AI with VR and AR will also streamline development workflows, allowing developers to simulate complex scenarios and optimize game mechanics more efficiently. As a result, the gaming experience becomes not only more engaging but also more tailored, pushing the boundaries of traditional gameplay and storytelling.

2. What ethical considerations arise from the use of VR and AR technologies in game development, and how should developers address them?

Answer: The use of VR and AR technologies in game development raises ethical considerations such as privacy, data security, and the psychological effects of immersive experiences. These technologies often collect sensitive user data and can have a profound impact on a player’s perception of reality, which necessitates careful handling of personal information and transparency about data usage. Developers must ensure that they adhere to strict data protection standards and create environments that are safe and non-exploitative.

Addressing these concerns involves implementing robust security protocols, obtaining informed consent from users, and incorporating ethical guidelines into the design process. Additionally, developers should engage with ethicists and regulatory bodies to continually assess the potential social impacts of their technologies, ensuring that innovations enhance user experience without compromising ethical standards.

3. In what ways can narrative design be enhanced through the use of VR and AR technologies?

Answer: VR and AR technologies offer innovative avenues for enhancing narrative design by creating immersive environments where players can experience the story in a more visceral way. These technologies allow narrative elements to be integrated directly into the game world, enabling players to interact with the story through physical movements and real-time decision-making. This interactivity transforms traditional storytelling into a dynamic, engaging process that adapts to the player’s actions, leading to more personalized and memorable narratives.

Furthermore, the use of VR and AR can break the fourth wall, enabling narratives to blend with the real world or create entirely new dimensions of interactivity. This convergence of technology and storytelling not only deepens immersion but also opens up new possibilities for creative expression, allowing designers to craft multi-layered experiences that resonate with players on both emotional and intellectual levels.

4. How might future hardware advancements impact the development of VR and AR game engines?

Answer: Future hardware advancements, such as faster processors, improved graphics cards, and more efficient motion sensors, will significantly impact the development of VR and AR game engines by enabling more complex simulations and higher-fidelity graphics. As hardware capabilities expand, game engines can incorporate richer details, more sophisticated physics, and advanced AI, resulting in more immersive and realistic gaming experiences. This progression will allow developers to push the boundaries of what is possible, creating expansive virtual worlds and interactive environments that respond in real time to player input.

Additionally, enhanced hardware can lead to improved comfort and accessibility for users, reducing latency and motion sickness while enabling longer play sessions. These advancements will not only streamline the development process but also open up new opportunities for innovation in game design, making VR and AR experiences more appealing and widely adopted.

5. What strategies can be used to balance immersion and user comfort in VR and AR games?

Answer: Balancing immersion and user comfort in VR and AR games requires a careful approach that addresses both the technical and design aspects of the experience. Strategies include optimizing frame rates and reducing latency to prevent motion sickness, designing intuitive user interfaces, and providing customizable comfort settings such as an adjustable field of view and the option to reduce or disable motion blur. Developers can also incorporate breaks and gradual acclimatization phases to help users adapt to immersive environments.

In addition, thorough playtesting and user feedback are essential for identifying and mitigating discomfort issues. By continuously refining both hardware and software elements, developers can create engaging experiences that maximize immersion while ensuring that players remain comfortable and enjoy prolonged gameplay.

6. How can game developers leverage cross-platform development to maximize the reach of VR and AR games?

Answer: Game developers can leverage cross-platform development by using versatile game engines and development tools that support multiple operating systems and hardware configurations. This approach allows games to be deployed on various VR and AR platforms, such as high-end PC headsets, standalone VR devices, and mobile AR applications, broadening the audience and market reach. Done well, cross-platform development keeps the user experience consistent across devices while still allowing performance to be tuned for each platform’s capabilities.

Adopting cross-platform frameworks also enables developers to share code and assets, reducing development time and costs. By prioritizing interoperability and scalability, game studios can effectively target a diverse range of users and adapt to evolving technology trends, thereby maximizing the impact and commercial success of their VR and AR titles.

7. What role does user experience (UX) design play in the success of VR and AR game development?

Answer: User experience (UX) design is critical to the success of VR and AR game development because it ensures that the interface, interactions, and overall environment are intuitive, engaging, and accessible to players. Effective UX design in immersive environments considers factors such as ease of navigation, clarity of information, and physical comfort to create a seamless gaming experience. By prioritizing user experience, developers can reduce friction and enhance player satisfaction, ultimately leading to higher engagement and retention.

Furthermore, UX design in VR and AR involves iterative testing and refinement to accommodate diverse user needs and preferences. This ongoing process of feedback and adjustment ensures that the final product not only meets technical requirements but also delivers a compelling and enjoyable experience that resonates with a broad audience.

8. How can the integration of real-time data analytics enhance the immersive experience in VR and AR games?

Answer: Integrating real-time data analytics into VR and AR games can significantly enhance immersion by adapting the game environment based on live data and player behavior. Real-time analytics enable dynamic changes in game scenarios, such as adjusting difficulty levels, altering storylines, or introducing new challenges as the game unfolds. This adaptability creates a more responsive and engaging experience that evolves with each player’s unique actions, making the game world feel alive and interactive.

Additionally, real-time feedback can be used to monitor performance and optimize system resources, ensuring that the immersive experience remains smooth and uninterrupted. By leveraging real-time data, developers can continuously refine the game environment, ultimately leading to a more personalized and captivating gaming experience.
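The difficulty adjustment described above can be sketched in a few lines. This is only an illustrative sketch, not a method taken from any particular engine: the class name `DifficultyTuner`, the rolling window of 20 outcomes, the 60% target success rate, and the step size are all hypothetical values chosen for the example.

```python
from collections import deque


class DifficultyTuner:
    """Hypothetical helper: nudges difficulty from a rolling window of outcomes."""

    def __init__(self, window=20, target=0.6, step=0.05):
        self.outcomes = deque(maxlen=window)  # True means the player succeeded
        self.target = target                  # desired success rate (assumed)
        self.step = step                      # change per update (assumed)
        self.difficulty = 0.5                 # normalised to the range 0..1

    def record(self, succeeded):
        """Store one outcome and return the updated difficulty."""
        self.outcomes.append(bool(succeeded))
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate > self.target:
            # Player is succeeding more often than intended: make it harder.
            self.difficulty = min(1.0, self.difficulty + self.step)
        elif rate < self.target:
            # Player is struggling: ease off.
            self.difficulty = max(0.0, self.difficulty - self.step)
        return self.difficulty


tuner = DifficultyTuner()
for _ in range(5):
    tuner.record(True)  # five successes in a row raise the difficulty
```

A real game would probably combine several signals (completion time, retries, comfort indicators) rather than a single success flag, but the loop of measuring, comparing with a target, and adjusting stays the same.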

9. How might future advancements in haptic technology influence VR and AR gameplay experiences?

Answer: Future advancements in haptic technology are likely to transform VR and AR gameplay experiences by providing tactile feedback that simulates real-world sensations. Enhanced haptics can recreate the feeling of texture, impact, and resistance, adding a new dimension to immersive gameplay that goes beyond visual and auditory cues. This technology will allow players to experience physical sensations that correspond to in-game events, thereby deepening the sense of realism and presence.

Moreover, improved haptic feedback can be integrated with motion tracking and AI-driven interactions to create a more holistic sensory experience. This convergence of technologies will not only enhance player immersion but also open up innovative possibilities for interactive storytelling and gameplay mechanics, setting new benchmarks for realism and engagement in the gaming industry.

10. What potential benefits and challenges arise from using VR and AR for training and educational purposes in game development?

Answer: Using VR and AR for training and educational purposes offers substantial benefits, including immersive learning experiences that can simulate real-world scenarios in a controlled environment. These technologies enable interactive and engaging training modules, allowing learners to practice skills in a risk-free setting. They also provide immediate feedback and analytics, which can help educators tailor the learning process to individual needs. This approach can lead to higher retention rates and a deeper understanding of complex concepts in game development and design.

However, challenges include the high initial cost of VR and AR hardware, potential technical difficulties, and ensuring that the content is accessible to all users. Additionally, there is a learning curve associated with using these advanced technologies, which may require extensive training for both educators and learners. Balancing these benefits and challenges is crucial for maximizing the educational impact while maintaining cost-effectiveness and accessibility.

11. How can the incorporation of user-generated content in VR and AR games influence narrative design and player engagement?

Answer: The incorporation of user-generated content in VR and AR games can significantly influence narrative design by allowing players to contribute to and shape the game’s storyline and world-building elements. This collaborative approach leads to a more dynamic and personalized narrative, where the game evolves based on the creativity and input of its community. It fosters a sense of ownership and engagement among players, as they see their ideas reflected in the gameplay and narrative outcomes.

Moreover, user-generated content can drive innovation and extend the longevity of a game by continuously introducing fresh perspectives and challenges. However, integrating this content requires robust moderation and quality control to ensure that the narrative remains coherent and engaging. When managed effectively, it can create a vibrant ecosystem where players and developers work together to enrich the game experience.

12. What strategies can be implemented to ensure that VR and AR technologies remain accessible and inclusive to diverse audiences?

Answer: Ensuring that VR and AR technologies remain accessible and inclusive involves implementing design practices that accommodate a wide range of physical, cognitive, and socioeconomic needs. Strategies include developing customizable user interfaces, adjustable comfort settings, and alternative control schemes to cater to different abilities and preferences. Accessibility features such as closed captioning, adjustable field of view, and intuitive navigation are essential for making these technologies usable by as many people as possible.

Additionally, organizations should prioritize affordability and cross-platform compatibility to broaden access, while actively engaging with diverse user groups during the development process. By integrating feedback from underrepresented communities and adhering to accessibility standards, developers can create inclusive experiences that welcome a diverse audience, ultimately enriching the overall gaming ecosystem.

Virtual Reality and Augmented Reality: Numerical Problems and Solutions

1. A VR game collects 5,000,000 interaction data points per month. If 3% of these data points are used for player behavior analysis, calculate the number of data points used per month, then determine the total data points used in a year, and confirm the average monthly usage.

Solution:

• Step 1: Monthly data points used = 5,000,000 × 0.03 = 150,000.

• Step 2: Annual data points used = 150,000 × 12 = 1,800,000.

• Step 3: Average monthly usage = 1,800,000 ÷ 12 = 150,000 data points.

2. A VR headset has a resolution of 2160×1200 per eye. Calculate the total number of pixels per eye, then for both eyes, and finally the total pixels in millions.

Solution:

• Step 1: Pixels per eye = 2160 × 1200 = 2,592,000 pixels.

• Step 2: Total pixels for both eyes = 2,592,000 × 2 = 5,184,000 pixels.

• Step 3: Total in millions = 5,184,000 ÷ 1,000,000 ≈ 5.18 million pixels.
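The pixel arithmetic above can be checked with a short script. The function name `pixel_counts` is a hypothetical helper introduced for this sketch; it assumes both eyes use the same resolution.

```python
def pixel_counts(width, height, eyes=2):
    """Return (pixels per eye, total pixels, total in millions)."""
    per_eye = width * height
    total = per_eye * eyes
    return per_eye, total, total / 1_000_000


per_eye, total, millions = pixel_counts(2160, 1200)
print(per_eye, total, round(millions, 2))
```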

3. A VR game runs at 90 frames per second. Calculate the total number of frames rendered in 10 minutes, then determine the total for a 24-hour period, and finally the average frames per hour.

Solution:

• Step 1: Frames per second = 90; frames per minute = 90 × 60 = 5,400; frames in 10 minutes = 5,400 × 10 = 54,000 frames.

• Step 2: Frames per day = 90 × 60 × 60 × 24 = 7,776,000 frames.

• Step 3: Average frames per hour = 7,776,000 ÷ 24 = 324,000 frames.
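The same rate-times-duration calculation can be written as a small function. The name `frames_rendered` is a hypothetical helper, and the sketch assumes a perfectly constant frame rate, which real games rarely sustain.

```python
def frames_rendered(fps, seconds):
    """Frames produced at a constant rate over a duration in seconds."""
    return fps * seconds


ten_minutes = frames_rendered(90, 10 * 60)    # 54,000 frames
full_day = frames_rendered(90, 24 * 60 * 60)  # 7,776,000 frames
frames_per_hour = full_day // 24              # 324,000 frames
```

The same pattern applies to any fixed-rate process, such as the 200 Hz motion-tracking problem later in this set: `frames_rendered(200, 60)` gives 12,000 samples per minute.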

4. A game engine spends 0.01 seconds processing each frame. For a game running at 90 FPS, calculate the total processing time per minute, then per hour, and determine the time saved per minute if optimizations reduce processing time by 20%.

Solution:

• Step 1: Total time per minute = 90 frames per second × 60 seconds × 0.01 seconds per frame = 54 seconds.

• Step 2: Total time per hour = 54 × 60 = 3,240 seconds.

• Step 3: With a 20% reduction, new time per minute = 54 × 0.80 = 43.2 seconds; time saved per minute = 54 – 43.2 = 10.8 seconds.
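Reading the 0.01-second figure as processing time per frame, the steps above can be reproduced as follows. The sketch also compares that figure with the frame budget at 90 FPS (1 ÷ 90 seconds), which is not part of the original problem but shows why the numbers are plausible. The function and variable names are hypothetical.

```python
def processing_seconds(fps, seconds_per_frame, wall_seconds):
    """Total processing time accumulated over a span of wall-clock time."""
    return fps * wall_seconds * seconds_per_frame


per_minute = processing_seconds(90, 0.01, 60)         # about 54 s
per_hour = processing_seconds(90, 0.01, 3600)         # about 3,240 s
optimized_per_minute = per_minute * (1 - 0.20)        # about 43.2 s
saved_per_minute = per_minute - optimized_per_minute  # about 10.8 s

# At 90 FPS each frame has roughly 11.1 ms available, so 10 ms of
# processing fits inside the budget with little headroom.
frame_budget = 1 / 90
fits_budget = 0.01 <= frame_budget
```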

5. A VR game engine logs 800,000 events per day. If 0.25% of these events are critical, calculate the number of critical events per day, then per week, and finally per month (30 days).

Solution:

• Step 1: Critical events per day = 800,000 × 0.0025 = 2,000 events.

• Step 2: Per week = 2,000 × 7 = 14,000 events.

• Step 3: Per month = 2,000 × 30 = 60,000 events.
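A quick way to verify these counts is shown below. The helper name `critical_events` is hypothetical; the sketch uses `fractions.Fraction` so that 0.25% is represented exactly and the results stay whole numbers.

```python
from fractions import Fraction


def critical_events(events_per_day, critical_fraction, days):
    """Number of critical events over a given number of days."""
    return int(events_per_day * critical_fraction * days)


rate = Fraction(25, 10_000)  # 0.25% expressed exactly
per_day = critical_events(800_000, rate, 1)     # 2,000
per_week = critical_events(800_000, rate, 7)    # 14,000
per_month = critical_events(800_000, rate, 30)  # 60,000
```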

6. A game development project reduces loading time from 1.2 seconds to 0.9 seconds per asset. For 3,000 assets, calculate the time saved per asset, total time saved in seconds, and then convert to minutes and hours.

Solution:

• Step 1: Time saved per asset = 1.2 – 0.9 = 0.3 seconds.

• Step 2: Total time saved = 3,000 × 0.3 = 900 seconds.

• Step 3: In minutes = 900 ÷ 60 = 15 minutes; in hours = 15 ÷ 60 = 0.25 hours.
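The unit conversions above can be sketched as a single function. The name `load_time_saved` is hypothetical, and the sketch assumes assets load one after another so that the savings simply add up.

```python
def load_time_saved(before_s, after_s, asset_count):
    """Return the total time saved as (seconds, minutes, hours)."""
    total_s = (before_s - after_s) * asset_count
    return total_s, total_s / 60, total_s / 3600


seconds, minutes, hours = load_time_saved(1.2, 0.9, 3000)
# Floating-point arithmetic may add tiny rounding error, so round for display.
print(round(seconds, 2), round(minutes, 2), round(hours, 2))
```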

7. A game engine uses a neural network with 128 hidden units. Assuming the total number of parameters is given by 128², calculate that total, then compute the number of parameters per layer if the total is divided equally among 5 layers, and finally round the per-layer figure to the nearest whole number.

Solution:

• Step 1: Total parameters = 128 × 128 = 16,384.

• Step 2: Parameters per layer (if equally divided among 5 layers) = 16,384 ÷ 5 ≈ 3,276.8.

• Step 3: Average parameters per layer ≈ 3,277 parameters.
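The 128² figure matches the weight matrix of a single fully connected layer that maps 128 units to 128 units, with bias terms left out. The sketch below makes that explicit and shows how the count changes when biases are included. The function `dense_params` is a hypothetical helper, not part of any particular framework.

```python
def dense_params(n_in, n_out, bias=True):
    """Parameter count of one fully connected layer."""
    weights = n_in * n_out
    return weights + (n_out if bias else 0)


weights_only = dense_params(128, 128, bias=False)  # 16,384, as in Step 1
with_bias = dense_params(128, 128)                 # 16,512 with 128 biases
per_layer = weights_only / 5                       # 3,276.8, as in Step 2
```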

8. A VR headset battery lasts 3 hours on a full charge. If a new technology increases battery life by 30%, calculate the new battery life, the additional time gained in minutes, and the percentage increase.

Solution:

• Step 1: New battery life = 3 × 1.30 = 3.9 hours.

• Step 2: Additional time = 3.9 – 3 = 0.9 hours, which is 0.9 × 60 = 54 minutes.

• Step 3: Percentage increase = (0.9 ÷ 3) × 100 = 30%, which matches the given figure.

9. A motion tracking system samples data at 200 Hz. Calculate the number of samples collected per minute, then per hour, and finally per day (24 hours).

Solution:

• Step 1: Samples per minute = 200 × 60 = 12,000 samples.

• Step 2: Samples per hour = 12,000 × 60 = 720,000 samples.

• Step 3: Samples per day = 720,000 × 24 = 17,280,000 samples.

10. A game development studio invests $50,000 in a new VR development tool that improves productivity by 20%, treated here as a 20% reduction in project cost. If the initial project cost was $250,000, calculate the new project cost after productivity gains, the cost saving, and the percentage reduction in project time, assuming cost is proportional to time.

Solution:

• Step 1: Improved project cost = $250,000 × (1 – 0.20) = $250,000 × 0.80 = $200,000.

• Step 2: Cost saving = $250,000 – $200,000 = $50,000. This equals the $50,000 spent on the tool, so the investment breaks even on this project alone.

• Step 3: Percentage reduction in project time = ($50,000 ÷ $250,000) × 100 = 20%, the same as the cost reduction because cost is assumed to track time.

11. A VR game narrative script consists of 15 chapters with an average of 3,000 words per chapter. Calculate the total word count, then determine the average words per chapter, and finally compute the new total if each chapter is expanded by 10%.

Solution:

• Step 1: Total word count = 15 × 3,000 = 45,000 words.

• Step 2: Average per chapter = 45,000 ÷ 15 = 3,000 words.

• Step 3: New total word count = 45,000 × 1.10 = 49,500 words.

12. A game engine development project takes 600 hours to complete. If process improvements reduce development time by 25%, calculate the new total development time, the time saved in hours, and the percentage reduction.

Solution:

• Step 1: New development time = 600 × (1 – 0.25) = 600 × 0.75 = 450 hours.

• Step 2: Time saved = 600 – 450 = 150 hours.

• Step 3: Percentage reduction = (150 ÷ 600) × 100 = 25%.
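Several problems in this set follow the same pattern: apply a percentage reduction, then report the new value, the amount saved, and the percentage. A minimal sketch of that pattern is shown below; the function name `apply_reduction` is hypothetical.

```python
def apply_reduction(original, fraction):
    """Return (new value, amount saved, percentage reduction)."""
    new_value = original * (1 - fraction)
    saved = original - new_value
    percent = saved / original * 100
    return new_value, saved, percent


new_hours, saved_hours, percent = apply_reduction(600, 0.25)
print(new_hours, saved_hours, percent)
```

Calling `apply_reduction(250_000, 0.20)` reproduces the project cost figures from Problem 10 in the same way.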