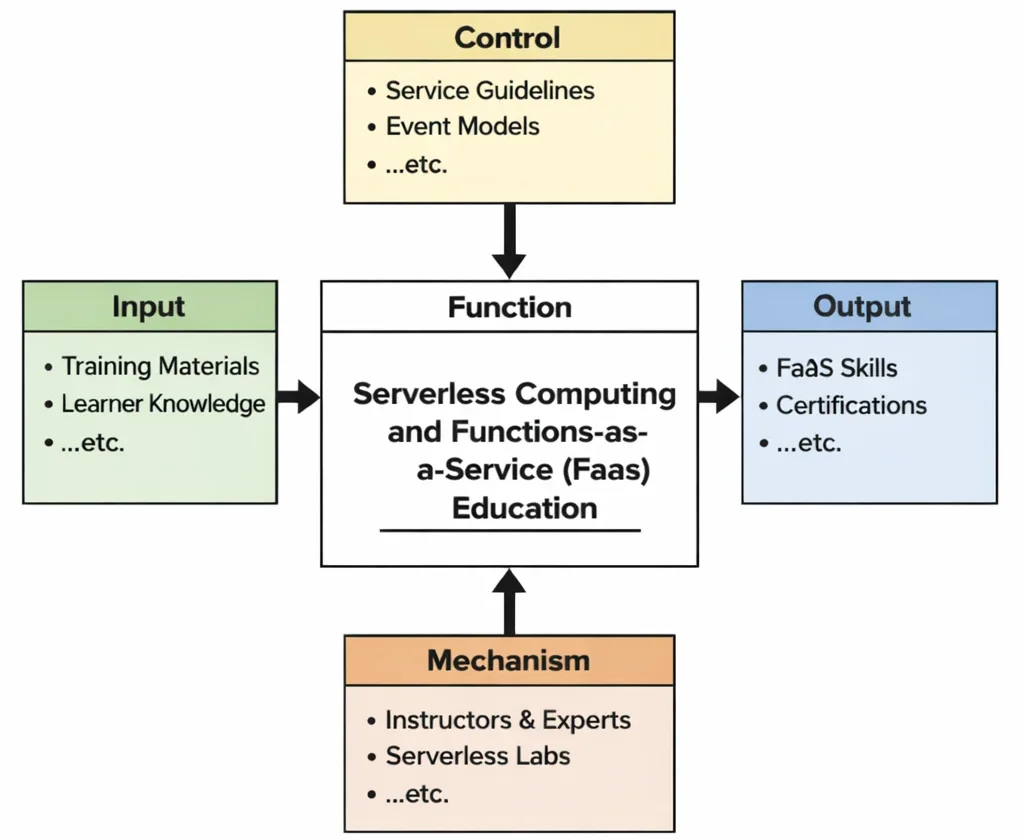

Serverless Computing and FaaS Education teaches a subtle form of power: building systems without inheriting the everyday burden of servers—while still remaining accountable for what runs. The diagram reads like a disciplined journey. Learners bring in inputs—code fundamentals, examples, and real application needs—then the learning is shaped by controls that serverless makes impossible to ignore: permission boundaries, execution limits, cost implications, and governance rules that decide what may run, where, and under which identity. Mechanisms such as guided labs and cloud toolchains turn theory into muscle memory: students write small functions, connect them to triggers, use managed services as building blocks, and learn how observability (logs, metrics, tracing) becomes the “new dashboard” when there is no machine to SSH into. The output is a learner who can think in events, design for graceful failure, secure the function’s identity, and deliver features that scale on demand—without treating the cloud as magic, and without treating operations as an afterthought.

This IDEF0 (Input–Control–Output–Mechanism) diagram summarizes Serverless Computing and Functions-as-a-Service (FaaS) Education as a transformation process. Inputs include training resources, prior programming knowledge, and problem scenarios. Controls include platform constraints, security and governance requirements, cost/usage policies, and curriculum outcomes that shape what “good serverless” looks like. Mechanisms include instructors, cloud platforms, serverless runtimes, deployment tools, and hands-on lab environments that let learners build and test event-driven functions. Outputs include learners who can design, deploy, and operate serverless solutions—writing functions that respond to events, integrating managed services, handling permissions safely, monitoring executions, and making trade-offs around latency, scaling, reliability, and cost.

Serverless computing, particularly in the form of Functions-as-a-Service (FaaS), represents a radical shift in how developers build, deploy, and manage applications. Rather than provisioning servers or managing infrastructure, developers focus solely on writing code while the platform handles execution and scalability. This model fits seamlessly into modern workflows such as DevOps and Infrastructure as Code, which automate deployment and configuration across distributed environments.

As part of performance optimization, FaaS platforms automatically scale based on demand, eliminating idle capacity and reducing operational costs. This aligns well with dynamic architectures that integrate edge computing to handle data closer to the source, enhancing responsiveness for latency-sensitive applications. FaaS also complements advances in cloud connectivity, where API-driven services interact across multiple networks and geographies.

To ensure trust and control in serverless environments, careful attention must be paid to security, compliance, and identity management. Unlike traditional servers, functions may be invoked by diverse triggers, requiring robust identity and access management policies and runtime isolation. These security considerations expand into areas like cloud security and application-level protection, ensuring that each invocation of a function does not expose vulnerabilities.

Functions are commonly packaged and run inside containers or lightweight virtual machines, so serverless overlaps with containerization and virtualization technologies and inherits their portability and consistency across environments. In enterprise-grade setups, serverless systems may also integrate with data analytics pipelines, where they suit bursty workloads such as big data analytics and streaming event processing.

A secure serverless ecosystem also requires proactive defenses such as threat intelligence gathering and continuous endpoint security management. Developers must be aware of issues like privilege escalation, insecure API endpoints, and function sprawl. These concerns are increasingly addressed by emerging areas in cybersecurity and continuous monitoring tools tailored for ephemeral workloads.

In serverless environments, failures are often distributed across many short-lived function instances, making incident response and forensics more challenging. Nevertheless, the integration of AI and machine learning in cloud security enables smarter, adaptive protection mechanisms. Meanwhile, encryption helps keep data in transit and data at rest secure, even when it is processed across stateless functions.

Support for cybersecurity policies and operational governance is vital, especially in sensitive deployments such as Operational Technology (OT) security or cyber-physical systems. Additionally, cultivating awareness of serverless security among engineers and architects ensures safe and compliant implementation at scale.

Finally, as serverless computing matures, it intersects with related innovations such as emerging technologies, network security models, and ethical hacking practices designed to identify weaknesses before exploitation. For students exploring the future of computing, understanding FaaS is crucial for mastering event-driven programming, scalable cloud architectures, and automated operations in a post-server paradigm.

This image portrays a “serverless” world where cloud services orchestrate computing behind the scenes. Bright cloud icons float above centralized server stacks, while neon network lines connect user devices, control panels, and monitoring screens—suggesting event-driven execution, rapid scaling, and automated operations. The developer at a console implies that teams focus on writing and deploying functions, while the platform manages provisioning, capacity, and runtime environments. The overall mood—fast, luminous, and highly connected—fits the core promise of FaaS: lightweight units of code that spring into action when triggered, then scale up or down automatically as demand changes.

Key Topics in Serverless and Functions-as-a-Service (FaaS)

Serverless computing and Functions-as-a-Service (FaaS) represent a paradigm shift in application development and deployment. By abstracting infrastructure management, these technologies free development teams from dealing with server provisioning, patching, and capacity planning. Instead, developers can focus on writing and deploying code that runs in response to events—leading to high scalability, cost-efficiency, and faster time to market. Two critical aspects that highlight the value of FaaS are Event-Driven Architectures and Cost-Efficiency. Below is a more detailed exploration of each topic.

Event-Driven Architectures

Definition and Core Concepts

- In an event-driven architecture, applications are composed of small, independent functions or services that respond to events. These events can originate from various sources, such as user interactions, API calls, IoT device signals, database changes, or scheduled tasks.

- An event-driven approach helps decouple the components of a system. Each function is triggered only when a relevant event occurs, facilitating a more modular and maintainable design.

Implementation in FaaS

- Serverless platforms (like AWS Lambda, Azure Functions, and Google Cloud Functions) natively support event-driven execution. Developers write functions that specify triggers (for instance, an incoming HTTP request or a file upload to cloud storage), and the cloud provider automatically runs the function in response to that trigger.

- This approach eliminates the need for dedicated servers to be running at all times. The cloud provider handles the orchestration, scaling the environment up or down based on real-time demand.
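
The binding between a trigger and a function can be sketched locally. This is a minimal illustration under stated assumptions, not any provider's API: the `on` decorator, the `dispatch` function, and the event fields (`type`, `query`, `object_key`) are hypothetical stand-ins for what a platform does when it routes an event to your code.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]

# Hypothetical in-process registry standing in for the platform's trigger configuration.
_TRIGGERS: Dict[str, Handler] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function to run when an event of the given type arrives."""
    def register(func: Handler) -> Handler:
        _TRIGGERS[event_type] = func
        return func
    return register


@on("http_request")
def hello(event: Dict[str, Any]) -> Dict[str, Any]:
    # Runs only when an HTTP-style event is dispatched.
    name = event.get("query", {}).get("name", "world")
    return {"status": 200, "body": f"Hello, {name}!"}


@on("file_uploaded")
def describe_upload(event: Dict[str, Any]) -> Dict[str, Any]:
    # Runs only when a storage-style event is dispatched.
    return {"status": 200, "body": f"Received {event['object_key']}"}


def dispatch(event: Dict[str, Any]) -> Dict[str, Any]:
    """Simulate the platform: find the function bound to this event type and run it."""
    handler = _TRIGGERS.get(event.get("type", ""))
    if handler is None:
        return {"status": 404, "body": "no function bound to this event"}
    return handler(event)
```

Calling `dispatch({"type": "http_request", "query": {"name": "Ada"}})` runs only `hello`. Nothing executes for event types that have no bound function, which mirrors the idea that no server needs to sit waiting for work.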

Advantages

- Scalability: As event loads increase, the platform automatically provisions more function instances in parallel, ensuring minimal impact on performance and user experience. Once the traffic subsides, the platform scales back down, helping manage costs.

- Speed of Development: Because each function is focused on a single task, it can be tested, updated, and redeployed quickly without affecting the entire application.

- Resilience: Failure in one part of the system typically doesn’t bring down other components because functions are loosely coupled. This can enhance the overall reliability of the application.
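
A rough way to reason about how many function instances run in parallel is Little's law: average concurrency is approximately the arrival rate multiplied by the average duration. The sketch below applies that formula. The function name and the sample figures are illustrative, and the result is an average rather than a peak, so bursts and provider concurrency limits still need separate consideration.

```python
import math


def estimated_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Little's law: average in-flight requests = arrival rate x average time in the system."""
    if requests_per_second < 0 or avg_duration_seconds < 0:
        raise ValueError("rate and duration must be non-negative")
    # Round up because a fraction of an instance still requires a whole instance.
    return math.ceil(requests_per_second * avg_duration_seconds)
```

For example, 200 requests per second at an average of 0.25 seconds each implies about 50 concurrent instances. Halving the duration halves the concurrency the platform must provide.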

Common Use Cases

- Real-Time Processing: Monitoring file uploads, data streams, or IoT sensor data and triggering on-the-fly processing or alerts.

- API Backends: Handling on-demand requests for web or mobile applications without maintaining a full server.

- Automation and Scheduled Tasks: Running functions at specific time intervals or in response to certain conditions (e.g., sending automated reports, cleaning up databases).

Cost-Efficiency

Pay-as-You-Go Pricing Model

- A defining feature of FaaS is the pay-for-execution model. Instead of paying for idle server resources, you’re billed only for the milliseconds your function runs and for the number of executions.

- This can substantially reduce costs for spiky or unpredictable workloads. A function that is never invoked incurs no execution charges. When it is invoked, the cost is proportional to how long it runs and how many times it’s triggered.
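
The pay-for-execution model can be expressed as a small calculation. The sketch below assumes a bill made of a per-request charge plus a charge per GB-second of memory and time, which is a common structure. The parameter names and any prices passed in are placeholders rather than real provider rates, and free tiers are not modelled.

```python
def monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate a monthly bill from invocation count, duration, and allocated memory."""
    # Compute usage: seconds of execution weighted by allocated memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost
```

With the illustrative values of 2,000,000 invocations, 500 ms average duration, 512 MB of memory, 0.20 per million requests, and 0.00001 per GB-second, the estimate is 0.40 for requests plus 5.00 for compute. With zero invocations the estimate is zero.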

Elimination of Idle Costs

- Traditional server-based systems must be sized for peak loads, meaning you pay for dedicated computing resources even when usage is low. In a serverless environment, you don’t need to keep servers running or overprovision to handle sudden spikes. The cloud provider manages elasticity behind the scenes.
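
The comparison with an always-on server can be made concrete with a break-even estimate. This is a simplified sketch: it assumes a flat monthly server price and a constant cost per invocation, both of which are hypothetical inputs, and it ignores free tiers and operational labour.

```python
def break_even_invocations(server_monthly_cost: float, cost_per_invocation: float) -> float:
    """Monthly invocations at which pay-per-use spending equals a fixed server bill."""
    if cost_per_invocation <= 0:
        raise ValueError("cost_per_invocation must be positive")
    return server_monthly_cost / cost_per_invocation


def cheaper_option(
    monthly_invocations: int, server_monthly_cost: float, cost_per_invocation: float
) -> str:
    """Return which model costs less at the given monthly volume."""
    faas_cost = monthly_invocations * cost_per_invocation
    return "faas" if faas_cost < server_monthly_cost else "server"
```

Below the break-even volume the function-based model is cheaper because idle time costs nothing. Above it, a steadily busy server may cost less, which is why sustained high-volume workloads deserve a separate estimate.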

Reduced Operational Overhead

- Maintaining and securing servers—patching operating systems, dealing with hardware failures, and monitoring availability—can be time-consuming and costly. Serverless platforms abstract these responsibilities, letting companies refocus efforts on core features rather than backend maintenance.

Budget Predictability

- Because spending tracks usage, costs follow traffic rather than provisioned capacity, and they can add up at extremely high volumes of function invocations. For typical workloads, the fine-grained pricing (based on exact resource consumption) can be closely monitored with usage metrics and logs provided by the cloud provider, which helps teams forecast spending and spot unexpected growth early.

Efficiency in Development Cycles

- Serverless environments often pair naturally with continuous integration/continuous deployment (CI/CD) pipelines, making it seamless to push updates. This rapid iteration can lead to indirect cost savings by reducing time spent waiting on builds and deployments, or managing manual workflows.

Serverless and Functions-as-a-Service (FaaS): Conclusion

Serverless and FaaS use event-driven architectures to build modular, responsive applications while keeping costs closely tied to actual usage. The ability to scale dynamically based on demand and eliminate idle resource costs makes these technologies a good fit for a wide range of use cases, from IoT systems to data processing pipelines. Together, these capabilities help businesses create high-performance applications while controlling costs and operational overhead.

Serverless computing and FaaS have revolutionized the application development landscape by removing the complexities of managing infrastructure and optimizing resources. The move towards event-driven architectures enables responsive, loosely coupled systems that scale seamlessly and handle varying workloads. Additionally, the cost-efficiency of paying only for actual function execution has made serverless a compelling option for organizations of all sizes.

As companies continue to embrace the cloud-native ecosystem, understanding and applying the two key topics covered here, event-driven architectures and cost-efficiency, will remain vital for building agile, resilient, and cost-effective solutions.

Why Study Serverless and Functions-as-a-Service (FaaS)

Rethinking Application Development Without Managing Servers

Understanding Event-Driven Architectures and Use Cases

Learning to Work with Leading FaaS Platforms

Optimizing for Cost, Performance, and Scalability

Preparing for Cloud-Native Development and Emerging Careers

Function-as-a-Service (FaaS) – Frequently Asked Questions

These FAQs introduce key ideas behind Function-as-a-Service, how it compares with other cloud models, and what students should know for real-world serverless projects.

What is Function-as-a-Service (FaaS) in cloud computing?

Function-as-a-Service (FaaS) is a serverless computing model where developers upload small units of code called functions that run in response to events, such as HTTP requests, file uploads, or queue messages. The cloud provider automatically manages server provisioning, scaling, and maintenance, so you only focus on writing business logic. You are typically billed based on the number of function invocations and the execution time, rather than paying for always-on servers or virtual machines.

How does FaaS differ from traditional server-based and PaaS architectures?

In traditional server-based architectures, teams provision and manage full servers or virtual machines, including operating systems and runtime environments. Platform-as-a-Service (PaaS) abstracts some of this by managing the platform while you deploy full applications or services. FaaS goes further by letting you deploy individual functions that run on demand without managing servers, processes, or long-running applications. This fine-grained, event-driven model allows for more elastic scaling and pay-per-use billing, especially suitable for bursty or sporadic workloads.

What are common use cases for Function-as-a-Service?

Common use cases for Function-as-a-Service include processing HTTP API requests, transforming or validating data as it moves through a pipeline, responding to file uploads, and handling background tasks such as sending emails or notifications. FaaS is also used for real-time stream processing, IoT event handling, scheduled jobs, and back-end glue logic that connects different services. Because functions start quickly and scale automatically, they are particularly useful for workloads that experience unpredictable traffic or short-lived bursts of activity.

How does pricing work for Function-as-a-Service platforms?

FaaS platforms usually follow a pay-per-use pricing model where you are charged based on the number of function invocations and the execution time, often measured in milliseconds. Costs also depend on the allocated memory or compute resources configured for each function. Many providers offer a free tier with a limited number of monthly invocations, making FaaS attractive for experiments, student projects, and low-volume workloads. This pricing model can significantly reduce costs compared to running idle servers, provided that functions are well-designed and not kept running for long periods unnecessarily.

What are cold starts in FaaS and why do they matter for performance?

A cold start occurs when a FaaS platform needs to initialise a new function instance before it can handle a request, for example when a function is invoked after a period of inactivity or when traffic spikes. This initialisation may involve starting a container, loading code, and setting up runtime dependencies, which adds latency to the first invocation. For latency-sensitive applications, cold starts can cause noticeable delays, so developers often optimise function size, choose lighter runtimes, or use warm-up strategies to reduce their impact on user experience.

What limitations and design constraints should developers consider when using FaaS?

FaaS functions typically have limits on execution time, memory, package size, and network access, which can vary by provider. Functions are stateless by design, so any persistent data must be stored in external services such as databases, caches, or object storage. Developers should design small, single-purpose functions, avoid long-running tasks, and handle idempotency because events can occasionally be delivered more than once. Understanding these constraints helps in deciding whether a workload is suitable for FaaS or better served by containers or traditional services.

How do security and monitoring work in a Function-as-a-Service environment?

In FaaS environments, the cloud provider secures the underlying infrastructure, while customers configure identity and access management, network policies, and data protection. Functions should run with least-privilege permissions and sensitive configuration values should be stored as secrets rather than hard-coded. Monitoring tools and logs capture metrics such as invocation counts, errors, and latency, helping teams detect anomalies and optimise performance. Integrating FaaS logs with broader observability and security platforms allows organisations to maintain consistent governance across serverless and non-serverless workloads.
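
Two of the practices above, reading secrets from configuration and emitting metrics for every invocation, can be sketched with the standard library. The `monitored` decorator, the `notify` function, and the `API_TOKEN` variable name are hypothetical. A real deployment would typically use the provider's secret store and logging integration rather than plain environment variables and `print`.

```python
import json
import os
import time
from functools import wraps
from typing import Any, Callable, Dict


def monitored(func: Callable[..., Any]) -> Callable[..., Any]:
    """Emit one structured log line per invocation with status and duration."""
    @wraps(func)
    def wrapper(event: Dict[str, Any], context: Any = None) -> Any:
        started = time.perf_counter()
        status = "ok"
        try:
            return func(event, context)
        except Exception:
            status = "error"
            raise
        finally:
            duration_ms = round((time.perf_counter() - started) * 1000, 2)
            print(json.dumps({"function": func.__name__, "status": status,
                              "duration_ms": duration_ms}))
    return wrapper


@monitored
def notify(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    # Read the secret from the environment instead of hard-coding it in source.
    token = os.environ.get("API_TOKEN")
    if not token:
        raise RuntimeError("API_TOKEN is not configured")
    # The token would be passed to the downstream service here; it is never logged.
    return {"sent": True, "recipient": event["recipient"]}
```

Each call prints a JSON line such as `{"function": "notify", "status": "ok", ...}` that a log pipeline can aggregate into invocation counts, error rates, and latency figures.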

How does FaaS fit into microservices and event-driven architectures?

FaaS naturally complements microservices and event-driven architectures by allowing teams to implement individual capabilities as small, independent functions. Services publish events to queues, topics, or streams, and functions subscribe to and react to those events without needing dedicated servers. This decoupling improves scalability and resilience, because each function can scale independently in response to workload. For students, learning FaaS is a practical way to understand how modern cloud applications are built using events instead of monolithic request–response flows.
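
The publish and subscribe pattern described here can be sketched with an in-memory event bus. The `EventBus` class, the topic name, and the two subscriber functions are hypothetical. A managed queue, topic, or stream would play this role in a real system and would also handle retries and durability.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Subscriber = Callable[[Dict[str, Any]], Any]


class EventBus:
    """Tiny in-memory stand-in for a managed queue, topic, or stream."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Subscriber]] = defaultdict(list)

    def subscribe(self, topic: str, func: Subscriber) -> None:
        self._subscribers[topic].append(func)

    def publish(self, topic: str, event: Dict[str, Any]) -> Dict[str, Any]:
        """Deliver the event to every subscriber; one failure does not block the others."""
        results: Dict[str, Any] = {}
        for func in self._subscribers.get(topic, []):
            try:
                results[func.__name__] = func(event)
            except Exception as exc:
                results[func.__name__] = f"failed: {exc}"
        return results


def send_receipt(event: Dict[str, Any]) -> str:
    return f"receipt for order {event['order_id']}"


def update_inventory(event: Dict[str, Any]) -> str:
    # Simulated outage in one downstream function.
    raise RuntimeError("inventory service unavailable")
```

Subscribing both functions to one topic and publishing a single order event shows the decoupling: the publisher does not know who is listening, and the failure in `update_inventory` does not prevent `send_receipt` from completing.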

Which skills should students develop to work with FaaS and serverless technologies?

Students should become comfortable with at least one programming language supported by major FaaS platforms, such as Python, JavaScript, or Java. They should learn how to design stateless functions, integrate with cloud services like storage, databases, and messaging, and use infrastructure-as-code tools to deploy serverless applications. Understanding event-driven design, API gateways, and basic cloud security practices is also essential. These skills are directly relevant to modern cloud engineering roles that focus on building scalable, cost-efficient back ends using serverless patterns.

Serverless and Functions-as-a-Service (FaaS): Review Questions and Answers

1. What is Function as a Service (FaaS) and how does it operate?

Answer: Function as a Service (FaaS) is a cloud computing model that enables developers to run individual pieces of code in response to events without managing the underlying infrastructure. It operates on an event-driven basis, meaning that code is executed only when triggered by a specific action or event, such as an HTTP request or a message queue notification. This model abstracts away server management, allowing developers to focus solely on writing code. FaaS is a key component of the serverless paradigm, which optimizes resource utilization and scales automatically based on demand.

2. How does FaaS differ from traditional cloud computing models?

Answer: Unlike traditional cloud computing models that require provisioning and managing virtual machines or containers, FaaS abstracts the infrastructure entirely, letting developers deploy code that executes on demand. Traditional models often involve maintaining continuously running servers regardless of workload, whereas FaaS bills only for the compute time consumed during execution. This results in significant cost savings and operational efficiencies. Moreover, FaaS facilitates rapid scaling and event-driven processing, making it ideal for applications with unpredictable or bursty workloads.

3. What are the primary benefits of using FaaS in cloud environments?

Answer: FaaS offers numerous benefits, including reduced operational overhead, cost efficiency, and improved scalability. By eliminating the need for server management, it allows developers to focus on application logic and innovation. FaaS also enables automatic scaling, ensuring that resources are allocated dynamically based on the workload, which improves performance during high-demand periods. Additionally, the pay-per-use model helps organizations reduce expenses by charging only for the actual execution time of functions.

4. How does event-driven architecture play a role in FaaS?

Answer: In FaaS, event-driven architecture is fundamental because it triggers the execution of code in response to specific events such as file uploads, user actions, or sensor data. This approach ensures that functions run only when necessary, optimizing resource use and reducing idle time. The event-driven model enables real-time processing and rapid responsiveness, which are crucial for applications requiring immediate feedback. Overall, it aligns well with the serverless paradigm by providing a scalable, efficient, and responsive way to handle diverse workloads.

5. What are common use cases for FaaS in modern cloud applications?

Answer: Common use cases for FaaS include real-time data processing, web and API back ends whose traffic rises and falls, and other intermittent or unpredictable workloads. It is frequently used for microservices, where each function performs a discrete task, as well as for tasks like image processing, log analysis, and IoT data ingestion. FaaS suits scenarios that need cost-effective, on-demand execution, such as processing user requests on high-traffic websites or triggering workflows in response to events, although latency-critical paths must account for cold starts. Its flexibility and efficiency make it a popular choice for agile cloud applications.

6. How does FaaS contribute to cost optimization in cloud deployments?

Answer: FaaS contributes to cost optimization by adopting a pay-per-execution billing model, meaning organizations are charged only for the compute time their functions actually use. This eliminates the need to maintain idle servers, reducing wasted resources and lowering overall operational costs. By automatically scaling based on demand, FaaS ensures that resources are available when needed without incurring extra expenses during low-usage periods. This efficient allocation of resources leads to significant cost savings, especially for applications with variable or unpredictable workloads.

7. What challenges are associated with adopting FaaS, and how can they be mitigated?

Answer: While FaaS offers many benefits, challenges such as cold start latency, vendor lock-in, and complexity in debugging distributed functions can arise. A cold start occurs when the platform must initialize a new function instance, for example after a period of inactivity, which delays the response and may affect latency-sensitive applications. Vendor lock-in can restrict flexibility if an organization becomes too dependent on a specific provider’s tools and frameworks. These challenges can be mitigated by pre-warming functions, designing applications to tolerate latency, using abstraction layers or multi-cloud strategies to reduce dependency on a single vendor, and relying on centralized logs, metrics, and tracing to debug functions that run across many short-lived instances.
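
An abstraction layer against vendor lock-in can be as simple as keeping business logic free of provider-specific code and wrapping it in thin adapters. The sketch below assumes two hypothetical platforms with different event shapes. The function names and payload fields are illustrative and do not correspond to any real provider's format.

```python
import json
from typing import Any, Dict, List


def order_total(items: List[Dict[str, Any]]) -> float:
    """Provider-neutral business logic with no cloud SDK imports."""
    return sum(item["price"] * item["quantity"] for item in items)


def provider_a_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Adapter for a hypothetical platform that passes the HTTP body as a JSON string."""
    items = json.loads(event["body"])["items"]
    return {"statusCode": 200, "body": json.dumps({"total": order_total(items)})}


def provider_b_handler(message: Dict[str, Any]) -> float:
    """Adapter for a hypothetical platform that passes an already parsed payload."""
    return order_total(message["data"]["items"])
```

Moving to another platform then means writing a new adapter of a few lines, while `order_total` and its unit tests stay unchanged.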

8. How does FaaS support scalability and adaptability in application deployment?

Answer: FaaS inherently supports scalability by automatically provisioning and de-provisioning resources in response to incoming events. This dynamic scaling means that functions can handle sudden increases in traffic without manual intervention or over-provisioning of resources. Additionally, its modular nature allows developers to break down applications into smaller, independent functions that can be updated and scaled individually. This adaptability leads to faster development cycles and the ability to respond swiftly to changing market demands, ensuring that applications remain both robust and efficient.

9. In what ways can FaaS be integrated with other cloud services to build comprehensive solutions?

Answer: FaaS can be seamlessly integrated with other cloud services such as databases, messaging queues, and storage solutions to create end-to-end applications. By using triggers from these services, functions can execute in response to events like data updates or file uploads. This integration allows for the creation of microservices architectures, where each function handles a specific task, yet they all work together cohesively. Such a design promotes modularity, ease of maintenance, and enhanced performance, enabling organizations to build scalable, robust, and efficient cloud solutions.

10. What future trends could influence the evolution of FaaS and serverless computing?

Answer: Future trends that could influence FaaS and serverless computing include advancements in container orchestration, improved cold start performance, and enhanced integration with edge computing. Innovations in container technology may lead to more efficient execution environments that minimize latency and resource overhead. Additionally, as FaaS platforms mature, we can expect better support for long-running processes and stateful applications. These trends, along with the growing adoption of AI and machine learning, will drive further innovation in serverless computing, making it an increasingly vital part of the cloud ecosystem.

Serverless and Functions-as-a-Service (FaaS): Thought-Provoking Questions and Answers

1. How might the integration of FaaS with container orchestration technologies shape the future of serverless architectures?

Answer: The integration of FaaS with container orchestration technologies, such as Kubernetes, could create a more flexible and efficient serverless environment. By combining the dynamic scaling capabilities of FaaS with the robust management features of container orchestration, organizations can achieve finer-grained control over resource allocation and deployment strategies. This synergy would allow for smoother scaling, reduced latency, and improved reliability for complex applications. In essence, it would bridge the gap between traditional container-based approaches and modern serverless paradigms, fostering innovation and enhanced operational efficiency.

Moreover, this integration may lead to the development of hybrid platforms where functions are deployed seamlessly alongside containerized microservices. Such an environment would support diverse workloads and provide developers with the best of both worlds—rapid deployment and robust orchestration. This could ultimately result in more resilient and adaptable cloud infrastructures, paving the way for next-generation digital solutions.

2. What are the potential implications of FaaS on software development lifecycles and DevOps practices?

Answer: FaaS can significantly shorten software development lifecycles by enabling rapid prototyping and deployment of discrete functions. This serverless approach allows developers to iterate quickly and deploy changes without the overhead of managing underlying infrastructure. As a result, DevOps practices can become more agile, with continuous integration and continuous deployment pipelines streamlined through automated, event-driven executions. This can lead to faster time-to-market and improved responsiveness to user feedback, ultimately fostering a culture of innovation and efficiency.

On the other hand, the fine-grained, distributed nature of FaaS may introduce complexities in debugging, monitoring, and managing the resulting system. Organizations will need to adapt their DevOps strategies to incorporate specialized tools for tracking function performance and handling state across ephemeral instances. Despite these challenges, the overall impact of FaaS on software development is likely to be positive, encouraging more modular, scalable, and resilient applications that align with modern agile methodologies.

3. How can organizations leverage FaaS to drive cost optimization without sacrificing performance?

Answer: Organizations can leverage FaaS for cost optimization by taking advantage of its pay-per-execution model, under which they pay for compute resources only while functions are actively running. This eliminates the cost of idle capacity that is common in traditional server models. Because the platform scales functions automatically, the main tuning levers are monitoring usage patterns and adjusting each function's configuration (for example, its resource allocation and timeout) to match workload demands. The result is a deployment model whose cost tracks real-time demand while still meeting performance targets.

In addition, integrating detailed analytics and performance monitoring tools can help identify bottlenecks and opportunities for further cost reduction. These insights allow organizations to adjust their architectures, optimize code, and fine-tune function execution, all of which contribute to maintaining high performance while minimizing operational costs. Over time, this strategic approach to cost management can lead to significant savings and a more agile, responsive IT infrastructure.

4. What challenges might arise when implementing FaaS in mission-critical applications, and how can they be mitigated?

Answer: Implementing FaaS in mission-critical applications can introduce challenges such as cold start latency, state management issues, and complexities in monitoring distributed functions. Cold starts, where functions take longer to initialize after a period of inactivity, may affect the performance of real-time systems. Additionally, managing state across ephemeral function instances can be problematic, especially for applications that require persistent connections or data continuity. These challenges must be addressed to ensure that FaaS can reliably support high-stakes, mission-critical workloads.

Mitigation strategies include using techniques like function pre-warming to reduce cold start delays, leveraging external state management services, and implementing comprehensive monitoring solutions tailored for serverless environments. Organizations can also adopt hybrid architectures that combine FaaS with traditional server-based components for tasks that require consistent performance. By carefully designing the application architecture and employing best practices, the risks associated with FaaS in mission-critical scenarios can be significantly minimized, ensuring both reliability and high performance.
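As a rough illustration of pre-warming, the sketch below keeps expensive setup at module level so that a warm instance reuses it, and lets a scheduled "keep-warm" invocation return early. The event shape (a `warmup` key), the `load_resource` setup step, and the handler signature are all hypothetical and not tied to any particular platform.

```python
import time

# Module-level cache: on a warm instance this survives between invocations.
_RESOURCE = None


def load_resource():
    """Hypothetical expensive setup (opening connections, loading config)."""
    time.sleep(0.05)  # stand-in for slow initialization
    return {"ready": True}


def get_resource():
    """Initialize once per instance, then reuse on later invocations."""
    global _RESOURCE
    if _RESOURCE is None:
        _RESOURCE = load_resource()
    return _RESOURCE


def handler(event, context=None):
    resource = get_resource()
    # A scheduled keep-warm ping (assumed event shape) returns early
    # without running any business logic.
    if event.get("warmup"):
        return {"status": "warm"}
    return {"status": "ok", "ready": resource["ready"], "echo": event.get("payload")}
```

Only the first call on a fresh instance pays the setup cost; later calls, including real requests arriving after a warm-up ping, reuse the cached resource.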

5. How might FaaS influence the evolution of cloud service pricing models in the coming years?

Answer: FaaS is likely to drive significant changes in cloud service pricing models by emphasizing the pay-per-execution paradigm rather than traditional resource-based billing. As FaaS adoption grows, providers may refine their pricing structures to account for the granular consumption of compute time, leading to more transparent billing for users. This shift could result in a greater focus on performance-based pricing, where costs are directly tied to application responsiveness and efficiency. In such a model, organizations would pay only for the resources they actually use, potentially reducing wasted expenditure and tying costs more closely to real demand.

Over time, increased competition in the serverless space may further drive innovation in pricing strategies, including dynamic discounts and usage-based incentives. Providers might offer tiered pricing models that reward high-volume users with lower per-execution costs or bundle services to provide additional value. This evolution in pricing could make FaaS more accessible to startups and small businesses while encouraging established enterprises to optimize their operations for cost efficiency, ultimately reshaping the economics of cloud computing.

6. What future innovations could emerge from the integration of FaaS with artificial intelligence and machine learning?

Answer: The integration of FaaS with artificial intelligence (AI) and machine learning (ML) holds the potential to spawn a new generation of intelligent, adaptive cloud applications. By deploying AI/ML models as serverless functions, organizations can execute complex analytics and real-time decision-making at scale without the overhead of managing dedicated infrastructure. This integration would allow for dynamic adjustments based on real-time data, resulting in highly responsive applications that can learn and adapt to changing conditions. Innovations in this area could include personalized user experiences, predictive analytics, and automated anomaly detection, all executed seamlessly in a FaaS environment.

Moreover, the use of AI/ML in conjunction with FaaS can drive the development of self-optimizing systems that continuously refine performance and resource allocation. As models process more data and generate insights, they can feed back into the function execution process, enabling real-time performance tuning and cost optimization. The convergence of these technologies not only enhances operational efficiency but also paves the way for entirely new service offerings that leverage the combined power of serverless computing and advanced analytics.

7. How can developers address the challenges of state management in FaaS architectures for complex applications?

Answer: Addressing state management challenges in FaaS architectures requires deliberate design, because serverless functions are stateless by default: nothing held in memory is guaranteed to survive between invocations. Developers can adopt external state management services such as distributed caches or databases to maintain context between function invocations. By decoupling state from function execution, applications can preserve session information and ensure continuity across multiple transactions. Techniques such as stateful workflows and orchestration tools can further assist in coordinating complex processes that require persistent state.

In addition, developers may leverage frameworks that abstract state management complexities, enabling easier integration with serverless functions. These frameworks can provide built-in support for session persistence, error handling, and data synchronization, which are critical for ensuring reliable application performance. By combining these approaches with robust monitoring and testing, developers can effectively manage state in FaaS environments, ensuring that even complex, multi-step applications function smoothly and reliably.
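The idea of decoupling state from function execution can be sketched as follows. `ExternalStore` is a hypothetical in-memory stand-in for a managed cache or database client, and the event fields (`session_id`, `item`) are assumptions made for illustration only.

```python
class ExternalStore:
    """In-memory stand-in for an external key-value store (hypothetical).

    In a real deployment this would be a managed cache or database client.
    """

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def handler(event, store):
    """Stateless handler: session state is read from and written to the store."""
    session_id = event["session_id"]
    cart = store.get(session_id, [])
    cart = cart + [event["item"]]  # build a new list instead of mutating in place
    store.put(session_id, cart)
    return {"session_id": session_id, "items": cart}


store = ExternalStore()
handler({"session_id": "s1", "item": "book"}, store)
print(handler({"session_id": "s1", "item": "pen"}, store))
```

Because the handler keeps nothing in its own memory, any instance of the function can serve the next request for the same session.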

8. What strategies can organizations use to ensure robust security in FaaS deployments, particularly concerning code execution and data handling?

Answer: To ensure robust security in FaaS deployments, organizations should adopt a multi-layered security approach that includes secure code practices, runtime protection, and continuous monitoring. Strategies such as code reviews, vulnerability scanning, and the implementation of least privilege access controls help mitigate risks associated with code execution. Additionally, using encryption for data in transit and at rest, as well as implementing identity and access management (IAM) protocols, strengthens the overall security posture. Automated monitoring and logging of function invocations can also provide early detection of anomalous behavior, enabling rapid response to potential threats.

Integrating security into the development lifecycle through a “shift-left” approach ensures that vulnerabilities are identified and addressed early. Organizations can also leverage managed security services provided by cloud vendors, which offer specialized tools for serverless environments. These combined strategies create a resilient security framework that protects both the execution of functions and the sensitive data they process, ensuring that FaaS deployments remain secure in a dynamic threat landscape.

9. How might the adoption of FaaS impact organizational IT roles and responsibilities in the future?

Answer: The adoption of FaaS is likely to shift the focus of IT roles from traditional infrastructure management to more strategic, development-oriented tasks. As serverless architectures abstract away many of the operational complexities, IT teams can concentrate on writing code, optimizing performance, and integrating innovative features into applications. This shift could lead to a redefinition of roles, with increased emphasis on DevOps practices, automation, and continuous delivery. As a result, the traditional boundaries between development and operations are likely to blur further, promoting a culture of collaboration and shared responsibility.

In addition, the reliance on FaaS may drive the need for specialized skills in areas such as cloud security, serverless architecture design, and performance monitoring. Organizations will need to invest in training and upskilling their workforce to adapt to this new paradigm. Ultimately, the transformation brought by FaaS is expected to foster a more agile, innovation-focused IT environment, where roles evolve to support rapid development and deployment cycles while maintaining robust operational security.

10. What are the potential benefits and drawbacks of a fully event-driven architecture enabled by FaaS in enterprise applications?

Answer: A fully event-driven architecture enabled by FaaS offers the potential benefits of increased responsiveness, scalability, and decoupled system components that can operate independently. This model allows enterprise applications to react to events in real time, facilitating rapid data processing and decision-making. It also enables more flexible, modular design, where individual functions can be updated or replaced without impacting the entire system. However, drawbacks may include increased complexity in managing distributed events, challenges in maintaining state and data consistency, and difficulties in monitoring and debugging across numerous function invocations.

To mitigate these drawbacks, organizations must invest in robust orchestration and monitoring tools that provide end-to-end visibility across the event-driven ecosystem. Clear architectural guidelines and best practices for event design and error handling are essential to ensure system reliability. While a fully event-driven architecture can drive significant innovation and agility, it requires careful planning and management to balance its benefits against the inherent complexities.

11. How can FaaS influence the development of microservices architectures, and what potential synergies exist between the two paradigms?

Answer: FaaS naturally complements microservices architectures by enabling the deployment of discrete, independent functions that can be managed, scaled, and updated separately. This synergy allows organizations to build highly modular applications where each microservice performs a specific task and communicates with others via APIs. FaaS simplifies the management of these microservices by abstracting away infrastructure concerns and enabling rapid, event-driven execution. The result is an agile development environment where microservices can be developed and deployed independently, leading to faster innovation cycles and improved system resilience.

Moreover, the combination of FaaS and microservices can reduce operational overhead and enhance scalability, as resources are allocated dynamically based on actual usage. This allows for a more efficient utilization of compute resources and better cost management. As organizations increasingly adopt cloud-native practices, the integration of FaaS into microservices architectures will become a key driver of digital transformation, enabling more agile, responsive, and robust enterprise applications.

12. How might regulatory challenges impact the adoption of FaaS for sensitive applications, and what strategies can mitigate these concerns?

Answer: Regulatory challenges, such as data protection laws and compliance requirements, can impact the adoption of FaaS for sensitive applications by imposing strict guidelines on data handling, storage, and processing. These regulations may require organizations to implement additional security measures and maintain comprehensive audit trails, which can be challenging in a serverless, ephemeral environment. To mitigate these concerns, organizations can adopt strategies such as integrating advanced encryption, multi-factor authentication, and real-time monitoring into their FaaS deployments. Ensuring that the chosen FaaS provider adheres to industry standards and compliance certifications is also crucial.

Furthermore, developing a robust governance framework that includes regular audits, automated compliance checks, and detailed documentation of function behavior can help meet regulatory requirements. By proactively addressing regulatory challenges and designing FaaS solutions with compliance in mind, organizations can leverage the benefits of serverless architectures for sensitive applications while maintaining the highest standards of data protection and integrity.

Serverless and Functions-as-a-Service (FaaS): Numerical Problems and Solutions

1. Calculating the Cost Per Execution of a FaaS Function

Solution:

Step 1: Assume a FaaS function executes 1,000,000 times in a month and the cost is $0.000002 per execution.

Step 2: Multiply the number of executions by the cost per execution: 1,000,000 × $0.000002 = $2.

Step 3: The monthly cost for executions is therefore $2.

2. Estimating Monthly Billing for a FaaS Application

Solution:

Step 1: Suppose a function runs 500,000 times per month and each execution takes 200 ms, billed at $0.000003 per 100 ms.

Step 2: Calculate the cost per execution: (200 ms ÷ 100 ms) × $0.000003 = 2 × $0.000003 = $0.000006.

Step 3: Multiply by total executions: 500,000 × $0.000006 = $3.

3. Determining the ROI for Migrating to a FaaS Model

Solution:

Step 1: Assume traditional infrastructure costs $50,000 per month and migrating to FaaS reduces costs by 80%, saving $40,000 monthly.

Step 2: Annual savings = $40,000 × 12 = $480,000.

Step 3: If the migration cost was $200,000, first-year ROI = (($480,000 – $200,000) ÷ $200,000) × 100 = 140%.
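The ROI calculation above can be checked with a short script. This is a minimal sketch using the figures from this problem; the function name `roi_percent` is chosen here for illustration.

```python
def roi_percent(savings, investment):
    """ROI as a percentage: net gain divided by the cost of the investment."""
    return (savings - investment) * 100 / investment


traditional_monthly_cost = 50_000
monthly_savings = traditional_monthly_cost * 80 // 100  # 80% reduction -> 40,000
annual_savings = monthly_savings * 12                   # 480,000

print(roi_percent(annual_savings, 200_000))  # 140.0
```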

4. Calculating the Impact of a Cold Start on Execution Time

Solution:

Step 1: Assume a normal execution takes 150 ms and a cold start adds 350 ms delay, so total = 150 ms + 350 ms = 500 ms.

Step 2: Determine the percentage increase: (350 ms ÷ 150 ms) × 100 ≈ 233.33%.

Step 3: Conclude that a cold start increases execution time by approximately 233%.
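The same percentage can be computed in code. As an added illustration that is not part of the problem, the sketch also estimates average latency when only a fraction of invocations are cold; the 10% fraction used in the example is an assumed value.

```python
def cold_start_increase_percent(warm_ms, cold_penalty_ms):
    """Percentage by which a cold start lengthens a normal execution."""
    return cold_penalty_ms * 100 / warm_ms


def mean_latency_ms(warm_ms, cold_penalty_ms, cold_fraction):
    """Average latency when a fraction of invocations pay the cold-start penalty."""
    return warm_ms + cold_fraction * cold_penalty_ms


print(round(cold_start_increase_percent(150, 350), 2))  # 233.33
print(mean_latency_ms(150, 350, 0.10))  # assumed 10% cold starts
```

The second function shows why the frequency of cold starts matters as much as their size: a large penalty that occurs rarely shifts the average far less than the worst case.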

5. Estimating the Monthly Execution Volume from a FaaS Function

Solution:

Step 1: Suppose a function is triggered 3 times per second.

Step 2: Total executions per minute = 3 × 60 = 180; per hour = 180 × 60 = 10,800.

Step 3: Monthly executions (30 days) = 10,800 × 24 × 30 = 7,776,000.
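A small helper makes it easy to repeat this estimate for other trigger rates. It is a sketch that assumes a constant rate and a 30-day month, as in the problem.

```python
def monthly_executions(rate_per_second, days=30):
    """Executions per month for a function triggered at a constant rate."""
    seconds_per_day = 60 * 60 * 24
    return rate_per_second * seconds_per_day * days


print(monthly_executions(3))  # 7776000
```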

6. Calculating the Average Duration Cost per Execution

Solution:

Step 1: Assume a function executes for an average of 250 ms and is billed in 100 ms increments at $0.000004 per increment.

Step 2: Rounding up, 250 ms becomes 300 ms, which is 3 increments.

Step 3: Cost per execution = 3 × $0.000004 = $0.000012.
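The rounding rule can be expressed as a small function. This sketch assumes billing in fixed increments rounded up, as stated in the problem, and uses `Decimal` so that very small prices are not distorted by floating-point error; the function name and parameters are illustrative.

```python
from decimal import Decimal


def cost_per_execution(duration_ms, price_per_increment, increment_ms=100):
    """Cost of one execution when duration is rounded up to whole increments."""
    increments = -(-duration_ms // increment_ms)  # integer ceiling division
    return increments * Decimal(price_per_increment)


# Problem 6: 250 ms rounds up to 3 increments.
print(cost_per_execution(250, "0.000004"))            # 0.000012

# Problem 2: 200 ms is exactly 2 increments; 500,000 executions per month.
print(500_000 * cost_per_execution(200, "0.000003"))  # 3.000000
```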

7. Estimating the Total Compute Time in Hours per Month

Solution:

Step 1: If a function executes 2,000,000 times per month with an average duration of 300 ms each, total milliseconds = 2,000,000 × 300 = 600,000,000 ms.

Step 2: Convert milliseconds to seconds: 600,000,000 ÷ 1,000 = 600,000 seconds.

Step 3: Convert seconds to hours: 600,000 ÷ 3,600 ≈ 166.67 hours.
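The unit conversion can be written as one function, shown here as a minimal sketch with the values from this problem.

```python
def total_compute_hours(executions, avg_duration_ms):
    """Total compute time per month in hours."""
    total_ms = executions * avg_duration_ms
    total_seconds = total_ms / 1_000
    return total_seconds / 3_600


print(round(total_compute_hours(2_000_000, 300), 2))  # 166.67
```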

8. Determining the Increase in Function Efficiency from Optimization

Solution:

Step 1: Assume optimization reduces average execution time from 400 ms to 300 ms.

Step 2: Time saved per execution = 400 ms – 300 ms = 100 ms.

Step 3: Percentage improvement = (100 ms ÷ 400 ms) × 100 = 25%.

9. Calculating the Cost Differential Between Two Billing Models

Solution:

Step 1: Model A bills $0.000005 per execution and Model B bills $0.000007 per execution for 1,000,000 executions.

Step 2: Cost for Model A = 1,000,000 × $0.000005 = $5; for Model B = 1,000,000 × $0.000007 = $7.

Step 3: Differential = $7 – $5 = $2.

10. Estimating the Savings from Reducing Cold Start Frequency

Solution:

Step 1: Assume 10% of 5,000,000 monthly executions experience a cold start, i.e., 500,000 cold starts.

Step 2: If each cold start adds an extra 350 ms of billed time, costing an additional $0.000001 per affected execution, extra cost = 500,000 × $0.000001 = $0.50.

Step 3: Reducing cold starts by half saves $0.25 per month.
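The effect of changing the cold-start rate can be explored with a short sketch. It uses the per-cold-start surcharge assumed in this problem and `Decimal` for exact arithmetic; the function name is illustrative.

```python
from decimal import Decimal


def cold_start_extra_cost(executions, cold_fraction, extra_cost_per_cold_start):
    """Monthly surcharge attributable to cold starts."""
    cold_starts = executions * Decimal(cold_fraction)
    return cold_starts * Decimal(extra_cost_per_cold_start)


baseline = cold_start_extra_cost(5_000_000, "0.10", "0.000001")  # 10% cold
halved = cold_start_extra_cost(5_000_000, "0.05", "0.000001")    # 5% cold
print(baseline, halved, baseline - halved)
```

At these prices the direct billing impact is small, so the stronger argument for reducing cold starts is usually the latency improvement rather than the cost.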

11. Determining the Average Cost Per Million Executions

Solution:

Step 1: Take the cost per execution of $0.000006 computed in Problem 2.

Step 2: For 1,000,000 executions, total cost = 1,000,000 × $0.000006 = $6.

Step 3: This confirms that the average cost per million executions is $6.

12. Break-even Analysis for Investing in FaaS Optimization Tools

Solution:

Step 1: Assume an investment of $100,000 in optimization tools reduces costs by $10,000 per month.

Step 2: Payback period = $100,000 ÷ $10,000 = 10 months.

Step 3: Over a 2-year period, gross savings = 24 months × $10,000 = $240,000. Subtracting the $100,000 investment leaves a net gain of $140,000, all of it earned in the 14 months after the payback point, confirming a strong return on investment.
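The break-even figures can be reproduced with two small functions. This sketch assumes constant monthly savings and ignores discounting; the function names are illustrative.

```python
def payback_months(investment, monthly_savings):
    """Months needed for cumulative savings to equal the investment."""
    return investment / monthly_savings


def net_savings(investment, monthly_savings, months):
    """Cumulative savings over a period, minus the up-front investment."""
    return monthly_savings * months - investment


print(payback_months(100_000, 10_000))   # 10.0
print(net_savings(100_000, 10_000, 24))  # 140000
```

Over 24 months the gross savings are $240,000; once the $100,000 investment is subtracted, the net gain is $140,000.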