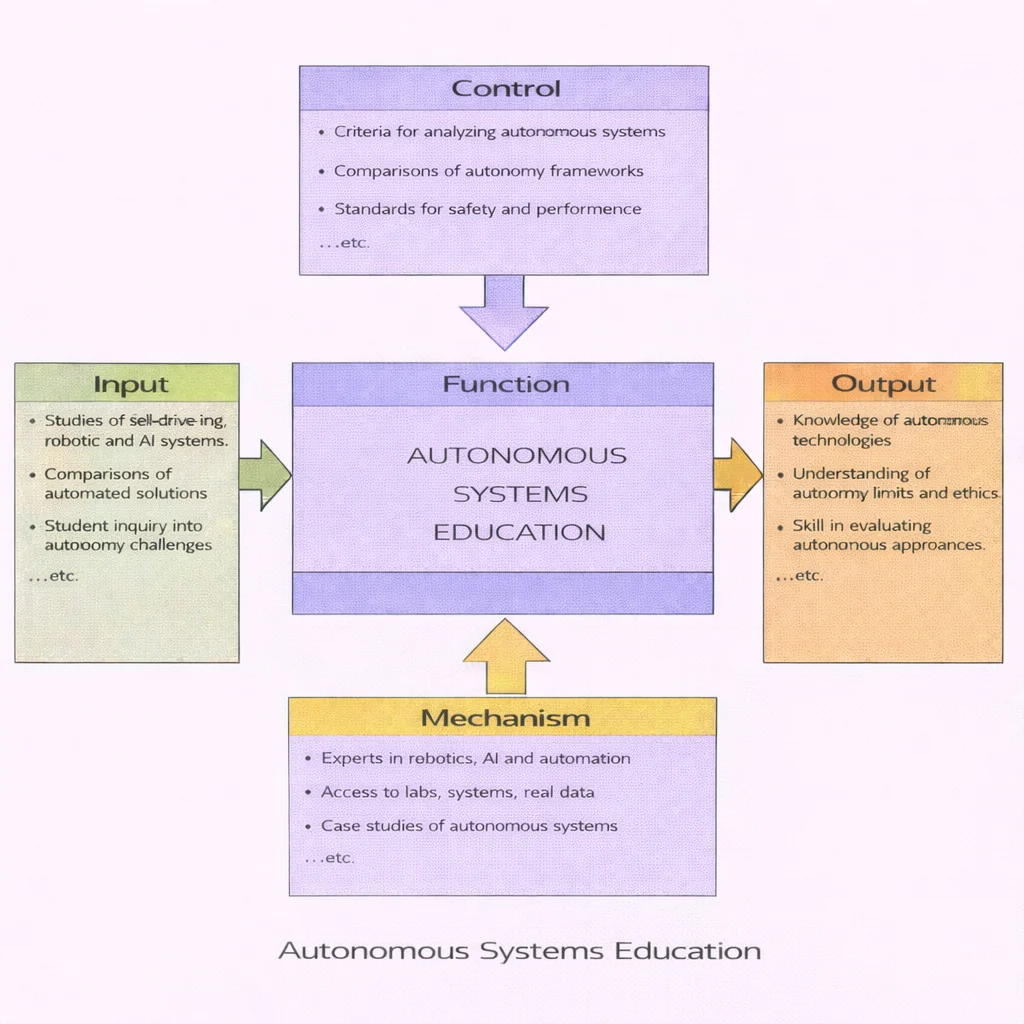

Autonomous Systems Education, as shown in the diagram, turns curiosity about self-driving, robotic, and AI systems into disciplined understanding. Students begin with concrete inputs—examples of automated solutions, real autonomy challenges, and comparative exploration. Their learning is shaped by controls: clear criteria for analysis, well-chosen autonomy frameworks, and safety-and-performance standards that prevent “technology excitement” from replacing careful judgment. The mechanisms—expert guidance, access to labs and real data, and case-based learning—provide the hands-on realism that autonomy demands. The result is not only knowledge of autonomous technologies, but also a mature understanding of ethical limits and the practical ability to evaluate what an autonomous system can do, what it cannot do, and what it should not do.

Autonomous systems are intelligent machines or software agents capable of performing tasks and making decisions without direct human intervention. These systems combine advanced technologies such as artificial intelligence (AI) and machine learning (ML), robotics, computer vision, and control systems to perceive their environments, process information, and act accordingly. Applications range from self-driving vehicles and drones to surgical robots, smart manufacturing, and space exploration. As these technologies mature, they are reshaping industries, transforming urban infrastructure, and raising profound ethical and regulatory questions. Understanding the foundational principles behind autonomy—and how diverse disciplines integrate to support it—is critical for learners and professionals navigating the future of intelligent systems.

Learning Objectives

- Understand the core technologies and components that enable autonomous systems to function independently.

- Explore the wide range of real-world applications where autonomy is transforming industries and services.

- Analyze the challenges, limitations, and safety considerations involved in deploying autonomous systems.

- Evaluate the ethical, legal, and societal implications of intelligent automation.

- Identify the key areas of research and development driving innovation in autonomy and intelligent decision-making.

This infographic illustrates the functional architecture of an autonomous system. It starts with sensors that collect real-world data, which is then processed by the perception module to identify objects and environmental features. The decision-making system evaluates the context and plans appropriate actions, which are executed by the control unit through the actuators. These five core components form a continuous loop that enables autonomy in systems such as autonomous vehicles, drones, and robotic platforms.
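
The loop described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: a one-dimensional vehicle, a one-second time step, and a 5-metre braking distance. All names (`read_sensors`, `perceive`, `decide`, `control`, `Actuator`, `run_loop`) are hypothetical and not taken from any real framework.

```python
from dataclasses import dataclass, field


@dataclass
class Actuator:
    """Records the commands it receives (stands in for a motor driver)."""
    log: list = field(default_factory=list)

    def apply(self, command: float) -> None:
        self.log.append(command)


def read_sensors(position: float, obstacle_at: float) -> float:
    """Sensor stage: return the raw gap to the obstacle in metres."""
    return obstacle_at - position


def perceive(raw_gap: float) -> dict:
    """Perception stage: turn the raw reading into a labelled observation."""
    return {"gap": raw_gap, "obstacle_close": raw_gap < 5.0}


def decide(observation: dict) -> str:
    """Decision stage: pick a high-level action."""
    return "brake" if observation["obstacle_close"] else "cruise"


def control(action: str) -> float:
    """Control stage: map the action to a speed command in m/s."""
    return 0.0 if action == "brake" else 1.0


def run_loop(start: float, obstacle_at: float, steps: int) -> tuple[float, Actuator]:
    """Run the sense, perceive, decide, control, actuate cycle repeatedly."""
    position, actuator = start, Actuator()
    for _ in range(steps):
        gap = read_sensors(position, obstacle_at)
        command = control(decide(perceive(gap)))
        actuator.apply(command)
        position += command  # assumed one-second time step
    return position, actuator
```

Calling `run_loop(0.0, 10.0, 20)` moves the toy vehicle forward until the gap drops below the assumed threshold, after which every command is a stop. A real system would run each stage at its own rate and handle sensor faults, which this sketch ignores.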

Autonomous Systems – Foundation Overview

Autonomous systems are transforming the way machines interact with the world, enabling devices and technologies to operate independently of direct human input. These systems integrate principles from artificial intelligence and machine learning, applying data-driven decision-making, real-time processing, and adaptive control. Their success depends on synergies between disciplines like mathematics, statistics, and data science and analytics, all of which shape the algorithms that power autonomy.

The rise of emerging technologies has paved the way for autonomous platforms to thrive in diverse sectors. For instance, robotics and autonomous systems are now prevalent in factories, warehouses, and service industries, performing repetitive and complex tasks with high efficiency. These machines often function as part of smart manufacturing and Industry 4.0 environments, communicating through networks enabled by internet and web technologies.

Beyond industrial settings, autonomous systems contribute to sustainability and environmental resilience. In environmental engineering, autonomous monitoring platforms assess air and water quality, while self-regulating infrastructure supports green building and sustainable design. Likewise, in energy, the autonomous optimization of solar and battery systems is revolutionizing renewable energy and energy storage solutions.

Autonomous platforms are also reshaping urban landscapes. The integration of these systems with Internet of Things (IoT) and smart technologies fosters responsive city infrastructure—managing traffic flow, energy usage, and public safety. In disaster-prone regions, autonomous drones and vehicles provide support in earthquake and disaster engineering, delivering aid and conducting rapid damage assessment.

Space is another domain where autonomy is essential. In space exploration technologies, probes and rovers rely on autonomous navigation to traverse unfamiliar terrain, guided by onboard sensors and AI. These efforts often begin with powerful launch vehicles and are supported by resilient communication through satellite technology.

Autonomy also intersects with biosciences and computing. The rise of biotechnology includes autonomous lab systems that support drug discovery and diagnostics. In computation, concepts from quantum computing, including qubits, superposition, quantum entanglement, and quantum gates and circuits, may eventually inform systems that reason probabilistically, although such applications remain speculative.

Autonomous systems sit at the heart of 21st-century innovation. As more disciplines—from STEM to policy—integrate their efforts, these technologies will continue to evolve, supporting everything from global logistics to smart cities, and from personalized medicine to planetary exploration.

This visually dynamic illustration presents a conceptual view of a smart city where autonomous systems operate in harmony. Self-driving taxis and AI-powered delivery vehicles navigate smart roads beneath a skyline filled with drones conducting surveillance and logistics tasks. In the foreground, humanoid robots and robotic arms symbolize advanced manufacturing and service automation. The glowing central AI network visually represents real-time data processing, connectivity, and coordination across all systems. This artistic composition highlights the interdependence of various autonomous technologies—transportation, logistics, industrial robotics, and urban planning—demonstrating how artificial intelligence can orchestrate a seamlessly integrated urban ecosystem.

Key Characteristics of Autonomous Systems

Sensing and Perception:

Autonomous systems must continuously gather information about their surroundings to operate effectively. They do so through sensors such as cameras that capture visual data, LIDAR (Light Detection and Ranging) that measures distances and maps nearby objects, and radar that detects motion and structure in poor visibility. Together, these sensors allow the system to build a multi-dimensional model of its environment, supporting accurate navigation, obstacle avoidance, and contextual awareness. Advanced perception also incorporates semantic understanding, in which objects are not only detected but classified, for example distinguishing a pedestrian from a lamppost. In high-stakes applications like autonomous driving or drones, redundancy across sensor types improves reliability and fault tolerance.

This detailed digital illustration highlights the sensor technologies that enable autonomous systems to perceive and interpret their surroundings. The central element is an autonomous vehicle equipped with an array of sensing devices—LIDAR scanners emitting laser pulses to build 3D maps, radar systems tracking motion in adverse weather, and cameras capturing visual cues such as lane markings, pedestrians, and traffic signs. Each sensor’s field of view is depicted in layered, semi-transparent arcs to demonstrate how overlapping data streams contribute to a robust and redundant perception framework. The scene emphasizes the role of sensor fusion in ensuring accurate navigation, obstacle detection, and semantic object classification, all critical for real-time decision-making in complex, dynamic environments.
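
One common way to combine overlapping range readings is inverse-variance weighting, where more precise sensors receive more weight. The sketch below is an assumption about how such fusion might look in its simplest form; the function name `fuse` and the example variances are illustrative, and real perception stacks use far richer models.

```python
def fuse(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes the sensor errors
    are independent and every variance is strictly positive.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total
```

For example, fusing a hypothetical radar range of 10.0 m (variance 4.0) with a LIDAR range of 12.0 m (variance 1.0) gives a result closer to the LIDAR reading, and the fused variance is lower than either input.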

Data Processing:

Once environmental data is collected, it must be processed swiftly and accurately. Autonomous systems use real-time computing to transform raw sensor inputs into meaningful insights. This involves noise filtering, signal fusion from multiple sources, and transformation into spatial or temporal models. Complex algorithms are used to interpret patterns, predict dynamic changes, and make sense of ambiguous data. High-performance computing platforms, often leveraging GPUs and edge processing, enable millisecond-level response times. In mission-critical systems, such as medical robots or search-and-rescue drones, processing architectures are designed to be both robust and fault-tolerant.
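
As an illustration of the noise-filtering step, here is a minimal scalar Kalman filter. It assumes the quantity being tracked is a single, roughly constant value (for example, the distance to a stationary wall); the parameter names and default values are choices made for this sketch only.

```python
def kalman_1d(
    measurements: list[float],
    meas_var: float,
    process_var: float = 0.0,
    x0: float = 0.0,
    p0: float = 1.0,
) -> list[float]:
    """Filter noisy scalar measurements; return the estimate after each one."""
    x, p = x0, p0  # current estimate and its variance
    estimates = []
    for z in measurements:
        p = p + process_var      # predict: uncertainty grows over time
        k = p / (p + meas_var)   # Kalman gain: how much to trust z
        x = x + k * (z - x)      # update the estimate toward z
        p = (1.0 - k) * p        # uncertainty shrinks after the update
        estimates.append(x)
    return estimates
```

With `process_var` left at zero the filter behaves like a running average that starts from the prior `x0`, so each new measurement moves the estimate by a smaller amount than the last.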

Decision-Making:

Autonomous systems employ sophisticated decision-making frameworks that blend rule-based logic, probabilistic reasoning, and artificial intelligence. Decisions must factor in safety, efficiency, legality, and context. For example, a self-driving car must weigh traffic rules against real-time risks to choose optimal maneuvers. Predictive models anticipate human behavior, such as pedestrian crossings or erratic driving patterns. Multi-objective optimization is used when multiple goals—like minimizing energy use while maximizing speed—are present. Increasingly, decision-making incorporates ethical considerations, especially in domains like healthcare robotics and military automation.
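
The trade-off described above can be sketched as a constrained weighted-sum choice: safety and legality act as hard filters, and the remaining objectives are combined into one cost. The `Maneuver` fields, the risk limit, and the weights below are hypothetical values picked for illustration, not figures from any deployed system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Maneuver:
    name: str
    collision_risk: float  # estimated probability, 0 to 1
    energy: float          # arbitrary units, lower is better
    time: float            # seconds, lower is better
    legal: bool


def choose(
    maneuvers: list[Maneuver],
    max_risk: float = 0.1,
    w_energy: float = 0.5,
    w_time: float = 0.5,
) -> Optional[Maneuver]:
    """Pick the cheapest maneuver among those that are legal and safe enough."""
    feasible = [m for m in maneuvers if m.legal and m.collision_risk <= max_risk]
    if not feasible:
        return None  # caller should fall back to a safe stop
    return min(feasible, key=lambda m: w_energy * m.energy + w_time * m.time)
```

Changing the weights shifts the outcome: with the defaults a faster lane change can win, while `w_energy=1.0, w_time=0.0` favours whichever feasible option uses the least energy.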

Self-Adaptation:

To operate reliably in diverse and dynamic environments, autonomous systems must learn from past experience and adjust their behavior accordingly. This self-adaptation is driven by machine learning models that update their parameters based on feedback, improving accuracy over time. For instance, a warehouse robot might adjust its route based on traffic patterns to avoid congestion. Online learning enables real-time adaptation without reprogramming. Systems also track performance metrics so that, when an anomaly or unexpected condition appears, they can retrain models or switch operating modes, for example from normal to emergency mode. Adaptation further includes recalibrating sensors and reconfiguring behaviors.
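
A minimal sketch of metric tracking with a mode switch might look like the following. It uses Welford's incremental mean and variance update and flags a reading as anomalous when it lies more than a chosen number of standard deviations from the history. The class name, the threshold of 3.0, and the warm-up length are assumptions made for this example.

```python
import math


class RunningMonitor:
    """Tracks a metric online and flags readings far from its history."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.warmup = warmup        # readings to collect before flagging (>= 2)
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0              # running sum of squared deviations

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous; otherwise fold it into the stats."""
        if self.n >= self.warmup:
            std = math.sqrt(self._m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) > self.threshold * std:
                return True  # anomalous readings are not added to the history
        # Welford's incremental update of mean and variance
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)
        return False


def select_mode(monitor: RunningMonitor, reading: float) -> str:
    """Switch to a hypothetical emergency mode on an anomalous reading."""
    return "emergency" if monitor.update(reading) else "normal"
```

Excluding anomalous readings from the running statistics is a design choice: it keeps one outlier from widening the accepted range, at the cost of adapting more slowly to a genuine shift in conditions.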

Action Execution:

Once decisions are made, they must be translated into actions. In physical systems, this involves actuators like motors, servos, or hydraulic cylinders executing precise motions. For digital agents, it may involve database transactions, network communications, or software deployments. Action execution requires precision, reliability, and feedback loops to ensure correct operation. Advanced systems also use predictive control and feedback correction, ensuring that minor errors in motion or force are corrected dynamically. In collaborative environments, such as cobots (collaborative robots), execution also requires awareness of and responsiveness to human actions.
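
The feedback correction mentioned above is often implemented with a PID controller. The sketch below is a textbook-style version, paired with a toy plant in which the command is treated as a velocity; the gains and the plant model are assumptions for illustration and would need tuning for any real actuator.

```python
from typing import Optional


class PID:
    """Proportional-integral-derivative controller for a scalar signal."""

    def __init__(self, kp: float, ki: float = 0.0, kd: float = 0.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def step(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the control command for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no history yet on the first step
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(controller: PID, setpoint: float, steps: int, dt: float = 1.0) -> float:
    """Toy plant: the command is a velocity, integrated into a position."""
    position = 0.0
    for _ in range(steps):
        position += controller.step(setpoint, position, dt) * dt
    return position
```

With only a proportional gain of 0.5, the remaining error in this toy plant halves on every step, so `simulate(PID(kp=0.5), 10.0, 10)` ends very close to the setpoint.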

Components of Autonomous Systems

Sensors

Sensors are the eyes and ears of autonomous systems. They gather critical environmental data that serves as the foundation for perception and reasoning. The fusion of multiple sensors enhances accuracy and resilience by compensating for the limitations of individual devices. For example, while a camera may struggle in low light, thermal imaging can detect heat signatures. In robotics, proprioceptive sensors such as gyroscopes and accelerometers also monitor internal states like orientation and speed. This wide range of inputs enables comprehensive situational awareness essential for autonomous operation in varied conditions.

- Vision Sensors: Cameras, including stereo and RGB-D (depth) systems, enable object recognition, facial detection, and scene understanding. Infrared and thermal imaging expand capabilities to night vision and heat detection, useful in surveillance or search-and-rescue applications.

- Proximity Sensors: Include ultrasonic sensors for short-range detection, radar for medium- to long-range sensing, and LIDAR for generating high-resolution 3D maps. These are crucial for obstacle avoidance, localization, and navigation in cluttered or dynamic environments.

- Environmental Sensors: Devices that measure temperature, pressure, humidity, air quality, and light intensity. These are especially important for autonomous systems operating outdoors or in regulated environments like agriculture, aerospace, or hazardous zones.

Processing Units

Processing units form the computational backbone of autonomous systems. They convert raw sensor data into actionable insights and decisions, often in real time. Modern systems employ heterogeneous computing—combining CPUs for general tasks, GPUs for parallel data processing, and AI chips for accelerating inference and deep learning tasks. Edge computing allows this processing to happen locally, reducing latency and improving privacy. Failover mechanisms and real-time operating systems (RTOS) ensure robustness under mission-critical conditions.

- Central Processing Units (CPUs): Handle control logic, system integration, and basic decision-making routines. CPUs coordinate various system modules and execute general-purpose operations reliably and efficiently.

- Graphics Processing Units (GPUs): Designed for high-throughput tasks such as image recognition, video processing, and large-scale matrix operations typical in neural networks. GPUs enable concurrent analysis of complex data streams.

- AI Chips: Specialized accelerators, such as Google’s TPU or the GPU-based NVIDIA Jetson modules, that speed up deep learning inference and, on some platforms, on-device training. They are well suited to workloads like voice recognition, object tracking, and the learned components of path planning.

Decision-Making Algorithms

Decision-making algorithms bridge perception and action. They interpret the world and determine how the system should respond to various stimuli or goals. The sophistication of these algorithms determines how autonomous, intelligent, and adaptive the system truly is. These algorithms often combine multiple paradigms—symbolic logic, probabilistic reasoning, and deep learning—to create hybrid models capable of robust real-world behavior.

- Rule-Based Systems: Use predefined conditional logic (IF-THEN statements) for deterministic behavior. These systems are easy to design and verify, often used in safety-critical applications where predictability is paramount.

- Machine Learning Models: Learn from labeled or unlabeled data, improving performance over time. Supervised learning is used for tasks like image classification, while unsupervised learning aids in clustering and anomaly detection.

- Reinforcement Learning: Systems learn optimal behaviors by interacting with their environment and receiving feedback in the form of rewards or penalties. Widely used in gaming, robotics, and autonomous driving, this approach fosters adaptive and resilient agents.
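
To make the reinforcement learning item above concrete, here is a small tabular Q-learning sketch on a hypothetical five-cell corridor, where the agent is rewarded for reaching the rightmost cell. To keep the result reproducible, it applies the Q-learning update in a deterministic sweep over every state and action rather than sampling random episodes; that simplification, along with all parameter values, is an assumption of this example.

```python
ACTIONS = (-1, +1)  # index 0 moves left, index 1 moves right


def train_q(
    n_states: int = 5, alpha: float = 0.5, gamma: float = 0.9, sweeps: int = 50
) -> list[list[float]]:
    """Learn action values for a corridor whose last cell is the goal."""
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(sweeps):
        for state in range(goal):  # the goal is terminal, so skip it
            for a_idx, move in enumerate(ACTIONS):
                nxt = min(max(state + move, 0), goal)  # walls clamp movement
                reward = 1.0 if nxt == goal else 0.0
                future = 0.0 if nxt == goal else max(q[nxt])
                # Q-learning update: move toward reward plus discounted future
                q[state][a_idx] += alpha * (reward + gamma * future - q[state][a_idx])
    return q


def greedy_policy(q: list[list[float]]) -> list[str]:
    """Best action per non-terminal state according to the learned values."""
    return ["left" if row[0] > row[1] else "right" for row in q[:-1]]
```

After training, the greedy policy moves right from every cell, and the learned value of moving right decays by the discount factor for each extra step away from the goal.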

Actuators

Actuators convert digital decisions into tangible actions. In physical autonomous systems, actuators enable movement, manipulation, and interaction with the environment. They must be precise, reliable, and capable of working under variable loads and conditions. Integration with control algorithms ensures smooth and accurate operations. Advanced actuators may include haptic feedback for sensitive applications such as robotic surgery or automated assembly.

- Motors: Provide rotational or linear motion. Commonly used in mobile robots, drones, and manufacturing arms.

- Servos: Offer precise control of angular or linear position, often used in robotic joints and camera gimbals.

- Hydraulic and Pneumatic Systems: Deliver high power and force, especially in heavy machinery and large-scale automation.

Communication Systems

Communication systems are essential for connecting various components of autonomous systems, as well as for enabling external coordination. These systems facilitate data exchange between sensors, processors, and actuators, and also allow integration with cloud services, control centers, and other autonomous agents. Communication protocols must support real-time, secure, and high-bandwidth transmissions to ensure system responsiveness and safety. Technologies range from local wireless methods like Wi-Fi and Bluetooth to wide-area networks such as 5G and satellite communications.

- Wi-Fi and Bluetooth: Used for short- to medium-range communication and integration with mobile devices or local networks.

- 5G: Enables low-latency, high-bandwidth communication for time-sensitive applications like connected vehicles and smart cities.

- Satellite Communication: Critical for remote or global coverage, including autonomous maritime or agricultural systems.

Applications of Autonomous Systems

Transportation

- Self-Driving Cars: Autonomous vehicles represent a major transformation in personal and commercial transportation. Equipped with an array of sensors including cameras, LIDAR, and radar, these vehicles perceive their environment in real time. Software systems powered by artificial intelligence interpret this sensory data to make driving decisions such as steering, braking, and acceleration. Companies like Tesla, Waymo, and Cruise have been prominent in the field, developing driver assistance systems and fully autonomous prototypes. These vehicles are designed to navigate complex urban environments, follow traffic rules, avoid collisions, and predict pedestrian behavior. The promise of autonomous vehicles includes improved road safety, reduced traffic congestion, and enhanced mobility for the elderly and disabled.

- Autonomous Drones: Drones equipped with GPS, computer vision, and obstacle avoidance algorithms are widely used in logistics, surveillance, and emergency response. Companies like Amazon and Zipline deploy autonomous drones for package delivery and medical supply drops. In disaster zones, drones assess damage, locate victims, and map affected areas more efficiently than humans. These aerial vehicles operate independently, following pre-programmed routes or dynamically adjusting to real-time conditions.

- Autonomous Ships and Trains: In maritime and rail transport, autonomous navigation systems are improving operational efficiency and safety. Autonomous cargo ships use satellite navigation, advanced sensors, and automated control systems to navigate across oceans with minimal human input. Similarly, autonomous trains—such as those deployed in metro systems—enhance punctuality, reduce operational costs, and allow for more frequent service intervals. These innovations are reshaping logistics and public transportation on a global scale.

Healthcare

- Surgical Robots: Robotic-assisted surgery platforms like the da Vinci Surgical System enable minimally invasive procedures with greater precision, control, and flexibility than traditional techniques. The surgeon remains in control: these systems translate the surgeon’s hand movements into smaller, more precise motions of tiny instruments. Autonomous functions such as automatic suturing and camera navigation are emerging, though many are still at the research stage, and real-time feedback supports safety. Surgeons benefit from 3D visualization, tremor reduction, and enhanced ergonomics, which contribute to shorter recovery times and improved patient outcomes.

This semi-realistic digital artwork portrays a surgical robot, inspired by systems like the da Vinci Surgical System, conducting a minimally invasive operation on a patient in a state-of-the-art medical facility. The robotic arms are shown delicately maneuvering miniature surgical tools with high precision, while a medical team monitors the process using a control console and real-time 3D visualization. The scene highlights key features of robotic-assisted surgery—such as tremor reduction, enhanced ergonomics, and precision—underscoring how these systems enhance safety and patient outcomes through technological augmentation.

- Autonomous Diagnostics: AI algorithms trained on vast datasets can now flag diseases in medical images such as X-rays, MRIs, and CT scans with accuracy that, for some tasks, is comparable to that of human experts. These systems analyze patterns, highlight anomalies, and generate diagnostic reports automatically. Applications range from early detection of cancers and retinal disorders to monitoring lung infections and cardiac abnormalities. This accelerates diagnosis, reduces clinician workload, and extends medical expertise to underserved areas.

- Patient Monitoring: Wearable devices with autonomous monitoring capabilities continuously track vital signs like heart rate, blood oxygen levels, temperature, and sleep cycles. Integrated with machine learning models, these devices can detect anomalies, send alerts, and recommend actions without human intervention. They play a crucial role in managing chronic conditions, monitoring post-operative recovery, and supporting elderly care. Data collected is often transmitted to cloud platforms for longitudinal health analysis.

Defense and Security

- Autonomous Drones: In military applications, drones carry out reconnaissance, target tracking, and even combat operations with minimal human oversight. Equipped with sensors, cameras, and onboard intelligence, they can patrol borders, locate threats, and respond rapidly. Swarm drone systems coordinate autonomously for surveillance missions, increasing coverage and redundancy. Their low cost and scalability make them vital tools in modern defense strategy.

- Unmanned Ground Vehicles (UGVs): UGVs are deployed in environments that are too dangerous for human soldiers, such as minefields, conflict zones, or contaminated areas. These robots perform logistics support, reconnaissance, bomb disposal, and perimeter security. They are equipped with rugged sensors, communication modules, and autonomous navigation software to traverse complex terrains and execute missions under remote supervision or fully autonomously.

- Cybersecurity Systems: Artificial intelligence enables autonomous detection and mitigation of cyber threats by continuously analyzing network traffic and system behavior. These systems use machine learning to identify anomalies, detect intrusions, and block malware without requiring constant human oversight. They adapt over time, learning from new attack patterns and improving their threat response capabilities. Autonomous cybersecurity solutions are critical for protecting sensitive infrastructures, such as financial institutions and defense networks, from evolving threats.

Industrial Automation

- Manufacturing Robots: Autonomous robots are revolutionizing manufacturing through precision, speed, and consistency. These machines assemble components, perform quality control inspections, and handle delicate materials with minimal supervision. Collaborative robots, or cobots, work safely alongside humans to augment productivity. Integration with computer vision and AI allows these robots to adapt to variability in parts and assembly processes. Factories deploying autonomous robots experience lower defect rates, increased throughput, and reduced labor costs.

This highly detailed digital illustration captures an advanced manufacturing environment where robotic arms precisely weld and assemble an automobile on a production line. The setting features a fully automated factory with no human operators present—only a humanoid robot, equipped with a digital tablet, monitoring and coordinating the operations. Data interfaces and gear-like visual elements in the background suggest real-time AI analytics and smart system integration. The image emphasizes the futuristic potential of fully autonomous production floors where intelligent machines handle all aspects of manufacturing, from assembly to quality control.

- Warehouse Automation: Modern warehouses utilize fleets of autonomous mobile robots (AMRs) and automated storage and retrieval systems (ASRS). Robots such as those from Amazon Robotics (formerly Kiva Systems) move shelving units to picking stations and optimize their routes in real time. These systems increase inventory accuracy, speed up order fulfillment, and reduce human labor in repetitive and strenuous tasks. Sensor fusion and AI coordination allow dozens or hundreds of units to operate within the same space while avoiding collisions and minimizing downtime.

This digital illustration depicts a dynamic warehouse setting where fleets of autonomous mobile robots (AMRs) navigate across the floor, carrying inventory shelves to designated stations. The background features vertical automated storage and retrieval systems (ASRS), with robotic arms retrieving and depositing packages with precision. Multiple robots work in unison, coordinated by AI and sensor fusion, allowing seamless operation within a confined space. The vibrant lighting and advanced interface displays highlight the efficiency and intelligence of modern logistics automation. This scene showcases the transformative impact of robotics on inventory management and order fulfillment.

- Mining and Construction Equipment: Heavy-duty autonomous machines are used in mining to haul ore, drill blast holes, and navigate challenging terrains with precision. In construction, autonomous bulldozers, excavators, and pavers reduce operational risks and ensure consistent outcomes. These machines operate based on geospatial maps, real-time sensor feedback, and pre-programmed instructions. Integration with BIM (Building Information Modeling) systems allows for precise execution and minimal rework.

This semi-realistic digital illustration showcases a fleet of heavy-duty autonomous machines—including haul trucks, excavators, and bulldozers—working in a mining and construction site without any driver cabins or visible controls. The design emphasizes their fully automated nature, with sleek, sensor-equipped exteriors and embedded AI systems. The absence of headlights, windows, and operator compartments reinforces the shift toward truly driverless operations. These intelligent machines operate in coordination through real-time geospatial mapping, environmental sensors, and integration with digital construction models. The backdrop highlights a rugged terrain and layered infrastructure, representing the high-precision, risk-reducing role of automation in large-scale earthmoving and material handling.
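
Route planning of the kind mentioned for warehouse robots and geospatially guided machines can be illustrated with a breadth-first search over an occupancy grid. This is a deliberately simple sketch: the grid encoding (0 for free, 1 for blocked), four-way movement, and the function name are assumptions, and production planners typically use weighted searches and account for other moving robots.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def shortest_path(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Return a shortest 4-connected path from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back through predecessors
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal is unreachable
```

Because breadth-first search explores cells in order of distance, the first time it reaches the goal it has found a path with the fewest moves.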

Smart Cities

- Autonomous Public Transport: Smart cities are increasingly deploying autonomous buses, trams, and shuttles to provide safe, efficient, and eco-friendly transportation. These vehicles operate on fixed routes or flexible on-demand systems, enhancing mobility for urban populations while reducing emissions and traffic congestion. Equipped with advanced navigation and communication technologies, they integrate seamlessly with city-wide transport networks.

- Traffic Management Systems: AI-based traffic control systems monitor vehicle flow through sensors, cameras, and IoT devices. These systems predict congestion patterns, adjust traffic signals dynamically, and reroute traffic in real time to prevent bottlenecks. Integration with autonomous vehicle fleets enhances overall efficiency and reduces carbon emissions. Cities like Singapore and Barcelona are already adopting such intelligent systems to improve urban mobility.

- Autonomous Waste Management: Smart cities leverage robotic waste collection vehicles and intelligent sorting systems to manage waste more efficiently. These systems detect and collect trash autonomously, optimizing collection routes based on real-time data. AI-powered recycling systems sort materials using computer vision and robotic arms. This not only reduces labor and costs but also promotes sustainability and cleaner environments.
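
As a rough illustration of dynamic signal adjustment in the traffic management item above, the sketch below splits a fixed signal cycle among approaches in proportion to their measured queue lengths, after reserving a minimum green time for each. The cycle length, minimum green, and approach names are hypothetical, and real controllers also handle pedestrian phases, clearance intervals, and coordination between intersections.

```python
def allocate_green(
    queues: dict[str, int], cycle: float = 60.0, min_green: float = 5.0
) -> dict[str, float]:
    """Divide a signal cycle (seconds) among approaches by queue length."""
    if not queues:
        raise ValueError("at least one approach is required")
    spare = cycle - min_green * len(queues)
    if spare < 0:
        raise ValueError("cycle is too short for the minimum green times")
    total = sum(queues.values())
    if total == 0:
        # No demand anywhere: share the spare time equally
        return {name: min_green + spare / len(queues) for name in queues}
    return {name: min_green + spare * count / total for name, count in queues.items()}
```

For instance, with 30 vehicles waiting north-south and 10 east-west, the busier approach receives three quarters of the spare time.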

Agriculture

- Autonomous Tractors: These self-driving tractors are equipped with GPS, geofencing, and machine learning systems to perform tasks such as plowing, sowing, and spraying with high precision. Farmers can program field patterns and monitor progress remotely. Autonomous tractors reduce labor needs, improve efficiency, and minimize soil compaction by following optimized paths. They also operate during extended hours or in adverse conditions where human intervention would be limited.

- Drones: Agricultural drones are used for aerial surveillance, crop monitoring, and targeted pesticide application. They collect multispectral data to assess crop health, detect diseases, and estimate yield. By analyzing imagery over time, farmers gain valuable insights into soil variability and growth patterns. Autonomous flight planning and obstacle avoidance enable these drones to cover large areas with minimal oversight.

- Robotic Harvesters: Robotic systems capable of identifying and picking ripe produce—such as strawberries, apples, or tomatoes—are transforming labor-intensive harvesting operations. These machines use machine vision and tactile sensors to assess ripeness, navigate rows, and handle produce delicately to avoid damage. They address labor shortages, improve harvest timing, and enable 24/7 operation, thereby increasing overall productivity in agriculture.
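
One widely used measure derived from the multispectral data mentioned in the drone item above is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), computed from near-infrared and red reflectance. The sketch below computes it per grid cell and flags low values; the threshold of 0.3 is an illustrative assumption, since suitable cut-offs depend on crop, sensor, and season.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel or cell."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def stressed_cells(
    nir_grid: list[list[float]],
    red_grid: list[list[float]],
    threshold: float = 0.3,  # assumed cut-off, not an agronomic standard
) -> list[tuple[int, int]]:
    """Return (row, column) positions whose NDVI falls below the threshold."""
    flagged = []
    for r, (nir_row, red_row) in enumerate(zip(nir_grid, red_grid)):
        for c, (nir, red) in enumerate(zip(nir_row, red_row)):
            if ndvi(nir, red) < threshold:
                flagged.append((r, c))
    return flagged
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so higher NDVI values generally indicate denser, healthier canopy.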

Technologies Driving Autonomous Systems

Artificial Intelligence (AI)

Artificial Intelligence is the core engine behind autonomy, enabling machines to emulate human cognition. AI powers decision-making, environmental interpretation, object recognition, and language processing. In autonomous systems, AI integrates data from various sources, assesses potential actions, and selects the best course with respect to safety, efficiency, and goal achievement. Techniques like computer vision, natural language processing (NLP), and knowledge representation help systems understand unstructured inputs, such as road signs, human speech, or environmental hazards. As AI models become more powerful and accessible, they enable more complex forms of autonomy—from predictive maintenance in machinery to emotional recognition in humanoid robots.

Machine Learning (ML)

Machine Learning, a subset of AI, allows autonomous systems to learn and improve their performance without being explicitly programmed for every situation. ML algorithms analyze historical and real-time data to identify patterns and adjust behaviors accordingly. This adaptive capability is crucial for handling dynamic and unpredictable environments, such as urban traffic or manufacturing floors. Techniques like supervised learning are used for classification and regression tasks, while unsupervised learning identifies anomalies or clusters. Reinforcement learning allows systems to learn optimal policies through trial and error. The rise of deep learning—neural networks with multiple layers—has revolutionized fields like autonomous driving, where real-time recognition and decision-making are required.
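
A very small example of supervised learning is a nearest-centroid classifier: it averages the labelled training points of each class and assigns a new point to the closest average. The feature vectors and labels used with it here are made up for illustration, and the method stands in for the far larger models used in practice.

```python
def fit_centroids(
    samples: list[tuple[float, ...]], labels: list[str]
) -> dict[str, list[float]]:
    """Compute the mean feature vector of each labelled class."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for features, label in zip(samples, labels):
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids: dict[str, list[float]], features: tuple[float, ...]) -> str:
    """Return the label whose centroid is nearest in squared distance."""
    def squared_distance(label: str) -> float:
        return sum((a - b) ** 2 for a, b in zip(centroids[label], features))

    return min(centroids, key=squared_distance)
```

Training on two hypothetical clusters labelled "clear" and "obstacle" and then calling `predict` on a new point returns whichever class average lies closer.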

Internet of Things (IoT)

The Internet of Things connects autonomous systems to a broader ecosystem of devices, sensors, and cloud infrastructure. This connectivity allows for real-time coordination between machines and environments. For instance, autonomous vehicles can communicate with smart traffic lights and road sensors to optimize route planning. In agriculture, tractors, drones, and irrigation systems form an IoT network that shares data on soil health, weather, and crop growth. The IoT infrastructure enables distributed intelligence, where local devices process basic data and communicate key insights to centralized systems. Standard protocols such as MQTT and CoAP keep data exchange lightweight enough for resource-constrained devices, and they are typically secured by running over TLS or DTLS.
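
The idea of a local device processing basic data and forwarding only key insights can be sketched as follows. The device name, the soil-moisture readings, and the alert threshold are made up for illustration, and the sketch stops at building the JSON payload; publishing it over a protocol such as MQTT is left out.

```python
import json
from statistics import mean


def summarize(device_id, readings, alert_below):
    """Reduce a window of raw samples to one compact summary message."""
    return {
        "device": device_id,
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "min": min(readings),
        "alert": min(readings) < alert_below,
    }


# Hypothetical soil-moisture samples (percent) collected during one reporting window.
soil_moisture = [31.2, 30.8, 30.9, 29.7, 12.4, 30.1]
summary = summarize("field-probe-7", soil_moisture, alert_below=20.0)
payload = json.dumps(summary)  # this string is what would be sent upstream
print(payload)
```

The device sends one message per window regardless of how many samples it took, and the central system still learns that one reading fell below the threshold.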

Robotics

Robotics integrates mechanical systems with software intelligence to perform physical tasks autonomously. This includes the use of actuators, servos, and manipulators coordinated by sensors and control algorithms. In autonomous robots, embedded systems interpret sensor inputs to guide movement, manipulate objects, and avoid obstacles. Applications range from warehouse logistics to surgical operations. Robotics also encompasses human-robot interaction (HRI), where collaborative robots, or cobots, are designed to work safely alongside people. Advances in tactile sensing, machine vision, and mobility have enabled robots to operate in diverse environments—from uneven terrain in disaster zones to delicate assembly in microelectronics.

Cloud Computing

Cloud computing provides scalable infrastructure and computational power that autonomous systems can leverage for data processing, storage, and analytics. Large-scale AI models and simulations that exceed the capacity of local devices are offloaded to cloud platforms. For example, autonomous fleets may upload data to the cloud for centralized learning and policy updates. Cloud services also facilitate integration across geographically distributed systems—such as coordinating autonomous drones in disaster relief. With edge-cloud architectures, latency-sensitive tasks are handled locally while heavier computation is processed in the cloud. Security, redundancy, and high availability in cloud systems support mission-critical autonomous applications.

5G Networks

5G wireless communication is pivotal in enabling real-time decision-making in autonomous systems. It is designed to offer ultra-low latency (with a target as low as 1 ms over the radio link under favorable conditions), massive device connectivity, and high bandwidth, all essential for exchanging large volumes of sensor data and control signals quickly. Autonomous vehicles benefit from vehicle-to-everything (V2X) communications enabled by 5G, allowing them to interact with infrastructure, pedestrians, and other vehicles. Industrial robots connected via 5G networks can receive real-time instructions and share operational status with central control systems. The combination of 5G with AI and edge computing allows for highly responsive, distributed intelligence across sectors like healthcare, defense, and smart manufacturing.
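
A quick calculation shows why latency matters for a moving vehicle: the distance covered before a message even arrives is speed multiplied by latency. The 30 m/s speed (108 km/h) and the 50 ms comparison figure are illustrative assumptions.

```python
def distance_during_latency(speed_m_s, latency_ms):
    """Metres travelled while a message is still in transit."""
    return speed_m_s * latency_ms / 1000.0


speed = 30.0  # m/s, roughly 108 km/h (assumed)
for latency_ms in (1, 50):  # 1 ms target versus an assumed slower 50 ms link
    metres = distance_during_latency(speed, latency_ms)
    print(f"{latency_ms} ms latency -> {metres:.2f} m travelled")
```

At this speed the vehicle moves about 3 cm during 1 ms but 1.5 m during 50 ms, which is the difference between a negligible and a safety-relevant delay.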

Challenges in Autonomous Systems

Safety and Reliability

Ensuring consistent safety and reliability across diverse, unstructured environments is one of the most formidable challenges in autonomous systems. These systems must function flawlessly under varying conditions—weather, lighting, terrain, or unexpected human behavior. Redundant sensors, fail-safe mechanisms, and real-time monitoring are used to prevent errors, but the unpredictability of real-world scenarios introduces risk. For example, a self-driving car must recognize and react correctly to a child running onto the street or interpret a temporary traffic sign. Extensive simulation and field testing are essential, but even then, ensuring zero-failure operation remains elusive. Certification and regulatory approval require high levels of evidence and accountability.
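
One simple way redundant sensors and a fail-safe can work together is median voting: take the middle reading, check how many sensors agree with it, and fall back to a safe state when too few do. The sketch below is a minimal illustration; the function name, status labels, and tolerance are assumptions, not a certified design.

```python
from statistics import median


def vote(readings, max_spread):
    """Return (value, status) from redundant readings; None marks a dead sensor."""
    valid = [r for r in readings if r is not None]
    if len(valid) < 2:
        return None, "FAIL_SAFE"  # not enough information to trust any value
    value = median(valid)
    agreeing = [r for r in valid if abs(r - value) <= max_spread]
    if len(agreeing) < 2:
        return None, "FAIL_SAFE"  # sensors disagree too much
    status = "OK" if len(agreeing) == len(readings) else "DEGRADED"
    return value, status


print(vote([10.0, 10.1, 10.2], max_spread=0.5))   # all three agree
print(vote([10.0, 10.2, 55.0], max_spread=0.5))   # one outlier is outvoted
print(vote([10.0, None, None], max_spread=0.5))   # two sensors lost
```

A single faulty sensor cannot change the median of three, so the system keeps operating in a degraded mode while flagging the fault.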

Ethical and Legal Issues

- How should autonomous vehicles respond in moral dilemmas?

- Who is liable for accidents caused by autonomous systems?

Autonomous systems raise complex ethical dilemmas and legal challenges. In life-or-death situations—such as unavoidable collisions—how should the system prioritize outcomes? Questions of accountability also arise: if an autonomous drone malfunctions, should responsibility fall on the manufacturer, software provider, or operator? Legal frameworks for liability, transparency, and human oversight are still evolving. Ethical programming frameworks attempt to embed fairness, privacy, and human dignity into machine behavior, but cultural and philosophical differences complicate universal adoption. Regulatory bodies, ethics boards, and legal scholars must collaborate to establish clear, enforceable guidelines for responsible deployment.

Data Privacy and Security

Autonomous systems rely heavily on data collection—from user preferences and biometrics to real-time environmental scans. This raises significant concerns about privacy, consent, and data protection. Unauthorized access or data leakage could lead to identity theft, corporate espionage, or surveillance abuses. Security challenges also include adversarial attacks, where small manipulations fool perception systems (e.g., misclassifying a stop sign). Implementing strong encryption, authentication protocols, and intrusion detection systems is vital. Regulatory compliance with laws like GDPR and the need for ethical data stewardship require transparent data practices and ongoing audits to protect users and organizations alike.
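
The adversarial-attack idea can be illustrated on a toy linear classifier. For a linear score, the gradient with respect to the input is just the weight vector, so nudging each feature by a small amount against the sign of its weight (the idea behind the fast gradient sign method) lowers the score as fast as possible. The weights and feature values below are invented; real perception models are far larger, but the principle is similar.

```python
# Toy linear "stop sign" detector. The weights and input are made-up numbers,
# not taken from any real perception model.
WEIGHTS = [1.0, -2.0, 0.5]
BIAS = 0.0


def score(features):
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def classify(features):
    return "stop sign" if score(features) > 0 else "other"


def sign(value):
    return (value > 0) - (value < 0)


def perturb(features, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for x, w in zip(features, WEIGHTS)]


clean = [0.4, 0.1, 0.2]
adversarial = perturb(clean, epsilon=0.1)
print(classify(clean), "->", classify(adversarial))
```

No feature changes by more than 0.1, yet the predicted label flips, which is why input validation and robustness testing matter alongside encryption and authentication.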

Technological Limitations

- Real-time data processing in complex scenarios.

- Power efficiency and battery life for mobile systems.

Despite rapid advances, technological limitations remain a barrier to full autonomy. Real-time processing of multimodal sensor data requires immense computing power, which may not be feasible in edge devices with limited resources. Battery technology restricts operational time for autonomous drones, vehicles, and robots, especially when operating in remote or power-constrained environments. Additionally, the robustness of perception systems under occlusion, clutter, or variable lighting remains a challenge. Overcoming these issues involves innovations in hardware design, neuromorphic computing, edge AI, and improved energy storage technologies.

High Development Costs

The research, prototyping, and deployment of autonomous systems demand substantial financial and time investments. High costs arise from custom hardware development, data acquisition, AI training, safety validation, and regulatory compliance. Building large datasets for supervised learning or constructing simulation environments to test edge cases can be resource-intensive. Additionally, skilled personnel—such as AI researchers, robotics engineers, and system integrators—are in high demand and short supply. While costs are expected to decrease with standardization and economies of scale, the initial barrier to entry remains significant, especially for startups or public sector projects.

Future of Autonomous Systems

Fully Autonomous Vehicles

The progression toward Level 5 autonomous vehicles—those that require no human intervention—will redefine transportation. Such vehicles will rely on robust sensor fusion, real-time AI inference, vehicle-to-infrastructure (V2I) communications, and comprehensive fail-safe mechanisms. They will offer benefits such as reduced accidents, increased mobility access, and greater traffic efficiency. Early deployments may focus on geo-fenced urban areas or low-speed applications like autonomous shuttles. Regulatory approvals, public trust, and ethical programming will be critical milestones before mass adoption is realized. Fleet-based models for ride-sharing and logistics will likely be the first to capitalize on full autonomy.

Collaborative Robots (Cobots)

Cobots are designed to safely operate in shared spaces with humans, offering assistance in repetitive, precise, or physically demanding tasks. Unlike traditional industrial robots, cobots are lightweight, easily programmable, and responsive to human touch or proximity. Applications include automotive assembly, electronics manufacturing, healthcare assistance, and laboratory automation. Cobots equipped with AI can dynamically adjust behavior based on context or operator preference. They enhance productivity, reduce workplace injuries, and allow human workers to focus on tasks requiring creativity or judgment. Their growing role signals a shift toward more flexible, human-centric automation.

Smart Infrastructure

The integration of autonomous systems into smart infrastructure will transform urban life. Traffic systems, energy grids, water networks, and waste management will be monitored and controlled by intelligent agents, enabling predictive maintenance and dynamic resource allocation. For instance, smart traffic lights will adapt in real time to traffic flow data from autonomous vehicles and mobile sensors. Buildings equipped with autonomous HVAC and lighting systems will optimize energy consumption based on occupancy and weather. As cities digitize and interconnect, autonomous systems will form the nervous system of responsive, sustainable urban environments.

Space Exploration

Autonomous systems will be crucial in exploring environments where human intervention is limited or impossible. Rovers, orbiters, and landers equipped with AI and robotics can conduct scientific experiments, navigate terrain, and relay data across vast distances, where signal delays rule out real-time control. NASA’s Perseverance rover, for example, uses autonomous navigation to traverse Mars without waiting for Earth-based commands. Future missions may include autonomous habitat setup, resource extraction, and even robotic collaboration with astronauts. Autonomous satellites will maintain constellations, monitor Earth’s climate, and observe deep-space objects such as asteroids or exoplanets.

Autonomous Supply Chains

Autonomous technologies are revolutionizing supply chains by automating everything from production to last-mile delivery. AI-driven systems manage demand forecasting, procurement, and logistics with minimal human oversight. Autonomous mobile robots and drones handle internal transportation, inventory picking, and quality inspections. Blockchain-enabled smart contracts combined with AI analytics ensure transparency and compliance across global operations. In the future, autonomous supply chains will self-optimize based on disruptions, environmental constraints, and customer demand, enabling a resilient and highly efficient global economy.

Three Autonomous Vehicle Case Studies

Autonomous systems have transitioned from experimental technologies to critical components across diverse real-world domains. To illustrate their transformative impact, this section presents three compelling case studies from land, air, and space. Each example highlights how autonomous capabilities (such as real-time perception, adaptive decision-making, and mission-specific actuation) are applied in dramatically different environments. From Tesla’s development of self-driving cars navigating complex urban traffic, to the MQ-9 Reaper drones executing strategic military operations, and finally to NASA’s Perseverance rover autonomously exploring the Martian surface, these cases demonstrate the breadth and sophistication of modern autonomy in action.

Case Study 1: Tesla and the Evolution of Autonomous Driving

Tesla, Inc. has been one of the most prominent and controversial leaders in the development of autonomous vehicle technologies. With its integrated hardware and software platform, Tesla aims to deliver a fully self-driving experience using a combination of computer vision, machine learning, and real-time data processing. Unlike many other autonomous vehicle projects that rely heavily on LIDAR, Tesla’s approach emphasizes camera-based perception combined with neural network algorithms that process environmental data in real time.

The cornerstone of Tesla’s strategy is its Autopilot and Full Self-Driving (FSD) packages, which offer features such as adaptive cruise control, automated lane changing, traffic-aware navigation, and traffic light recognition. These systems are continuously updated via over-the-air software upgrades, leveraging a massive fleet learning model based on billions of real-world driving miles. Tesla vehicles gather sensor and behavioral data from drivers, which is used to train and refine its neural networks, creating a feedback loop that accelerates system learning across all vehicles.

Despite its innovations, Tesla’s autonomous capabilities remain at Level 2 autonomy under the SAE scale, requiring driver supervision. Regulatory bodies have raised concerns about the marketing language and safety expectations of FSD features. High-profile crashes have also triggered public debate about the readiness and responsibility associated with deploying such technologies.

Nevertheless, Tesla’s data-driven, software-centric approach has reshaped the automotive industry and influenced global strategies for deploying autonomy in consumer vehicles. It continues to push the boundary between assisted driving and full autonomy, while actively participating in policy discussions and shaping public expectations around intelligent transportation.

This stylized illustration presents a Tesla electric vehicle in motion at sunset, with a futuristic overlay of radar waves and a wireless signal icon emanating from an abstract car symbol. The graphic emphasizes Tesla’s advancements in autonomous driving systems, showcasing the integration of AI, real-time sensing, and data connectivity. The warm-to-cool color gradient and bold typography evoke innovation, mobility, and the transformative potential of self-driving cars.

This digital artwork presents a rear, bird’s-eye view of a Tesla car autonomously navigating a busy city intersection. The vehicle is surrounded by visual overlays representing LiDAR, radar, and camera detection zones. Pedestrian and cyclist icons, along with traffic light recognition, illustrate the vehicle’s advanced situational awareness. The cityscape glows in warm and cool tones, symbolizing the seamless integration of technology and urban life. The image conveys Tesla’s application of AI-driven sensor fusion and real-time environmental mapping to ensure safe and efficient autonomous driving in complex traffic scenarios.

This digital illustration captures the interior of a Tesla vehicle while operating in autonomous mode. The driver sits calmly with hands off the steering wheel, as the dashboard display prominently shows “AUTOPILOT ENGAGED.” Stylized eye-tracking beams indicate active driver monitoring, while heads-up display (HUD) icons reveal system connectivity and situational awareness. The warm glow of sunset outside contrasts with the cool-toned interior, reinforcing the sense of safety, control, and futuristic design. The image encapsulates Tesla’s human-machine interface and its emphasis on user engagement and supervision in semi-autonomous driving.

Case Study 2: Autonomous Military Drones – The MQ-9 Reaper

The MQ-9 Reaper, developed by General Atomics Aeronautical Systems for the United States Air Force and Central Intelligence Agency, represents one of the most advanced and widely deployed unmanned aerial vehicles (UAVs) in modern military operations. Originally introduced in 2007, the MQ-9 Reaper is a remotely piloted aircraft system (RPAS) with semi-autonomous capabilities designed for medium-altitude, long-endurance (MALE) missions involving intelligence, surveillance, and reconnaissance (ISR) and precision strike.

With a wingspan of 20 meters and a maximum endurance exceeding 27 hours, the MQ-9 Reaper can fly at altitudes up to 50,000 feet and carry both ISR payloads and lethal munitions. It is equipped with sophisticated sensors, including the Multi-Spectral Targeting System (MTS-B), synthetic aperture radar (SAR), signals intelligence (SIGINT) systems, and electro-optical/infrared (EO/IR) cameras. For offensive missions, it is typically armed with AGM-114 Hellfire missiles, GBU-12 Paveway II laser-guided bombs, or GBU-38 JDAMs.

This detailed infographic presents the MQ-9 Reaper—an advanced unmanned aerial vehicle (UAV) used by the United States military for surveillance and precision strike operations. The illustration clearly labels the drone’s critical subsystems, including its satellite communication (SATCOM) antenna, multi-spectral targeting system (EO/IR turret), missile payloads (AGM-114 Hellfire), and sensor suite. With a focus on both structure and function, the image serves as an educational tool for understanding how autonomous capabilities integrate with weapons systems and data collection in modern drone warfare.

While human pilots remain in the loop for targeting and engagement, the MQ-9 incorporates autonomous capabilities for navigation, route planning, threat avoidance, and mission execution. Pre-programmed flight paths and onboard autopilot functions allow the drone to autonomously patrol areas of interest or loiter over targets while awaiting instructions. The system uses satellite communications (SATCOM) for command and control, enabling operation from remote ground control stations (GCS) located thousands of miles away from the theater of operations.

The MQ-9 Reaper has been deployed in various combat zones including Afghanistan, Iraq, Syria, and the Horn of Africa. It has played a central role in counterterrorism operations, often conducting high-value target (HVT) strikes and persistent surveillance over conflict regions. However, its use has also sparked debates over transparency, civilian casualties, international law, and the ethics of remote warfare.

As autonomy in military aviation continues to evolve, the MQ-9 serves as a benchmark in drone warfare—showcasing how automation, sensor integration, and decision-support systems can extend the capabilities of modern armed forces while reshaping the strategic, legal, and moral dimensions of combat.

This atmospheric image depicts the MQ-9 Reaper unmanned aerial vehicle (UAV) in active flight over a desert region during sunset. The scene captures the aircraft from a slightly lowered angle, emphasizing its aerodynamic form, rear-mounted propeller, and sensor-laden front turret. The backdrop of rolling terrain and warm, subdued lighting conveys the operational environment in which these drones are typically deployed—remote, rugged, and often hostile. While not labeled like an infographic, this visual immerses viewers in the practical reality of drone missions, complementing technical illustrations by adding narrative depth and contextual realism.

Case Study 3: NASA’s Perseverance Rover – Autonomous Exploration on Mars

Launched by NASA in July 2020 and successfully landing on Mars in February 2021, the Perseverance Rover is one of the most advanced autonomous robotic systems ever deployed beyond Earth. Built as part of NASA’s Mars 2020 mission, Perseverance is tasked with conducting scientific investigations in Jezero Crater, the site of an ancient river delta that scientists think may once have been able to support microbial life.

Autonomy plays a central role in the rover’s operation, as round-trip communication delays between Earth and Mars can exceed 20 minutes. This makes real-time remote control impossible. Instead, Perseverance relies on a sophisticated suite of software and hardware to carry out semi-autonomous functions including hazard detection, terrain navigation, path planning, and scientific target selection.
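
The size of the delay follows directly from the speed of light. The sketch below computes the round-trip signal time for two Earth-Mars distances; the 55 million km and 400 million km figures are rounded, assumed values for the closest and farthest separations.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition


def round_trip_minutes(distance_km):
    """Time for a signal to reach Mars and for the reply to return, in minutes."""
    return 2 * distance_km / SPEED_OF_LIGHT_KM_S / 60


# Rounded, assumed Earth-Mars separations in kilometres.
for label, km in (("closest", 55e6), ("farthest", 400e6)):
    print(f"{label}: {round_trip_minutes(km):.1f} min round trip")
```

Under these assumptions the round trip ranges from roughly 6 minutes to roughly 44 minutes, so a rover waiting for Earth to confirm every hazard would spend most of its time idle.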

The rover is equipped with advanced systems such as Terrain-Relative Navigation (TRN), which enabled it to autonomously analyze and select a safe landing zone during descent—one of the first times such a capability was used in planetary exploration. Its onboard computer leverages AI-based visual odometry to track its position, avoid obstacles, and optimize travel routes. Perseverance can also use machine learning to classify rock samples and prioritize scientific objectives based on mission goals and environmental constraints.

Additionally, Perseverance supports the experimental Ingenuity Helicopter, the first powered aircraft to fly on another planet. Ingenuity operates autonomously, using its own sensors and navigation software to perform short scouting flights, which feed into Perseverance’s exploration plans. This tandem demonstrates the increasing synergy between multiple autonomous agents working in coordination within an extraterrestrial environment.

Perseverance’s mission extends far beyond exploration—it is also tasked with collecting and caching rock samples for a future Mars Sample Return mission. Its success represents a major milestone in the evolution of space robotics, showcasing how autonomy enables persistent scientific discovery in environments that are distant, hostile, and constantly changing.

This high-resolution digital artwork captures the Perseverance rover during a mission on Mars, showcasing its autonomous capabilities in an extraterrestrial environment. The rocky terrain and dusty Martian atmosphere are faithfully illustrated, reflecting the harsh conditions the rover must operate within. The scene highlights the rover’s active data collection through its robotic arm while navigating the landscape using onboard sensors. The Ingenuity helicopter is shown mid-flight, symbolizing NASA’s pioneering efforts in autonomous aerial exploration beyond Earth. This visual reinforces the real-world application of autonomous systems in interplanetary missions, where self-guided navigation, obstacle avoidance, and real-time adaptation are critical to success.

Key Areas of Study and Development in Autonomous Systems

Autonomous systems encompass a wide array of technologies and disciplines. Below are the primary areas of study and development driving advancements in this field:

Perception and Sensor Fusion

- Objective: Enable systems to sense and understand their environment through high-fidelity, real-time information acquisition.

- Focus Areas:

- Development of advanced sensors like LIDAR, radar, cameras, and infrared sensors: These technologies gather data about the surroundings, each offering unique capabilities—LIDAR provides depth maps, radar performs well in poor visibility, and cameras capture visual details.

- Sensor Fusion: Merges inputs from various sensors to create a unified and accurate model of the environment, compensating for individual sensor limitations and enhancing reliability.

- Object Detection and Recognition: Uses computer vision and AI to identify and categorize objects such as pedestrians, vehicles, or obstacles, enabling responsive and informed decision-making.

- Applications: Self-driving cars for obstacle avoidance, drones for navigation and surveillance, and robotic systems that operate in unpredictable environments.
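
A minimal sketch of sensor fusion is inverse-variance weighting: two noisy measurements of the same quantity are averaged, with the more precise sensor given more weight. The distances and variances below are invented for illustration; production systems typically use richer estimators such as Kalman filters, of which this is the single-step, static special case.

```python
def fuse(z1, var1, z2, var2):
    """Combine two independent measurements, weighting each by 1 / variance."""
    w1, w2 = 1.0 / var1, 1.0 / var2
    estimate = (w1 * z1 + w2 * z2) / (w1 + w2)
    variance = 1.0 / (w1 + w2)  # always smaller than either input variance
    return estimate, variance


# Hypothetical range to an obstacle, in metres.
lidar_range, lidar_var = 10.0, 0.04   # precise sensor
radar_range, radar_var = 10.6, 0.36   # noisier sensor
fused_range, fused_var = fuse(lidar_range, lidar_var, radar_range, radar_var)
print(f"fused range = {fused_range:.2f} m, variance = {fused_var:.3f}")
```

The fused estimate sits closer to the more precise reading, and its variance is lower than that of either sensor alone, which is the sense in which fusion compensates for individual sensor limitations.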

Artificial Intelligence (AI) and Machine Learning (ML)

- Objective: Equip systems with the ability to learn from data, adapt to new scenarios, and make intelligent decisions without explicit programming for every situation.

- Focus Areas:

- Reinforcement Learning: Enables systems to learn optimal behaviors through interaction with the environment and reward feedback, such as a robot learning to balance or navigate mazes.

- Neural Networks: Layered models, loosely inspired by biological neurons, that identify complex patterns in data. They are critical for tasks such as facial recognition, anomaly detection, and speech understanding.

- Natural Language Processing (NLP): Allows autonomous agents to understand, interpret, and respond to human language, enabling interaction through voice commands or written instructions.

- Applications: Navigation systems in autonomous vehicles, predictive maintenance in industrial settings, and AI-powered customer service chatbots.

Control Systems

- Objective: Ensure smooth, accurate, and stable movement and operation of autonomous systems across a variety of tasks.

- Focus Areas:

- Feedback Mechanisms: Use continuous input from sensors to dynamically adjust outputs, maintaining performance even in changing conditions (e.g., adjusting a drone’s tilt in wind).

- Path Planning: Algorithms determine the most efficient and safest routes, factoring in obstacles, terrain, and energy consumption.

- Stability Analysis: Mathematical modeling ensures systems don’t enter unstable states, particularly important in balancing robots or aerial drones.

- Applications: Drone flight control, robotic manipulation, and automated vehicle steering and braking systems.
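
The feedback mechanism described above can be sketched with a PID controller, a widely used feedback law, holding a drone's tilt at zero against a steady wind. The plant model (tilt rate equals command plus wind), the gains, and the wind value are simplifying assumptions for illustration only.

```python
class PID:
    """Textbook proportional-integral-derivative controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a start-up spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(steps=2000, dt=0.01, wind=2.0):
    """Assumed plant: tilt rate (deg/s) = controller command + constant wind push."""
    pid = PID(kp=4.0, ki=2.0, kd=0.1)
    tilt = 5.0  # start 5 degrees off level
    for _ in range(steps):
        command = pid.update(0.0 - tilt, dt)  # error = setpoint - measurement
        tilt += (command + wind) * dt
    return tilt


final_tilt = simulate()
print(f"tilt after 20 s: {final_tilt:.4f} deg")
```

The integral term is what cancels the constant wind; with only a proportional term the drone would settle at a small but persistent tilt.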

Autonomous Navigation

- Objective: Empower systems to move independently within unfamiliar or dynamic environments while maintaining awareness of their surroundings.

- Focus Areas:

- Simultaneous Localization and Mapping (SLAM): Real-time construction of spatial maps while pinpointing the system's position, essential in environments without GPS.

- Global Navigation Satellite System (GNSS): Integration with GPS, Galileo, and other satellite systems for high-precision positioning and route tracking.

- Dynamic Path Optimization: Adapts navigation routes in response to new obstacles, traffic patterns, or mission changes to improve efficiency and safety.

- Applications: Autonomous vehicles on roads, warehouse robots navigating shelves, and underwater drones exploring ocean floors.
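
Dynamic path optimization can be sketched as planning with A* search on a grid and then replanning when a new obstacle appears on the chosen route. The 3x4 map, the unit step cost, and the choice of which cell becomes blocked are assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of '.' (free) and '#' (blocked) cells."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):  # Manhattan distance never overestimates on this grid
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    best_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable


world = [list("...."), list(".#.."), list("....")]
start, goal = (0, 0), (2, 3)
first_path = astar(world, start, goal)

# A new obstacle is detected on the planned route, so the map is updated and we replan.
blocked = first_path[2]
world[blocked[0]][blocked[1]] = "#"
new_path = astar(world, start, goal)
print(first_path)
print(new_path)
```

Replanning from scratch is the simplest strategy; incremental planners reuse earlier search effort, but the map-update-then-search loop is the same.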

Human-Machine Interaction (HMI)

- Objective: Facilitate intuitive and effective collaboration between humans and autonomous systems, ensuring safety, trust, and productivity.

- Focus Areas:

- Intuitive Interfaces: Interfaces that support natural interactions, such as voice commands, hand gestures, or touchscreens, make systems accessible to non-experts.

- Behavior Modeling: Predicting user intent and adapting system responses accordingly enhances user experience and efficiency.

- Safety Protocols: Rules and safeguards to prevent unexpected behavior and ensure predictable interactions in shared environments.

- Applications: Industrial cobots that respond to human gestures, autonomous cars with driver override features, and medical robots assisting surgeons.

Ethics and Policy

- Objective: Ensure that the development and deployment of autonomous systems align with societal values, laws, and public interest.

- Focus Areas:

- Ethical Frameworks: Guiding principles that inform decisions where moral trade-offs occur, such as whom to protect in an unavoidable accident scenario.

- Regulatory Compliance: Adhering to laws and standards (e.g., GDPR, ISO standards) that govern safety, liability, and transparency.

- Data Privacy and Security: Protecting user data from unauthorized access or misuse, especially as autonomous systems collect and process sensitive information.

- Applications: Ethical decision-making in autonomous vehicles, compliance in AI-powered surveillance, and transparency in automated loan approvals.

Edge Computing and Real-Time Processing

- Objective: Reduce latency and enhance responsiveness by processing data locally at the edge of the network instead of relying solely on cloud computing.

- Focus Areas:

- Low-Latency Processing: Real-time decision-making capabilities, critical for tasks like emergency braking in autonomous cars or collision avoidance in drones.

- Resource Optimization: Balancing processing speed with limited hardware and energy constraints in edge devices.

- Integration with Cloud Computing: Combining local responsiveness with cloud-level insights and data storage to create hybrid systems.

- Applications: Smart security cameras, autonomous agricultural robots, and wearable medical devices.
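
The split between local and cloud processing can be sketched as a placement rule: run each task wherever it finishes soonest while still meeting its deadline. The task names, timing figures, and the 60 ms network round trip are hypothetical values for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline_ms: float
    edge_compute_ms: float   # time to run on the local device
    cloud_compute_ms: float  # time to run on a cloud server, excluding the network


def place(task, network_rtt_ms):
    """Pick the fastest location that still meets the task's deadline."""
    options = {
        "edge": task.edge_compute_ms,
        "cloud": network_rtt_ms + task.cloud_compute_ms,
    }
    feasible = {where: t for where, t in options.items() if t <= task.deadline_ms}
    if not feasible:
        return "unschedulable"
    return min(feasible, key=feasible.get)


braking = Task("emergency braking", deadline_ms=20, edge_compute_ms=8, cloud_compute_ms=2)
mapping = Task("fleet map update", deadline_ms=5000, edge_compute_ms=9000, cloud_compute_ms=400)
for task in (braking, mapping):
    print(task.name, "->", place(task, network_rtt_ms=60))
```

The braking task stays on the device because the network round trip alone exceeds its deadline, while the heavy map update goes to the cloud because the device is too slow for it.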

Energy Management and Power Systems

- Objective: Enhance the sustainability and operational lifespan of autonomous systems through efficient energy usage and innovative power solutions.

- Focus Areas:

- Battery Technology: Innovations in battery chemistry and structure to increase capacity, reduce weight, and support fast charging.

- Energy Harvesting: Capturing ambient energy (solar, thermal, kinetic) to supplement or replace traditional power sources, particularly in remote deployments.

- Power Optimization Algorithms: Software that intelligently regulates energy consumption based on system activity and environmental inputs.

- Applications: Long-range drones, solar-powered environmental sensors, and battery-efficient delivery robots.
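
A back-of-the-envelope endurance estimate shows how battery capacity and power optimization interact. Endurance is usable energy divided by average power, and duty cycling lowers the average power. All capacities and power draws below are assumed, round-number values.

```python
def average_power(active_w, sleep_w, duty_cycle):
    """Mean power draw when the device is active for a fraction duty_cycle of the time."""
    return duty_cycle * active_w + (1.0 - duty_cycle) * sleep_w


def endurance_hours(capacity_wh, avg_power_w, usable_fraction=1.0):
    """Hours of operation from the usable share of the battery's energy."""
    return capacity_wh * usable_fraction / avg_power_w


# Hypothetical drone: 100 Wh pack, 80% usable, 200 W average draw in flight.
drone_hours = endurance_hours(100.0, 200.0, usable_fraction=0.8)

# Hypothetical sensor node: 5 Wh cell, awake 2% of the time.
node_power = average_power(active_w=0.5, sleep_w=0.005, duty_cycle=0.02)
node_hours = endurance_hours(5.0, node_power)

print(f"drone: {drone_hours * 60:.0f} min, sensor node: {node_hours:.0f} h")
```

The drone lasts about 24 minutes, while the sensor node runs for hundreds of hours, mostly because it sleeps 98% of the time.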

Communication Systems

- Objective: Ensure autonomous systems can communicate reliably with other systems, infrastructure, and human operators in real time.

- Focus Areas:

- Vehicle-to-Vehicle (V2V) and Vehicle-to-Everything (V2X): Protocols that allow autonomous vehicles to exchange data with each other and with roadside infrastructure.

- 5G Networks: Providing ultra-fast, low-latency connections required for high-volume data exchange and coordination among distributed systems.

- Swarm Communication: Coordination and synchronization of multiple autonomous units (e.g., drone fleets) to achieve collective goals efficiently.

- Applications: Smart traffic systems, coordinated drone surveillance, and autonomous fleet logistics.

Security and Cybersecurity

- Objective: Defend autonomous systems against cyber threats that could compromise safety, data integrity, or operational functionality.

- Focus Areas:

- Encryption and Authentication: Ensures that only authorized users and devices can access system data and commands.

- Intrusion Detection Systems: Monitors for anomalies or unauthorized activities, enabling real-time threat mitigation.

- Resilience Mechanisms: Ensures continued operation or safe shutdown in the face of security breaches or system failures.

- Applications: Military drones, medical robots, and power grid monitoring systems.
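
Command authentication can be sketched with a keyed hash (HMAC) from Python's standard library: the sender attaches a tag computed with a shared secret, and the receiver rejects any command whose tag does not match. The command strings and key handling are simplified assumptions; a real system would also need key distribution, replay protection, and encryption if the contents are confidential.

```python
import hashlib
import hmac
import secrets


def sign(command: bytes, key: bytes) -> bytes:
    """Compute an authentication tag for a command using HMAC-SHA256."""
    return hmac.new(key, command, hashlib.sha256).digest()


def verify(command: bytes, tag: bytes, key: bytes) -> bool:
    """Constant-time check that the tag matches the command."""
    return hmac.compare_digest(sign(command, key), tag)


shared_key = secrets.token_bytes(32)  # assumed to be provisioned securely on both ends
command = b"RETURN_TO_BASE"           # hypothetical command string
tag = sign(command, shared_key)

print(verify(command, tag, shared_key))           # genuine command
print(verify(b"DISARM_SAFETY", tag, shared_key))  # tampered command
```

An attacker who alters the command without knowing the key cannot produce a matching tag, so the receiver can discard the message instead of acting on it.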

Simulation and Testing

- Objective: Rigorously evaluate autonomous systems in virtual and controlled settings to identify weaknesses, improve design, and validate behavior before real-world deployment.

- Focus Areas:

- Virtual Environments: Digital worlds where systems are tested under varying conditions—weather, lighting, obstacles—without physical risks.

- Digital Twins: Real-time, digital replicas of physical systems used for diagnostics, maintenance prediction, and optimization.

- Failure Analysis: Simulating rare but critical failure cases to ensure systems respond appropriately under worst-case scenarios.

- Applications: Automotive crash testing, surgical robot validation, and aerospace control system verification.
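
Testing under varying conditions in a virtual environment can be sketched as a Monte Carlo simulation: sample many random scenarios and count how often the system fails. The example uses the standard stopping-distance formula (reaction distance plus braking distance); the ranges for speed, reaction time, and road friction are assumptions, not measured data.

```python
import random

G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed_m_s, reaction_s, friction):
    """Distance covered during the reaction delay plus the braking distance."""
    return speed_m_s * reaction_s + speed_m_s ** 2 / (2 * friction * G)


def failure_rate(gap_m, trials=10_000, seed=1):
    """Fraction of random scenarios in which the vehicle cannot stop within gap_m."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        speed = rng.uniform(10.0, 20.0)    # m/s (assumed range)
        reaction = rng.uniform(0.1, 0.5)   # s, sensing plus actuation delay (assumed)
        friction = rng.uniform(0.3, 0.9)   # wet to dry road (assumed)
        if stopping_distance(speed, reaction, friction) > gap_m:
            failures += 1
    return failures / trials


for gap in (20, 40, 60):
    print(f"obstacle at {gap} m: failure rate {failure_rate(gap):.3f}")
```

Because the seed is fixed, each gap is evaluated against the same scenarios, which makes runs repeatable and lets a design change be compared fairly against a baseline.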

Multi-Agent Systems

- Objective: Develop coordinated networks of autonomous agents that operate collaboratively or competitively to accomplish complex tasks.

- Focus Areas:

- Coordination Algorithms: Manage task allocation, resource sharing, and motion planning among multiple agents.

- Swarm Intelligence: Inspired by biological systems, where simple agents follow local rules leading to emergent collective intelligence.

- Distributed Decision-Making: Each agent makes local decisions based on shared goals and limited information, enabling scalability and resilience.

- Applications: Coordinated disaster response drones, automated warehouse pick-and-place systems, and autonomous vehicle fleets.
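
Distributed decision-making with limited information can be illustrated by average consensus: each agent repeatedly nudges its own estimate toward those of its two neighbours, and the whole group converges on the global average without any central coordinator. The ring topology, the step size, and the initial values are assumptions for the example.

```python
def consensus_step(values, step_size=0.3):
    """One synchronous round: every agent moves toward its two ring neighbours."""
    n = len(values)
    return [
        v + step_size * ((values[(i - 1) % n] - v) + (values[(i + 1) % n] - v))
        for i, v in enumerate(values)
    ]


# Hypothetical local estimates held by six drones (e.g., of a wind speed).
estimates = [12.0, 3.0, 7.0, 20.0, 1.0, 5.0]
global_average = sum(estimates) / len(estimates)

for _ in range(100):
    estimates = consensus_step(estimates)

print([round(v, 3) for v in estimates], "target:", global_average)
```

Each agent only ever reads two neighbours' values, yet all six end up at the same number; losing one agent changes the topology but not the basic mechanism, which is where the resilience comes from.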

Autonomous System Safety and Validation

- Objective: Certify that autonomous systems function reliably, safely, and ethically across diverse scenarios and edge cases.

- Focus Areas:

- Safety Standards: Compliance with formal guidelines like ISO 26262 or IEC 61508 ensures systematic safety measures during design and deployment.

- Behavioral Validation: Tests to ensure systems react predictably in morally sensitive and high-stakes situations, such as emergency stops or route deviations.

- Robustness: Assurance that systems maintain performance despite variations in input, environment, or system faults.

- Applications: Passenger autonomous vehicles, robotic surgical systems, and flight control modules.

Autonomous Agriculture Systems

- Objective: Transform traditional farming with intelligent machines that increase productivity, precision, and sustainability.

- Focus Areas:

- Precision Farming: Using sensors, drones, and AI to monitor crop health, soil quality, and environmental conditions for data-driven interventions.

- Robotic Harvesters: Machines capable of identifying ripeness and autonomously picking produce, reducing labor needs and improving efficiency.

- Irrigation Management: Systems that monitor moisture levels and autonomously regulate water delivery to conserve resources.

- Applications: Smart greenhouses, vineyard robots, and autonomous tractors.
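The irrigation idea above can be sketched as a threshold controller with hysteresis: the valve opens when the soil is too dry and closes only once it is comfortably moist, so it does not chatter around a single set point. The function name `irrigation_step` and the 30% and 45% thresholds are assumptions chosen for illustration.

```python
def irrigation_step(moisture_pct, valve_open, low=30.0, high=45.0):
    """Return the new valve state (True = open) for one control cycle.

    Below `low` the valve opens, above `high` it closes, and in between
    it keeps its previous state (hysteresis).
    """
    if moisture_pct < low:
        return True
    if moisture_pct > high:
        return False
    return valve_open


if __name__ == "__main__":
    valve = False
    for reading in (50.0, 35.0, 28.0, 35.0, 44.0, 46.0):
        valve = irrigation_step(reading, valve)
        print(f"moisture {reading:4.1f}% -> valve {'open' if valve else 'closed'}")
```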

Space Exploration

- Objective: Develop autonomous technologies that can survive, operate, and make decisions independently in the harsh and remote environments of outer space.

- Focus Areas:

- Planetary Rovers: Self-navigating robots that collect soil samples, conduct experiments, and transmit data back to Earth.

- Spacecraft Autonomy: Onboard capabilities that let vehicles perform orbital maneuvers, rendezvous, and docking procedures without human input.

- Habitat Construction: Autonomous systems that prepare planetary surfaces, transport materials, and assemble living quarters for astronauts.

- Applications: Mars rovers, lunar base missions, and deep-space probes.

Why Study Autonomous Systems

Understanding the Future of Intelligent Machines and Automation

Exploring Interdisciplinary Foundations in Robotics, AI, and Engineering

Preparing for High-Impact Applications Across Sectors

Engaging with Ethical, Legal, and Societal Implications

Building the Skills for Tomorrow’s Intelligent Systems

🎥 Related Video – Why Study Emerging Technologies

Autonomous systems—from self-driving cars to intelligent drones—are built on the foundation of emerging technologies like AI, robotics, and sensor fusion. Understanding these systems in context helps students grasp the broader innovation landscape, including ethical, technical, and societal dimensions.

This video from our Why Study series highlights eight reasons why emerging technologies matter—emphasizing interdisciplinary knowledge, future-ready skills, and leadership in a tech-driven world. It provides essential context for anyone exploring autonomous systems.

Autonomous Systems: Conclusion

Autonomous systems represent a transformative frontier in science and technology, combining advances in artificial intelligence, robotics, control engineering, and sensor technologies to create machines capable of operating without constant human oversight. These systems perceive their environments, make decisions, and take actions to fulfill complex tasks across diverse domains—from autonomous vehicles and surgical robots to warehouse automation, military drones, and planetary rovers.

This page introduces the foundational technologies driving autonomy, such as AI, machine learning, sensor fusion, IoT, and edge computing. It explores key areas of development including perception, control systems, navigation, human-machine interaction, and system validation. Through detailed case studies—such as Tesla’s self-driving technologies, the MQ-9 Reaper drone, and NASA’s Perseverance rover—it illustrates the practical applications and real-world impacts of autonomous systems. The discussion also highlights pressing challenges in safety, ethics, cybersecurity, and regulation, while offering insights into future directions such as collaborative robots, smart infrastructure, and autonomous supply chains.

Designed for students, educators, and professionals, this page provides a comprehensive yet accessible overview of the rapidly evolving field of autonomous systems, preparing learners to critically engage with its technological, societal, and ethical dimensions.

Autonomous Systems: Review Questions and Model Answers

These review questions help you test your understanding of sensing, decision-making, and control in autonomous systems, and link the ideas to realistic situations you may meet in further study.

1. A self-driving car and a traditional cruise-control system are both used on a motorway. How does the autonomy of the car go beyond what cruise control can do?

Answer: Traditional cruise control only holds a chosen speed; the driver must still steer, brake, and watch the road. A self-driving car must sense lane markings, road signs, nearby vehicles, and road conditions; it then chooses when to accelerate, brake, signal, and change lanes. In other words, the autonomous car uses multiple sensors and decision-making algorithms to handle complex, changing situations that go far beyond the fixed behaviour of cruise control.

2. Imagine a delivery drone that must drop a parcel in a small courtyard between buildings. Which sensors would be useful, and what information would each one provide?

Answer: GPS can provide approximate position and altitude outdoors, although accuracy often degrades between buildings where satellite signals are blocked or reflected. Cameras or lidar help detect buildings, trees, and people in the courtyard. Ultrasonic or infrared range sensors can measure distance to the ground and nearby walls during landing. An inertial measurement unit tracks orientation and motion to keep the drone stable. Together, these sensors give the drone enough information to navigate safely and land in the correct spot.

3. An autonomous underwater vehicle is sent to map the sea floor. Why might engineers choose to combine several different sensing methods instead of relying on just one?

Answer: No single sensor works perfectly in all underwater conditions. For example, sonar provides good range measurements but may be affected by noise or reflections, while inertial sensors drift over time. Combining multiple methods—such as sonar, depth sensors, inertial units, and occasionally surface GPS—allows the vehicle to cross-check readings, reduce uncertainty, and maintain an accurate estimate of its position and surroundings.
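One common way to combine independent readings of the same quantity is inverse-variance weighting, where more trustworthy sensors count for more. The sketch below assumes each sensor reports a value and a variance; the function name `fuse` and the example depth figures are hypothetical.

```python
def fuse(estimates):
    """Fuse independent estimates of one quantity.

    estimates is a list of (value, variance) pairs. Each value is weighted
    by 1 / variance, and the fused variance is smaller than any single input.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    sonar_depth = (100.0, 4.0)       # metres, variance in square metres
    pressure_depth = (104.0, 4.0)
    print(fuse([sonar_depth, pressure_depth]))   # (102.0, 2.0)
```

With two equally reliable sensors the result is their average, and the variance halves, which illustrates why cross-checking reduces uncertainty.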

4. Give an example of how a simple control algorithm for a mobile robot might respond when an obstacle suddenly appears in front of it.

Answer: When distance sensors detect that an obstacle has entered a critical range, the control algorithm could first command the robot to reduce speed, then stop. Next, it might turn slightly left or right while checking for free space, and finally resume forward motion along the new heading. This sequence of actions is based on rules that link sensor readings to safe movements.
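The sequence described in this answer can be sketched as a small rule-based state machine. The mode names, the `step` function, and the 1.5 m and 0.5 m thresholds are illustrative assumptions, not values from a specific robot.

```python
from enum import Enum


class Mode(Enum):
    CRUISE = "cruise"   # drive forward at normal speed
    SLOW = "slow"       # obstacle inside the caution range
    STOP = "stop"       # obstacle inside the critical range
    TURN = "turn"       # rotate in place while looking for free space


def step(mode, front_m, slow_at=1.5, stop_at=0.5):
    """Return the next mode given the current mode and the front distance."""
    if mode in (Mode.STOP, Mode.TURN):
        # Keep turning until the path ahead is clear, then resume.
        return Mode.CRUISE if front_m > slow_at else Mode.TURN
    if front_m <= stop_at:
        return Mode.STOP
    if front_m <= slow_at:
        return Mode.SLOW
    return Mode.CRUISE


if __name__ == "__main__":
    mode = Mode.CRUISE
    for distance in (3.0, 1.2, 0.4, 0.4, 0.9, 2.5):
        mode = step(mode, distance)
        print(f"front {distance:.1f} m -> {mode.name}")
```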

5. In a warehouse, management wants to introduce autonomous robots to move goods between shelves and packing stations. List two potential benefits and two challenges of this decision.

Answer: Benefits include higher throughput and consistency (robots can work continuously without fatigue) and improved safety if robots handle heavy or repetitive lifting. Challenges include the need to redesign routes and shelf layouts for robot access, and the requirement for robust safety systems so that robots can coexist with human workers without collisions or confusion.

6. Explain how machine learning could help an agricultural robot distinguish between crops and weeds.

Answer: Engineers can train a machine-learning model on thousands of labelled images showing crops and weeds in different lighting and growth stages. The robot’s camera feeds new images into the trained model, which classifies each plant based on learned patterns. The robot can then decide whether to water, fertilise, or remove a plant, improving accuracy over simple rule-based colour or shape thresholds.
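The train-then-classify workflow can be illustrated with a deliberately tiny model. The sketch below uses a nearest-centroid classifier on two made-up numeric features per plant (a greenness score and a leaf aspect ratio); real agricultural robots typically run neural networks on camera images, so the features, values, and function names here are assumptions for illustration only.

```python
import math


def train_centroids(samples):
    """Average the feature vectors of each label.

    samples is a list of (features, label) pairs with equal-length features.
    """
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is closest to the new features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


if __name__ == "__main__":
    labelled = [
        ((0.80, 0.30), "crop"), ((0.90, 0.20), "crop"),
        ((0.40, 0.70), "weed"), ((0.30, 0.80), "weed"),
    ]
    model = train_centroids(labelled)
    print(classify(model, (0.85, 0.25)))   # crop
    print(classify(model, (0.35, 0.75)))   # weed
```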

7. A research team is planning to deploy an autonomous system in a disaster zone after an earthquake. What design priorities should they consider to ensure that the system is useful and reliable?

Answer: Priorities include robustness to dust, debris, and unstable terrain; reliable communication even when infrastructure is damaged; long battery life or easy recharging; and simple, clear interfaces so rescue workers can understand what the system is doing. The team must also design fail-safe behaviours so that if sensors fail or communication is lost, the system moves to a safe state rather than posing additional risk.
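A fail-safe for lost communication is often built around a watchdog: if no heartbeat arrives within a timeout, the system drops to a safe state. The sketch below passes timestamps in explicitly so it can be tested without real clocks; the class name `LinkWatchdog`, the mode strings, and the 2-second timeout are hypothetical.

```python
class LinkWatchdog:
    """Reports SAFE_STOP whenever the operator link has been silent too long."""

    def __init__(self, timeout_s):
        self.timeout_s = timeout_s
        self.last_heartbeat_s = None   # no heartbeat received yet

    def heartbeat(self, now_s):
        """Record that a message from the operator arrived at time now_s."""
        self.last_heartbeat_s = now_s

    def mode(self, now_s):
        """Return 'OPERATE' only while the link is known to be alive."""
        if self.last_heartbeat_s is None:
            return "SAFE_STOP"
        if now_s - self.last_heartbeat_s > self.timeout_s:
            return "SAFE_STOP"
        return "OPERATE"


if __name__ == "__main__":
    watchdog = LinkWatchdog(timeout_s=2.0)
    watchdog.heartbeat(now_s=1.0)
    print(watchdog.mode(now_s=2.5))   # OPERATE
    print(watchdog.mode(now_s=3.5))   # SAFE_STOP
```

Defaulting to the safe state before any heartbeat has been received reflects the same principle: the system must earn permission to operate rather than assume it.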

8. Why is cybersecurity an important consideration when deploying networked autonomous systems in a factory?

Answer: Networked autonomous systems often exchange control commands and sensor data over digital networks. If attackers gain access, they might disrupt operations, cause unsafe behaviour, or steal sensitive production information. Strong cybersecurity—such as encryption, access control, and regular software updates—is therefore essential to protect both safety and intellectual property.
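One building block for protecting control commands is message authentication, so that a robot can reject commands that were forged or altered in transit. The sketch below uses Python's standard `hmac` and `hashlib` modules; the key, the command format, and the helper names `sign_command` and `verify_command` are assumptions, and a real deployment would also need key management, encryption, and replay protection.

```python
import hashlib
import hmac


def sign_command(key: bytes, command: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the command."""
    return hmac.new(key, command, hashlib.sha256).hexdigest()


def verify_command(key: bytes, command: bytes, tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign_command(key, command), tag)


if __name__ == "__main__":
    shared_key = b"demo-key-not-for-production"   # placeholder secret
    command = b"robot_7:move_to:shelf_12"
    tag = sign_command(shared_key, command)
    print(verify_command(shared_key, command, tag))                       # True
    print(verify_command(shared_key, b"robot_7:move_to:exit_door", tag))  # False
```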

9. During testing, an autonomous robot occasionally makes unsafe decisions in rare conditions. What steps can engineers take before allowing the robot to operate in public spaces?

Answer: Engineers should analyse logs to understand the failure modes, improve sensor coverage or algorithms, and test the updated system extensively in simulation and controlled environments. They may introduce conservative safety rules, such as reduced speeds or larger safety margins, and design emergency stop mechanisms. Regulatory approval and independent safety audits may also be required before public deployment.
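A conservative speed limit can be derived from how far the robot can reliably see. Assuming constant deceleration and a fixed reaction delay, stopping distance is `v * t + v**2 / (2 * a)`, and the sketch below solves that relation for the highest speed that still stops within the sensing range minus a safety margin. The function names and the example numbers are hypothetical.

```python
import math


def stopping_distance(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction delay plus the braking phase."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def max_safe_speed(sensing_range_m, margin_m, reaction_s, decel_mps2):
    """Highest speed whose stopping distance fits inside range minus margin."""
    usable_m = sensing_range_m - margin_m
    if usable_m <= 0.0:
        return 0.0   # the margin consumes the whole sensing range
    # Positive root of v**2 / (2a) + v*t - usable = 0.
    return decel_mps2 * (
        -reaction_s + math.sqrt(reaction_s ** 2 + 2.0 * usable_m / decel_mps2)
    )


if __name__ == "__main__":
    for margin in (1.0, 2.0, 4.0):
        v = max_safe_speed(12.0, margin, reaction_s=0.5, decel_mps2=5.0)
        print(f"margin {margin:.1f} m -> speed limit {v:.2f} m/s")
```

Increasing the margin lowers the permitted speed, which is the trade-off behind the conservative rules mentioned in the answer.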

10. Reflect on your own learning: which aspect of autonomous systems—sensing, decision-making, or actuation—do you feel least confident about, and what can you do to strengthen it before university?

Answer: Answers will vary by student, but a good response identifies a specific area (for example, “I am less confident with control algorithms”), explains why, and outlines concrete actions such as revising relevant physics and mathematics, practising programming exercises, building a small robot or simulation project, or following an introductory online course. This reflection helps students plan targeted preparation for further study.

Autonomous Systems: Thought-Provoking Questions

1. How might the evolution of autonomous systems reshape the future of transportation?

Answer: The evolution of autonomous systems is expected to revolutionize transportation by enabling self-driving vehicles that operate with increased safety and efficiency. This technology could reduce traffic accidents, optimize route planning, and lower emissions through improved fuel efficiency and coordinated vehicle behavior. The integration of real-time data and machine learning algorithms allows vehicles to adapt to changing traffic conditions and anticipate hazards, leading to smoother traffic flow. Over time, this may result in a significant shift in urban planning and mobility management, transforming how people commute and transport goods.

Furthermore, the widespread adoption of autonomous transportation could lead to new business models, such as ride-sharing fleets and on-demand public transit services. These changes may also influence regulatory frameworks and insurance practices, as traditional metrics of driver responsibility are redefined. The societal impact could be profound, affecting labor markets, urban design, and environmental sustainability. As such, the future of transportation hinges on balancing technological advances with thoughtful policy-making and public acceptance.

2. What ethical concerns arise from the widespread use of autonomous systems in daily life?

Answer: The deployment of autonomous systems in everyday settings raises ethical issues such as accountability, privacy, and the potential for bias in decision-making algorithms. When a machine makes a decision that results in harm or loss, determining liability becomes complex. Moreover, the data collected by autonomous systems can infringe on personal privacy if not managed properly. These concerns necessitate the development of ethical guidelines and robust regulatory oversight to ensure that technology serves the public good.

In addition, there is the challenge of ensuring that these systems do not perpetuate or amplify existing societal inequalities. Bias in data or algorithm design could lead to discriminatory practices, further marginalizing vulnerable groups. The ethical framework surrounding autonomous systems must address these issues by promoting transparency, fairness, and accountability. Engaging a diverse range of stakeholders in the development process is essential to create ethical standards that are both inclusive and effective.

3. How can autonomous systems contribute to more sustainable industrial practices?

Answer: Autonomous systems can drive sustainability in industrial practices by optimizing resource use, reducing waste, and improving operational efficiency. They enable precise control over manufacturing processes, leading to less material waste and lower energy consumption. Additionally, real-time monitoring and predictive maintenance can prevent equipment failures and reduce downtime. These improvements contribute to a smaller environmental footprint and promote more sustainable industrial operations.

Moreover, the integration of autonomous systems facilitates the adoption of renewable energy sources by managing energy distribution more effectively. Smart grids and automated energy management systems can balance supply and demand, reducing reliance on fossil fuels. This convergence of technology and sustainability practices not only enhances productivity but also supports global efforts to mitigate climate change. The long-term benefits include cost savings, enhanced environmental stewardship, and a more resilient industrial infrastructure.

4. In what ways might autonomous systems alter the structure of the workforce in high-tech industries?