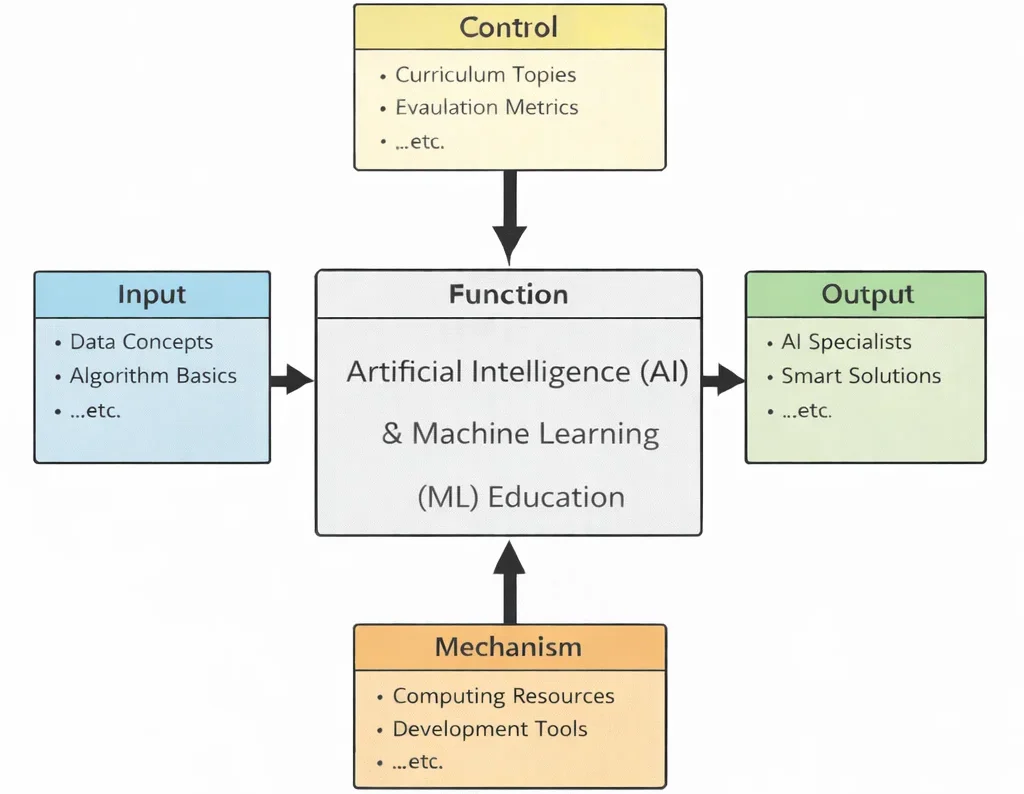

AI and machine learning education teaches students to treat data as something more than numbers—it becomes evidence, with all the responsibilities that evidence carries. This diagram shows that transformation clearly. The inputs bring the raw ingredients: how data is formed, how algorithms learn, and how models behave when the world refuses to be tidy. The controls—curriculum topics and evaluation metrics—serve as the guardrails, so learning does not become a collection of clever tricks; students must show that their results are valid, explainable, and repeatable. Inside the central function, learners practice a modern craft: they learn to frame problems, choose methods, train and tune models, and then ask the deeper questions—what failed, why it failed, and whether success is real or merely accidental. The mechanisms—computing resources and development tools—turn theory into work that can be tested and improved, letting students experiment at scale while keeping track of assumptions and limitations. The outputs are what society actually needs from AI: specialists who can think with discipline, and smart solutions that are useful because they are built on careful reasoning, not on hype.

This IDEF0 (Input–Control–Output–Mechanism) diagram presents Artificial Intelligence (AI) & Machine Learning (ML) Education as a structured learning process. Inputs on the left, such as data concepts and algorithm basics, represent the foundational knowledge students need to build and understand learning systems. Controls at the top, such as curriculum topics and evaluation metrics, define what is taught and how mastery is assessed, guiding learners toward clear standards rather than vague experimentation. The central function converts these inputs and controls into applied capability through study, practice, and iterative problem-solving. Outputs on the right, such as AI specialists and smart solutions, capture the intended outcomes: learners who can design and deploy useful AI systems responsibly. Mechanisms at the bottom, such as computing resources and development tools, provide the practical environment for training models, testing ideas, and building real applications.

AI & Machine Learning turn data into predictions and decisions. Start with a simple pipeline: collect and clean data, define a baseline, try a modest model, validate honestly, and record trade-offs. Along the way you’ll meet the core ideas—loss functions, bias–variance, class imbalance, and cross-validation—and see why a clear baseline often beats a fancy model. The goal is reproducibility and judgement, not buzzwords.
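As a minimal sketch of the baseline-first habit described above, the snippet below compares a majority-class baseline with a one-feature threshold rule on a held-out split. The toy data (hours studied versus pass/fail) and every function name are hypothetical and chosen only for illustration.

```python
from collections import Counter

def majority_baseline(train_labels):
    """Return a predictor that always outputs the most common training label."""
    most_common = Counter(train_labels).most_common(1)[0][0]
    return lambda _x: most_common

def threshold_model(train_x, train_y):
    """Pick the cut-off on one feature that gives the best training accuracy."""
    best_cut, best_acc = None, -1.0
    for cut in sorted(set(train_x)):
        acc = sum((x >= cut) == bool(y) for x, y in zip(train_x, train_y)) / len(train_y)
        if acc > best_acc:
            best_cut, best_acc = cut, acc
    return lambda x: int(x >= best_cut)

def accuracy(predict, xs, ys):
    """Fraction of examples the predictor labels correctly."""
    return sum(predict(x) == y for x, y in zip(xs, ys)) / len(ys)

# Hypothetical toy data: hours studied -> passed (1) or not (0).
train_x = [1, 2, 3, 4, 5, 6, 7, 8]
train_y = [0, 0, 0, 0, 1, 0, 1, 1]
test_x = [2, 3, 6, 9]   # held out, never used for fitting
test_y = [0, 0, 1, 1]

baseline_acc = accuracy(majority_baseline(train_y), test_x, test_y)
model_acc = accuracy(threshold_model(train_x, train_y), test_x, test_y)
print(f"baseline={baseline_acc:.2f} model={model_acc:.2f}")
```

Recording both numbers side by side is the point: a model only earns its complexity if it beats the baseline on data it has not seen.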

From there, choose a track that fits your goals: supervised learning, unsupervised learning, or reinforcement learning; apply deep learning to computer vision or natural language; and pair models with data engineering & analytics so they work in production. We also keep real-world constraints in view—privacy, fairness, latency, cost, and model drift.

Overview & Study Paths

Artificial Intelligence (AI) has become one of the most transformative domains in modern STEM education and research. Closely linked with information technology, it enables machines to learn, adapt, and perform tasks that once demanded human cognition. At its foundation are methods such as supervised learning, unsupervised learning, and reinforcement learning, each shaping how systems understand data and make decisions.

The rise of data science and analytics supplies algorithms with massive, complex datasets from which to uncover patterns. Much of this work runs on scalable cloud computing infrastructure, with flexible cloud deployment models supporting rapid experimentation and deployment across industries.

Specialized subfields such as deep learning and computer vision extend AI’s reach to image recognition, autonomous vehicles, and surveillance systems. Meanwhile, natural language processing (NLP) enables machines to understand and generate human language, powering chatbots, search, and voice-activated assistants.

Classic expert systems remain valuable where auditable, rule-based logic is required, while newer techniques continue to push boundaries. In concert with IoT and smart technologies, AI supports real-time decision-making in smart homes, factories, and connected vehicles.

The link between AI and emerging technologies grows stronger each year. In aerospace, AI aids the operation of satellite technology and the optimization of space exploration technologies. In manufacturing, it powers precision automation through smart manufacturing and Industry 4.0, improving efficiency and reducing waste.

Quantum advances may also reshape AI. Concepts from quantum computing, including qubits, superposition, quantum gates, and entanglement, suggest new pathways for accelerating learning and optimization.

AI also strengthens machine autonomy in complex environments. In robotics and autonomous systems, intelligent algorithms fuse perception, planning, and control to adapt in real time—from planetary rovers to disaster response. Underpinning these capabilities are advances in internet and web technologies, which enable distributed models and seamless platform integration.

As the field evolves, its reliance on rigorous mathematics and careful algorithmic reasoning remains fundamental. Students entering AI stand at the intersection of computation, ethics, and creativity—well prepared for the complex challenges ahead.

Illustration for the AI & ML hub at Prep4Uni.online. It reflects the page’s scope—supervised and unsupervised learning, deep learning, computer vision, NLP, reinforcement learning, expert systems, and robotics & autonomous systems—linking study paths to real-world applications.

Exploring Artificial Intelligence – Learning, Reasoning, and Intelligent Systems

Artificial Intelligence (AI) asks how machines can perceive, learn, reason, and act in complex environments. On Prep4Uni.online, the AI hub is organised into key areas that connect school-level computing to university-level AI: learning from data, neural and deep models, decision-making and control, and applications in language, vision, and robotics. Together, these pages help you see how algorithms move from abstract maths and code to systems that power search engines, recommendation systems, autonomous vehicles, and modern research tools.

AI – Overview

Use this page as your starting map for artificial intelligence. It introduces core questions such as what counts as “intelligent” behaviour, how data and algorithms work together, and where AI is used in science, industry, and everyday life. You will find guidance on how to move between learning paradigms, deep learning, language and vision systems, expert systems, and robotics – and how these strands support further study in computer science, data science, engineering, and cognitive science.

Supervised Learning

Focuses on algorithms that learn from labelled examples, such as predicting exam scores from study data or classifying emails as spam or not spam. This page introduces training and test sets, loss functions, overfitting, and performance metrics – foundations for many real-world AI systems in finance, health, and recommendation engines.

Unsupervised Learning

Explores how algorithms discover patterns in unlabelled data. You will meet clustering, anomaly detection, and dimensionality reduction, and see how these tools are used for customer segmentation, fraud detection, and exploring large datasets when we don’t yet know what we are looking for.

Deep Learning

Introduces neural networks and deep architectures that power today’s image recognition, speech recognition, and large language models. This page explains layers, activation functions, and backpropagation, and helps you connect school mathematics (vectors, matrices, functions) to modern deep-learning practice.

Reinforcement Learning

Looks at agents that learn through trial and error using rewards and penalties. You will explore states, actions, policies, and the balance between exploration and exploitation, and see how these ideas apply to game-playing AIs, industrial control, recommendation systems, and robotics.

Natural Language Processing (NLP)

Covers how computers work with human language, from tokenising text and modelling grammar to sentiment analysis, translation, and conversational agents. This page connects linguistic concepts to the AI techniques behind search engines, chatbots, writing assistants, and accessibility tools.

Computer Vision

Explains how machines interpret visual information from images and video. You will meet ideas such as feature extraction, convolutional neural networks, object detection, and segmentation, and see how computer vision is used in medicine, autonomous vehicles, security systems, and creative media.

Expert Systems

Revisits rule-based systems that encode human expertise in “if–then” rules, such as early medical diagnosis tools and decision-support systems. This page contrasts symbolic AI with data-driven machine learning, helping you understand where each approach is strong and how hybrid systems are emerging.

Robotics

Connects AI to the physical world. You will explore how robots sense their environment, build internal models, plan actions, and execute movements safely. This subpage links perception, control, and learning in applications ranging from industrial robots and drones to assistive devices and planetary rovers.


Core Concepts and Techniques in AI & ML

In the early stages of exploring AI and machine learning, students build a strong foundation by mastering the core learning paradigms and model families that recur in real projects.

Supervised & Unsupervised Learning

Supervised learning trains on labeled examples to map inputs to known targets. Through tasks like classification and regression, learners see how features, loss functions, and generalization fit together. See: Supervised Learning.
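To make the link between features, a loss function, and generalization concrete, here is a small sketch of one-variable least-squares regression. The data points are invented for illustration, and the closed-form fit is just one simple way to minimize mean squared error.

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def mean_squared_error(slope, intercept, xs, ys):
    """Loss: average squared gap between predictions and targets."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical labelled examples (feature x, target y).
train_x, train_y = [1, 2, 3, 4, 5], [2.9, 5.1, 7.0, 9.2, 10.8]
test_x, test_y = [6, 7], [13.1, 14.9]   # held out to check generalization

slope, intercept = fit_line(train_x, train_y)
train_loss = mean_squared_error(slope, intercept, train_x, train_y)
test_loss = mean_squared_error(slope, intercept, test_x, test_y)
print(f"slope={slope:.2f} intercept={intercept:.2f} "
      f"train_mse={train_loss:.4f} test_mse={test_loss:.4f}")
```

Comparing the training loss with the held-out loss is the simplest check on whether the fitted rule generalizes.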

Unsupervised learning discovers structure without labels. Clustering groups similar items, while dimensionality reduction simplifies complex data for analysis and downstream models. See: Unsupervised Learning.
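Clustering can be sketched in a few lines. The example below runs a bare-bones k-means on one-dimensional points; the data, the starting centers, and the fixed iteration count are all assumptions made for illustration, and real projects would normally use a library implementation.

```python
def kmeans_1d(points, centers, iterations=10):
    """Alternate between assigning points to the nearest center and re-centering."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

# Hypothetical unlabelled measurements with two visible groups.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centers, clusters = kmeans_1d(points, centers=[0.0, 6.0])
print(centers, clusters)
```

No labels are supplied anywhere; the grouping comes only from distances between points.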

Neural Networks & Decision Trees

Neural networks learn non-linear relationships using layers, weights, and activation functions, powering perception and language tasks at scale. See: Deep Learning.
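A tiny forward pass shows how layers, weights, and an activation function combine to express a non-linear rule. In this sketch the weights are set by hand (not learned) so that a two-unit hidden layer with ReLU computes XOR, a function no single linear layer can represent. The helper names are illustrative.

```python
def relu(value):
    """Activation function: pass positives through, clamp negatives to zero."""
    return max(0.0, value)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum plus bias, then activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def tiny_network(x1, x2):
    # Hand-picked weights (an assumption for illustration, not trained values).
    hidden = dense([x1, x2], weights=[[1, 1], [1, 1]], biases=[0, -1], activation=relu)
    (output,) = dense(hidden, weights=[[1, -2]], biases=[0], activation=lambda v: v)
    return output

xor_outputs = [tiny_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)
```

Training would find such weights automatically via backpropagation; fixing them by hand keeps the focus on what the layers compute.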

Decision trees split on informative features to produce interpretable rules; ensembles such as random forests and boosting often provide strong baselines, though they are harder to interpret than a single tree. See: Supervised Learning.
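One common way to decide which split is "informative" is Gini impurity. The sketch below scores every candidate threshold on a single feature and keeps the one with the lowest weighted impurity; the feature values and labels are made up, and Gini is only one of several splitting criteria in use.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(values, labels):
    """Return (threshold, weighted impurity) for the best 'value < threshold' rule."""
    best_threshold, best_impurity = None, float("inf")
    for threshold in sorted(set(values))[1:]:
        left = [y for x, y in zip(values, labels) if x < threshold]
        right = [y for x, y in zip(values, labels) if x >= threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if weighted < best_impurity:
            best_threshold, best_impurity = threshold, weighted
    return best_threshold, best_impurity

# Hypothetical feature values with two classes.
values = [1, 2, 3, 10, 11, 12]
labels = ["small", "small", "small", "large", "large", "large"]
threshold, impurity = best_split(values, labels)
print(f"if value < {threshold}: small, else: large (impurity {impurity})")
```

The resulting rule reads as a plain if-then statement, which is where a single tree gets its interpretability.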

Deep Learning Architectures

Modern architectures learn hierarchical representations. Convolutional networks excel at images, while sequence models and transformers handle text and multimodal data, driving applications from visual recognition to summarization. See: Deep Learning · Computer Vision · Natural Language Processing.

Reinforcement Learning (RL)

In RL, an agent learns by interacting with an environment and optimizing rewards over time. Key ideas include exploration vs. exploitation, policies, and value functions, which are foundations for robotics, game-playing systems, and resource optimization. See: Reinforcement Learning · Robotics & Autonomous Systems.
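The ideas of exploration, policies, and value functions can be seen in a small tabular Q-learning sketch. The environment here is a hypothetical five-cell corridor with a reward only at the right-hand end; the hyperparameter values are arbitrary illustrative choices.

```python
import random

def train_q_table(n_states=5, episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a corridor; action 0 = left, action 1 = right."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # step cap per episode
            if rng.random() < epsilon:
                action = rng.randrange(2)                       # explore
            else:
                action = 0 if q[state][0] > q[state][1] else 1  # exploit
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            future = 0.0 if next_state == goal else gamma * max(q[next_state])
            # Move the estimate toward reward plus discounted future value.
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
            if state == goal:
                break
    return q

def greedy_policy(q):
    """Best-known action for every non-goal state."""
    return ["right" if right >= left else "left" for left, right in q[:-1]]

q_table = train_q_table()
policy = greedy_policy(q_table)
print(policy)
```

The Q-table plays the role of the value function, the greedy read-out is the policy, and `epsilon` sets how often the agent explores instead of exploiting what it already knows.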

Language Models & NLP

Neural language models power translation, search, chat, and summarization. By working with tokenization, embeddings, attention, and transformers, students see how large language models achieve fluency and controlled generation. See: Natural Language Processing.
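Attention is easier to grasp with numbers. The following sketch implements scaled dot-product attention for a single query in plain Python. The two-dimensional vectors are invented stand-ins for learned embeddings, and real models apply this across many queries and heads at once.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output

# Hypothetical embeddings: the first key points the same way as the query.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights], [round(o, 3) for o in output])
```

The key most similar to the query receives the largest weight, so its value dominates the output; that is the sense in which the model "focuses".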

Expert Systems & Knowledge-Based AI

Rule-based systems encode expert knowledge in if-then rules and inference engines. They remain valuable for audits, safety-critical checks, and hybrid pipelines that combine symbolic logic with learned models. See: Expert Systems.
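A very small inference engine illustrates the idea. The sketch below uses forward chaining: it keeps applying if-then rules until no new facts appear. The facts and rules describe a hypothetical plant-safety check and are not drawn from any real system.

```python
def forward_chain(facts, rules):
    """Apply (conditions -> conclusion) rules until nothing new can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known

# Hypothetical rule base: each rule is (required facts, derived fact).
rules = [
    (("temperature_high", "pressure_high"), "overload_risk"),
    (("overload_risk", "operator_absent"), "trigger_shutdown"),
    (("door_open",), "sound_alarm"),
]
derived = forward_chain({"temperature_high", "pressure_high", "operator_absent"}, rules)
print(sorted(derived))
```

Because every conclusion traces back to explicit rules, the reasoning can be audited line by line, which is the property that keeps such systems useful in safety-critical checks.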

Robotics & Autonomous Systems

Real-world autonomy blends perception, planning, and control with reliable execution in dynamic environments, integrating vision, language, and RL into embodied systems. See: Robotics & Autonomous Systems.

Refining Skills and Ensuring Fairness

Beyond writing a model, students learn to tune thoughtfully, evaluate rigorously, and build responsibly.

Fine-Tuning Parameters

Systematic hyperparameter tuning—learning rate, batch size, regularization, and network depth/width—improves accuracy, stability, and compute efficiency while reducing overfitting.
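The effect of one hyperparameter, the learning rate, can be shown with a simple sweep. This sketch runs gradient descent on the toy loss (w - 3)^2 for several candidate rates and keeps the one with the lowest final loss. The loss function and candidate values are assumptions for illustration; in a real project the comparison would use validation data, not the training objective.

```python
def loss(w):
    """Toy objective with its minimum at w = 3."""
    return (w - 3.0) ** 2

def gradient(w):
    """Derivative of the toy objective."""
    return 2.0 * (w - 3.0)

def gradient_descent(learning_rate, steps=50, start=0.0):
    """Take a fixed number of steps downhill from the starting point."""
    w = start
    for _ in range(steps):
        w -= learning_rate * gradient(w)
    return w

candidate_rates = [0.001, 0.01, 0.1, 1.1]
final_losses = {lr: loss(gradient_descent(lr)) for lr in candidate_rates}
best_rate = min(final_losses, key=final_losses.get)

for lr, value in final_losses.items():
    print(f"learning_rate={lr}: final loss {value:.4g}")
print("best:", best_rate)
```

The smallest rates barely move in 50 steps, while the largest overshoots on every step and diverges, so the search lands on a value in between.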

Evaluating Model Performance

Metrics should match the task: accuracy, precision/recall, F1, and ROC-AUC for classification; mean squared error for regression. Students practice validation/test splits, cross-validation, and interpreting confusion matrices before deployment.
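As a worked illustration, the snippet below builds a binary confusion matrix and derives accuracy, precision, recall, and F1 from it. The labels and predictions are invented, and the function names are illustrative.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(y_true)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical held-out labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(confusion_counts(y_true, y_pred), metrics)
```

Here precision and recall differ, which accuracy alone would hide: the model finds most positives but also raises two false alarms.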

Mitigating Biases in Data

Data choices shape outcomes. Learners audit datasets, engineer features carefully, and apply fairness-aware techniques to reduce disparate impact—so systems serve users equitably.
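One simple audit is to compare how often each group receives a positive decision. The sketch below computes per-group selection rates and their ratio, a common first look at disparate impact. The group labels and decisions are hypothetical, and what ratio counts as acceptable depends on context and is not settled by this code.

```python
def selection_rates(groups, decisions):
    """Share of positive decisions (1) within each group."""
    totals, positives = {}, {}
    for group, decision in zip(groups, decisions):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    return min(rates.values()) / max(rates.values())

# Hypothetical model decisions for two groups of five people each.
groups = ["A"] * 5 + ["B"] * 5
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A low ratio does not by itself prove unfairness, but it flags where the dataset and features deserve a closer audit.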

By immersing themselves in these ideas and practices, students emerge able to build, evaluate, and improve AI systems with both accuracy and responsibility in mind.

Practical Applications of AI & ML

Applied projects turn concepts into working systems and help students build a credible portfolio for university and industry.

Natural Language Processing (NLP)

Summarize articles, translate text, extract entities, and power question–answering. Projects introduce tokenization, embeddings, attention, and robust evaluation. Explore NLP

Computer Vision

Classify, detect, and segment images and video for quality control, medical imaging, visual search, and safety. Practice dataset curation, augmentation, and efficient on-device inference. Explore Computer Vision

Deep Learning

Train and fine-tune CNNs and transformers that power modern NLP and vision systems. Learn transfer learning, prompt/few-shot tuning, and metrics beyond accuracy. Explore Deep Learning

Interdisciplinary Opportunities

AI & ML blend with many fields. These examples show how three core areas—Deep Learning, Computer Vision, and NLP—turn ideas into impact.

Engineering & Manufacturing

Automated inspections flag defects with Computer Vision; forecasting and anomaly detection ride on Deep Learning models.

Natural & Environmental Sciences

Satellite and drone imagery power land-use and habitat mapping via Computer Vision; climate and hydrology teams use Deep Learning for nowcasting and pattern discovery, while reports and field notes are mined with NLP.

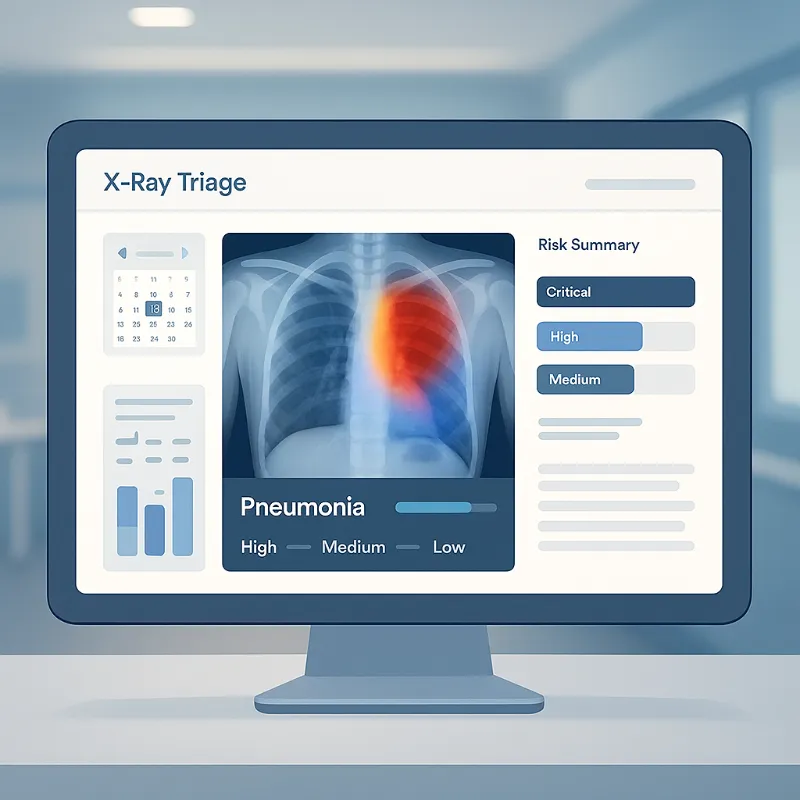

Health & Biomedicine

Imaging workflows combine Computer Vision and Deep Learning for detection and triage; clinical notes and guidelines are structured with NLP.

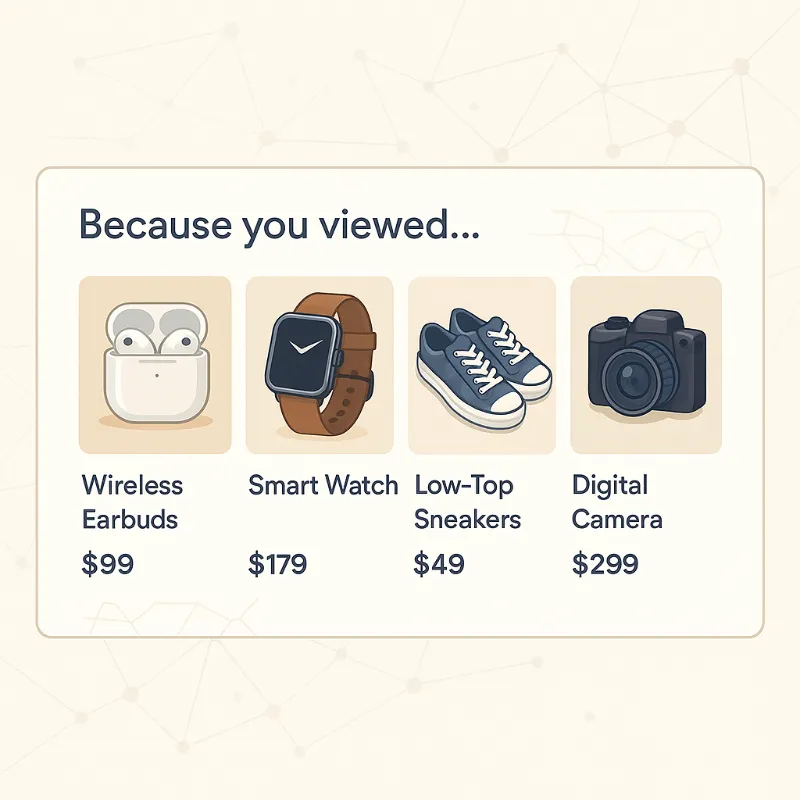

Business & Operations

Demand forecasting and recommendations leverage Deep Learning; customer feedback and policy text are analyzed using NLP; visual search in catalogs taps Computer Vision.

Humanities & Social Research

Large corpora—archives, speeches, literature—become analyzable with NLP; digitization and restoration apply Computer Vision; embeddings and representation learning from Deep Learning surface themes and links.

Education, Arts & Media

Adaptive learning and content generation use Deep Learning; tagging, moderation, and visual search lean on Computer Vision; editorial assistance and summarization rely on NLP.

Across these domains, students assemble portfolios that demonstrate technical skill and real-world relevance.

From Foundations to Research & Careers

Strong fundamentals open doors to lab work, internships, and product prototyping—while building confidence for fast-moving courses and industries.

University Research Readiness

Arrive ready to run baselines, curate datasets, and reproduce results. Visual pipelines lean on Computer Vision; sequence and multimodal work uses NLP; advanced representation learning grows from Deep Learning.

Industry Pathways & Roles

Skills translate into roles like ML engineer, data scientist, and applied researcher. Teams value production-ready models from Deep Learning, practical pipelines in Computer Vision, and language tools built with NLP.

Portfolio that Stands Out

Show tangible work: a detector/segmenter in Computer Vision, a summarizer or classifier in NLP, and a fine-tuned modern model from Deep Learning. Document datasets, metrics, and error analysis.

Ethics, Safety & Leadership

Pair technical fluency in Deep Learning and NLP with responsible practice—privacy, fairness, robustness, interpretability, and clear human oversight.

With this base, students are ready for university research and real-world roles building useful AI systems.

AI in Action: Quick Examples

Short, real-world cases that map directly to Deep Learning, Computer Vision, and NLP.

Why Study Artificial Intelligence

Understanding the Technology Reshaping the World

Exploring Core Ideas in Learning & Data

Solving Complex Problems Across Industries

Engaging Ethical, Social & Philosophical Questions

Preparing for Advanced Study & Careers

AI & ML: Quick Answers

What’s the difference between AI and ML?

AI is the goal: useful behavior from machines. ML is one way to reach it, by learning that behavior from data. Start with supervised vs unsupervised learning, then explore reinforcement learning.

Which math and computing skills should I review?

Refresh mathematics (algebra, calculus, probability) and basic Python. Then move to deep learning for modern architectures.

How do I choose a first project?

Pick a small, real dataset and a clear metric. For images, try computer vision; for text, try NLP. Keep a log of errors and iterations.

Where do AI models usually run?

Most teams train and serve models on cloud computing platforms, often alongside data science & analytics pipelines.

How is AI used in robotics?

Robotics blends perception, planning, and control, often with RL. See Robotics & Autonomous Systems for examples.

AI & ML Glossary

- Baseline model: A simple, honest starting point used to judge whether a more complex model is actually better. Sets the bar for improvement.

- Loss function: A numeric measure of error the model tries to minimize (e.g., MSE, cross-entropy). Guides training and model comparison.

- Overfitting: When a model memorizes training data and performs poorly on new data. Prevent with validation, regularization and early stopping.

- Cross-validation: Rotate train/validation splits to get a robust estimate of performance. Helps avoid lucky or unlucky splits.

- Precision / Recall: Precision: how many predicted positives are correct. Recall: how many actual positives you found. Balance via thresholds or F1.

- ROC-AUC: Probability a classifier ranks a random positive higher than a random negative. Useful when classes are balanced.

- Class imbalance: One class dominates the data. Use proper metrics (PR curve), resampling, or class-weighted losses.

- Data leakage: Using information in training that won’t exist at prediction time (or from the test set). Inflates scores; fix your pipeline first.

- Regularization: Penalties (e.g., L1/L2, dropout) that discourage overly complex models and improve generalization.

- Gradient descent / learning rate: Iterative optimization using gradients; the learning rate controls step size. Too high diverges, too low is slow.

- Tokenization: Turning text into model-readable units (tokens). Choices affect vocabulary, sequence length and performance.

- Attention: Mechanism that lets models focus on relevant parts of the input; core to Transformers for NLP, vision and beyond.
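Two of the glossary entries translate almost directly into code. The sketch below shows one way to rotate train/validation splits for k-fold cross-validation, and computes ROC-AUC straight from its definition as the share of positive/negative pairs ranked correctly. Function names and the sample scores are illustrative assumptions; library implementations are more efficient and handle shuffling and edge cases.

```python
def k_fold_indices(n_samples, k):
    """Yield (training indices, validation indices) for each of k folds."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    for i in range(k):
        validation = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield training, validation

def roc_auc(labels, scores):
    """Fraction of (positive, negative) pairs where the positive scores higher."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0  # ties count as half
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))

splits = list(k_fold_indices(n_samples=6, k=3))
for training, validation in splits:
    print("train", training, "validate", validation)

# Hypothetical labels and classifier scores.
auc = roc_auc(labels=[1, 0, 1, 0], scores=[0.9, 0.8, 0.4, 0.1])
print("ROC-AUC:", auc)
```

Each sample lands in exactly one validation fold, so every data point is used for both training and evaluation, but never in the same round.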

Information Technology (IT): Frequently Asked Questions

These questions and answers give you a quick orientation to what IT involves, the skills you will build, and how it connects to study and careers.

1. What is Information Technology (IT) and how is it different from computer science?

Answer: Information Technology (IT) focuses on applying computing tools, networks, and systems to support organizations and users, while computer science emphasizes the theory of computation, algorithms, and how software and hardware are fundamentally built. In practice, IT professionals are often responsible for configuring systems, managing infrastructure, supporting users, and ensuring security and reliability, whereas computer scientists may work more on designing new algorithms, architectures, or software tools. In many modern workplaces, the two areas overlap and collaborate closely.

2. What core areas are usually covered in an IT-related degree?

Answer: An IT-related degree typically covers core areas such as programming fundamentals, data structures and basic algorithms, computer networks, databases, operating systems, cybersecurity, cloud and virtualized infrastructure, web and mobile technologies, and IT project management. Many programmes also include modules on user experience, data analytics, and business or organizational context so that graduates can bridge technical solutions with real-world needs.

3. Which skills are most important for success in Information Technology?

Answer: Success in Information Technology requires a blend of technical and human skills. Key technical skills include basic programming, understanding of networks and the internet, familiarity with operating systems, cloud services, cybersecurity fundamentals, and databases. Equally important are soft skills such as problem-solving, communication with non-technical stakeholders, teamwork, documentation, and the ability to learn new tools quickly. Because technologies change rapidly, a mindset of continuous learning is one of the most valuable assets in an IT career.

4. What kinds of careers can an IT background lead to?

Answer: An IT background can lead to many career paths, including roles such as systems administrator, network engineer, IT support specialist, cloud engineer, cybersecurity analyst, database administrator, DevOps engineer, IT project manager, and solutions architect. With experience and further specialization, IT professionals can move into areas such as digital transformation consulting, technology leadership, and cross-disciplinary roles that combine IT with domains like finance, healthcare, logistics, or education.

5. How does IT support digital transformation in modern organizations?

Answer: IT is at the heart of digital transformation by providing the infrastructure, platforms, and technical expertise that allow organizations to move from manual, paper-based processes to integrated digital workflows. This includes implementing cloud solutions, managing data platforms, securing systems, automating routine tasks, and supporting collaboration tools. Well-designed IT systems help organizations become more efficient, data-driven, scalable, and responsive to customers and stakeholders.

6. How is cybersecurity related to IT, and do all IT professionals need to know security?

Answer: Cybersecurity is a core concern in IT because almost every system, network, and application can be a potential target for attack. Even if someone is not a full-time security specialist, all IT professionals need a baseline understanding of security principles such as access control, encryption basics, secure configuration, patch management, and safe handling of data. Good security practices start with everyday IT operations, so security awareness is a shared responsibility across all IT roles.

7. What are some major trends currently shaping the future of IT?

Answer: Major trends shaping the future of IT include cloud-native architectures, edge computing, widespread use of APIs and microservices, automation and DevOps practices, the integration of artificial intelligence and machine learning into everyday applications, zero-trust security models, and the growth of data-driven decision-making. In addition, there is increasing emphasis on sustainability in data centres, responsible AI, and designing systems that are inclusive and accessible to diverse users.

8. How can students prepare in advance if they want to study IT at university?

Answer: Students who want to study IT at university can prepare by strengthening their basic maths and logical reasoning, learning at least one programming language, and becoming comfortable with using computers beyond everyday applications. Exploring topics like networks, web development, or simple database projects can be helpful. Joining coding clubs, contributing to small open-source or school projects, and practising problem-solving with real tools and platforms gives a practical head start and builds confidence for university-level IT modules.
