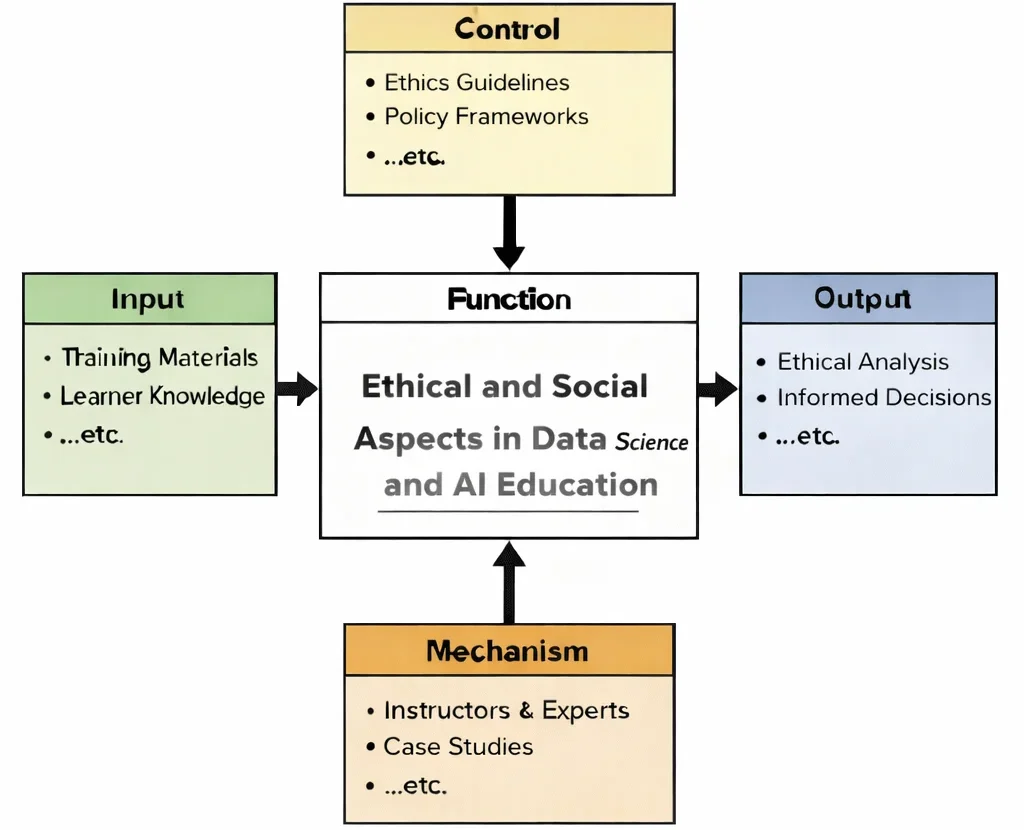

Ethical and Social Aspects in Data Science and AI Education is where technical ability meets moral clarity. The diagram makes a simple point: responsible AI is not “added at the end” like decoration on a finished project; it shapes the whole learning process. Inputs (resources and prior knowledge) enter the work, but controls such as ethics guidelines and policy frameworks act like boundary stones. They remind learners that data is often about people, and that models can quietly amplify inequality, expose private information, or shift power. Mechanisms such as experts, instructors, and well-chosen case studies give students a safe place to practice the hardest skill of all: judgment under uncertainty. Students learn to ask questions that code alone cannot answer: Who benefits? Who bears the risk? What is being assumed? What could go wrong at scale? The output is ethical analysis and better-informed decisions: graduates who can build systems with both competence and conscience, and who understand that the true “performance” of AI includes its effects on trust, dignity, and society.

This IDEF0 diagram summarizes Ethical and Social Aspects in Data Science and AI Education as a structured transformation, using the IDEF0 convention of Inputs, Controls, Outputs, and Mechanisms (ICOM) arranged around a central activity. Inputs include training materials and learner knowledge. Controls include ethics guidelines and policy frameworks that set expectations for fairness, privacy, accountability, transparency, and acceptable use. Mechanisms include instructors and experts alongside case studies and discussion-based activities that make abstract principles tangible. Outputs include ethical analysis and better-informed decisions, reflecting the learner’s ability to evaluate AI and data-driven work not only for technical performance but also for human impact and social responsibility.

As data science and artificial intelligence become increasingly embedded in our daily lives, addressing their ethical and social implications is more critical than ever. The promise of automation, personalization, and predictive power must be balanced with concerns about privacy, bias, accountability, and equity. These concerns touch all domains—from cybersecurity to healthcare—and demand a multidisciplinary understanding of how technology affects individuals and society.

AI systems, including those used in cybersecurity, can introduce opaque decision-making that affects employment, justice, and access to services. Ethical frameworks need to guide not only the algorithms but also the application security protocols and design choices in systems used by millions. As more data is gathered and analyzed, collection and storage practices must prioritize consent, security, and transparency.

The growing reliance on cloud platforms for sensitive data processing adds another dimension to the challenge. Professionals must understand the ethical ramifications of data use in cloud security environments and how breaches or leaks can damage public trust. Solutions must go beyond technical fixes to include cybersecurity policy frameworks that enforce accountability and uphold user rights.

Bias in data is another pressing issue. Without thoughtful data cleaning and preprocessing, predictive models may amplify existing inequalities. Even when technical methods are sound, the selection of training data, features, and target outcomes reflects human judgment that must be scrutinized for fairness. Visual summaries, such as charts comparing error rates across demographic groups, can help surface ethical concerns during development.
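One inexpensive check during preprocessing is to compare each group's share of the training data with its share of the population the model will serve. The Python sketch below is illustrative only: the `group` field, the reference shares, and the 0.8 tolerance are hypothetical choices, and real reference figures would come from a source such as census data.

```python
from collections import Counter

def representation_report(records, group_key, reference_shares, tolerance=0.8):
    """Compare each group's share of the data with a reference share.

    A group is flagged as underrepresented when its observed share falls
    below `tolerance` times its reference share (tolerance is hypothetical).
    """
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        report[group] = {
            "observed": round(observed, 3),
            "expected": expected,
            "underrepresented": observed < tolerance * expected,
        }
    return report

# Hypothetical sample: group "B" is 20% of the data but 40% of the population.
sample = [{"group": "A"}] * 80 + [{"group": "B"}] * 20
report = representation_report(sample, "group", {"A": 0.6, "B": 0.4})
for group, row in report.items():
    print(group, row)
```

A flag here is a prompt for investigation (collect more data, reweight, or document the limitation), not an automatic verdict.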

Accountability also plays a vital role in fields such as incident response, digital forensics, and endpoint security, where decisions can have legal implications. The same applies to sectors deploying domain-specific analytics in health, finance, or law enforcement. Systems must not only perform accurately but also align with ethical norms and social expectations.

Ethics in data use is not just a theoretical exercise; it directly shapes how identity and access management is structured, how cryptography protects privacy, and how cybersecurity awareness is cultivated among users. Together, these layers form the ethical backbone of digital trust in both government and corporate sectors.

As data science tools and technologies grow more powerful, the role of professionals must evolve from technical implementers to responsible stewards. This means considering the unintended effects of deploying big data analytics in sensitive settings, especially when the consequences fall disproportionately on vulnerable communities.

The same vigilance is needed in sectors guided by threat intelligence, network security, or OT security, where AI-enhanced surveillance or automation may compromise civil liberties. Legal, social, and cultural perspectives should be considered alongside technical choices to ensure ethical alignment at every level.

Ultimately, ethical and social aspects of data and AI represent the moral compass of digital transformation. They challenge us to consider not just what is possible, but what is just, inclusive, and sustainable for future generations.

This illustration uses a grand set of scales to symbolize the central challenge of ethical data work: weighing the promise of insight against the responsibility to protect people. One pan holds a data-streaked globe, representing the reach of analytics across societies and economies; the other holds a lock and digital signals, suggesting privacy, security, and the duty to safeguard sensitive information. Around the scales, bright icons hint at the many forces that ethics must consider—AI systems, biometric data, healthcare, finance, and online platforms—while the ring of human silhouettes at the bottom emphasizes that real lives sit behind every dataset. The glowing circuitry and networked background suggest that decisions ripple outward through connected systems, shaping trust, equality, and accountability. Overall, the image captures the idea that responsible analytics is not only about what we can do with data, but what we should do—and how to keep that balance as technology advances.


Data Privacy

Data privacy focuses on safeguarding individuals’ personal information and ensuring compliance with legal and ethical standards.

Key Concepts:

- Personally Identifiable Information (PII):

- Protecting sensitive data such as names, addresses, and financial details from unauthorized access or misuse.

- Data Minimization:

- Collecting only the data necessary for a specific purpose.

- Anonymization and Pseudonymization:

- Techniques to protect user identities in datasets by removing or masking identifiable attributes.
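Data minimization and pseudonymization can be combined in a few lines of Python. This is a minimal sketch under stated assumptions: the field names and the `ALLOWED_FIELDS` set are hypothetical, and a keyed hash (HMAC-SHA256 from the standard library) is one common pseudonymization choice, not the only one. Because anyone holding the key can re-link tokens to people, the output is pseudonymized rather than anonymized, and the key must be stored separately from the data.

```python
import hashlib
import hmac

# Hypothetical: the only fields this analysis actually needs (data minimization).
ALLOWED_FIELDS = {"age", "visit_count"}

def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    Unlike a plain hash, the token cannot be recomputed from a guessed ID
    without the key. The key holder can still re-link records.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize_and_pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Keep only allowed fields and swap the direct identifier for a token."""
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    out["user_token"] = pseudonymize_id(record["user_id"], secret_key)
    return out

key = b"example-key-keep-in-a-secrets-manager"  # placeholder; never hard-code real keys
raw = {"user_id": "u-1001", "name": "Ana Silva", "email": "ana@example.com",
       "age": 34, "visit_count": 7}
clean = minimize_and_pseudonymize(raw, key)
print(sorted(clean))  # ['age', 'user_token', 'visit_count']
```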


Regulations:

- General Data Protection Regulation (GDPR):

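- In force across the European Union and applicable to organizations elsewhere that offer goods or services to, or monitor, people in the EU. GDPR requires a lawful basis for processing personal data (such as clear, informed consent), grants rights including deletion (erasure), and allows fines of up to €20 million or 4% of worldwide annual turnover, whichever is higher.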

- California Consumer Privacy Act (CCPA):

- Gives California residents the right to know what personal information is collected about them and how it is used, the right to request its deletion, and the right to opt out of its sale.

- Health Insurance Portability and Accountability Act (HIPAA):

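- Sets U.S. standards for protecting health information held by covered entities (such as healthcare providers and health plans) and their business associates.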


Best Practices:

- Implement secure data storage and encryption.

- Regularly audit data collection and usage practices.

- Train employees on privacy policies and compliance.

Challenges:

- Balancing data utility with privacy.

- Navigating international differences in privacy laws.
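Differential privacy is one well-studied way to make the utility/privacy trade-off explicit; it is brought in here for illustration, since the section does not name a specific technique. The sketch below applies the Laplace mechanism to a single count query: a smaller privacy parameter `epsilon` means stronger privacy and noisier answers. The count and epsilon values are hypothetical, and a production system should use a vetted differential-privacy library rather than this hand-rolled sampler.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    # expovariate takes a rate, so rate = 1/scale gives each draw mean `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(42)
true_count = 120  # hypothetical: patients with a given condition
for eps in (0.1, 1.0, 10.0):
    released = private_count(true_count, eps, rng)
    print(f"epsilon={eps}: released count {released:.1f}")
```

The average absolute error equals the noise scale, 1/epsilon, so moving from epsilon 10 to epsilon 0.1 trades roughly a hundredfold increase in error for much stronger protection.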

Examples:

- Social media platforms implementing opt-in features for data sharing.

- Healthcare providers anonymizing patient data for research purposes.
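Anonymizing records for research usually takes more than deleting names, because combinations of quasi-identifiers such as age and ZIP code can single people out. One common criterion, k-anonymity, requires every combination of generalized quasi-identifiers to be shared by at least k records. The data and generalization rules in this sketch (10-year age bands, 3-digit ZIP prefixes) are hypothetical. k-anonymity alone does not stop disclosure when everyone in a group shares the same diagnosis; stronger criteria such as l-diversity address that.

```python
from collections import Counter

def generalize(record):
    """Coarsen quasi-identifiers: 10-year age band and 3-digit ZIP prefix."""
    low = (record["age"] // 10) * 10
    return (f"{low}-{low + 9}", record["zip"][:3] + "**")

def k_anonymity(records):
    """Size of the smallest group sharing the same generalized quasi-identifiers."""
    group_sizes = Counter(generalize(r) for r in records)
    return min(group_sizes.values())

# Hypothetical research extract with names already removed.
patients = [
    {"age": 34, "zip": "94110", "diagnosis": "asthma"},
    {"age": 37, "zip": "94112", "diagnosis": "diabetes"},
    {"age": 31, "zip": "94117", "diagnosis": "asthma"},
    {"age": 52, "zip": "10003", "diagnosis": "hypertension"},
    {"age": 58, "zip": "10001", "diagnosis": "asthma"},
]
print(k_anonymity(patients))  # 2: each (age band, ZIP prefix) pair covers at least 2 people
```

Adding a single 71-year-old from a new ZIP prefix would drop k to 1, which is exactly the kind of record a re-identification attack targets.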

Bias and Fairness

Bias and fairness focus on ensuring that AI and data-driven systems do not propagate or amplify discrimination, inequality, or injustice.

Key Concepts:

- Algorithmic Bias:

- Occurs when AI models reflect existing societal biases present in training data.

- Example: Facial recognition systems misidentifying individuals of certain ethnic groups.

- Fairness Metrics:

- Demographic Parity: Ensuring that each group receives positive outcomes (e.g., approvals) at the same rate.

- Equal Opportunity: Ensuring similar true positive rates for all groups.
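Both metrics can be computed directly from a model's predictions. The plain-Python sketch below uses hypothetical labels, predictions, and group names; toolkits such as Fairlearn provide tested implementations of these and related metrics for real projects. A gap of zero means the criterion is met exactly; how large a gap is acceptable is a policy decision the code cannot settle.

```python
def rate(values):
    """Mean of a list of 0/1 values (nan if the list is empty)."""
    return sum(values) / len(values) if values else float("nan")

def fairness_report(y_true, y_pred, groups):
    """Per-group selection rate (demographic parity) and true positive rate (equal opportunity)."""
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        selected = [y_pred[i] for i in idx]
        # TPR: among people whose true label is positive, the share the model approved.
        approved_if_qualified = [y_pred[i] for i in idx if y_true[i] == 1]
        report[g] = {"selection_rate": rate(selected), "tpr": rate(approved_if_qualified)}
    return report

# Hypothetical data: 1 = qualified (y_true) / approved (y_pred).
y_true = [1, 1, 0, 0, 1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

report = fairness_report(y_true, y_pred, groups)
dp_gap = abs(report["A"]["selection_rate"] - report["B"]["selection_rate"])
eo_gap = abs(report["A"]["tpr"] - report["B"]["tpr"])
print(report)
print(f"demographic parity gap: {dp_gap:.2f}, equal opportunity gap: {eo_gap:.2f}")
```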


Sources of Bias:

- Data Bias: Incomplete or unrepresentative training data (e.g., datasets underrepresenting minorities).

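- Model Bias: Modeling choices (objective, features, thresholds) that produce systematically worse results for some groups, for example by relying on features that act as proxies for protected attributes.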

- Deployment Bias: Misuse or misinterpretation of model outputs in real-world contexts.

Mitigation Strategies:

- Perform bias audits during data collection and model evaluation.

- Use adversarial debiasing, in which the model is trained so that an adversary cannot predict the protected attribute from its outputs.

- Use fairness toolkits such as Fairlearn or IBM AI Fairness 360, which provide fairness metrics and bias-mitigation algorithms.
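As one concrete mitigation step, the sketch below implements reweighing, a preprocessing technique due to Kamiran and Calders that IBM AI Fairness 360 also provides. It is introduced here for illustration, not prescribed by the text. Each training example gets a weight so that, in the weighted data, the label is independent of group membership. The groups and labels are hypothetical, and the weights would then be passed to a learner that accepts sample weights.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Reweighing in the style of Kamiran and Calders.

    Each (group g, label y) pair gets weight P(g) * P(y) / P(g, y), so that in
    the weighted data the label is statistically independent of the group.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) examples in `group` that carry the positive label."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical historical hiring data: group A hired 75% of the time, group B 25%.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
```

Reweighing leaves the records themselves untouched, which makes it easy to audit, but it only corrects the label imbalance it measures; it cannot fix labels that were themselves biased.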

Examples:

- HR systems ensuring gender-neutral hiring decisions.

- Credit scoring models avoiding discrimination against underprivileged groups.

Interpretability and Explainability

Interpretability and explainability focus on making machine learning models and their predictions understandable to humans.

Key Concepts:

- Interpretability:

- The extent to which a model’s operations can be understood.

- Example: Linear regression is interpretable because the relationship between inputs and outputs is explicit.

- Explainability:

- The ability to provide reasons for a model’s predictions in terms understandable to users.

- Example: Explaining why a loan application was rejected by an AI system.
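For a linear model, interpretability and explainability meet neatly. Each feature's contribution to the score, relative to a reference applicant, is its weight times its difference from the reference, and those contributions sum to the change in score. The sketch below turns that into simple reason codes for a rejected loan application. The weights, threshold, baseline applicant, and feature names are all hypothetical, and real adverse-action notices carry legal requirements this sketch does not address.

```python
# Hypothetical linear credit model: weights, bias, and threshold are illustrative only.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8, "years_employed": 0.15}
BIAS = -1.0
THRESHOLD = 0.0

def score(applicant):
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())

def explain(applicant, baseline):
    """Per-feature contributions relative to a baseline, most negative first.

    For a linear model, score(x) - score(b) = sum_i w_i * (x_i - b_i), so the
    contributions sum to the score difference (up to floating-point rounding).
    """
    contribs = {f: w * (applicant[f] - baseline[f]) for f, w in WEIGHTS.items()}
    return sorted(contribs.items(), key=lambda kv: kv[1])

baseline = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 0, "years_employed": 5}
applicant = {"income_k": 45, "debt_ratio": 0.6, "late_payments": 2, "years_employed": 1}

decision = "approved" if score(applicant) >= THRESHOLD else "rejected"
print(decision)
for feature, contribution in explain(applicant, baseline)[:2]:
    print(f"  main reason: {feature} ({contribution:+.2f})")
```

The choice of baseline matters: comparing against a typical approved applicant yields different reasons than comparing against the population average.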


Importance:

- Builds trust in AI systems among users and stakeholders.

- Facilitates debugging and model improvement.

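- Supports compliance with regulations such as the GDPR, whose rules on automated decision-making (Article 22 and Recital 71) are often described as a “right to explanation.”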

Techniques:

- Feature Importance Analysis:

- Identifying which features contribute most to a model’s decisions (e.g., SHAP values, LIME).

- Model Simplification:

- Using simpler models (e.g., decision trees) when interpretability is critical.

- Visualization Tools:

- Tools such as TensorBoard or dedicated explainability dashboards to visualize and interpret model behavior.
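SHAP and LIME require their own libraries, so the sketch below illustrates feature importance with a simpler, model-agnostic substitute: permutation importance, which measures how much accuracy drops when one feature's values are shuffled. The model, data, and parameter values are hypothetical; scikit-learn offers a maintained version as `sklearn.inspection.permutation_importance`, whose interface differs from this standalone function.

```python
import random

def model(row):
    """Hypothetical fixed classifier: uses features 0 and 1, ignores feature 2."""
    return 1 if 2 * row[0] + row[1] > 1.5 else 0

def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(rows, labels, n_features, n_repeats=20, seed=0):
    """Average drop in accuracy when one feature's column is randomly shuffled."""
    rng = random.Random(seed)
    base = accuracy(rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(base - accuracy(shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

data_rng = random.Random(1)
rows = [tuple(data_rng.random() for _ in range(3)) for _ in range(200)]
labels = [model(r) for r in rows]  # hypothetical ground truth the model matches exactly
importances = permutation_importance(rows, labels, n_features=3)
for j, imp in enumerate(importances):
    print(f"feature {j}: {imp:.3f}")
```

The unused feature scores exactly zero, and the feature with the larger weight scores highest, which is the behavior a sanity check on any importance method should confirm.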


Challenges:

- Balancing model performance with interpretability (e.g., deep learning models are powerful but often seen as “black boxes”).

- Tailoring explanations to different audiences, from technical teams to end-users.

Examples:

- Financial institutions explaining credit approval decisions to customers.

- Healthcare systems providing reasons for treatment recommendations.

Interconnected Nature of Ethical and Social Aspects

- Data Privacy impacts bias and fairness, as anonymized data can sometimes lose critical context needed for equitable analysis.

- Bias and Fairness efforts rely on interpretability and explainability to identify and mitigate unfair outcomes.

- Addressing these aspects collectively builds trust and accountability in AI systems.

Why Study Ethical and Social Aspects of Data Science and Analytics

Recognizing the Power and Responsibility of Data

Addressing Issues of Bias, Fairness, and Inclusion

Understanding Privacy, Consent, and Data Ownership

Evaluating the Social Impact of Data-Driven Decisions

Preparing for Thoughtful Leadership in a Data-Driven Society

Ethical and Social Aspects of Data Science and Analytics – Frequently Asked Questions

These questions explore how data-driven systems interact with people and society, and what future data scientists should consider when building responsible analytics solutions.

1. What do we mean by the ethical and social aspects of data science and analytics?

The ethical and social aspects of data science and analytics refer to how data collection, analysis, and prediction affect people, communities, and institutions. They include issues such as privacy, fairness, bias, transparency, accountability, consent, and the broader impact of algorithms on jobs, opportunities, and social trust. Instead of focusing only on accuracy or performance, ethical and social analysis asks who benefits, who is harmed, and whose voice is included in decisions about data.

2. Why are ethics and social responsibility important in data science?

Ethics and social responsibility are important in data science because analytical systems increasingly influence real-life decisions about credit, hiring, policing, healthcare, and education. Poorly designed or unreflective models can reinforce discrimination, invade privacy, or create opaque systems that people cannot challenge. Taking ethics seriously helps protect vulnerable groups, maintain legal and regulatory compliance, and build long-term trust in data-driven decision-making.

3. How can bias and unfairness arise in data-driven models?

Bias and unfairness can arise in data-driven models through skewed or incomplete training data, historical discrimination embedded in records, unbalanced class labels, and design choices that ignore how variables relate to protected characteristics such as gender, race, or disability. Even technically correct models can be unfair if the target variable, evaluation metric, or deployment context disadvantages certain groups. Ethical data practitioners examine data sources, model assumptions, and outcomes to detect and mitigate these forms of bias.
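One way to check whether a seemingly neutral variable relates to a protected characteristic is to ask how well that variable alone predicts it. The sketch below, with hypothetical ZIP codes and group labels, compares that accuracy with the baseline of always guessing the majority group; a large gap suggests the feature may act as a proxy. Because it scores on the same data it summarizes, it overstates the signal for features with many distinct values, so treat it as a screening heuristic rather than a formal test.

```python
from collections import Counter, defaultdict

def proxy_strength(feature_values, protected_values):
    """How well one feature alone predicts a protected attribute (in-sample).

    For each feature value, guess the protected value most often seen with it.
    Returns (accuracy of that guess, accuracy of always guessing the overall majority).
    """
    by_value = defaultdict(Counter)
    for f, p in zip(feature_values, protected_values):
        by_value[f][p] += 1
    n = len(protected_values)
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    return correct / n, baseline

# Hypothetical applicants: ZIP code and a protected group label.
zip_codes = ["10001"] * 4 + ["10002"] * 4 + ["10003"] * 2
groups = ["X", "X", "X", "Y", "Y", "Y", "Y", "Y", "X", "Y"]
proxy_acc, majority_acc = proxy_strength(zip_codes, groups)
print(f"ZIP predicts group with accuracy {proxy_acc:.2f} vs. baseline {majority_acc:.2f}")
```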

4. What is the role of privacy and consent in data analytics projects?

Privacy and consent play a central role in data analytics projects because individuals have rights over how their personal information is collected, stored, shared, and used. Responsible projects minimise data collection, anonymise or pseudonymise records where possible, and clearly explain to users what data is being used and why. They also respect legal frameworks such as data protection regulations and give individuals meaningful options to opt in, opt out, or request deletion of their data.
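These consent practices can be sketched as a small data structure that records consent per purpose and filters data before each use. Everything here is hypothetical (the `ConsentRegistry` class, user IDs, and purpose names); in a real system, deletion must also reach backups, derived datasets, and third-party processors, which this in-memory sketch does not model.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRegistry:
    """Hypothetical store that tracks consent per user and per purpose."""
    consents: dict = field(default_factory=dict)  # user_id -> set of purposes
    data: dict = field(default_factory=dict)      # user_id -> personal record

    def store(self, user_id, record):
        self.data[user_id] = record

    def opt_in(self, user_id, purpose):
        self.consents.setdefault(user_id, set()).add(purpose)

    def opt_out(self, user_id, purpose):
        self.consents.get(user_id, set()).discard(purpose)

    def delete(self, user_id):
        """Honor a deletion request by dropping the record and its consents."""
        self.data.pop(user_id, None)
        self.consents.pop(user_id, None)

    def records_for(self, purpose):
        """Return only records whose owners opted in to this specific purpose."""
        return {uid: rec for uid, rec in self.data.items()
                if purpose in self.consents.get(uid, set())}

registry = ConsentRegistry()
for uid in ("u1", "u2", "u3"):
    registry.store(uid, {"age": 30})
registry.opt_in("u1", "research")
registry.opt_in("u2", "research")
registry.opt_in("u2", "marketing")
registry.opt_out("u2", "research")   # u2 withdraws consent for research only
registry.opt_in("u3", "research")
registry.delete("u3")                # u3 requests deletion
print(sorted(registry.records_for("research")))  # ['u1']
```

Making consent per purpose, rather than a single yes/no flag, is what lets a user agree to research use while refusing marketing.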

5. What do transparency and explainability mean in the context of algorithms?

Transparency and explainability refer to how understandable an algorithm and its decisions are to affected people and stakeholders. A transparent system makes it possible to see what data is used, how the model was trained, and which factors drive decisions. Explainable models offer human-friendly reasons or simplified explanations for predictions, enabling users, regulators, and auditors to check for errors, contest unfair outcomes, and improve accountability in automated decision-making.

6. How can organisations promote accountability in data science and analytics?

Organisations can promote accountability in data science and analytics by defining clear roles and responsibilities, documenting data pipelines and model decisions, and establishing review and audit processes. They can adopt ethical guidelines, conduct impact assessments, and set up mechanisms for individuals to question or appeal automated decisions. In addition, interdisciplinary teams that include legal, ethical, and domain experts help ensure that accountability is not left only to technical staff.
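Documentation and audit processes are easier to trust when the record itself is tamper-evident. The sketch below, a hypothetical `DecisionLog` class built on the standard library's SHA-256, chains each logged decision to the hash of the previous one so that silently editing an earlier entry is detectable. This only helps if the latest hash is also kept somewhere the editor cannot change (for example, shared with an independent reviewer), since someone who can rewrite the whole log could recompute every hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(event, prev_hash):
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

class DecisionLog:
    """Append-only, hash-chained log of data and model decisions (hypothetical)."""

    def __init__(self):
        self.entries = []

    def append(self, event):
        event = json.loads(json.dumps(event))  # store a deep, JSON-safe copy
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({"event": event, "prev": prev_hash,
                             "hash": _entry_hash(event, prev_hash)})

    def verify(self):
        """Recompute the chain; editing or reordering entries, or deleting any but the newest, breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(entry["event"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionLog()
log.append({"model": "credit-v2", "action": "deployed", "approved_by": "review-board"})
log.append({"model": "credit-v2", "action": "threshold_changed", "from": 0.5, "to": 0.55})
ok_before = log.verify()
log.entries[0]["event"]["approved_by"] = "nobody"  # simulate a silent edit
ok_after = log.verify()
print(ok_before, ok_after)
```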

7. What are some potential social impacts of large-scale analytics and AI systems?

Large-scale analytics and AI systems can have many social impacts. Positive impacts include better healthcare planning, more efficient transport, and personalised education. Negative impacts can include surveillance, loss of privacy, job disruption, algorithmic discrimination, and concentration of power in a few large platforms. Ethical analysis requires looking beyond technical performance and asking how systems change behaviour, shape incentives, and influence social structures over time.

8. How can students prepare to handle ethical and social issues in data science careers?

Students can prepare to handle ethical and social issues in data science careers by combining technical training with courses in ethics, law, social sciences, and public policy. They should get used to documenting assumptions, questioning data sources, and discussing trade-offs between accuracy, fairness, and privacy. Working on case studies, interdisciplinary projects, and reflective writing helps students develop the habit of asking who is affected by a system and how to design more responsible analytics.

Ethical and Social Aspects in Data and AI: Conclusion

Ethical and social aspects are essential to responsible AI and data practices. By prioritizing privacy, fairness, and transparency, organizations can create systems that are not only effective but also trustworthy and aligned with societal values.

Ethical and Social Aspects in Data and AI: Review Questions and Answers:

1. What are the ethical aspects of data science analytics and why are they important?

Answer: Ethical aspects in data science analytics refer to the principles and practices that ensure data is used responsibly and fairly, respecting privacy and avoiding bias. They are important because they guide organizations in making decisions that protect individual rights and promote transparency. By adhering to ethical standards, data scientists can build trust with users and stakeholders while ensuring that the insights derived are both accurate and just. This foundation is critical for long-term success and social responsibility in an increasingly data-driven world.

2. How do social considerations influence the implementation of data science analytics projects?

Answer: Social considerations influence data science analytics projects by highlighting the impact that data-driven decisions can have on society, including issues of equity, privacy, and public trust. They compel organizations to consider not only the technical and financial benefits but also the broader implications of their analytics practices. Incorporating social perspectives ensures that projects are designed to benefit all stakeholders and mitigate potential harms. This balanced approach leads to more sustainable and socially responsible analytics initiatives.

3. What role does data privacy play in ethical data science, and how can it be maintained?

Answer: Data privacy is a cornerstone of ethical data science, ensuring that sensitive personal information is protected throughout the data lifecycle. It plays a vital role in maintaining user trust and complying with legal regulations. Privacy can be maintained through techniques such as anonymization, encryption, and strict access controls. By implementing robust privacy policies and continuously monitoring data practices, organizations can ensure that their analytics work does not compromise individual rights.

4. How can bias in data and algorithms affect the outcomes of data science analytics?

Answer: Bias in data and algorithms can lead to skewed or unfair outcomes, which may reinforce existing inequalities or create new forms of discrimination. When bias is present, the predictive models and insights derived from the data may not accurately reflect reality, leading to poor decision-making. Addressing bias involves carefully selecting and preprocessing data, as well as continuously monitoring algorithms for fairness. Mitigating bias is essential for ensuring that analytics are equitable and truly reflective of the diverse populations they serve.

5. What is the importance of transparency in ethical data analytics?

Answer: Transparency in ethical data analytics involves openly communicating how data is collected, processed, and used to derive insights. This openness is crucial for building trust among users, stakeholders, and regulatory bodies. Transparency allows for independent verification of methods and results, ensuring accountability in decision-making processes. It also encourages a culture of openness where ethical practices are prioritized, ultimately leading to more credible and accepted outcomes.

6. How do accountability and responsibility factor into ethical data science practices?

Answer: Accountability and responsibility in data science ensure that individuals and organizations are held answerable for their data practices and the outcomes of their analytics. These principles require that clear roles and responsibilities are established throughout the data lifecycle, from collection to analysis and decision-making. They help prevent misuse of data and ensure that any errors or biases are promptly addressed. By fostering accountability, organizations can maintain high ethical standards and build trust with their stakeholders.

7. What are the potential social impacts of biased data analytics, and how can they be mitigated?

Answer: Biased data analytics can have significant social impacts, including perpetuating discrimination, influencing public opinion unjustly, and creating unequal opportunities in areas such as employment, finance, and healthcare. These negative effects can harm individuals and communities by reinforcing systemic inequalities. Mitigation strategies include rigorous data cleansing, bias detection, and the inclusion of diverse data sources. By actively addressing bias, organizations can promote fairness and social equity, ensuring that analytics benefits are distributed more evenly across society.

8. How do regulatory frameworks influence ethical and social practices in data science analytics?

Answer: Regulatory frameworks set the legal standards for data privacy, security, and ethical use, which guide how data science analytics is conducted. They compel organizations to implement practices that protect sensitive information and ensure fairness in data processing. Compliance with these regulations not only minimizes legal risks but also reinforces ethical standards and accountability. Regulatory oversight drives continuous improvements in data practices, fostering a culture where ethical considerations are integral to analytics projects.

9. What is the significance of stakeholder engagement in addressing the ethical and social aspects of data science analytics?

Answer: Stakeholder engagement is significant because it brings diverse perspectives into the discussion of ethical and social issues in data science analytics. Engaging stakeholders such as customers, employees, and community representatives ensures that the impact of data practices is thoroughly considered and that their concerns are addressed. This inclusive approach fosters trust and enhances the legitimacy of the analytics process. By involving stakeholders, organizations can create more balanced and socially responsible strategies that reflect the interests of all parties involved.

10. How can organizations promote a culture of ethical data practices within their analytics teams?

Answer: Organizations can promote a culture of ethical data practices by establishing clear policies, providing regular training, and integrating ethical considerations into every stage of the analytics process. Encouraging open discussions about ethics and creating channels for feedback helps ensure that ethical issues are identified and addressed promptly. This proactive approach not only improves the quality of analytics but also builds trust with stakeholders and enhances the organization’s reputation. By embedding ethical principles into the organizational culture, companies can ensure that data-driven decisions are both responsible and sustainable.

Ethical and Social Aspects in Data and AI: Thought-Provoking Questions and Answers

1. How will emerging technologies like AI and blockchain shape the ethical landscape of data science analytics in the future?

Answer: Emerging technologies such as artificial intelligence and blockchain have the potential to revolutionize the ethical landscape of data science analytics by introducing new methods for ensuring data integrity and accountability. AI can help automate the detection of biases and improve decision-making processes, while blockchain offers a decentralized framework for verifying data provenance and maintaining an immutable audit trail. These technologies can enhance transparency and trust in analytics processes, making it easier for organizations to demonstrate their commitment to ethical practices.

However, they also raise new ethical challenges, such as algorithmic bias in AI and privacy concerns in blockchain implementations. Organizations will need to develop robust ethical guidelines and regulatory frameworks to navigate these complexities. By proactively addressing these issues, companies can harness the benefits of these emerging technologies while minimizing their potential risks, ensuring that the evolution of data science remains aligned with societal values.

2. What strategies can organizations adopt to ensure that ethical considerations are integrated throughout the data analytics lifecycle?

Answer: Organizations can adopt a range of strategies to integrate ethical considerations throughout the data analytics lifecycle, starting with the development of comprehensive data governance policies that emphasize privacy, fairness, and accountability. This includes establishing clear guidelines for data collection, processing, and analysis that adhere to ethical standards and regulatory requirements. Regular training and awareness programs for data scientists and analysts are also critical, ensuring that ethical considerations are understood and prioritized at every stage of the process.

Additionally, organizations can implement continuous monitoring and auditing of data practices to detect and address any deviations from established ethical norms. Engaging with external stakeholders, including ethicists and regulatory bodies, can further reinforce a culture of ethical data use. These combined strategies help ensure that ethical principles are not an afterthought but are woven into the fabric of every analytics initiative.

3. How might increasing data privacy regulations affect the practices of data collection and analytics in various industries?

Answer: Expanding data privacy regulations, such as GDPR and CCPA, are poised to have a profound impact on data collection and analytics practices across industries by imposing stricter standards for data usage and protection. These regulations require organizations to have a valid basis for collecting personal data (under GDPR this is often consent, while CCPA gives consumers the right to opt out of the sale of their data), to protect sensitive information, to honor requests to access or delete data, and to be transparent about how data is processed. As a result, companies will need to invest in more robust data governance frameworks and advanced technologies to comply with these standards, which may increase operational costs but ultimately lead to higher data quality and trust.

On the other hand, these regulations can drive innovation by encouraging the development of privacy-enhancing technologies and more ethical data practices. Organizations that adapt quickly to these regulatory changes will be better positioned to build customer trust and avoid legal penalties, thereby enhancing their competitive advantage. This shift towards greater data privacy and accountability is likely to become a central theme in the future of data analytics.

4. What are the potential societal implications of biased data analytics, and how can organizations work to minimize these effects?

Answer: Biased data analytics can have far-reaching societal implications, including reinforcing stereotypes, perpetuating discrimination, and exacerbating social inequalities. When analytics models are trained on biased data, they can produce skewed outcomes that negatively impact marginalized groups, leading to unfair decisions in areas such as hiring, lending, and law enforcement. These biased outcomes can undermine public trust in technology and exacerbate existing social issues, creating long-term challenges for society as a whole.

To minimize these effects, organizations must prioritize fairness and transparency in their data collection and analysis processes. This involves using diverse datasets, implementing bias detection and mitigation techniques, and involving stakeholders in the evaluation of analytical models. Regular audits and independent reviews can also help identify and correct biases, ensuring that data-driven decisions are equitable and contribute positively to societal well-being.

5. How can the incorporation of ethical frameworks into data analytics enhance corporate social responsibility?

Answer: Incorporating ethical frameworks into data analytics can enhance corporate social responsibility (CSR) by ensuring that data practices align with societal values and contribute to the greater good. Ethical frameworks provide guidelines for responsible data collection, processing, and analysis, which help prevent misuse and protect individual rights. By adhering to these principles, companies can build trust with customers, employees, and regulators, reinforcing their commitment to social and ethical standards.

This proactive approach to ethics not only minimizes legal risks and reputational damage but also positions the organization as a leader in responsible innovation. In turn, this can drive positive social impact by ensuring that data analytics initiatives support sustainable practices and contribute to broader societal benefits. Ultimately, ethical analytics become a cornerstone of CSR, reflecting a company’s dedication to both profitability and public welfare.

6. How might future advancements in data analytics tools influence the ethical decision-making process within organizations?

Answer: Future advancements in data analytics tools are likely to enhance the ethical decision-making process by providing more transparent, accurate, and explainable models. As tools become more sophisticated, they will offer deeper insights into how data is processed and how decisions are made, allowing organizations to identify and address potential biases and ethical concerns more effectively. These advancements can lead to the development of algorithms that are not only powerful but also interpretable, ensuring that ethical considerations are an integral part of the decision-making process.

Moreover, as analytics tools incorporate features that automatically flag potential ethical issues, decision-makers will be better equipped to evaluate the social implications of their data-driven strategies. This increased transparency and accountability can foster a culture where ethical considerations are prioritized, leading to more responsible and fair business practices. Ultimately, the evolution of these tools will empower organizations to make decisions that are both data-driven and ethically sound.

7. What are the potential risks of ignoring ethical and social considerations in data science analytics?

Answer: Ignoring ethical and social considerations in data science analytics can lead to significant risks, including legal repercussions, reputational damage, and the erosion of public trust. Unethical data practices may result in biased algorithms that disproportionately affect certain groups, leading to unfair treatment and discrimination. These issues can spark public backlash, regulatory fines, and long-term damage to an organization’s brand and customer relationships.

Furthermore, neglecting ethical considerations may result in missed opportunities for innovation and sustainable growth, as responsible data practices are increasingly valued by consumers and investors alike. Organizations that fail to address these concerns risk not only financial loss but also a diminished capacity to compete in an environment where ethical standards are paramount.

8. How can organizations foster transparency and accountability in their data analytics processes?

Answer: Organizations can foster transparency and accountability by implementing robust data governance policies that clearly define how data is collected, processed, and analyzed. This includes maintaining detailed documentation, creating audit trails, and regularly reporting on data practices and outcomes. Transparency can also be enhanced through open communication with stakeholders, providing them with clear insights into the methodologies and assumptions behind analytical models.

Accountability is reinforced by establishing roles and responsibilities for data management and by conducting independent audits to ensure compliance with ethical and regulatory standards. By creating an environment where data practices are open to scrutiny, organizations can build trust and ensure that their analytics processes are both ethical and effective.

9. What role do cultural and societal values play in shaping ethical guidelines for data analytics?

Answer: Cultural and societal values play a significant role in shaping ethical guidelines for data analytics, as they define what is considered acceptable and fair within a given community. These values influence how privacy, consent, and data ownership are perceived and regulated, which in turn affects the development of ethical frameworks in data analytics. Organizations must consider these cultural factors when designing their data practices to ensure that they align with the expectations and norms of the societies in which they operate.

By incorporating diverse perspectives and engaging with community stakeholders, companies can create more inclusive and culturally sensitive ethical guidelines. This approach not only enhances the social acceptability of data analytics initiatives but also contributes to more equitable outcomes that respect the rights and values of all individuals.

10. How can data visualization be used to communicate ethical considerations and social impact effectively to a broad audience?

Answer: Data visualization can communicate ethical considerations and social impact effectively by presenting complex information, such as outcome rates across demographic groups or how personal data flows through a system, in a clear, engaging, and accessible way. Visual tools such as infographics, interactive dashboards, and storytelling charts can illustrate the implications of data practices for privacy, fairness, and accountability. These visualizations help translate abstract ethical concepts into tangible insights that resonate with both technical and non-technical audiences, facilitating broader understanding and discussion.

By highlighting key metrics and trends related to ethical performance, organizations can use data visualization to promote transparency and accountability. This approach not only informs stakeholders about the social impact of data practices but also drives continuous improvement by making ethical considerations a visible and integral part of the decision-making process.

11. How might evolving data privacy laws influence the ethical and social responsibilities of organizations engaged in data analytics?

Answer: Evolving data privacy laws, such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), are increasingly shaping the ethical and social responsibilities of organizations by imposing strict standards on data collection, storage, and processing. These regulations compel organizations to prioritize user consent, data anonymization, and robust security measures to protect sensitive information. As a result, companies must integrate ethical practices into their data analytics workflows to ensure compliance and build trust with consumers and regulators.
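Anonymization is easier to discuss with a measurable example. One widely taught measure is k-anonymity: a dataset is k-anonymous if every combination of quasi-identifiers (attributes such as age or region that could be linked to outside data) is shared by at least k records. The sketch below, using hypothetical helper names and made-up toy records, computes k and shows how generalizing exact ages into ranges raises it. It is only an illustration: k-anonymity does not, for example, stop someone from inferring a sensitive value when everyone in a group shares it, and whether data counts as anonymized under GDPR or CCPA is a legal determination that no single metric settles.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the smallest group size when records are grouped by the
    quasi-identifier fields (0 for an empty dataset).

    A dataset is k-anonymous if this value is at least k.
    """
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(counts.values()) if counts else 0


def generalize_age(age, width=10):
    """Replace an exact age with a range label such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


# Hypothetical toy records; "region" stands in for a location field.
records = [
    {"age": 34, "region": "A", "diagnosis": "flu"},
    {"age": 36, "region": "A", "diagnosis": "asthma"},
    {"age": 38, "region": "A", "diagnosis": "flu"},
    {"age": 52, "region": "B", "diagnosis": "diabetes"},
    {"age": 57, "region": "B", "diagnosis": "flu"},
]
qi = ["age", "region"]

print(k_anonymity(records, qi))  # 1: every exact age is unique
generalized = [{**r, "age": generalize_age(r["age"])} for r in records]
print(k_anonymity(generalized, qi))  # 2: groups of size 3 and 2
```

Generalization trades detail for privacy: coarser ranges raise k but make the data less useful for fine-grained analysis, which is exactly the kind of trade-off these regulations ask organizations to reason about explicitly.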

The evolving legal landscape not only demands adherence to stricter standards but also encourages organizations to innovate in their ethical data practices. By proactively adapting to these changes, companies can mitigate legal risks, enhance their reputation, and contribute to a more responsible and equitable digital ecosystem.

12. What are the potential benefits of incorporating ethical training into data science education, and how might this shape the future of the industry?

Answer: Incorporating ethical training into data science education offers significant benefits by equipping future professionals with the knowledge and skills needed to navigate complex ethical challenges in the field. This training helps ensure that data scientists are not only technically proficient but also aware of the social implications of their work, leading to more responsible and fair data practices. Over time, this emphasis on ethics can foster a culture of accountability and transparency within the industry, driving innovation that is both sustainable and socially beneficial.

In the long term, ethical training can shape the future of data science by promoting best practices that prioritize user privacy, fairness, and responsible data management. As emerging technologies continue to evolve, a workforce that is well-versed in ethical principles will be better prepared to address the challenges of a data-driven world, ultimately contributing to a more trustworthy and equitable industry.