Robotics and Autonomous Systems fuse mechanical engineering, electronics, and artificial intelligence & machine learning to build machines that can perceive, decide, and act with minimal human input. From factory cobots to self-driving vehicles, modern robots rely on deep learning, computer vision, and sensor fusion to understand the world and make real-time decisions. With data science & analytics, they improve continuously from the data they generate.

Most autonomous systems operate in connected environments—powered by internet & web technologies and scalable cloud computing. Different cloud service models back heavy workloads such as mapping, coordination, and fleet monitoring. In smart manufacturing (Industry 4.0), robots boost quality and throughput while collaborating safely with people.

On the software side, classic expert systems capture rule-based decisions; natural language processing enables voice/text commands; and reinforcement learning lets agents learn by interacting with their environment.

Robotics also intersects with IoT & smart technologies, in domains ranging from logistics and healthcare to space exploration and satellite systems. Looking ahead, quantum ideas (qubits, superposition, gates) hint at new ways robots might learn and plan.

As a core strand of modern STEM, robotics blends design, control, cloud-integrated software, and the ethics of autonomy—preparing learners to build systems that think, perceive, and act responsibly.

As a critical frontier of STEM innovation, robotics and autonomous systems offer transformative solutions to global challenges. The field is deeply embedded in information technology and continually draws from interdisciplinary knowledge to evolve. For students and professionals alike, understanding robotics means exploring not just mechanical design but also adaptive intelligence, cloud-integrated control, and the ethics of autonomy. The journey into robotics opens doors to designing systems that think, perceive, and act—pushing the boundaries of what machines can achieve.


Core Components of Robotics & Autonomy

Sensing & Perception

Robots fuse cameras, LiDAR, IMU, GPS, and proprioceptive signals to build a scene understanding. With computer vision and deep learning, they detect, segment, and track objects.

- Object detection/segmentation for obstacles and drivable space.

- Sensor fusion for robustness to lighting, weather, and motion blur.

Localisation & Mapping (SLAM)

Estimating pose while building a map; closing loops to reduce drift.

- Visual/Visual-Inertial SLAM, LiDAR SLAM; loop closure & relocalisation.

- Metrics: ATE/RPE (see metrics table below).

Planning & Decision

From path planning (A*, RRT*, lattice planners) to behaviour/state machines and policy learning via reinforcement learning.

- Global vs. local planners; dynamic obstacle avoidance; rule-based behaviours.

- Task planners and mission control for multi-robot coordination.
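Of the global planners named above, A* is the easiest to show in a few lines. The sketch below is a minimal grid version under stated assumptions: a 4-connected occupancy grid (0 = free, 1 = occupied), unit step costs, and a Manhattan-distance heuristic. The function name `astar` and the grid layout are illustrative, not taken from any planning library; real stacks add cell costs, diagonal moves, and obstacle inflation.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates on a 4-connected, unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None

# A wall across the middle row forces the path around the right-hand end.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
print(path)
```

Because the heuristic is admissible here, the returned path is a shortest one; swapping in a different heuristic or cost model changes that guarantee.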

Control & Actuation

Feedback control (PID, LQR, MPC) turns plans into smooth, stable motion.

- Tracking error, overshoot, settling time; safety stops and fail-safes.
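The control metrics listed above can be computed directly from a logged step response. A minimal sketch, assuming the response starts at zero and steps to a positive setpoint, and using a ±2% settling band (a common convention, but still a choice); `step_metrics`, `tracking_rmse`, and the sample data are hypothetical.

```python
import math

def step_metrics(t, y, setpoint, band=0.02):
    """Percent overshoot and settling time of a sampled step response starting at 0.

    Assumes a positive setpoint. band is the settling tolerance as a fraction of
    the setpoint. Settling time is the first sample after which y stays inside
    the band; None means the response never settled within the log.
    """
    overshoot_pct = max(0.0, (max(y) - setpoint) / setpoint * 100.0)
    tol = band * abs(setpoint)
    for i in range(len(y) - 1, -1, -1):
        if abs(y[i] - setpoint) > tol:
            # Last sample outside the band: settled at the next sample, if any.
            return overshoot_pct, (t[i + 1] if i + 1 < len(t) else None)
    return overshoot_pct, t[0]  # inside the band for the whole log

def tracking_rmse(reference, actual):
    """Root-mean-square tracking error between reference and measured samples."""
    n = len(reference)
    return math.sqrt(sum((r - a) ** 2 for r, a in zip(reference, actual)) / n)

# Hypothetical logged response to a unit step, sampled once per second.
t = [0, 1, 2, 3, 4, 5, 6]
y = [0.0, 0.6, 1.2, 1.05, 0.97, 1.01, 1.0]
overshoot, settling = step_metrics(t, y, setpoint=1.0)
print(f"overshoot {overshoot:.1f}%, settling time {settling} s")
```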

Human–Robot Interaction & Safety

Communication via GUIs/voice (NLP), intent signalling, and collaborative safety.

- Intervention rate, near-miss logging, geofencing, and speed/force limits.
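Geofencing reduces to a point-in-polygon test evaluated every control cycle. The sketch below uses the standard ray-casting (even-odd) rule; the polygon format, the `inside_geofence` and `speed_limit` helpers, and the policy of commanding zero speed outside the fence are illustrative assumptions rather than any specific safety product's behaviour. A safety-rated implementation would also handle points lying exactly on an edge and add a margin for localisation uncertainty.

```python
def inside_geofence(point, polygon):
    """Even-odd ray-casting test: True if point (x, y) is inside the polygon.

    polygon is a list of (x, y) vertices in order (either winding). Points exactly
    on an edge may be classified either way, so a real system adds a margin.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a ray from the point toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def speed_limit(point, polygon, v_max):
    """Hypothetical policy: full speed inside the fence, stop outside it."""
    return v_max if inside_geofence(point, polygon) else 0.0

# L-shaped operating area (metres); the notch at the upper right is off-limits.
fence = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]
print(speed_limit((0.5, 3.0), fence, v_max=1.5))  # point in the vertical arm
print(speed_limit((3.0, 3.0), fence, v_max=1.5))  # point in the notch
```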

Compute & Connectivity

On-board edge compute plus fleet services in the cloud; telemetry and OTA updates over reliable networking.

Sensors & Fusion

What this diagram shows

Three translucent circles represent the information each sensor contributes; the overlaps indicate complementary signals that a fusion layer (yellow pill) combines to produce a consistent state estimate. The labels list common outputs and typical fusion methods.

- Vision (camera): rich appearance cues — objects, lanes, text. Low cost and high resolution, but depth is indirect and performance depends on lighting/weather.

- LiDAR: accurate geometry and ranges; lighting-invariant depth for mapping/obstacle detection. Sparse appearance and higher cost; moving objects can cause returns to vary.

- IMU / GNSS: IMU delivers high-rate motion (roll/pitch/yaw rates, linear accel) but drifts; GNSS gives absolute position outdoors but can suffer outages/multipath.

- Fusion (EKF · UKF · VIO): statistically combines the above to reduce drift and increase robustness. Typical outputs: robot pose (x,y,z, roll,pitch,yaw), velocity, IMU biases, plus an uncertainty covariance.
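To make "statistically combines" concrete, here is a one-dimensional linear Kalman filter, the simplest member of the family that the EKF and UKF extend to nonlinear models. It assumes a robot moving along a line, a motion sensor with a constant bias (standing in for IMU or odometry drift) used in the prediction step, and noisy but unbiased GNSS fixes used in the update step. The noise variances `q` and `r`, the bias, and all numbers are illustrative; a real VIO/LIO filter tracks full pose, velocity, and IMU biases as described above.

```python
import random

def kalman_1d(x0, p0, velocities, gnss_fixes, dt, q, r):
    """Scalar Kalman filter for position along a line.

    Predict with a measured velocity (stand-in for IMU/odometry, may be biased);
    update with an absolute GNSS position, or skip the update when the fix is None.
    q and r are the process and measurement noise variances (tuning assumptions).
    """
    x, p = x0, p0
    estimates = []
    for v, z in zip(velocities, gnss_fixes):
        # Predict: propagate with the drifting motion measurement; uncertainty grows.
        x += v * dt
        p += q
        # Update: pull toward the GNSS fix in proportion to the Kalman gain.
        if z is not None:
            k = p / (p + r)
            x += k * (z - x)
            p *= 1.0 - k
        estimates.append(x)
    return estimates, p

# Illustrative scenario: true speed 1 m/s, motion sensor biased by +0.1 m/s,
# GNSS noise with 1 m standard deviation, 100 one-second steps.
rng = random.Random(0)
dt, steps, true_v, bias = 1.0, 100, 1.0, 0.1
truth = [true_v * dt * (i + 1) for i in range(steps)]
v_meas = [true_v + bias] * steps
gnss = [pos + rng.gauss(0.0, 1.0) for pos in truth]

dead_reckoned = sum(v * dt for v in v_meas)
fused, p_final = kalman_1d(0.0, 1.0, v_meas, gnss, dt, q=0.05, r=1.0)

dr_err = abs(dead_reckoned - truth[-1])
fused_err = abs(fused[-1] - truth[-1])
print(f"dead reckoning error: {dr_err:.2f} m, fused error: {fused_err:.2f} m")
```

Dead reckoning alone ends about 10 m off here, while the fused estimate stays close to the truth. Because this filter does not estimate the sensor bias, a small steady offset remains; full fusion filters add biases as extra states for exactly this reason. Passing `None` for a GNSS entry simulates an outage, during which the filter coasts on prediction alone.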

Common pairings

- VIO (Vision + IMU): indoor/low-cost navigation, good in textured scenes.

- LIO (LiDAR + IMU): reliable depth in low-light/texture-poor environments.

- GNSS + VIO/LIO: outdoor global navigation; GNSS constrains long-term drift.

Rule of thumb: use camera + IMU where appearance is strong and lighting is acceptable; add LiDAR for precise geometry or poor lighting; add GNSS when you need global accuracy outdoors.

Sensors & Fusion (What to use when)

| Sensor | What it gives | Strengths | Failure modes | Notes |
|---|---|---|---|---|
| RGB camera | Texture/colour, 2D/3D via stereo or depth nets | Cheap, rich semantics (objects, lanes, text) | Low light, glare, motion blur | Great for CV & scene understanding |
| Depth camera / Stereo | Short-range geometry | Simple obstacle detection/VO | Sunlight IR noise, reflective/black surfaces | Indoors & manipulation; pair with IMU |
| LiDAR | Accurate ranges/3D point clouds | Robust geometry in varied light | Rain/fog/dirty lens, sparse semantics | Excellent for mapping & local planning |
| IMU | Angular rate & linear accel | Fast dynamics, complements vision | Bias/drift over time | Fuse (visual-inertial) to arrest drift |
| Wheel odometry | Planar motion estimate | Stable on flat ground | Slip, uneven surfaces | Good prior for indoor AMRs |
| GPS / RTK | Global position (outdoors) | Absolute pose outdoors | Urban canyons, multipath, loss indoors | Fuse with SLAM; use RTK for precision |

Autonomy Pipeline (Sensors → SLAM → Planning → Control)

Localisation & SLAM — Pose-Graph, RPE, Loop Closure, ATE

Blue = estimated pose-graph; grey dashed = ground truth. Green arrow = RPE at one step (local error). Orange dashed arc = loop-closure factor (helps correct drift). Red bar at last pose = ATE (final drift to truth).
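The two metrics in this figure can be computed in a few lines. This is a simplified sketch: it assumes 2D positions that are already time-associated and expressed in the same frame (full evaluations typically first align the estimate to ground truth with a rigid-body or similarity fit), and its RPE compares world-frame displacements rather than full relative poses, ignoring orientation. The figure marks ATE at the last pose; the common definition, used here, is the RMSE of position error over the whole trajectory.

```python
import math

def ate_rmse(est, gt):
    """Absolute Trajectory Error: RMSE of position differences (2D, pre-aligned)."""
    sq = [(ex - gx) ** 2 + (ey - gy) ** 2 for (ex, ey), (gx, gy) in zip(est, gt)]
    return math.sqrt(sum(sq) / len(sq))

def rpe_rmse(est, gt, delta=1):
    """Relative Pose Error (translation only): RMSE of the difference between
    estimated and true displacements over `delta` steps."""
    sq = []
    for i in range(len(est) - delta):
        dex, dey = est[i + delta][0] - est[i][0], est[i + delta][1] - est[i][1]
        dgx, dgy = gt[i + delta][0] - gt[i][0], gt[i + delta][1] - gt[i][1]
        sq.append((dex - dgx) ** 2 + (dey - dgy) ** 2)
    return math.sqrt(sum(sq) / len(sq))

# Ground truth drives straight along x; the estimate drifts 0.1 m sideways per step.
gt = [(float(i), 0.0) for i in range(5)]
est = [(float(i), 0.1 * i) for i in range(5)]
print(f"ATE = {ate_rmse(est, gt):.3f} m, RPE = {rpe_rmse(est, gt):.3f} m")
```

With a constant per-step drift, ATE grows as the error accumulates while RPE stays at the per-step drift, which is why the two are reported together.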

Algorithm Spotlights

Visual-Inertial SLAM (VI-SLAM)

- Tracks features in images; fuses with IMU to stabilise fast motion.

- Loop closure corrects long-term drift; re-localise after loss.

- When: indoor drones/AMRs where GPS is weak or absent.

Local Planning (DWA / MPC)

- DWA: sample velocities, score for collision/goal heading.

- MPC: optimise controls over a short horizon with constraints.

- Trade-off: DWA is simple/fast; MPC models dynamics and constraints explicitly but needs more compute.
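A compact DWA sketch, assuming a unicycle motion model, point obstacles, and a circular robot footprint. The velocity and acceleration limits, sample counts, scoring weights, and the 2 m clearance cap are illustrative assumptions, and `dwa_select` is a hypothetical name. Full DWA implementations also check that the robot can brake before reaching the nearest obstacle; this sketch only rejects rollouts that collide.

```python
import math

def rollout(x, y, th, v, w, dt, steps):
    """Forward-simulate a unicycle model with constant (v, w); returns (x, y, th) states."""
    states = []
    for _ in range(steps):
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th += w * dt
        states.append((x, y, th))
    return states

def dwa_select(pose, v_now, w_now, goal, obstacles, v_max=1.0, w_max=2.0,
               a_max=0.5, alpha_max=2.0, dt=0.1, horizon=10, radius=0.3,
               samples=11, k_heading=1.0, k_clear=0.5, k_speed=0.2):
    """Return the (v, w) command with the best score inside the dynamic window."""
    x, y, th = pose
    # Dynamic window: velocities reachable in one control period, within limits.
    v_lo, v_hi = max(0.0, v_now - a_max * dt), min(v_max, v_now + a_max * dt)
    w_lo, w_hi = max(-w_max, w_now - alpha_max * dt), min(w_max, w_now + alpha_max * dt)
    best, best_score = (0.0, 0.0), -math.inf  # default: stop if nothing is safe
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            states = rollout(x, y, th, v, w, dt, horizon)
            clearance = min((math.hypot(sx - ox, sy - oy)
                             for sx, sy, _ in states for ox, oy in obstacles),
                            default=math.inf)
            if clearance <= radius:
                continue  # rollout hits an obstacle: inadmissible
            fx, fy, fth = states[-1]
            bearing = math.atan2(goal[1] - fy, goal[0] - fx)
            err = abs(math.atan2(math.sin(bearing - fth), math.cos(bearing - fth)))
            score = (k_heading * (1.0 - err / math.pi)   # 1 when facing the goal
                     + k_clear * min(clearance, 2.0)      # cap so open space doesn't dominate
                     + k_speed * v)                       # prefer making progress
            if score > best_score:
                best, best_score = (v, w), score
    return best

cmd = dwa_select((0.0, 0.0, 0.0), v_now=0.5, w_now=0.0, goal=(5.0, 0.0), obstacles=[])
print(cmd)
```

If every sample collides, the function returns (0, 0), i.e. a stop command; a real stack would typically hand over to a recovery behaviour at that point.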

Behaviour Trees vs. FSMs

- FSM: small tasks, few modes, simple transitions.

- BT: modular, composable tasks with fallback/retry.

- Pattern: FSM for low-level modes; BT for mission logic.
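The fallback/retry structure that makes behaviour trees attractive for mission logic fits in a few classes. This is a minimal, memoryless sketch (every tick re-evaluates from the root), and the `path_clear`, `follow_path`, and `replan` leaves are hypothetical. Production libraries such as BehaviorTree.CPP add blackboards, decorators, and memory variants of the control nodes.

```python
from enum import Enum

class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"

class Action:
    """Leaf node: wraps a callable that returns a Status."""
    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def tick(self):
        return self.fn()

class Sequence:
    """Runs children in order; returns the first non-SUCCESS status (AND-like)."""
    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS

class Fallback:
    """Tries children in order; returns the first non-FAILURE status (OR-like)."""
    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE

trace = []

def leaf(name, result):
    """Hypothetical leaf that records it was ticked and returns a fixed result."""
    def fn():
        trace.append(name)
        return result
    return Action(name, fn)

# Mission logic: follow the path if it is clear, otherwise fall back to replanning.
tree = Fallback(
    Sequence(leaf("path_clear", Status.FAILURE), leaf("follow_path", Status.RUNNING)),
    leaf("replan", Status.RUNNING),
)
status = tree.tick()
print(status, trace)
```

Here the path check fails, so the sequence stops before `follow_path` and the fallback moves on to `replan`, which reports RUNNING; the next tick starts again from the root.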

PID / LQR / MPC Control

- PID: error feedback (P+I+D); quick to tune for speed/heading.

- LQR: minimises quadratic cost; needs a linear model.

- MPC: handles constraints (speed, curvature, torque).

\[
u(t) = K_p\,e(t) + K_i\int_0^t e(\tau)\,d\tau + K_d\,\frac{de(t)}{dt}
\]
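In discrete time, this PID law is usually implemented with a running sum for the integral and a finite difference for the derivative. The sketch below adds two common refinements: clamping the integral state to ±`i_max` (anti-windup) and smoothing the derivative with a first-order low-pass (an exponential moving average with weight `d_alpha`). The class name, parameter names, gains, and the toy integrator plant (x' = u) are illustrative assumptions.

```python
class PID:
    """Discrete PID with integral clamping (anti-windup) and a low-pass derivative."""

    def __init__(self, kp, ki, kd, i_max=float("inf"), d_alpha=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.i_max = i_max        # bound on |integral of error|
        self.d_alpha = d_alpha    # smoothing weight in (0, 1]; 1.0 means no filtering
        self.integral = 0.0
        self.prev_error = None
        self.d_filt = 0.0

    def update(self, error, dt):
        # Integral: accumulate error*dt, then clamp to [-i_max, i_max].
        self.integral = max(-self.i_max, min(self.i_max, self.integral + error * dt))
        # Derivative: finite difference (zero on the first call), then EMA-filtered.
        raw_d = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.d_filt += self.d_alpha * (raw_d - self.d_filt)
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * self.d_filt

# Toy closed loop: plant x' = u (e.g. a rate command), setpoint 1.0, 10 s at 100 Hz.
pid = PID(kp=2.0, ki=1.0, kd=0.1, i_max=5.0, d_alpha=0.5)
x, dt = 0.0, 0.01
for _ in range(1000):
    u = pid.update(1.0 - x, dt)
    x += u * dt
print(f"x after 10 s: {x:.4f}")
```

To reuse it, replace the plant update with your own system and feed in your own error signal (for a line-follower, the pixel offset of the line).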

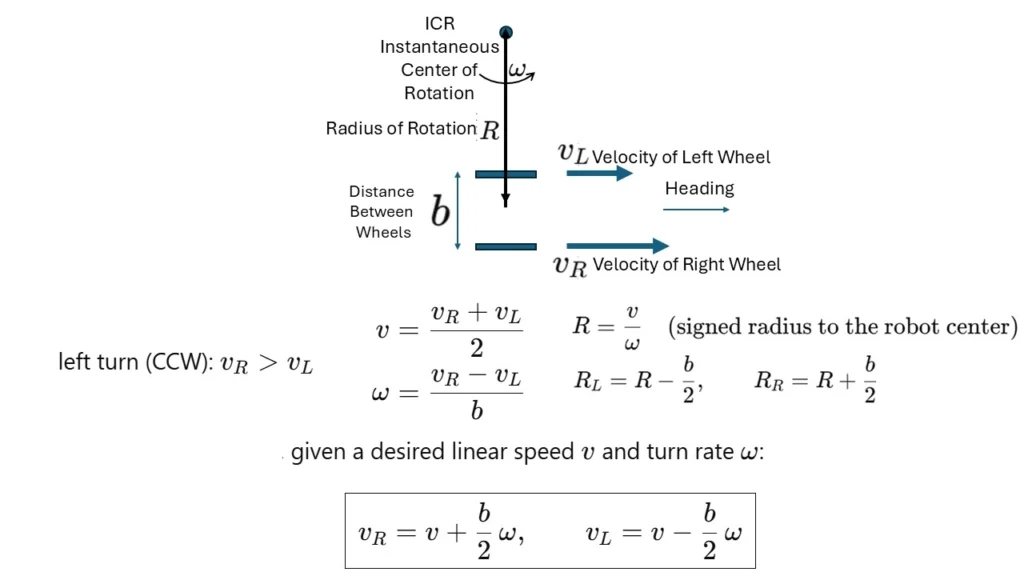

Differential-Drive Kinematics (left turn, CCW)

A two-wheel differential-drive robot steers by setting unequal wheel speeds. For a left (CCW) turn, \(v_R\!>\!v_L\) and the robot rotates about the instantaneous center of rotation (ICR) on the left. With track width \(b\), \(v=\frac{v_R+v_L}{2}\), \(\omega=\frac{v_R-v_L}{b}\), and \(R=\frac{v}{\omega}\); equivalently \(v_R=v+\frac{b}{2}\omega\) and \(v_L=v-\frac{b}{2}\omega\).

Description. This figure illustrates a two-wheel differential-drive robot turning left (counter-clockwise). The right wheel is faster than the left ($v_R > v_L$), so the robot rotates about the instantaneous center of rotation (ICR), located to the left of the chassis. The track width $b$ is the distance between the wheels, and $R$ is the turning radius measured to the axle midpoint.

\[
v=\frac{v_R+v_L}{2},\quad
\omega=\frac{v_R-v_L}{b},\quad
R=\frac{v}{\omega}.
\]

The inside and outside wheel-path radii are

\[
R_L = R-\frac{b}{2},\quad
R_R = R+\frac{b}{2}.
\]

Equivalently, for a desired linear speed $v$ and turn rate $\omega$,

\[
v_R = v + \frac{b}{2}\,\omega,\quad
v_L = v - \frac{b}{2}\,\omega.
\]

Sign convention: counter-clockwise (CCW) is positive, so $\omega>0$ and the ICR lies on the left when $v_R>v_L$.
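These relations translate directly into code. A minimal sketch assuming SI units, CCW-positive $\omega$ as stated above, and a hypothetical 0.5 m track width; the helper names are illustrative.

```python
import math

def wheels_to_body(v_r, v_l, b):
    """Forward kinematics: wheel speeds (m/s) -> (v, omega, R).

    R is inf when driving straight; a negative R means a right (CW) turn.
    """
    v = (v_r + v_l) / 2.0
    omega = (v_r - v_l) / b
    radius = math.inf if omega == 0 else v / omega
    return v, omega, radius

def body_to_wheels(v, omega, b):
    """Inverse kinematics: desired (v, omega) -> (v_R, v_L)."""
    return v + b / 2.0 * omega, v - b / 2.0 * omega

def wheel_path_radii(radius, b):
    """Inside (left) and outside (right) wheel-path radii for a CCW turn (R > 0)."""
    return radius - b / 2.0, radius + b / 2.0

b = 0.5  # hypothetical track width in metres
v, omega, R = wheels_to_body(1.2, 0.8, b)
print(v, omega, R, wheel_path_radii(R, b))
```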

Planning Stack

Collaborative Safety Envelope

ROS 2 Nodes & Topics (Example)

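Typical topics include /image_raw and /imu/data for sensors, plus planned paths of type nav_msgs/msg/Path, each with appropriate QoS (the best-effort SensorData profile for high-rate sensors, reliable delivery for planning/control).

Worked Example: PID Tuning for a Line-Follower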

- Define error: e = line_center − camera_detected_center (pixels).

- Start with P-only: increase Kp until it follows the line but starts to oscillate.

- Add D: raise Kd to damp oscillation; monitor overshoot and settling time.

- Add I: small Ki to remove steady-state bias on long curves.

- Validate: 5 laps → compute mean lap time & variance; count off-track events.

Example (after tuning): Kp = 0.9, Kd = 0.12, Ki = 0.02; lap time = 41.3 ± 1.6 s (5 laps); off-track events = 0.

Tip: clamp the integral (|I| ≤ I_max) and add a low-pass filter on the derivative term.
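The validation step reduces to a few summary statistics. A sketch using Python's standard `statistics` module; the lap times below are hypothetical, not the measurements reported above, and reading "±" as the sample standard deviation (n - 1) is an assumption. The relative spread (standard deviation over mean) gives a unit-free figure to compare against a percentage target.

```python
import statistics

def lap_summary(lap_times_s):
    """Mean, sample standard deviation (n - 1), and relative spread (%) of lap times."""
    mean = statistics.mean(lap_times_s)
    std = statistics.stdev(lap_times_s)
    return mean, std, 100.0 * std / mean

laps = [42.0, 43.0, 41.0, 42.5, 41.5]  # hypothetical lap times in seconds
mean, std, spread_pct = lap_summary(laps)
print(f"lap time = {mean:.1f} ± {std:.1f} s ({len(laps)} laps), spread {spread_pct:.1f}%")
```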

Illustrative Case Studies

Warehouse AMR

- Map with LiDAR SLAM; local plan with DWA; fleet traffic rules.

- KPIs: units/hour, charger wait, near-miss count.

- Gotcha: reflective floors → LiDAR dropouts → add camera fusion.

Indoor Drone Survey

- VI-SLAM + IMU; waypoint missions; pause/hover accuracy checks.

- KPIs: hover error, flight time, collision-free passes.

- Gotcha: GPS-denied; add fiducials for re-localisation.

Pick-and-Place Cobot

- Vision grasp detection; MoveIt planning; force control for insertion.

- KPIs: success %, cycle time, operator intervention rate.

- Gotcha: shiny parts → bad segmentation → polarised lighting.

Debugging & Reliability Checklist

- Replay logs → are timestamps monotonic? Any time jumps?

- Raw sensor plots (gyro/accel, wheel encoders) → obvious bias/saturation?

- CPU/GPU & thermals → does performance fall with heat?

- Perception: confusion matrix by class; worst 20 frames.

- SLAM: plot ATE/RPE vs. speed; check loop closures.

- Planner: collision checks in sim; latency per stage.

- Ops: log interventions & near-misses; define rollback triggers; version model+map+config.

- Safety: verify E-stop, geofence, and degraded-mode behaviours on every release.
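The first checklist item, timestamp sanity in replayed logs, is easy to automate. A sketch that flags samples whose timestamp does not move forward and gaps larger than a chosen threshold; the function name, treating equal consecutive stamps as suspicious, and the 50 ms threshold for a nominal 100 Hz IMU stream are illustrative assumptions.

```python
def check_timestamps(stamps, max_gap):
    """Return (indices that did not move forward, indices after a gap > max_gap).

    Equal consecutive stamps are flagged as well, on the assumption that the
    stream should be strictly increasing.
    """
    backwards, gaps = [], []
    for i in range(1, len(stamps)):
        step = stamps[i] - stamps[i - 1]
        if step <= 0:
            backwards.append(i)
        elif step > max_gap:
            gaps.append(i)
    return backwards, gaps

# Hypothetical 100 Hz IMU stamps (s) with one reordered sample and one dropout.
imu = [0.00, 0.01, 0.02, 0.015, 0.03, 0.10]
print(check_timestamps(imu, max_gap=0.05))
```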

Applications

Autonomous Vehicles

- Perception stack for lanes, objects, and free space.

- Behaviour planning at intersections; V2X for coordination.

- KPIs: disengagements, route success, latency, energy/thermal limits.

Aerial Robots (Drones/UAVs)

- Visual-inertial navigation in GPS-denied spaces; waypoint missions.

- Use cases: inspection, mapping, delivery, disaster response.

- KPIs: hover error, wind tolerance, flight time, payload/Wh.

Industrial & Collaborative Robotics

- Assembly, welding, inspection; cobots with safety-rated monitors.

- Vision-guided pick-and-place; force/torque control.

- KPIs: cycle time, first-pass yield, MTBF, human proximity events.

Service & Medical Robotics

- Hospital logistics, assistive devices, surgical teleoperation.

- HRI for intent/consent; auditability and privacy safeguards.

- KPIs: task success, intervention rate, user satisfaction.

Logistics & Warehousing

- AMRs for tote moves, inventory scanning, and pallet transport.

- Fleet management, traffic rules, charging orchestration.

- KPIs: UPH, throughput variance, dock utilisation, charger wait.

Field Robotics & Agriculture

- Rugged perception for crops/terrain; RTK for precision rows.

- Spraying/harvesting; herd and wildlife monitoring.

- KPIs: coverage %, fuel/battery per hectare, false-positive weeds.

Space & Extreme Environments

- Autonomy for exploration and satellites.

- Radiation tolerance, fault detection, delayed comms.

- KPIs: navigation drift, duty cycle, autonomous recovery rate.

Data, Evaluation & Safety

- Unit tests & replay logs → simulation → HIL (hardware-in-loop) → pilot field tests.

- Scenario libraries (night, rain, dust); domain randomisation.

- Telemetry & event cameras for rare-case capture.

- Edge compute for control; cloud for fleet monitoring, maps, OTA.

- Latency budgets per stage (perception → planning → control).

- Versioning: model + map + config; rollback plans.

Safety & Responsibility checklist

- Redundant sensing; safe-state & E-stop; geofencing.

- Human-in-the-loop override; intervention logging.

- Privacy: video/PII handling; transparent data notices.

- Bias checks for vision models; adverse-case testing.

- Algorithms: unit/CI → integration (ROS2 nodes) → system tests.

- Benchmarks: SLAM ATE/RPE; detection mAP/IoU; control RMSE.

- Operations: uptime, MTBF, maintenance MTTR.

Autonomous Vehicles

Autonomous vehicles are among the most transformative applications of robotics and AI, spanning land, air, and sea transportation.

Self-driving Cars:

Equipped with sensors (e.g., LiDAR, cameras, radar) and AI algorithms, these vehicles navigate complex urban environments, recognize traffic signs, detect pedestrians, and plan optimal routes while adhering to traffic laws.

Autonomous Trucks:

Aimed at long-haul transportation, these vehicles can optimize logistics by reducing fuel consumption and human error, and by operating for longer stretches than human drivers.

Marine Vessels:

Unmanned surface or underwater vessels are used for oceanographic research, cargo shipping, or military operations.

Autonomous Vehicles: Core Stack and Vehicle Types

Autonomous vehicles combine robust sensing, precise localisation, predictive planning, and safe control to act reliably in open environments. Below is a practical view of the core stack and how it appears across major vehicle types.

- Perception: Camera/LiDAR/radar + learned detectors & trackers (objects, lanes, traffic lights), occupancy/costmaps, freespace.

- Localisation & Mapping: GNSS + IMU + wheel odometry; visual/LiDAR SLAM; HD/semantic maps; loop closure for drift correction.

- Prediction: Short-horizon motion forecasts for dynamic agents (vehicles, cyclists, pedestrians); intent & interaction modelling.

- Planning: Behaviour layer (yield, overtake, stop) → trajectory generation (optimisation/MPC) subject to kinematics & safety envelope.

- Control: Low-level longitudinal/lateral control (PID/LQR/MPC), actuator limits, fault detection, graceful degradation.

- Safety & Ops: ISO 26262 (functional safety) and ISO 21448 (SOTIF) style thinking, geofencing, remote ops, data logging, simulation → HIL → pilot rollout.

Self-Driving Cars (Robotaxi / Private AV)

- Sensors: Multi-camera ring, LiDAR, radar; high-rate IMU; GNSS with RTK where available.

- Environment: Dense urban traffic, pedestrians, signage; complex right-of-way rules.

- Maps: HD & semantic maps for lanes, traffic lights, drivable space; continuous map updates.

- Challenges: Night/rain/fog, occlusions, unusual roadworks, emergency vehicles, rare corner cases.

Key metrics: policy rule compliance, intervention/disengagement rate, comfort (jerk), near-miss rate.

Autonomous Trucks (Middle-Mile/Long-Haul)

- Focus: Highway driving with controlled merges, platooning, long duty cycles.

- Constraints: Longer stopping distance, heavy load dynamics, strict lane keeping & cut-in handling.

- Ops: Depot geofencing, remote supervision, predictive maintenance and fuel optimisation.

Common stack simplification: highway-prioritised perception and behaviour; strong redundancy for braking/steering.

Shuttles & Rail-Like Systems

- Use-case: Fixed routes/campuses with low speed and frequent stops.

- Benefits: Constrained ODD simplifies perception & planning; high service availability.

- Safety: Fused safety LiDAR, emergency stop chains, conservative envelopes near crowd areas.

Great for early deployments where controlled corridors reduce edge-case complexity.

Last-Mile Ground Robots

- Environment: Pavements, crossings, shared spaces with pedestrians and pets.

- Perception: Wide-FOV cameras + depth/LiDAR for curb ramps, drop-offs, and occlusions.

- Ops: Remote assist for rare situations; docking/charging autonomy; anti-tamper measures.

Key metric: mission success without human assist; safe social navigation and etiquette.

Aerial Drones (UAV/UAS)

- Missions: Inspection, mapping, delivery, emergency response.

- Navigation: GNSS-denied indoor VIO/SLAM; geofencing; wind-aware path planning.

- Safety: Return-to-home, lost-link handling, parachute/prop-guards where applicable.

Consider U-space/UTM rules, VLOS/BVLOS constraints, and no-fly zones.

Marine Vessels (Surface & Underwater)

- Sensing: Radar/AIS for traffic; cameras/LiDAR above water; sonar/DVL/pressure for underwater.

- Localisation: GPS/RTK on surface; acoustic beacons and inertial dead-reckoning underwater.

- Planning: COLREGs compliance, current/tide modelling, station keeping & waypoint tracking.

Use cases: oceanographic survey, port automation, pipeline inspection, spill response.

Operational Excellence & Safety (what real deployments require)

- Validation pipeline: unit → simulation (scenario libraries, night/rain/fog) → HIL → shadow → pilot.

- Monitoring: health & performance KPIs, incident triage, map freshness, OTA updates and rollback.

- Responsible AI: bias checks for perception; privacy-aware logging; clear user notices; geofenced ODD.

- Metrics: RPE/ATE for localisation, collision-surrogate metrics (TTC), comfort (lateral/longitudinal jerk), MTTF.

- Fallbacks: degraded-mode behaviours, safe-stop envelopes, and remote assist handover.
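Time-to-collision (TTC), listed above as a collision-surrogate metric, has a simple constant-velocity form: the gap to the object divided by the closing speed. The sketch assumes a single lead object on the same path and constant speeds; the helper names, the log samples, and the 3 s threshold are hypothetical, not a standard value.

```python
import math

def time_to_collision(gap_m, ego_speed, lead_speed):
    """Constant-velocity TTC (s) to a lead object; inf when the gap is not closing."""
    closing = ego_speed - lead_speed
    return gap_m / closing if closing > 0 else math.inf

def low_ttc_events(samples, threshold_s):
    """Count log samples (gap, ego speed, lead speed) whose TTC falls below threshold_s."""
    return sum(1 for gap, ego, lead in samples
               if time_to_collision(gap, ego, lead) < threshold_s)

# Hypothetical log: (gap m, ego m/s, lead m/s).
log = [(30.0, 20.0, 15.0), (12.0, 20.0, 15.0), (10.0, 15.0, 18.0)]
ttcs = [time_to_collision(*s) for s in log]
print(ttcs, low_ttc_events(log, threshold_s=3.0))
```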

Drone Navigation and Control

Drones, or unmanned aerial vehicles (UAVs), benefit significantly from AI to enhance their autonomy and versatility.

Delivery Services:

Companies like Amazon and DHL have trialled AI-powered drones for package delivery in urban and rural areas, optimizing flight paths and avoiding obstacles.

Agriculture:

Drones equipped with computer vision analyze crop health, assess irrigation needs, and monitor large agricultural areas with precision.

Disaster Response:

Autonomous drones assist in search and rescue operations, surveying hazardous areas, and delivering supplies to inaccessible locations.

Surveillance and Inspection:

Drones inspect critical infrastructure (e.g., bridges, wind turbines, pipelines) and provide real-time feedback, reducing risk and cost.


Metrics Cheat-Sheet

| Area | Key metrics | Notes |
|---|---|---|
| Perception (CV) | mAP / mAR, IoU / Dice | Object detection & segmentation quality. |
| Tracking | MOTA / MOTP, ID-switches | Multi-object tracking stability. |
| SLAM / Localisation | ATE, RPE, loop-closure rate | Trajectory & drift accuracy. |
| Planning | Success rate, path length ratio, time-to-goal | Avoid collisions & dead-ends. |
| Control | Tracking error (RMSE), overshoot, settling time | Trajectory following quality. |
| System | Latency budget, throughput, MTBF/MTTR | Real-time performance & reliability. |
| Energy | Wh per km / per task, runtime | Battery/fuel efficiency under load. |
| HRI & Safety | Intervention rate, near-miss count | Operator workload & safety margins. |
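IoU underpins the perception row of this table: detection metrics such as mAP count a prediction as correct when its IoU with a ground-truth box exceeds a threshold such as 0.5, and segmentation quality is reported as IoU or Dice. A minimal sketch for axis-aligned boxes, assuming the (x_min, y_min, x_max, y_max) format; Dice follows from the identity Dice = 2·IoU / (1 + IoU), which holds for the same pair of regions.

```python
def box_iou(a, b):
    """IoU of axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def dice_from_iou(iou):
    """Dice (F1 overlap) for the same pair of regions: 2*IoU / (1 + IoU)."""
    return 2.0 * iou / (1.0 + iou)

pred, truth = (0, 0, 2, 2), (1, 1, 3, 3)
iou = box_iou(pred, truth)
print(f"IoU = {iou:.3f}, Dice = {dice_from_iou(iou):.3f}, match@0.5 = {iou >= 0.5}")
```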

Industrial Automation and Service Robotics

Industrial and service robots play an essential role in improving productivity and safety across various industries.

Smart Manufacturing:

Robots equipped with AI optimize production lines by performing precision tasks, quality control, and predictive maintenance. They can adapt to changing workflows and handle complex manufacturing processes.

Logistics and Warehousing:

Autonomous mobile robots (AMRs) streamline inventory management, order picking, and transportation within warehouses, significantly enhancing operational efficiency.

Healthcare Robotics:

Service robots assist in healthcare by supporting surgeons in high-precision procedures, delivering medications in hospitals, or aiding in elderly care with mobility support.

Customer Service:

Robots in hospitality and retail interact with customers, answering questions, guiding them, or providing personalized experiences through AI-driven natural language processing.

Starter Projects

Sim Obstacle-Avoidance (ROS 2 + Nav2)

- Teleop, then autonomous navigation in Gazebo with lidar/vision.

- Baseline: reactive; Improve: local planner with costmaps.

Target: success ≥ 90% on 20 random maps; no collisions.

Line-Follower with PID

- Camera or IR sensors; tune PID for turns and speed changes.

- Stretch: add stop-sign detection via simple CV.

Target: lap-time spread (standard deviation relative to the mean) < 5% across 5 runs; no off-track events.

Mini-SLAM & Mapping

- Run VIO/ORB-SLAM or RTAB-Map in a small indoor world.

- Compare ATE/RPE across sensor configs; log loop closures.

Target: ATE < 0.15 m over a 50 m trajectory; ≥ 2 loop closures.

Pick-and-Place with MoveIt

- UR or Panda arm in sim; grasp planning with simple depth cues.

- Stretch: bin-picking with segmentation from computer vision.

Target: success ≥ 80% on 50 attempts; mean cycle time benchmarked.

Core Technologies Powering Robotics and Autonomous Systems

Sensor Integration:

Cameras, LiDAR, ultrasonic sensors, and more for environmental perception.

Machine Learning:

Algorithms for object recognition, predictive analytics, and decision-making.

Reinforcement Learning:

Training robots to learn optimal actions through trial and error in dynamic environments.

Simultaneous Localization and Mapping (SLAM):

For building real-time maps and navigating unfamiliar areas.

Edge Computing:

Processing data locally for real-time decision-making without reliance on cloud infrastructure.

Human-Robot Interaction (HRI):

Enhancing collaboration between robots and humans in shared spaces.

Why Study Robotics and Autonomous Systems

Exploring the Fusion of Intelligence, Mechanics, and Control

Understanding Core Components of Intelligent Machines

Driving Innovation Across Industries

Engaging with Ethical, Social, and Safety Considerations

Preparing for a High-Demand Future in Engineering and AI

Robotics: Quick Answers

Robotics vs. Autonomous Systems? Robotics builds the physical machine; autonomous systems add perception, decision, and control so it can operate with minimal human input.

What maths/programming helps most? Linear algebra, calculus, probability; Python/C++; control theory and basic optimisation.

Which simulator should I use? ROS 2 with Gazebo/Ignition; for manipulation, add MoveIt; for fast physics, Isaac or Webots are common alternatives.

Edge or cloud for autonomy? Core control/perception at the edge for latency; fleet monitoring, maps, and training in the cloud.

How is safety evaluated? Layered tests (unit → sim → HIL → field), intervention/near-miss logs, and fail-safe behaviours (stop/geofence).

Robotics and Autonomous Systems: Conclusion

By integrating AI into robotics and autonomous systems, these technologies are transforming industries, improving efficiency, safety, and accessibility while opening up new possibilities for innovation.

Key Terms

- SLAM: Simultaneous Localisation and Mapping; estimate pose while building a map, and reduce drift via loop closure.

- ATE / RPE: Absolute / Relative Pose Error; core SLAM metrics for overall drift and step-to-step accuracy.

- MOTA / MOTP: Multi-object tracking accuracy/precision; MOTA penalises ID switches, misses, and false positives.

- Cobot: Collaborative robot designed to work safely near people using force/space limits and sensors.

- HIL: Hardware-in-the-Loop testing; run control/perception on real hardware with simulated environments.

- Geofencing: Virtual boundaries that restrict where a robot can operate; tied to safety modes and E-stops.

Robotics and Autonomous Systems: Review Questions and Answers

1. What is robotics and how is it defined in modern engineering?

Answer: Robotics is an interdisciplinary field that integrates mechanical engineering, electronics, and computer science to design, build, and operate robots. It involves the creation of systems that can perform tasks autonomously or semi-autonomously by processing sensor data and executing pre-programmed instructions. Modern engineering views robotics as a critical component in automation, offering solutions that enhance productivity and safety in various industries. The field continuously evolves as emerging technologies improve robot intelligence and adaptability.

2. What are the key components of a robotic system?

Answer: A robotic system typically consists of a mechanical structure, sensors, actuators, and a control unit or processor. The mechanical structure provides the physical form and movement capabilities, while sensors collect data about the environment. Actuators convert electrical signals into physical actions, enabling movement and task execution. The control unit processes sensor inputs and coordinates actuator outputs, ensuring that the robot operates efficiently and adapts to changing conditions.

3. How do sensors contribute to the functionality of robots?

Answer: Sensors play a vital role in robotics by providing real-time data about the robot’s surroundings and internal states. They enable robots to detect obstacles, measure distances, recognize patterns, and respond to environmental changes. This sensory information is crucial for tasks such as navigation, object manipulation, and safety monitoring. By integrating multiple types of sensors, robots can perform complex functions with a high degree of accuracy and reliability.

4. What is the role of actuators in robotic systems?

Answer: Actuators are the components responsible for converting electrical signals into physical motion, allowing robots to interact with their environment. They drive movements such as rotation, linear displacement, and gripping, which are essential for task execution. The performance and efficiency of a robotic system heavily depend on the type and quality of its actuators. Effective actuator integration ensures precise control, smooth operation, and responsiveness in dynamic applications.

5. How does feedback control improve the performance of robots?

Answer: Feedback control is a mechanism that uses sensor data to continuously adjust the actions of a robot, ensuring accuracy and stability in its operations. By comparing the desired outcome with the actual performance, feedback control systems can correct errors in real time. This process is fundamental for tasks that require high precision, such as robotic assembly or delicate object handling. Ultimately, feedback control enhances reliability, adaptability, and overall system performance in robotics.

6. What are some common applications of robotics in various industries?

Answer: Robotics is applied in a wide range of industries, from manufacturing and healthcare to agriculture and logistics. In manufacturing, robots improve efficiency by automating repetitive tasks and enhancing precision in assembly lines. In healthcare, robotic systems assist in surgeries, rehabilitation, and patient care, offering enhanced precision and consistency. The versatility of robotics makes it a valuable asset for addressing complex challenges and driving innovation across multiple sectors.

7. How is artificial intelligence integrated into modern robotics?

Answer: Artificial intelligence is integrated into robotics to enhance decision-making, perception, and adaptability in dynamic environments. AI algorithms enable robots to learn from their experiences, improve their performance over time, and make autonomous decisions based on sensor inputs. This integration allows robots to perform complex tasks, such as object recognition and autonomous navigation, with greater efficiency. As AI continues to evolve, its synergy with robotics is expected to revolutionize automation and intelligent systems design.

8. What is the significance of human-robot interaction in robotics?

Answer: Human-robot interaction focuses on designing systems that facilitate effective communication and collaboration between humans and robots. It is significant because it ensures that robots are user-friendly, safe, and capable of working alongside human operators in various settings. Effective interaction strategies lead to improved task performance and reduce the risk of errors or accidents in collaborative environments. This field is essential for integrating robotics into everyday life and enhancing the overall user experience.

9. What challenges are commonly faced in designing autonomous robots?

Answer: Designing autonomous robots involves overcoming challenges such as sensor integration, real-time decision-making, and ensuring reliable performance in unpredictable environments. Engineers must address issues like error propagation, system latency, and the robustness of control algorithms. Balancing complexity with efficiency remains a significant hurdle, particularly when robots are required to operate safely alongside humans. Continuous research and development are essential to overcome these challenges and advance the capabilities of autonomous systems.

10. How do simulation and modeling support robotics development?

Answer: Simulation and modeling provide critical tools for testing and refining robotic designs before physical prototypes are built. They allow engineers to experiment with various control strategies, sensor configurations, and mechanical designs in a virtual environment. This process helps identify potential issues and optimize system performance without the risk and cost associated with real-world trials. Consequently, simulation and modeling accelerate innovation and enhance the reliability and effectiveness of robotic systems.

Robotics and Autonomous Systems: Thought-Provoking Questions and Answers

1. How might robotics evolve in the next decade with the integration of emerging technologies?

Answer: Robotics is poised for transformative growth as emerging technologies such as artificial intelligence, machine learning, and advanced materials are integrated into system designs. The fusion of these innovations will likely result in robots that are more adaptable, intelligent, and capable of performing complex tasks in dynamic environments. Future robots may exhibit improved decision-making skills, greater autonomy, and enhanced interaction capabilities, redefining their roles in both industrial and everyday settings. This evolution is expected to drive significant advancements in automation, healthcare, transportation, and beyond.

The integration of emerging technologies will also open up new research avenues and collaboration opportunities across disciplines. As robotics evolves, ethical considerations and regulatory frameworks will need to be developed to ensure that these advancements benefit society while mitigating potential risks.

2. In what ways can robotics contribute to addressing global challenges such as climate change or resource scarcity?

Answer: Robotics can play a crucial role in addressing global challenges by optimizing resource management, enhancing environmental monitoring, and automating processes in sustainable energy production. For example, robots can be deployed for precision agriculture to minimize waste and maximize crop yields, or used in environmental cleanup operations to safely manage hazardous materials. These applications help reduce the ecological footprint and improve the efficiency of resource utilization. Moreover, the adaptability of robotics enables targeted interventions in areas that are difficult for humans to access, thereby contributing to broader sustainability goals.

Beyond immediate applications, robotics can drive innovation in renewable energy technologies and infrastructure management. By automating data collection and analysis, robots can support more informed decision-making in climate policy and environmental conservation, ultimately leading to more resilient and sustainable communities worldwide.

3. How can the development of soft robotics redefine human-robot interaction and collaboration?

Answer: Soft robotics, characterized by flexible materials and adaptable structures, has the potential to transform the way robots interact with humans. Unlike traditional rigid robots, soft robots can operate safely in close contact with people, reducing the risk of injury and making collaboration easier. Their flexible nature allows for more natural movements and interactions, which are particularly beneficial in applications such as medical devices, assistive technologies, and wearable robotics. This advancement opens up new possibilities for integrating robots seamlessly and intuitively into everyday human activities.

The development of soft robotics also encourages the design of systems that can mimic biological functions and adapt to complex environments. As these robots become more prevalent, they are likely to foster deeper trust and cooperation between humans and machines, ultimately leading to more harmonious and productive partnerships in various domains.

4. What ethical considerations arise from the increasing autonomy of robots in decision-making roles?

Answer: As robots become more autonomous, ethical considerations such as accountability, transparency, and bias in decision-making processes become increasingly critical. Autonomous systems that make decisions without human intervention must be designed with clear guidelines to ensure they act in a manner that is fair and just. There is a risk that biased algorithms or unforeseen programming errors could lead to discriminatory outcomes or unintended harm. Ensuring that these systems are developed with ethical principles at the forefront is paramount for their safe integration into society.

The challenge also extends to determining liability when autonomous systems fail or cause harm. Establishing regulatory frameworks and ethical standards that govern the behavior of autonomous robots will be essential to maintain public trust and promote responsible innovation. Collaborative efforts between technologists, ethicists, and policymakers are needed to address these issues comprehensively.

5. How might advancements in machine learning reshape the design and functionality of future robotic systems?

Answer: Advances in machine learning are set to greatly enhance the capabilities of robotic systems by enabling them to learn from data and improve their performance over time. Machine learning algorithms allow robots to adapt to new tasks and environments without explicit programming, making them more versatile and efficient. These algorithms can optimize decision-making processes, improve pattern recognition, and facilitate real-time adjustments in complex scenarios. As a result, future robots are likely to be more autonomous, better at handling unpredictable situations, and more closely integrated into industrial and personal applications.

In addition, machine learning can contribute to the development of more sophisticated control systems that enable robots to operate with greater precision and reliability. This evolution will likely lead to robots that can anticipate and respond to human needs more effectively, thereby transforming industries such as healthcare, manufacturing, and service delivery. The synergy between machine learning and robotics promises a future where intelligent systems are seamlessly woven into the fabric of everyday life.

6. Can robotics play a role in enhancing accessibility and assistive technologies for differently-abled individuals?

Answer: Robotics has significant potential to enhance accessibility and assistive technologies by providing personalized solutions that improve mobility, communication, and daily living for differently-abled individuals. Robotic prosthetics, exoskeletons, and assistive devices can restore or augment lost functions, offering users greater independence and improved quality of life. These systems are designed to adapt to individual needs, providing customized support through advanced sensors and machine learning algorithms. The integration of robotics in assistive technology represents a major step forward in inclusivity and accessibility.

Moreover, ongoing research and innovation in this area continue to push the boundaries of what is possible, paving the way for more intuitive and user-friendly interfaces. As these technologies become more affordable and widely available, they hold the promise of transforming healthcare and rehabilitation, enabling individuals to overcome physical limitations and participate more fully in society.

7. How do you envision the balance between human labor and robotic automation evolving in industrial settings?

Answer: The balance between human labor and robotic automation is likely to evolve into a more collaborative model where robots handle repetitive, dangerous, or highly precise tasks while humans focus on creative, strategic, and supervisory roles. This integration can increase efficiency and productivity, as robots take over tasks that are physically demanding or prone to human error. Human workers will be able to concentrate on problem-solving, innovation, and tasks that require emotional intelligence, making for a more dynamic workplace. This shift not only improves safety but also enhances the overall quality of work, driving industrial progress.

As automation becomes more sophisticated, ongoing workforce training and education will be crucial to help workers transition into roles that complement robotic systems. The evolution of this balance will likely stimulate job creation in emerging fields related to robotics maintenance, programming, and system management, ensuring that the benefits of automation are broadly shared.

8. What are the potential risks of over-reliance on robotic systems in critical infrastructure and defense?

Answer: Over-reliance on robotic systems in critical infrastructure and defense can pose significant risks, including system vulnerabilities, reduced human oversight, and potential catastrophic failures in the event of malfunctions. As these systems become more autonomous, any errors or cyberattacks could have far-reaching consequences, potentially disrupting essential services or compromising national security. There is also the risk that decision-making processes become opaque, making it difficult to identify and correct problems before they escalate. These concerns underscore the importance of implementing robust safety protocols, regular system audits, and backup measures to mitigate risks.

Furthermore, it is essential to maintain a balance between automation and human control to ensure that critical decisions are subject to human judgment when necessary. Developing resilient systems with built-in redundancies and fail-safe mechanisms will be crucial in managing the risks associated with extensive robotic integration in sensitive areas.

9. How can interdisciplinary approaches drive innovation in robotics research and development?

Answer: Interdisciplinary approaches are vital for driving innovation in robotics by combining insights from fields such as mechanical engineering, computer science, neuroscience, and materials science. This convergence of knowledge enables the development of more advanced and adaptable robotic systems that can operate in a variety of environments. Collaboration across disciplines fosters creative problem-solving, allowing researchers to tackle complex challenges that a single field might not overcome on its own. The integration of diverse perspectives leads to breakthroughs in areas like sensor technology, machine learning, and bio-inspired design, all of which are essential for advancing robotics.

By leveraging expertise from multiple domains, interdisciplinary research also encourages the cross-pollination of ideas and techniques, accelerating the pace of innovation. This collaborative environment is likely to result in robots that are more efficient, versatile, and capable of addressing real-world challenges in novel ways, ultimately transforming the landscape of technology and industry.

10. In what ways might robotics influence the future of education and skill development?

Answer: Robotics is set to have a profound impact on education by providing interactive, hands-on learning experiences that bridge the gap between theoretical concepts and practical applications. Educational robotics kits and programmable robots are already being used to teach STEM subjects, coding, and problem-solving in a dynamic and engaging manner. These tools not only make learning more accessible but also encourage creativity, collaboration, and critical thinking among students. As robotics technology continues to advance, its integration into educational curricula will prepare future generations for careers in emerging technological fields.

Furthermore, robotics can offer personalized learning experiences by adapting to individual students’ progress and learning styles. This adaptability can help educators identify areas where students need additional support and tailor instruction accordingly. The resulting shift in educational paradigms promises to cultivate a skilled and innovative workforce that is well-equipped to navigate the challenges of a technology-driven world.

11. How could the integration of augmented reality with robotics revolutionize maintenance and repair processes?

Answer: The integration of augmented reality (AR) with robotics has the potential to revolutionize maintenance and repair by providing real-time, immersive guidance to technicians. AR can overlay digital information onto physical systems, allowing for more precise diagnostics and repair instructions during robotic maintenance. This technology enhances the efficiency and accuracy of maintenance procedures, reducing downtime and the likelihood of errors. By combining AR with robotics, complex repair tasks can be performed more safely and effectively, transforming traditional maintenance processes into highly interactive and intuitive experiences.

This integration also facilitates remote support and training, enabling experts to guide technicians through intricate repair processes from a distance. The result is a more resilient and responsive maintenance infrastructure that can quickly adapt to the evolving demands of modern industrial systems.

12. What role do you see for open-source platforms in democratizing robotics innovation globally?

Answer: Open-source platforms can democratize robotics innovation by making cutting-edge tools, software, and design resources freely accessible to researchers, hobbyists, and startups around the world. This accessibility lowers the barrier to entry, enabling a diverse range of innovators to contribute to advancements in robotics technology. Open-source communities foster collaboration, rapid prototyping, and knowledge sharing, which accelerates the pace of technological breakthroughs and drives cost-effective solutions. By empowering individuals and organizations regardless of their resources, these platforms play a crucial role in expanding the reach and impact of robotics research.

In addition, open-source platforms encourage transparency and collective problem-solving, leading to more robust and adaptable systems. The global collaboration facilitated by these platforms can result in innovations that address local challenges while also contributing to the broader advancement of robotics worldwide.

Robotics and Autonomous Systems: Numerical Problems and Solutions

1. Calculating Total Response Time in a Robotic System

Solution:

Step 1: Determine the sensor delay, processing time, and actuator lag; for instance, sensor delay = 0.05 seconds, processing time = 0.1 seconds, and actuator lag = 0.07 seconds.

Step 2: Sum these individual times: 0.05 + 0.1 + 0.07 = 0.22 seconds.

Step 3: Verify that the cumulative response time of 0.22 seconds meets the system’s operational requirements and adjust any component if necessary.
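The three steps can be sketched in Python as a simple latency budget. This sketch assumes the sensor, processing, and actuator stages run strictly one after another (no overlap), so their delays add; the 0.25 s limit used for the Step 3 check is a hypothetical requirement, not a figure from the problem.

```python
def total_response_time(sensor_delay_s: float, processing_s: float, actuator_lag_s: float) -> float:
    """End-to-end response time in seconds for sequential sense -> compute -> act stages."""
    return sensor_delay_s + processing_s + actuator_lag_s


def meets_requirement(total_s: float, max_allowed_s: float) -> bool:
    """True if the pipeline responds within the allowed latency budget."""
    return total_s <= max_allowed_s


if __name__ == "__main__":
    total = total_response_time(0.05, 0.1, 0.07)
    print(f"Total response time: {total:.2f} s")
    # max_allowed_s = 0.25 is a hypothetical requirement for illustration only.
    print("Within budget:", meets_requirement(total, max_allowed_s=0.25))
```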

2. Determining the Distance Traveled by a Mobile Robot

Solution:

Step 1: Assume the robot moves with a constant speed of 1.2 m/s for 15 seconds.

Step 2: Calculate the distance using the formula distance = speed × time, which equals 1.2 m/s × 15 s = 18 meters.

Step 3: Factor in any additional segments where the robot accelerates or decelerates to compute the total effective distance covered.
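A short Python sketch of Steps 2 and 3. The constant-speed case reproduces the 18 m result; for Step 3 it assumes a symmetric trapezoidal velocity profile (the robot starts and ends at rest, with equal acceleration and deceleration), and the 0.6 m/s² acceleration in the example is a hypothetical value.

```python
def constant_speed_distance(speed_m_s: float, time_s: float) -> float:
    """Distance at constant speed: d = v * t."""
    return speed_m_s * time_s


def trapezoidal_distance(peak_speed_m_s: float, total_time_s: float, accel_m_s2: float) -> float:
    """Distance for a move that starts and ends at rest.

    Assumes the robot accelerates at accel_m_s2, cruises at peak_speed_m_s,
    then decelerates at the same rate, all within total_time_s.
    """
    if accel_m_s2 <= 0:
        raise ValueError("acceleration must be positive")
    ramp_time_s = peak_speed_m_s / accel_m_s2
    if 2 * ramp_time_s <= total_time_s:
        # Trapezoid: full-speed rectangle minus the two triangular ramps.
        return peak_speed_m_s * (total_time_s - ramp_time_s)
    # Too short to reach peak speed: triangular profile peaking at mid-time.
    half_time_s = total_time_s / 2
    return accel_m_s2 * half_time_s ** 2


if __name__ == "__main__":
    print(f"Constant speed: {constant_speed_distance(1.2, 15):.1f} m")
    print(f"With ramps:     {trapezoidal_distance(1.2, 15, accel_m_s2=0.6):.1f} m")
```

With these assumed numbers each ramp takes 2 s and the two ramps together cost 2.4 m, so the robot covers about 15.6 m instead of 18 m.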

3. Energy Consumption Calculation for a Robotic Arm

Solution:

Step 1: Identify the power consumption rate of the robotic arm, say 150 watts, and the operating time of 2 hours.

Step 2: Convert the operating time to seconds (2 hours = 7200 seconds) or compute energy in watt-hours (150 W × 2 h = 300 Wh).

Step 3: Convert watt-hours to joules if required (300 Wh × 3600 J/Wh = 1,080,000 J) to determine the total energy consumption.
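The same arithmetic in Python, computing the energy both via watt-hours and directly in joules as a consistency check. Only the 150 W and 2 h figures come from the problem; the function names are illustrative.

```python
J_PER_WH = 3600.0  # 1 Wh = 1 W sustained for 3600 s


def energy_wh(power_w: float, hours: float) -> float:
    """Energy in watt-hours: E = P * t, with t in hours."""
    return power_w * hours


def energy_j(power_w: float, seconds: float) -> float:
    """Energy in joules: E = P * t, with t in seconds."""
    return power_w * seconds


if __name__ == "__main__":
    wh = energy_wh(150, 2)               # 300 Wh
    j_direct = energy_j(150, 2 * 3600)   # computed from seconds
    j_converted = wh * J_PER_WH          # computed via watt-hours
    assert j_direct == j_converted       # both routes must agree
    print(f"{wh:.0f} Wh = {j_direct:,.0f} J = {wh / 1000:.1f} kWh")
```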

4. Calculating Joint Angle Using Inverse Kinematics

Solution:

Step 1: Define the coordinates of the target point for the end-effector in a two-link robotic arm system.

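Step 2: Use trigonometric relations to compute the joint angles from the link lengths L1 and L2 and the target (x, y). The cosine law gives the elbow angle, cos θ2 = (x² + y² − L1² − L2²) ÷ (2·L1·L2), and the shoulder angle follows as θ1 = atan2(y, x) − atan2(L2·sin θ2, L1 + L2·cos θ2). A reachable target generally has two solutions (elbow up and elbow down), corresponding to the two signs of θ2.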

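Step 3: Substitute the computed angles into the forward kinematics equations to verify that the end-effector reaches the desired point. If the cosine law gives a value outside the range −1 to 1, the target lies outside the arm's reach and must be adjusted.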
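A minimal Python sketch of the two-link calculation, using the standard cosine-law solution and then running forward kinematics as the verification step. The link lengths and target in the example are hypothetical; angles are in radians, measured counter-clockwise, with θ2 measured relative to the first link. The `positive_elbow` flag is an illustrative name that picks between the two mirror-image solutions.

```python
import math


def two_link_ik(x: float, y: float, l1: float, l2: float, positive_elbow: bool = True) -> tuple:
    """Return joint angles (theta1, theta2) in radians that place the tip at (x, y)."""
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(cos_t2) > 1.0 + 1e-9:
        raise ValueError("target is outside the arm's reachable workspace")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # clamp tiny rounding overshoot
    t2 = math.acos(cos_t2)
    if not positive_elbow:
        t2 = -t2  # mirror-image (other elbow) solution
    t1 = math.atan2(y, x) - math.atan2(l2 * math.sin(t2), l1 + l2 * math.cos(t2))
    return t1, t2


def two_link_fk(t1: float, t2: float, l1: float, l2: float) -> tuple:
    """Forward kinematics: tip position for the given joint angles."""
    x = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    return x, y


if __name__ == "__main__":
    L1, L2 = 1.0, 0.8      # hypothetical link lengths (m)
    target = (1.2, 0.6)    # hypothetical target point (m)
    for elbow in (True, False):
        t1, t2 = two_link_ik(*target, L1, L2, positive_elbow=elbow)
        x, y = two_link_fk(t1, t2, L1, L2)  # verification step
        print(f"theta1={math.degrees(t1):.1f} deg, theta2={math.degrees(t2):.1f} deg -> ({x:.3f}, {y:.3f})")
```

Both solutions should map back to the same target through forward kinematics; which one to use depends on joint limits and obstacles.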

5. Battery Life Estimation for a Mobile Robot

Solution:

Step 1: Identify the battery capacity (e.g., 5000 mAh) and the average current draw of the robot (e.g., 250 mA).

Step 2: Calculate the operating time using the formula operating time = capacity ÷ current draw, which yields 5000 mAh ÷ 250 mA = 20 hours.

Step 3: Account for inefficiencies and additional loads to refine the battery life estimate to a more practical value.
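A Python sketch of Steps 2 and 3. The ideal figure is capacity divided by average current; for the refinement it assumes, hypothetically, that only 80% of the rated capacity is usable (allowing for ageing, temperature, and cut-off voltage) and that peripherals add 50 mA. Measured values should replace these placeholders for a real robot.

```python
def ideal_runtime_h(capacity_mah: float, current_ma: float) -> float:
    """Ideal runtime in hours: capacity / average current."""
    if current_ma <= 0:
        raise ValueError("current draw must be positive")
    return capacity_mah / current_ma


def derated_runtime_h(capacity_mah: float, current_ma: float,
                      usable_fraction: float, extra_load_ma: float = 0.0) -> float:
    """Runtime after derating the capacity and adding extra loads.

    usable_fraction (0 to 1) and extra_load_ma are assumptions supplied by the caller.
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return ideal_runtime_h(capacity_mah * usable_fraction, current_ma + extra_load_ma)


if __name__ == "__main__":
    print(f"Ideal:   {ideal_runtime_h(5000, 250):.1f} h")
    print(f"Derated: {derated_runtime_h(5000, 250, usable_fraction=0.8, extra_load_ma=50):.1f} h")
```

Under these assumptions the estimate drops from 20 h to about 13.3 h (4000 mAh ÷ 300 mA).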

6. Calculating the Force Output from an Electric Actuator

Solution:

Step 1: Determine the input electrical power (e.g., 200 W) and the efficiency of the actuator (e.g., 85%).

Step 2: Calculate the effective mechanical power output as 200 W × 0.85 = 170 W.

Step 3: Use the relationship between power, force, and velocity (power = force × velocity) and solve for force given a specific operating velocity, ensuring the units are consistent.
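A Python sketch of Steps 2 and 3 for a linear actuator. The 0.5 m/s operating velocity is a hypothetical value chosen for illustration; the problem does not specify one.

```python
def mechanical_power_w(input_power_w: float, efficiency: float) -> float:
    """Useful mechanical output power: P_mech = P_in * efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return input_power_w * efficiency


def linear_force_n(mech_power_w: float, velocity_m_s: float) -> float:
    """Force from P = F * v, so F = P / v (W / (m/s) = N)."""
    if velocity_m_s <= 0:
        raise ValueError("velocity must be positive")
    return mech_power_w / velocity_m_s


if __name__ == "__main__":
    p_mech = mechanical_power_w(200, 0.85)             # about 170 W
    force = linear_force_n(p_mech, velocity_m_s=0.5)   # hypothetical speed
    print(f"P_mech = {p_mech:.0f} W, F = {force:.0f} N")
```

Because F = P ÷ v, the same 170 W yields more force at lower speed. For a rotary actuator the analogous relation is torque = power ÷ angular velocity (in rad/s).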

7. Kinematic Analysis of a Robotic Arm’s End-Effector

Solution:

Step 1: Define the lengths of the robotic arm’s segments and the angles between them.

Step 2: Apply forward kinematics equations using trigonometric functions to determine the position of the end-effector in Cartesian coordinates.

Step 3: Sum the contributions of each segment’s movement to derive the final position, checking the results with simulation data for verification.
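For a planar serial arm, Steps 2 and 3 reduce to accumulating each segment's contribution: with relative joint angles θ1, θ2, …, segment i points along the running sum θ1 + … + θi. A Python sketch under that planar, relative-angle assumption, with the base at the origin; the segment lengths and angles in the example are hypothetical.

```python
import math


def planar_fk(lengths, joint_angles_rad):
    """End-effector (x, y) of a planar serial arm with its base at the origin.

    Each joint angle is measured relative to the previous segment.
    """
    if len(lengths) != len(joint_angles_rad):
        raise ValueError("need one joint angle per segment")
    x = y = 0.0
    heading = 0.0  # absolute orientation of the current segment
    for length, angle in zip(lengths, joint_angles_rad):
        heading += angle
        x += length * math.cos(heading)  # this segment's x contribution
        y += length * math.sin(heading)  # this segment's y contribution
    return x, y


if __name__ == "__main__":
    segments = [1.0, 0.8, 0.5]                               # hypothetical lengths (m)
    angles = [math.radians(a) for a in (30.0, 45.0, -60.0)]  # hypothetical joint angles
    x, y = planar_fk(segments, angles)
    print(f"End-effector at ({x:.3f}, {y:.3f}) m")
```

The tip position reported by a simulator for the same lengths and angles can then be compared against these values.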

8. Sensor Sampling Rate and Data Throughput Calculation

Solution:

Step 1: Identify the sensor’s sampling rate (e.g., 500 samples per second) and the data size per sample (e.g., 16 bits).

Step 2: Calculate the data throughput by multiplying the sampling rate by the data size: 500 samples/s × 16 bits = 8000 bits/s.

Step 3: Convert the throughput to a more convenient unit (8000 bits/s = 8 kbit/s = 1000 bytes/s) and check that the communication channel can handle this rate, allowing for any protocol overhead.
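A Python sketch of Steps 2 and 3. Beyond the problem's figures, it assumes SI prefixes (1 kbit = 1000 bits), ignores protocol and framing overhead, and uses a hypothetical 50% maximum channel utilization with two hypothetical channel capacities purely to illustrate the capacity check.

```python
def throughput_bps(samples_per_s: float, bits_per_sample: int, channels: int = 1) -> float:
    """Raw payload rate in bits per second (no protocol overhead)."""
    return samples_per_s * bits_per_sample * channels


def fits_channel(required_bps: float, channel_bps: float, max_utilization: float = 0.5) -> bool:
    """True if the stream uses no more than max_utilization of the channel capacity."""
    return required_bps <= channel_bps * max_utilization


if __name__ == "__main__":
    bps = throughput_bps(500, 16)
    print(f"{bps:.0f} bit/s = {bps / 1000:.1f} kbit/s = {bps / 8:.0f} bytes/s")
    for channel_bps in (9_600, 64_000):  # hypothetical channel capacities (bit/s)
        print(f"{channel_bps} bit/s channel OK: {fits_channel(bps, channel_bps)}")
```

With the assumed 50% headroom, the 8 kbit/s stream would not fit a 9.6 kbit/s link but would fit a 64 kbit/s one.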

9. Trajectory Optimization Problem for a Mobile Robot

Solution:

Step 1: Define the start and end coordinates along with intermediate waypoints for the robot’s path.

Step 2: Use a graph-search algorithm such as Dijkstra's algorithm or A* to compute the shortest or most efficient route through the waypoints.

Step 3: Calculate the total distance and estimated travel time based on the optimized path, ensuring that the robot avoids obstacles and minimizes energy consumption.
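A compact Python sketch of Steps 1 to 3 using A* on a small waypoint graph. The waypoint coordinates, the list of obstacle-free connections, and the 1.2 m/s speed are hypothetical. Edge cost is straight-line distance, and the straight-line heuristic never overestimates the remaining distance, so A* returns a shortest path; replacing the heuristic with zero turns the same loop into Dijkstra's algorithm.

```python
import heapq
import math


def a_star(points, edges, start, goal):
    """Shortest path over an undirected waypoint graph.

    points: waypoint name -> (x, y) in metres
    edges:  (u, v) pairs the robot can travel without hitting obstacles
    Returns (path, length_m); path is None if the goal is unreachable.
    """
    graph = {name: [] for name in points}
    for u, v in edges:
        w = math.dist(points[u], points[v])  # edge cost = straight-line length
        graph[u].append((v, w))
        graph[v].append((u, w))

    def h(node):  # admissible heuristic: straight-line distance to the goal
        return math.dist(points[node], points[goal])

    open_heap = [(h(start), 0.0, start)]  # entries are (f = g + h, g, node)
    best_g = {start: 0.0}
    parent = {start: None}
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node == goal:  # first time the goal is popped, g is optimal
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1], g
        if g > best_g[node]:
            continue  # stale entry; a shorter route was already found
        for nbr, w in graph[node]:
            new_g = g + w
            if new_g < best_g.get(nbr, math.inf):
                best_g[nbr] = new_g
                parent[nbr] = node
                heapq.heappush(open_heap, (new_g + h(nbr), new_g, nbr))
    return None, math.inf


if __name__ == "__main__":
    waypoints = {"S": (0, 0), "A": (2, 0), "B": (2, 2), "C": (0, 3), "G": (4, 3)}
    # Hypothetical free-space links; a direct A-G link is assumed blocked by an obstacle.
    links = [("S", "A"), ("S", "C"), ("A", "B"), ("B", "G"), ("C", "G")]
    path, length_m = a_star(waypoints, links, "S", "G")
    speed_m_s = 1.2  # hypothetical cruising speed
    print(f"Path {path}: {length_m:.2f} m, about {length_m / speed_m_s:.1f} s")
```

To minimize energy rather than distance, each edge weight could be replaced by an energy estimate for that link; the heuristic must then still underestimate the remaining cost for A* to stay optimal.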

10. Calculating the Cumulative Error in Sensor Measurements

Solution:

Step 1: Determine the error per measurement, for example, 0.2% of the measured value.

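Step 2: Sum the errors over a series of 100 measurements to obtain the worst-case total error, which assumes every error pushes in the same direction. If the errors are independent and random, combining them by root-sum-square gives a more realistic estimate that grows with the square root of the number of measurements rather than linearly.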

Step 3: Analyze the cumulative error as a percentage of the total measurement range and apply corrective calibration if necessary.
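A Python sketch contrasting a worst-case linear sum (all errors in the same direction) with a root-sum-square combination. The 0.2% relative error and the count of 100 come from the problem; the assumption that every reading is 10.0 units is hypothetical, and the root-sum-square figure is only valid if the errors are independent and random rather than a shared systematic bias.

```python
import math


def worst_case_error(values, rel_error):
    """Linear sum: every error assumed to push in the same direction."""
    return sum(abs(v) * rel_error for v in values)


def rss_error(values, rel_error):
    """Root-sum-square: valid for independent, zero-mean random errors."""
    return math.sqrt(sum((abs(v) * rel_error) ** 2 for v in values))


if __name__ == "__main__":
    readings = [10.0] * 100  # hypothetical: 100 readings of 10.0 units each
    rel = 0.002              # 0.2% error per measurement
    total = sum(readings)
    for name, err in (("worst case", worst_case_error(readings, rel)),
                      ("RSS", rss_error(readings, rel))):
        print(f"{name}: {err:.3f} units = {100 * err / total:.3f}% of {total:.0f}")
```

Under these assumptions the worst case stays at 0.2% of the total, while the random-error estimate is ten times smaller, because 100 equal errors combine as √100 = 10 times a single error instead of 100 times.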

11. Multi-Step Calculation of a Robotic System’s Throughput

Solution:

Step 1: Determine the number of tasks a robot can perform per minute, for instance, 30 tasks.

Step 2: Multiply this rate by the number of operational minutes in an hour (e.g., 60 minutes) to find the hourly throughput: 30 × 60 = 1800 tasks/hour.

Step 3: Factor in downtime and efficiency losses to adjust the final throughput estimate to a realistic operational value.
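A Python sketch of Steps 2 and 3. The availability (share of the hour the robot is actually running) and efficiency (share of its nominal rate it achieves while running) factors are hypothetical placeholders of 0.90 and 0.95; real values would come from production logs.

```python
def hourly_throughput(tasks_per_min: float, minutes_per_hour: float = 60,
                      availability: float = 1.0, efficiency: float = 1.0) -> float:
    """Tasks per hour after scaling the nominal rate by uptime and speed losses."""
    for name, value in (("availability", availability), ("efficiency", efficiency)):
        if not 0 <= value <= 1:
            raise ValueError(f"{name} must be between 0 and 1")
    return tasks_per_min * minutes_per_hour * availability * efficiency


if __name__ == "__main__":
    print(f"Nominal:  {hourly_throughput(30):.0f} tasks/hour")
    print(f"Adjusted: {hourly_throughput(30, availability=0.90, efficiency=0.95):.0f} tasks/hour")
```

Under these assumptions the realistic figure is about 1539 tasks/hour rather than 1800.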

12. Evaluating Performance Improvement with Parallel Processing

Solution:

Step 1: Establish the baseline processing time for a robotic algorithm running sequentially, for example, 120 seconds.

Step 2: Implement parallel processing and measure the new processing time, say 40 seconds, which corresponds to a speed-up factor of 120 ÷ 40 = 3.

Step 3: Calculate the reduction in processing time using the formula ((120 − 40) ÷ 120) × 100 ≈ 66.67%, confirming the efficiency gain of parallel computation. Note that this percentage measures time saved; expressed as a speed-up, the same result is 3×.
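A Python sketch of the arithmetic in Steps 2 and 3, reporting both the speed-up factor and the percentage reduction in processing time, since the two are easy to confuse. The parallel-efficiency line assumes a hypothetical 4 workers; the problem does not state how many were used.

```python
def speedup(t_sequential_s: float, t_parallel_s: float) -> float:
    """How many times faster the parallel run is."""
    return t_sequential_s / t_parallel_s


def time_reduction_pct(t_sequential_s: float, t_parallel_s: float) -> float:
    """Percentage of the original processing time that was saved."""
    return (t_sequential_s - t_parallel_s) / t_sequential_s * 100


def parallel_efficiency(t_sequential_s: float, t_parallel_s: float, workers: int) -> float:
    """Speed-up per worker; 1.0 would be perfect linear scaling."""
    return speedup(t_sequential_s, t_parallel_s) / workers


if __name__ == "__main__":
    print(f"Speed-up:       {speedup(120, 40):.1f}x")
    print(f"Time reduction: {time_reduction_pct(120, 40):.2f}%")
    print(f"Efficiency (4 workers, hypothetical): {parallel_efficiency(120, 40, 4):.2f}")
```

With the assumed 4 workers, a 3× speed-up means each worker delivers 75% of ideal linear scaling.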

Last updated: 26 Aug 2025 · Prep4Uni STEM Team