CV is widely applied in smart manufacturing, healthcare imaging, agriculture, and aerospace (e.g., satellite technology). It often pairs with robotics & autonomous systems for real-time navigation and manipulation; with NLP for OCR and document understanding; and with internet & web technologies to coordinate edge devices in the field.

As a subject of study, CV draws on supervised and unsupervised learning, and increasingly uses reinforcement learning for perception-in-the-loop control. At the frontier, emerging technologies—including quantum computing—hint at new approaches to large-scale visual inference and 3D understanding.


Computer Vision - Orientation

What you’ll learn on this page

Use this page as a practical guide—from data and annotation through metrics, deployment, and debugging.

- ✓Build a robust vision pipeline — task definition, leakage-safe splits, annotation QA, class imbalance, and task-aware augmentation.

- ✓Measure what matters — IoU, AP/mAP, mIoU, PQ with per-class and slice metrics, plus confidence calibration.

- ✓Do systematic error analysis — confusion patterns, localization misses, scenario slices, and an actionable failure-bucket playbook.

- ✓Deploy on edge or cloud — quantization, pruning, distillation, runtime choices (TensorRT/TFLite/ONNX), and reliability monitoring.

- ✓Reference key metrics quickly — a compact SVG cheatsheet for detection, segmentation, and panoptic tasks.

- ✓Export models for production — PyTorch → ONNX → TensorRT/TFLite with sanity checks and a deployment checklist.

Key Capabilities of Computer Vision

Computer vision encompasses a rich array of capabilities that let machines interpret visual data, turning raw pixels into meaningful insights and actions. Below we look more closely at three foundational capability areas, each with real-world applications, technical notes, and an illustrative use case.

Recognizing Faces and Objects

Core Concept

At its heart, face and object recognition involves identifying and classifying entities—be they human faces, vehicles, animals, or everyday items—across images and video frames. Modern systems use feature extraction and deep learning models like convolutional neural networks (CNNs) to encode distinctive patterns (e.g., facial landmarks, texture, shape) and map them to known categories.

How It Works

- Feature Detection & Representation: Algorithms learn to capture low-level features (edges, colors) and gradually build up to more abstract patterns (faces, logos).

- Neural Embedding: Each object or face is converted into a numerical “embedding” vector. Similar vectors indicate similar appearances.

- Classification & Matching: Embeddings are compared to labeled datasets, enabling identification (e.g., face matching) or categorization.
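The matching step can be illustrated with a cosine-similarity lookup against a small gallery of enrolled embeddings. This is a minimal NumPy sketch, not a production recognizer: the `identify` helper, the toy 3-D embeddings, and the 0.6 threshold are hypothetical, and real systems obtain embeddings from a trained network and tune the threshold on validation data.

```python
import numpy as np

def identify(query, gallery, names, threshold=0.6):
    """Return (name, similarity) of the best gallery match, or ("unknown", similarity).

    query:   (D,) embedding of the face/object to identify
    gallery: (N, D) embeddings of enrolled identities (hypothetical data)
    names:   list of N labels, one per gallery row
    """
    # L2-normalize so that a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sims = g @ q                      # (N,) cosine similarities
    best = int(np.argmax(sims))
    # Reject weak matches instead of forcing the closest identity.
    if sims[best] < threshold:
        return "unknown", float(sims[best])
    return names[best], float(sims[best])

gallery = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
names = ["alice", "bob"]
print(identify(np.array([0.9, 0.1, 0.0]), gallery, names))  # close to "alice"
print(identify(np.array([0.0, 0.0, 1.0]), gallery, names))  # orthogonal to both
```

Returning "unknown" below the threshold is what lets the workplace example below raise an alert for unregistered faces rather than mislabeling them.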

Applications & Examples

- Facial Recognition

- Access Control & Authentication: Smartphones (e.g., iPhone Face ID) unlock devices by matching a live 3D depth scan of the user's face against a stored, enrolled profile.

- Airport Security & Border Control: Automated gates use real-time face recognition to compare travelers’ images against passport databases.

- Public Safety: City cameras detect persons of interest in crowds—prompting law enforcement when matches arise.

- Object Detection

- E-commerce Recommendations: Platforms like Amazon identify clothes or gadgets in user-uploaded images, offering visually similar items.

- Augmented Reality (AR): IKEA’s AR app maps the floor and room surfaces, allowing users to “place” true-to-scale virtual sofas in their living rooms.

- Robotics & Automation: Warehouse robots detect package types and sort them accordingly—via bounding boxes drawn around recognized objects.

Technical Challenges

- Occlusion & Variability: Faces and objects may be partially blocked or viewed from unusual angles.

- False Positives & Bias: Models can misclassify objects they never saw during training, and error rates can differ across demographic groups when training data is unrepresentative.

- Dataset Scale & Privacy: Large labeled datasets raise consent and surveillance concerns.

Illustrative Example

In a secure workplace, cameras continuously scan for registered employees’ faces. If an unrecognized face is detected, an alert is triggered. Meanwhile, a retail version identifies a returning customer and triggers personalized in-store offers.

Detecting Anomalies

Core Concept

Anomaly detection involves identifying deviations from normal patterns—whether visual defects in manufacturing, abnormal growths in medical scans, or irregular infrastructure wear and tear.

How It Works

- Baseline Learning: Models are trained on large amounts of “normal” data—such as defect-free products or healthy organs—so any deviations stand out.

- Residual Analysis: Deviations generate high residual errors or fall outside the distribution of expected embeddings.

- Alert Generation: Anomalies trigger downstream alerts or automatic corrections.
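One common way to realize baseline learning and residual analysis on embeddings is to fit a Gaussian to the embeddings of normal samples and score new samples by their Mahalanobis distance. The sketch below assumes embeddings have already been extracted (random vectors stand in for them), and the 99th-percentile alert threshold is an illustrative choice. Autoencoder reconstruction error is a common alternative scoring approach.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for embeddings of defect-free training images (hypothetical data).
normal = rng.normal(size=(500, 8))

# Baseline learning: mean and (slightly regularized) covariance of "normal".
mu = normal.mean(axis=0)
cov = np.cov(normal, rowvar=False) + 1e-6 * np.eye(normal.shape[1])
cov_inv = np.linalg.inv(cov)

def anomaly_score(x):
    """Mahalanobis distance of embedding(s) x from the normal distribution."""
    d = np.atleast_2d(x) - mu
    return np.sqrt(np.einsum("ij,jk,ik->i", d, cov_inv, d))

# Alert threshold: a high percentile of scores on the normal data itself.
threshold = np.percentile(anomaly_score(normal), 99)

test = np.vstack([rng.normal(size=8),          # resembles the baseline
                  rng.normal(size=8) + 6.0])   # shifted far from the baseline
print(anomaly_score(test) > threshold)
```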

Applications & Examples

- Quality Inspection in Manufacturing

- Automotive Industry: High-res cameras scan weld seams and components for microscopic defects.

- Consumer Electronics: Circuit boards are inspected for missing components or soldering errors.

- Medical Imaging

- Radiology Diagnostics: AI flags suspicious masses in CT scans or X-rays.

- Pathology Slides: Detect cellular irregularities for early cancer detection.

- Infrastructure Monitoring

- Civil Engineering: Drones scan bridges and tunnels for fissures or erosion.

- Urban Management: Cameras detect potholes and prompt maintenance requests.

Technical Challenges

- High Precision Thresholds: False alarms or misses have serious consequences in high-stakes environments.

- Class Imbalance: Anomalies are rare and hard to model effectively.

- Interpretability: Visualization techniques like heat maps are needed to explain model behavior.

Illustrative Example

In an assembly line producing smartphone screens, every screen is inspected by AI-powered cameras. When a tiny scratch is detected, the screen is diverted for manual review or rework—saving expensive downstream repairs.

Performing Scene Understanding

Core Concept

Scene understanding refers to the machine’s ability to perceive full visual contexts—not just objects, but relationships between them—and reason about scenes holistically.

How It Works

- Semantic Segmentation: Each pixel is assigned a label—identifying roads, signs, people, etc.

- Instance Segmentation: Individual objects within a category are separately identified.

- 3D Scene Reconstruction: Depth estimation builds volumetric maps of the environment.

- Temporal Tracking & Activity Analysis: Objects are tracked over time to model interactions.

Applications & Examples

- Self-Driving & Driver-Assistance Systems

- Lane & Sign Detection: Vehicles recognize lane markers, STOP signs, and traffic lights.

- Risk Assessment: Systems detect pedestrians, cyclists, or animals and respond accordingly.

- Surveillance & Security

- Behavior Recognition: Cameras identify left luggage or unusual crowd movements.

- Intrusion Detection: Unauthorized entry prompts automated alerts.

- Geospatial & Urban Analytics

- Satellite Earth Observation: Classifies land use and monitors natural disasters.

- Disaster Response: Detects collapsed structures or blocked roads from aerial images.

Technical Challenges

- Scale & Complexity: Models must interpret multilayered, fast-changing visual contexts.

- Temporal and Spatial Context: Streaming analysis is needed to detect dynamic interactions.

- Edge & Embedded Constraints: Systems must function in real time on limited hardware.

Illustrative Example

In autonomous shuttles operating in pedestrian-heavy zones, scene understanding systems not only classify objects, but predict movements—anticipating when a toddler might dart into the path—allowing smooth, human-like driving decisions.

Summary Table: Capabilities in Practice

| Capability | Core Function | Real-world Impact |
|---|---|---|
| Face/Object Recognition | Classify, identify entities | Device unlocking, personalized retail, robotics |
| Anomaly Detection | Detect outliers in visual data | Quality control, early diagnosis, infrastructure safety |
| Scene Understanding | Semantic, spatial, temporal scene reasoning | Autonomous vehicles, public safety, disaster mapping |

Why These Capabilities Matter

Understanding these fundamental abilities is crucial for students and professionals alike. They form the backbone of systems we increasingly rely on—self-driving cars, robotic surgery, smart factories, and AI-enabled cities. Mastery of these techniques—object detection, segmentation, anomaly classification—offers a direct path to building impactful, safe, and intelligent systems. Through further study and hands-on projects, learners can contribute to solutions that perceive, reason, and react to the visual world—pioneering the next frontier of machine perception.

Emerging Applications of Computer Vision

Augmented and Virtual Reality (AR/VR):

Computer vision empowers AR/VR systems to overlay digital content seamlessly onto the user’s view of the real world, enabling immersive experiences in gaming, education, and therapy. For example, AR navigation apps can display turn-by-turn directions on top of live street views. In training and simulation, VR headsets use real-time hand tracking and spatial awareness to let users manipulate virtual controls or explore historical reconstructions. In industrial contexts, technicians using AR glasses can receive live diagrams overlaid on equipment, improving assembly and maintenance precision. Similarly, in healthcare, surgical training platforms simulate operations with responsive visual feedback and holographic patient anatomy.

Advances include markerless motion capture and environment scanning, enabling mobile AR apps to function without pre-defined reference points. Eye-tracking and gesture recognition add natural interaction capabilities, while neural rendering techniques enable fluid transitions between real and virtual elements. This integration facilitates powerful applications like collaborative VR workspaces, immersive storytelling in museums, and remote design prototyping where teams can visualize 3D models in shared virtual spaces.

This stylized image showcases how computer vision powers AR/VR technologies. On the left, a technician uses AR glasses to overlay holographic engine diagrams during machinery maintenance. On the right, a young man with a VR headset and controllers explores a virtual room with a holographic human body and data display, illustrating medical and educational uses.

Retail Analytics:

Smart cameras and shelf sensors backed by computer vision analyze shopper movements, dwell times, and product interactions to extract actionable insights. Retailers can generate visual heatmaps showing areas of high foot traffic or long dwell without a purchase, guiding strategic shelf placement, promotional displays, and store layout optimization.

Computer vision-based stock monitoring tracks inventory levels in real time, triggering automatic restocking alerts when shelf space runs low. Smart checkout systems use vision to identify items customers place in baskets, reducing the need for scanning. In addition, loss prevention systems flag suspicious behavior, such as concealing merchandise, by tracking customer movements and alerting security staff to anomalies.

On the personalization front, CV-powered digital signage adapts to detected demographics—age range, gender, mood—and provides tailored marketing in-store. Integration with loyalty apps allows seamless checkout and personalized discounting triggered by recognized returning customers.

This stylized digital illustration captures the dynamic use of computer vision in modern retail environments. A store interior features smart shelves tracking inventory, overhead heatmap analytics showing customer movement, and a smart checkout counter where a shopper’s items are automatically recognized without scanning. A digital signage panel tailors advertisements based on detected customer demographics, and a staff member monitors a dashboard showing real-time alerts and stock data. The visual communicates how computer vision transforms store operations, personalization, and loss prevention in retail.

Wildlife Conservation:

Camera traps equipped with computer vision effectively identify species, count individuals, and log animal activities automatically in forests, savannas, and marine environments. AI analysis helps researchers monitor population health, track migration routes, and detect poaching events in near-real-time, all without disturbing wildlife.

Drones with multispectral imagers scan large conservation areas, identifying endangered species or mapping habitats. CV algorithms process aerial footage to differentiate between species via distinctive markings or size estimates, enabling automated censuses and habitat usage patterns.

Additionally, computer vision is used in acoustic camera systems that correlate movement with verified sound patterns (like bird calls) for species identification. In marine ecosystems, underwater vision systems detect coral bleaching, fish counts, and pollution impacts—providing continuous environmental monitoring to support timely conservation measures.

This artist's impression captures the use of computer vision in wildlife conservation. A tiger, elephant, and bird are tagged in real time by a drone and smart camera systems, while a conservationist sits in the foreground analyzing live data on a laptop and monitor. The scene showcases how AI assists in species identification, habitat mapping, and real-time population monitoring.

Agriculture:

In precision agriculture, drones and ground robots employ computer vision to scan fields, detecting early signs of plant stress, pest infestations, nutrient deficiencies, or fungal infections. The resulting high-resolution maps enable farmers to target treatments—limiting pesticide and fertilizer use and reducing costs and environmental impact.

Robotic harvesters integrated with vision systems identify and pick ripe fruits or vegetables, adjusting grip and motion to minimize damage and maximize yield. In vineyards, CV detects grape maturity levels and supports automated pruning and thinning, improving harvest timelines and fruit quality.

Soil health monitoring systems combine CV with spectral imaging to assess moisture levels and crop density, guiding seed planting patterns and optimizing irrigation schedules. Vision-based weed detection tools enable autonomous robots to differentiate and remove weeds individually, reducing herbicide use. Post-harvest, computer vision inspects produce for size, shape, or external defects—supporting automated grading and sorting in packing facilities.

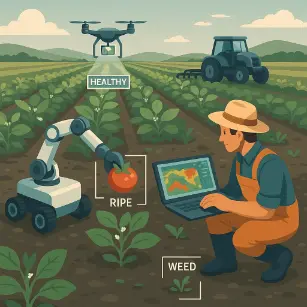

This stylized digital artwork depicts an advanced agricultural scene where computer vision technology is integrated into farming operations. A robot equipped with a mechanical arm plucks a ripe tomato while classifying other plants as “weed” or “healthy.” A drone flies overhead collecting data, and a farmer kneels with a laptop showing a crop heatmap. In the background, an autonomous tractor navigates through rows of plants, illustrating a modern, data-driven approach to sustainable farming.

How Computer Vision Works

Technologies Behind Computer Vision

Image Classification:

Image classification is the foundational task in computer vision where a machine assigns a predefined category or label to an entire image. For example, given a photo, the system may determine whether it contains a cat, dog, airplane, or tree. This task is widely used in organizing image databases, recommending content on social media, and enabling AI models to recognize and group similar visuals.

It involves preprocessing images to normalize lighting and scale, followed by feature extraction using convolutional layers in neural networks. Influential architectures such as VGG, ResNet, and EfficientNet successively raised classification benchmarks by learning hierarchical feature representations. Datasets such as ImageNet, whose standard ILSVRC benchmark covers 1,000 object categories, have been instrumental in training and testing these models.

Beyond static photos, image classification plays a key role in medical diagnostics (e.g., classifying X-rays as healthy or diseased), agriculture (e.g., identifying plant species or diseases), and wildlife monitoring. In educational tools, classification is used to label and sort content based on subject matter or difficulty.

Object Detection:

Object detection advances beyond classification by identifying the presence, type, and precise location of multiple objects within a single image. It generates bounding boxes around detected items and assigns labels, making it essential for applications like autonomous driving, robotics, and surveillance.

Techniques like YOLO (You Only Look Once), SSD (Single Shot Detector), and Faster R-CNN offer varying trade-offs between speed and accuracy. These models scan the image in a grid-like fashion or propose candidate regions, detecting vehicles, humans, animals, or objects of interest in real-time scenarios.

Object detection is used in retail to track customer interactions, in sports analytics to analyze player movement, and in smart cities to monitor traffic flow. Its precision and contextual awareness make it vital in safety-critical systems where understanding spatial arrangements of multiple objects is required.

Semantic Segmentation:

Semantic segmentation refers to labeling each pixel in an image with its corresponding class, thus producing a detailed map of the visual scene. Unlike object detection, which draws boxes, segmentation precisely outlines the shape and extent of objects.

This technique is crucial in applications where boundaries matter—such as medical imaging (e.g., tumor contouring), autonomous driving (e.g., distinguishing road lanes from pedestrians), and satellite imagery (e.g., separating urban areas from vegetation). Tools like U-Net and DeepLab are popular deep learning models for segmentation tasks.

It also supports environmental monitoring by assessing land usage and deforestation and enables smart factories to distinguish between components on assembly lines with pixel-level precision.

Optical Character Recognition (OCR):

OCR enables machines to detect and digitize text from scanned images, documents, signage, and video frames. This technology transforms visual data into machine-readable formats, facilitating automation in administration, translation, and archiving.

Modern OCR systems use computer vision to detect text regions, then recurrent neural networks (RNNs) or transformers to decode the character sequences. Open-source engines like Tesseract support over 100 languages, and cloud services such as the Google Cloud Vision API also handle stylized fonts and handwriting.
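Decoding is easiest to see for recognizers trained with CTC (Connectionist Temporal Classification), a common choice for CNN+RNN text recognizers that the text above does not name explicitly. Greedy CTC decoding keeps the most likely symbol per frame, merges consecutive repeats, and then drops the blank symbol. A minimal sketch that starts from already-argmaxed frame symbols, with `-` assumed as the blank:

```python
def ctc_greedy_decode(frame_symbols, blank="-"):
    """Collapse a per-frame best-path sequence into text (greedy CTC decoding).

    frame_symbols: iterable of the most likely symbol at each time step.
    """
    out = []
    prev = None
    for s in frame_symbols:
        # Emit a symbol only when it changes from the previous frame and is not blank.
        if s != prev and s != blank:
            out.append(s)
        prev = s
    return "".join(out)

print(ctc_greedy_decode("--hh-e-ll-ll-oo"))  # -> hello
```

The blank between the two "ll" runs is what allows a genuine double letter to survive the repeat-merging step.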

Applications include automated license plate recognition, passport verification, digitizing handwritten notes in education, and real-time translation apps using smartphones. In legal and financial sectors, OCR helps extract structured data from unstructured scanned documents.

3D Vision:

3D vision allows machines to perceive depth, geometry, and spatial relationships by analyzing images captured from one or more viewpoints, or readings from active depth sensors. This capability is critical in robotics, virtual reality, and digital twin systems, where understanding the world in three dimensions is essential for interaction and manipulation.

Techniques include stereo vision (comparing two camera views), structure-from-motion (SfM), time-of-flight sensors, and LiDAR. 3D point cloud generation and mesh reconstruction are common outputs that support realistic modeling of objects and environments.
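For stereo vision with a rectified camera pair, depth follows from similar triangles, \( Z = \frac{f\,B}{d} \), where \(f\) is the focal length in pixels, \(B\) the baseline between the cameras, and \(d\) the disparity in pixels. A minimal sketch that converts a disparity map to depth; the focal length and baseline are made-up example values, and computing the disparity map itself (stereo matching) is out of scope here.

```python
import numpy as np

def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (meters) for a rectified stereo pair.

    Pixels with zero or negative disparity (no valid match) are returned as inf.
    """
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full_like(disparity, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical camera: 700 px focal length, 0.12 m baseline.
print(disparity_to_depth([[84.0, 42.0, 0.0]], focal_px=700, baseline_m=0.12))
```

Because depth is inversely proportional to disparity, halving the disparity doubles the depth, and depth resolution degrades quickly for distant objects.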

In construction, 3D vision supports building information modeling (BIM) and inspection. In healthcare, it enables volumetric analysis of internal organs using CT or MRI data. In entertainment, it powers motion capture, gaming avatars, and CGI environments.

Foundational Algorithms in Computer Vision:

Edge Detection: Edge detection is a classical technique used to identify boundaries within images. It detects significant transitions in intensity, allowing the identification of shapes, contours, and textures. Common algorithms include the Sobel operator, Prewitt filter, and the Canny edge detector. Canny, in particular, is known for its multi-stage approach—smoothing, gradient calculation, non-maximum suppression, and hysteresis thresholding—yielding clean and thin edges. Edge maps are often used as inputs for higher-level tasks such as segmentation and object recognition.

Simplified Algorithm: Canny Edge Detection

1. Apply Gaussian Blur to reduce noise.

2. Compute intensity gradients using Sobel filters.

3. Apply Non-Maximum Suppression to thin edges.

4. Use Double Thresholding to distinguish strong and weak edges.

5. Track edges using Hysteresis: keep weak edges connected to strong ones.

This algorithm provides a robust framework for extracting meaningful edges and is widely adopted in both classical and modern pipelines.
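To make the five steps concrete, here is a compact, unoptimized NumPy sketch. It is meant for reading rather than speed; in practice you would call a library routine such as OpenCV's `cv2.Canny`. The kernel radius (3σ), the four-direction quantization, and the thresholds in the example are common textbook choices, not the only valid ones.

```python
import numpy as np

def filter2d(img, k):
    """Same-size 2D correlation with edge padding (odd-sized kernel)."""
    ph, pw = k.shape[0] // 2, k.shape[1] // 2
    p = np.pad(img, ((ph, ph), (pw, pw)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(k.shape[0]):
        for j in range(k.shape[1]):
            out += k[i, j] * p[i:i + img.shape[0], j:j + img.shape[1]]
    return out

def canny(img, low, high, sigma=1.0):
    img = np.asarray(img, dtype=float)
    H, W = img.shape
    # 1. Gaussian blur to suppress noise.
    r = int(np.ceil(3 * sigma))
    x = np.arange(-r, r + 1)
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    g /= g.sum()
    blurred = filter2d(img, np.outer(g, g))
    # 2. Intensity gradients with Sobel filters (rows grow downward).
    sx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    gx, gy = filter2d(blurred, sx), filter2d(blurred, sx.T)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180   # gradient direction in [0, 180)
    # 3. Non-maximum suppression: keep pixels that peak along the gradient.
    nms = np.zeros_like(mag)
    for i in range(1, H - 1):
        for j in range(1, W - 1):
            a = ang[i, j]
            if a < 22.5 or a >= 157.5:   # horizontal gradient
                n1, n2 = mag[i, j - 1], mag[i, j + 1]
            elif a < 67.5:               # down-right diagonal
                n1, n2 = mag[i - 1, j - 1], mag[i + 1, j + 1]
            elif a < 112.5:              # vertical gradient
                n1, n2 = mag[i - 1, j], mag[i + 1, j]
            else:                        # down-left diagonal
                n1, n2 = mag[i - 1, j + 1], mag[i + 1, j - 1]
            if mag[i, j] >= n1 and mag[i, j] >= n2:
                nms[i, j] = mag[i, j]
    # 4. Double thresholding into strong edges and weak candidates.
    strong = nms >= high
    weak = (nms >= low) & ~strong
    # 5. Hysteresis: repeatedly add weak pixels 8-connected to current edges.
    edges = strong
    while True:
        p = np.pad(edges, 1)
        grown = np.zeros_like(edges)
        for di in range(3):
            for dj in range(3):
                grown |= p[di:di + H, dj:dj + W]
        new_edges = edges | (grown & weak)
        if np.array_equal(new_edges, edges):
            return new_edges
        edges = new_edges

# Synthetic test image: a bright square on a dark background.
img = np.zeros((32, 32))
img[8:24, 8:24] = 255.0
edges = canny(img, low=20, high=50)
print(edges.sum(), "edge pixels")
```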

Feature Detection and Matching: This process extracts distinct points of interest, known as keypoints, from images. Algorithms like SIFT (Scale-Invariant Feature Transform), SURF (Speeded Up Robust Features), and ORB (Oriented FAST and Rotated BRIEF) are used to describe these keypoints with mathematical descriptors, which can then be matched across different images. This enables machines to identify the same object or pattern even under different lighting, scale, or rotation.

Simplified Workflow:

1. Detect keypoints using an interest point detector (e.g., FAST or Difference of Gaussians).

2. Compute descriptors for each keypoint (e.g., BRIEF, SIFT).

3. Match keypoints across images using a distance metric (e.g., Euclidean distance for SIFT descriptors, Hamming distance for binary descriptors such as BRIEF or ORB).

4. Refine matches with methods like RANSAC to eliminate outliers.

This workflow underpins applications such as panorama stitching, 3D modeling, and robotic navigation.
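Step 3 can be sketched as brute-force nearest-neighbor matching with Euclidean distance. The sketch also applies Lowe's ratio test (keep a match only if the nearest neighbor is clearly closer than the second nearest), a widely used filter that is not part of the workflow above; the 0.75 ratio and the random float descriptors are illustrative. Binary descriptors such as ORB would use Hamming distance instead.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match rows of desc_a to rows of desc_b by Euclidean distance + ratio test.

    Returns a list of (index_in_a, index_in_b). desc_b needs at least 2 rows.
    """
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(d):
        nearest, second = np.argsort(row)[:2]
        # Discard ambiguous matches where the best is not clearly better.
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches

rng = np.random.default_rng(1)
desc_b = rng.normal(size=(50, 32))
# desc_a: slightly noisy copies of three descriptors from desc_b, reordered.
desc_a = desc_b[[10, 3, 42]] + 0.01 * rng.normal(size=(3, 32))
print(match_descriptors(desc_a, desc_b))  # -> [(0, 10), (1, 3), (2, 42)]
```

The surviving matches would then go to step 4 (e.g., RANSAC) to reject geometrically inconsistent pairs.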

Histogram of Oriented Gradients (HOG): A technique that captures edge and gradient orientation patterns, HOG is effective in human detection and object tracking. It represents localized shape features using histograms computed from grid-based cells and blocks.

1. Divide the image into small spatial regions called cells.

2. For each cell, compute the gradient magnitude and orientation for each pixel.

3. Create a histogram of gradient orientations within the cell.

4. Normalize these histograms over larger blocks of cells to account for illumination variations.

5. Concatenate the histograms into a single feature vector representing the image.

HOG features are commonly fed into classifiers such as Support Vector Machines (SVM) for tasks like pedestrian detection in autonomous vehicles or security cameras.
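The five steps map onto a short NumPy sketch. It simplifies the Dalal-Triggs descriptor (hard orientation binning instead of interpolation, no Gaussian weighting inside blocks), and the defaults of 8×8-pixel cells, 9 unsigned orientation bins, and 2×2-cell blocks are commonly used values rather than requirements.

```python
import numpy as np

def hog(img, cell=8, bins=9, block=2, eps=1e-6):
    """Simplified HOG descriptor for a 2D grayscale image."""
    img = np.asarray(img, dtype=float)
    # Step 2 (computed once for the whole image): centered [-1, 0, 1] gradients.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180        # unsigned orientation
    bin_idx = np.minimum((ang / (180 / bins)).astype(int), bins - 1)
    # Steps 1 + 3: per-cell, magnitude-weighted orientation histograms.
    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            rows = slice(cy * cell, (cy + 1) * cell)
            cols = slice(cx * cell, (cx + 1) * cell)
            hist[cy, cx] = np.bincount(bin_idx[rows, cols].ravel(),
                                       weights=mag[rows, cols].ravel(),
                                       minlength=bins)
    # Step 4: L2-normalize over overlapping blocks of block x block cells.
    features = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    # Step 5: concatenate block vectors into one descriptor.
    return np.concatenate(features)

window = np.random.default_rng(0).random((128, 64))   # 128 rows x 64 columns
print(hog(window).shape)  # -> (3780,)
```

For a 64×128 pedestrian window this yields 15 × 7 blocks × 36 values = 3,780 features, which is the vector an SVM would consume.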

Convolutional Neural Networks (CNNs): The backbone of deep learning in computer vision, CNNs apply layers of filters to learn spatial hierarchies of features. Starting from edges and corners in early layers to abstract object parts in deeper layers, CNNs have dramatically improved accuracy in tasks like classification, detection, and segmentation. Techniques like data augmentation, batch normalization, and transfer learning further enhance CNN performance.

Simplified Workflow:

1. Input image is preprocessed and normalized.

2. Multiple convolutional layers apply filters to extract features.

3. Pooling layers reduce spatial dimensions and computation.

4. Fully connected layers interpret high-level features.

5. Softmax (or sigmoid) layer outputs class probabilities.

CNNs are trained through backpropagation using labeled datasets and are known for their robustness, scalability, and transferability across computer vision tasks.
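Steps 2 and 3 change the spatial size of the feature maps according to \( \lfloor (W + 2P - K)/S \rfloor + 1 \) for input size \(W\), kernel size \(K\), padding \(P\), and stride \(S\) (dilation enlarges the effective kernel). A small helper makes layer shapes easy to check; the example values follow a ResNet-style stem (7×7 stride-2 convolution, then 3×3 stride-2 max pooling).

```python
def conv_output_size(size, kernel, stride=1, padding=0, dilation=1):
    """Spatial output size of a convolution or pooling layer along one axis."""
    effective_k = dilation * (kernel - 1) + 1
    return (size + 2 * padding - effective_k) // stride + 1

s = 224
s = conv_output_size(s, kernel=7, stride=2, padding=3)  # 7x7 conv, stride 2
print(s)  # -> 112
s = conv_output_size(s, kernel=3, stride=2, padding=1)  # 3x3 max pool, stride 2
print(s)  # -> 56
```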

Image Preprocessing: Techniques such as normalization, histogram equalization, Gaussian filtering, and resizing ensure that input images are consistent in quality and scale before analysis. These steps improve model performance and reduce noise-induced errors.

1. Resize all input images to a consistent size (e.g., 224x224 pixels).

2. Apply Gaussian filter to smooth out high-frequency noise.

3. Perform histogram equalization to enhance contrast.

4. Normalize pixel intensity values to a standard range (e.g., 0 to 1).

5. Optionally apply data augmentation such as flipping, rotation, or cropping.

These steps form the essential foundation for any reliable computer vision model by ensuring uniformity and robustness in input data.
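Steps 1, 3, and 4 can be sketched in plain NumPy for an 8-bit grayscale image. Nearest-neighbor resizing is used only to keep the example dependency-free (libraries such as OpenCV or Pillow offer better interpolation), the Gaussian filter and augmentation steps are omitted, and the helper names are hypothetical.

```python
import numpy as np

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbor resize (simple but prone to aliasing)."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]

def equalize_hist(img):
    """Histogram equalization for a uint8 grayscale image via its CDF."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:          # constant image: nothing to stretch
        return img.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

def preprocess(img, size=224):
    img = resize_nearest(img, size, size)     # step 1: consistent size
    img = equalize_hist(img)                  # step 3: stretch contrast
    return img.astype(np.float32) / 255.0     # step 4: scale to [0, 1]

# Low-contrast synthetic input: values only span 100..109.
rng = np.random.default_rng(0)
raw = rng.integers(100, 110, size=(300, 400), dtype=np.uint8)
x = preprocess(raw)
print(x.shape, x.min(), x.max())
```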

Understanding these theoretical underpinnings equips students and researchers to move beyond using pre-trained models, enabling them to develop novel algorithms and contribute to advancing the state of the art in visual understanding.

Practical Vision Pipeline — Data, Annotation & Augmentation

Turning pixels into reliable predictions depends less on model novelty and more on a disciplined pipeline. This section gives a checklist you can apply to classification, detection, segmentation, OCR, and 3D tasks.

1) Define the task & target

- Label granularity: class vs. bbox vs. polygon/mask; word/line/char for OCR.

- Evaluation unit: image, region, frame, or track? (affects splits & metrics).

- Throughput & latency: real-time (edge) vs. batch (cloud) → drives model size & deployment.

- Risk surface: false negatives vs. false positives; add abstain/second-review when needed.

2) Plan leakage-safe splits

- By source: split by camera/site/person, not by image, to avoid near-duplicates leaking.

- By time: use chronological splits for video/ops settings to reflect future drift.

- Stratify labels: maintain class prevalence; for detection, stratify by object presence.

- Freeze test: publish a test manifest; never use it for tuning or model selection, only for the final evaluation.
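Splitting by source can be done by hashing a group key (camera, site, or person ID), so that every image from one source lands in the same split and assignments stay stable as new data arrives. A minimal sketch; the `camera_id` field and the 80/10/10 ratios are illustrative assumptions. It uses `hashlib` rather than Python's built-in `hash()`, whose output for strings changes between runs.

```python
import hashlib

def split_for(group_key, val_pct=10, test_pct=10):
    """Deterministically assign a whole group (e.g., a camera ID) to one split."""
    digest = hashlib.sha256(group_key.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100          # stable value in [0, 100)
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "val"
    return "train"

images = [
    {"path": "cam01/0001.jpg", "camera_id": "cam01"},
    {"path": "cam01/0002.jpg", "camera_id": "cam01"},
    {"path": "cam07/0001.jpg", "camera_id": "cam07"},
]
for im in images:
    print(im["path"], split_for(im["camera_id"]))
```

Near-duplicate frames from the same camera can therefore never straddle train and test.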

3) Annotation quality (what to check)

- Guidelines: one illustrated page with edge cases, IoU tolerance, “don’t-care” rules.

- Inter-annotator agreement: periodic overlap labeling; compute IoU/κ; reconcile disagreements.

- Box hygiene: tightness, coverage, no partial objects unless intended, consistent occlusion policy.

- Masks: close gaps, smooth jitter across frames, snap to boundaries if tool allows.

- Audit: sample 1–3% per batch; track error types and feed back into guidelines/tooling.
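For categorical labels, overlap agreement is often summarized with Cohen's kappa, \( \kappa = \frac{p_o - p_e}{1 - p_e} \), which corrects the observed agreement \(p_o\) for the agreement \(p_e\) expected by chance. A minimal sketch for two annotators labeling the same items (the labels are made up); box or mask agreement would use IoU instead.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    assert len(labels_a) == len(labels_b) and labels_a
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n   # observed agreement
    ca, cb = Counter(labels_a), Counter(labels_b)
    # Chance agreement: both annotators independently pick the same class.
    p_e = sum(ca[c] * cb[c] for c in ca) / (n * n)
    if p_e == 1.0:
        return 1.0  # degenerate case: both used one identical class throughout
    return (p_o - p_e) / (1 - p_e)

a = ["car", "car", "truck", "truck"]
b = ["car", "truck", "truck", "truck"]
print(cohens_kappa(a, b))  # -> 0.5
```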

4) Class imbalance & long tail

- Data: targeted collection; mine hard negatives; upsample rare classes carefully.

- Loss: focal/Tversky; class-balanced weights by effective number of samples.

- Sampler: repeat-factor or per-class sampling for detection/instance seg.

- Evaluation: report per-class metrics and macro-averages, not just micro.
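Class-balanced weights by effective number of samples (Cui et al., 2019) treat a class with \(n\) examples as worth \( E_n = \frac{1 - \beta^{n}}{1 - \beta} \) samples and weight it by \(1/E_n\). A sketch with illustrative counts; β = 0.999 is a typical setting, and rescaling the weights so they average to 1 is a convention rather than part of the definition.

```python
import numpy as np

def class_balanced_weights(counts, beta=0.999):
    """Per-class loss weights from the effective number of samples.

    counts: positive number of training examples per class.
    """
    counts = np.asarray(counts, dtype=float)
    effective_num = (1.0 - np.power(beta, counts)) / (1.0 - beta)
    weights = 1.0 / effective_num
    return weights * len(counts) / weights.sum()   # rescale: mean weight = 1

counts = [5000, 500, 20]          # head, mid, and tail class (example counts)
w = class_balanced_weights(counts)
print(np.round(w, 3))
```

The weights can then be passed to a weighted cross-entropy or focal loss, so tail classes contribute more per example.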

5) Augmentation that helps (task-aware)

- Classification: random crop/resize, flips, color jitter, RandAugment; mixup/cutmix for robustness.

- Detection: mosaic/multi-scale, random resize+crop, horizontal flip, photometric jitter; careful with rotations.

- Segmentation: elastic/affine, coarse dropout (CutOut), small distortions; maintain mask integrity.

- OCR: blur, perspective warp, synthetic fonts/backgrounds; keep text legible.

- Video: consistent per-clip transforms; sample varied clip lengths.
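Geometric augmentations must move labels together with pixels. A minimal sketch of a horizontal flip for detection, assuming boxes in absolute [x1, y1, x2, y2] pixel coordinates with x measured from the left edge; other box formats (e.g., normalized center-width) need a different update.

```python
import numpy as np

def hflip_with_boxes(image, boxes):
    """Flip an HxWxC image left-right and update [x1, y1, x2, y2] boxes to match."""
    w = image.shape[1]
    flipped = image[:, ::-1]
    boxes = np.asarray(boxes, dtype=float).copy()
    x1 = boxes[:, 0].copy()
    boxes[:, 0] = w - boxes[:, 2]   # new left edge comes from old right edge
    boxes[:, 2] = w - x1            # new right edge comes from old left edge
    return flipped, boxes

img = np.zeros((100, 200, 3), dtype=np.uint8)
img[10:30, 20:60] = 255                          # object spans x in [20, 60)
flipped, b = hflip_with_boxes(img, [[20, 10, 60, 30]])
print(b)  # box now spans x in [140, 180)
```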

6) Pre- & post-processing

- Pre: color space, mean/std normalization; letterbox vs. center-crop; tiling for large images.

- NMS: class-wise vs. class-agnostic; soft-NMS for crowded scenes; adjust IoU thresholds by object size.

- Confidence calibration: temperature scaling or isotonic on a held-out set.

- Test-time augmentation (TTA): flips/scale ensemble if latency allows; measure real gain.
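Greedy NMS fits in a few lines. A class-agnostic NumPy sketch, assuming [x1, y1, x2, y2] boxes; for class-wise NMS run it once per class, and soft-NMS would decay overlapping scores instead of discarding boxes.

```python
import numpy as np

def _area(b):
    return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])

def box_iou(box, boxes):
    """IoU between one box and an array of boxes, all [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    return inter / (_area(box) + _area(boxes) - inter)

def nms(boxes, scores, iou_thresh=0.5):
    """Greedy non-maximum suppression; returns indices of kept boxes."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]          # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Drop remaining boxes that overlap the kept box too much.
        order = rest[box_iou(boxes[i], boxes[rest]) <= iou_thresh]
    return keep

boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # -> [0, 2]
```

Raising `iou_thresh` keeps more overlapping boxes, which is the knob to revisit for crowded scenes.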

7) Training routine (reliable defaults)

- Backbone: start with an ImageNet-pretrained CNN/ViT; unfreeze progressively.

- Schedules: cosine or one-cycle; warmup 3–10% of steps; EMA weights for eval.

- Batching: mixed precision (fp16/bfloat16); gradient clipping for stability; gradient accumulation if memory-bound.

- Validation cadence: fixed epoch/step; early stop on primary metric (e.g., mAP@0.5:0.95, mIoU).
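The warmup-then-cosine schedule is just a function of the step index. A framework-agnostic sketch; the 5% warmup fraction and the zero floor are illustrative defaults, and you would set the returned value on your optimizer at each step.

```python
import math

def lr_at(step, total_steps, base_lr, warmup_frac=0.05, min_lr=0.0):
    """Linear warmup to base_lr, then cosine decay to min_lr."""
    warmup_steps = max(1, int(warmup_frac * total_steps))
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))

total = 1000
for s in (0, 49, 50, 525, 999):
    print(s, round(lr_at(s, total, base_lr=0.1), 5))
```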

8) Error analysis (what to look at)

- Per-class metrics: AP/IoU histograms; small vs. medium vs. large object performance.

- Confusion & localization: class confusions vs. low-IoU misses; heatmaps for activation sanity.

- Scenario slices: lighting, weather, camera angle, range, motion blur; build slice metrics.

- Qualitative review: save top FP/FN per class; weekly labeling quality spot-checks.
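Size slices commonly follow the COCO convention: small objects have area below 32² pixels, medium between 32² and 96², and large above 96². A minimal sketch for tagging boxes before computing per-slice metrics; COCO itself uses the annotated segment area, so using box area here is a simplification.

```python
def size_bucket(box):
    """COCO-style size bucket for an [x1, y1, x2, y2] box, by pixel area."""
    area = max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"

for b in ([0, 0, 20, 20], [0, 0, 50, 50], [0, 0, 200, 100]):
    print(b, size_bucket(b))
```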

9) Quick checklist (copy/paste)

- Task, latency, and risk defined; evaluation unit chosen.

- Leakage-safe splits (by source/time); frozen test manifest.

- Written annotation guide; periodic overlap & agreement checks.

- Imbalance plan (data, loss, sampler); report macro & per-class metrics.

- Task-aware augmentations; sensible pre/post; calibrated confidence.

- Stable training (warmup, schedule, EMA, mixed precision, clipping).

- Error analysis by slice/size; curated FP/FN gallery for action.

Next up: evaluate with task-specific metrics (IoU, mAP, DET curves) and plan deployment (edge vs. cloud). See the Foundational Algorithms section above for model families and map them into this pipeline.

Quick-Start Code Recipes (Classification · Detection · Segmentation · OCR)

Minimal, readable snippets to get from pixels → predictions. Use as learning aids; adapt to your tooling and datasets.

Image Classification (PyTorch)

Load a pretrained backbone and run a single image.

```python
import torch
from PIL import Image
from torchvision.models import resnet18, ResNet18_Weights

weights = ResNet18_Weights.DEFAULT
model = resnet18(weights=weights).eval()
preprocess = weights.transforms()          # resize, crop, normalize as in training

img = Image.open("example.jpg").convert("RGB")
x = preprocess(img).unsqueeze(0)           # [1, 3, H, W]

with torch.no_grad():
    logits = model(x)
probs = logits.softmax(dim=1).squeeze()
top5 = probs.topk(5)

categories = weights.meta["categories"]    # ImageNet class names
for p, idx in zip(top5.values, top5.indices):
    print(f"{categories[int(idx)]}: {p.item():.3f}")
```

- Swap backbone (e.g., ResNet50, ViT) as needed.

- Fine-tune by replacing the final layer and training on your labels.

Object Detection (YOLO family)

Fast baseline for detection/instance tasks.

```python
from ultralytics import YOLO     # pip install ultralytics

model = YOLO("yolov8n.pt")       # choose size: n/s/m/l/x
results = model("example.jpg")   # list of Results, one per image

for r in results:
    for b in r.boxes:
        cls = int(b.cls[0])
        conf = float(b.conf[0])
        xyxy = b.xyxy[0].tolist()
        print(r.names[cls], conf, xyxy)
```

- Train on your data: `model.train(data="data.yaml", epochs=50)`

- Export: `model.export(format="onnx")` → use in deployment pipelines.

Semantic Segmentation (DeepLabv3)

Quick semantic masks with torchvision.

```python
import torch
import torchvision.transforms as T
from PIL import Image
from torchvision.models.segmentation import deeplabv3_resnet50

model = deeplabv3_resnet50(weights="DEFAULT").eval()
tr = T.Compose([
    T.Resize(520),
    T.ToTensor(),
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),  # ImageNet stats
])

img = Image.open("example.jpg").convert("RGB")
x = tr(img).unsqueeze(0)

with torch.no_grad():
    out = model(x)["out"]               # [1, C, H, W] per-class scores
mask = out.argmax(1).squeeze().cpu()    # per-pixel class id
print(mask.shape, mask.unique())
```

- Report mIoU per class; validate on slice conditions (lighting, distance).

OCR (Tesseract + OpenCV)

Simple, classical OCR with language packs.

```python
import cv2
import pytesseract

img = cv2.imread("scan.png")
if img is None:
    raise FileNotFoundError("scan.png")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
# Otsu's method picks the binarization threshold automatically.
gray = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]
text = pytesseract.image_to_string(gray, lang="eng")
print(text)
```

- For scene text, try CRNN/Transformers; ensure legible fonts & lighting.

Next: see Evaluation & Error Analysis and Edge vs Cloud Deployment to validate and ship your pipeline.

Computer Vision Evaluation & Error Analysis

Evaluation & Error Analysis for Vision Systems

Good models aren’t just trained — they’re measured, inspected, and iterated. Use the checklist below to evaluate classification, detection, and segmentation systems, then dig into error patterns that unlock the next gains.

1) Classification (top-1 / multi-label)


- Per-class metrics: macro vs. micro; look for tail classes.

- Confusion matrix: rank the heaviest confusions (e.g., “cat→fox”).

- Calibration: reliability curve / ECE; over-confident models harm UX.

- Thresholding: for multi-label, sweep threshold; plot precision–recall.
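The per-class and confusion-matrix checks above can be sketched in NumPy. This is a minimal illustration for single-label classification, assuming integer class ids; the helper names (`confusion_matrix`, `per_class_prf`, `macro_micro_f1`, `top_confusions`) are hypothetical, not a library API.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows = ground-truth class, columns = predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def per_class_prf(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as c, actually another class
    fn = cm.sum(axis=1) - tp  # actually c, predicted as another class
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    return precision, recall, f1, tp, fp, fn

def macro_micro_f1(cm):
    _, _, f1, tp, fp, fn = per_class_prf(cm)
    macro = f1.mean()  # every class counts equally, so tail classes show up
    micro_p = tp.sum() / (tp.sum() + fp.sum())
    micro_r = tp.sum() / (tp.sum() + fn.sum())
    micro = 2 * micro_p * micro_r / (micro_p + micro_r)  # equals accuracy for single-label
    return macro, micro

def top_confusions(cm, k=3):
    """Heaviest off-diagonal cells as (true, predicted, count)."""
    off = cm.copy()
    np.fill_diagonal(off, 0)
    flat = np.argsort(off, axis=None)[::-1][:k]
    rows, cols = np.unravel_index(flat, off.shape)
    return [(int(r), int(c), int(off[r, c])) for r, c in zip(rows, cols) if off[r, c] > 0]
```

A large gap between micro and macro F1 usually means a few frequent classes are masking weak rare ones.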

2) Object Detection (mAP & localization)


- IoU thresholds: report both mAP@50 and mAP@[.50:.95].

- Error buckets: missed GT (FN), spurious FP, poor IoU, wrong class.

- Scale sensitivity: small/medium/large objects; crowd vs. isolated.

- NMS sanity: vary score_thresh and nms_iou, then re-check PR curves.
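To see what score_thresh and nms_iou actually do, here is a minimal greedy, class-agnostic NMS sketch in NumPy. It assumes boxes in [x1, y1, x2, y2] format; the function names are illustrative, and production detectors typically run an optimized, often per-class, variant.

```python
import numpy as np

def box_iou(box, boxes):
    """IoU between one box and an (N, 4) array of boxes, all as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter + 1e-12)

def nms(boxes, scores, score_thresh=0.25, nms_iou=0.5):
    """Greedy NMS; returns indices into the original arrays, highest score first."""
    idx = np.nonzero(scores >= score_thresh)[0]  # drop low-confidence boxes first
    order = idx[np.argsort(-scores[idx])]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        if order.size == 1:
            break
        ious = box_iou(boxes[best], boxes[order[1:]])
        order = order[1:][ious <= nms_iou]  # suppress heavy overlaps with the kept box
    return keep
```

Raising nms_iou keeps more overlapping boxes (helpful in crowds, more duplicates elsewhere); lowering score_thresh trades precision for recall.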

3) Segmentation (IoU / Dice)

- Class imbalance: report per-class IoU; watch tiny/rare regions.

- Boundary quality: Boundary-F or trimap IoU for edge fidelity.

- Post-processing: CRF/morph ops — quantify if they help or hide issues.
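IoU and Dice for a single binary mask can be computed as below. This is a minimal NumPy sketch; scoring two empty masks as 1.0 is a convention assumed here, so state whichever convention you use when reporting.

```python
import numpy as np

def mask_iou_dice(pred, gt):
    """IoU and Dice for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement (a convention)
    iou = inter / union
    dice = 2 * inter / (pred.sum() + gt.sum())
    return float(iou), float(dice)
```

The two scores are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank models identically; for multi-class segmentation, call this once per class mask.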

4) Robustness & OOD sanity

- OOD holdout: new camera, lighting, or domain; track metric deltas.

- Common corruptions: blur, noise, JPEG, fog; plot performance vs. severity.

- Test-time augmentation (TTA): flip/resize/crop at inference and average the predictions if latency allows.

- Safety checks: adversarial-like perturbations should not flip obvious cases.

Practical Error-Analysis Playbook

- Sort failures: create top-N “worst” lists by confidence and loss.

- Tag buckets: lighting, occlusion, small objects, viewpoint, class-lookalikes.

- Look at distributions: per-class support, box sizes, aspect ratios, image entropy.

- Targeted fixes: curate a small corrective set (50–500 imgs) per bucket; add to training.

- Re-measure: always re-report the same KPIs; watch for regressions.

Minimal formulas you’ll see

- Precision / Recall: \( \mathrm{P}=\frac{TP}{TP+FP},\ \mathrm{R}=\frac{TP}{TP+FN} \)

- F1: \( \mathrm{F1}=2\frac{\mathrm{P}\cdot\mathrm{R}}{\mathrm{P}+\mathrm{R}} \)

- IoU (seg/det): \( \mathrm{IoU} = \frac{|A\cap B|}{|A\cup B|} \)

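- Expected Calibration Error (ECE): bucket confidences into bins \(B_1,\dots,B_M\) and average |conf − acc| weighted by bucket size: \( \mathrm{ECE}=\sum_{m=1}^{M}\frac{|B_m|}{n}\,\big|\mathrm{acc}(B_m)-\mathrm{conf}(B_m)\big| \)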
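The ECE formula translates directly into NumPy. This sketch assumes equal-width confidence bins (10 by default, a common but not universal choice) and takes the top-1 confidence plus a correct/incorrect flag per sample.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # bin b covers (edges[b], edges[b+1]]; confidence 0 falls into the first bin
    bins = np.digitize(confidences, edges[1:-1], right=True)
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        in_bin = bins == b
        if not in_bin.any():
            continue
        gap = abs(confidences[in_bin].mean() - correct[in_bin].mean())
        ece += in_bin.sum() / n * gap  # weight each bin by its share of samples
    return ece
```

ECE depends on the bin count, so report n_bins alongside the number.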

Vision Metrics that Matter (IoU, AP, mAP, mIoU, PQ)

Choose metrics that match the task. Report both headline and slice/per-class results for honest progress tracking.

1) IoU & localization quality

Intersection over Union (IoU): overlap quality for boxes or masks.

IoU(A,B) = area(A ∩ B) / area(A ∪ B)

- Used to decide whether a detection counts as a TP (e.g., IoU ≥ 0.5, or each threshold of the 0.50:0.95 grid in turn).

- For segmentation, IoU is computed per class from masks.
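Deciding TP vs. FP for one class and one image can be sketched as greedy matching in descending score order, where each ground-truth box can be claimed only once. This is a simplified NumPy illustration (no crowd or "ignore" regions, which COCO's evaluator also handles); the function names are hypothetical.

```python
import numpy as np

def iou_matrix(a, b):
    """Pairwise IoU between (N, 4) and (M, 4) boxes in [x1, y1, x2, y2] format."""
    x1 = np.maximum(a[:, None, 0], b[None, :, 0])
    y1 = np.maximum(a[:, None, 1], b[None, :, 1])
    x2 = np.minimum(a[:, None, 2], b[None, :, 2])
    y2 = np.minimum(a[:, None, 3], b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a[:, None] + area_b[None, :] - inter + 1e-12)

def match_detections(pred_boxes, scores, gt_boxes, iou_thresh=0.5):
    """Returns a boolean array in original prediction order: True = TP, False = FP."""
    is_tp = np.zeros(len(pred_boxes), dtype=bool)
    if len(pred_boxes) == 0 or len(gt_boxes) == 0:
        return is_tp
    ious = iou_matrix(pred_boxes, gt_boxes)
    taken = np.zeros(len(gt_boxes), dtype=bool)
    for i in np.argsort(-scores):  # most confident prediction claims GT first
        candidates = np.where(taken, -1.0, ious[i])  # ignore GT already matched
        j = int(np.argmax(candidates))
        if candidates[j] >= iou_thresh:
            is_tp[i] = True
            taken[j] = True
    return is_tp
```

Unmatched ground truths are the FNs (len(gt_boxes) − is_tp.sum()). Note that a duplicate box becomes an FP even if it overlaps the GT well, which is why NMS settings move mAP.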

2) Precision, Recall & AP

- Precision: of predicted positives, how many are correct.

- Recall: of all actual positives, how many are found.

- AP (Average Precision): area under the precision–recall curve (per class).

Sort detections by confidence, sweep thresholds → PR curve → compute AP.
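The sort-sweep-integrate recipe can be sketched with all-point interpolation (the PASCAL VOC 2010+ style envelope). This is a minimal NumPy version assuming TP/FP flags have already been assigned at one IoU threshold; COCO samples the envelope at 101 recall points instead, so its numbers differ slightly.

```python
import numpy as np

def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at one IoU threshold."""
    order = np.argsort(-np.asarray(scores))
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / max(num_gt, 1)
    precision = tp_cum / (tp_cum + fp_cum)
    # sentinels, then make precision non-increasing from right to left (the envelope)
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # sum rectangle areas wherever recall increases
    idx = np.nonzero(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))
```

For mAP@[0.5:0.95], repeat the TP/FP assignment and this AP computation at each threshold in np.linspace(0.5, 0.95, 10), then average over thresholds and classes.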

3) mAP for detection/instance seg

mAP: mean of AP across classes. Common settings:

- mAP@0.5: AP at IoU ≥ 0.5 (PASCAL-style).

- mAP@[0.5:0.95]: average AP over IoU thresholds 0.50, 0.55, …, 0.95 (COCO-style).

Always include per-class AP and small/medium/large object breakdowns.

4) mIoU for semantic segmentation

mIoU: mean IoU across all classes.

mIoU = (1/C) · Σ_c IoU_c

- Ignore unlabeled/void pixels consistently.

- Report frequency-weighted IoU when class imbalance is severe.
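Both mIoU and frequency-weighted IoU fall out of one pixel-level confusion matrix. This NumPy sketch assumes integer label maps and uses 255 as the void/ignore label, a common convention that you should replace with your dataset's value; classes absent from both prediction and GT are skipped.

```python
import numpy as np

def seg_confusion(pred, gt, num_classes, ignore_index=255):
    valid = gt != ignore_index  # drop void/unlabeled pixels consistently
    idx = gt[valid].astype(np.int64) * num_classes + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def iou_scores(cm):
    tp = np.diag(cm).astype(float)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    present = union > 0  # class appears in prediction or GT
    iou = np.full(len(tp), np.nan)
    iou[present] = tp[present] / union[present]
    miou = float(np.nanmean(iou))
    freq = cm.sum(axis=1) / cm.sum()  # share of GT pixels per class
    fw_iou = float(np.nansum(freq * iou))
    return iou, miou, fw_iou
```

Accumulate the confusion matrix over the whole evaluation set before computing IoU; averaging per-image mIoU gives a different (and usually noisier) number.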

5) Panoptic Quality (PQ)

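PQ combines recognition & segmentation quality for panoptic tasks. A predicted and a ground-truth segment are matched (a TP) when their IoU > 0.5, which makes the matching unique.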

PQ = Σ IoU(TP) / ( |TP| + 0.5|FP| + 0.5|FN| )

Also seen as PQ = SQ × RQ, where SQ = Σ IoU(TP) / |TP| is the mean IoU over matched TPs and RQ = |TP| / ( |TP| + 0.5|FP| + 0.5|FN| ) is an F1-style recognition score.
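Given the matched segment IoUs for one class, PQ and its factors are a few lines of arithmetic. This plain-Python sketch assumes matching has already been done with the IoU > 0.5 rule; returning None for a class absent from both prediction and GT (so it is left out of the class average) is a choice made here.

```python
def panoptic_quality(tp_ious, num_fp, num_fn):
    """PQ, SQ, RQ for one class from the IoUs of its matched (pred, GT) segment pairs."""
    tp = len(tp_ious)
    denom = tp + 0.5 * num_fp + 0.5 * num_fn
    if denom == 0:
        return None  # class absent from both prediction and GT
    iou_sum = sum(tp_ious)
    sq = iou_sum / tp if tp > 0 else 0.0  # segmentation quality
    rq = tp / denom  # recognition quality (F1-like)
    pq = iou_sum / denom  # equals sq * rq
    return pq, sq, rq
```

Dataset-level PQ is the mean over classes; reporting SQ and RQ separately shows whether errors come from mask quality or from missed/spurious segments.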

6) Reporting best practices

- Use class-wise tables and slice metrics (lighting, range, camera, motion).

- Calibrate confidence: include ECE or reliability plots for decision thresholds.

- Provide error galleries (top FN/FP) per class for qualitative review.

7) Quick metric checklist

- Detection/instance seg: mAP@[0.5:0.95] + per-class AP + size breakdowns.

- Semantic seg: mIoU (+ freq-weighted IoU if imbalance).

- Panoptic: PQ + its SQ and RQ components.

- Confidence calibration: report ECE and, if needed, fit temperature scaling on a held-out set.

- Publish exact eval config (IoU thresholds, NMS, score cutoffs).
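Temperature scaling divides the logits by a single scalar T fitted on held-out data to minimize negative log-likelihood. This NumPy sketch uses a simple grid search over T as a stand-in for the gradient-based optimizer usually used; the grid range is an assumption. Because T rescales all logits equally, the argmax (and therefore accuracy) does not change.

```python
import numpy as np

def softmax(logits, T=1.0):
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def nll(logits, labels, T):
    p = softmax(logits, T)
    return float(-np.mean(np.log(p[np.arange(len(labels)), labels] + 1e-12)))

def fit_temperature(val_logits, val_labels, grid=np.linspace(0.5, 5.0, 91)):
    """Pick the T on the grid that minimizes held-out NLL."""
    losses = [nll(val_logits, val_labels, T) for T in grid]
    return float(grid[int(np.argmin(losses))])
```

T > 1 softens an over-confident model; re-measure ECE after fitting and keep T in the versioned eval config.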

Deployment & Performance for Computer Vision (Edge vs Cloud)

Edge vs Cloud Deployment for Vision (Quantization, Runtime, Constraints)

Pick a target by latency, privacy, and ops constraints. Optimize model and pipeline to meet the budget.

1) Choosing the target

| Factor | Edge (on-device) | Cloud (service) |
|---|---|---|
| Latency | Lowest; no network hop | Depends on RTT/throughput; batch helps |
| Bandwidth | No uplink needed | Video upload can be costly |
| Privacy | Data stays local | Centralized control & audits |
| Compute | Constrained power/memory | Scalable GPUs/TPUs |
| Ops model | Harder to update fleet | Central updates & A/B tests |
| Costs | CapEx (devices), low OpEx | OpEx (compute/storage/egress) |

2) Model optimization (portable wins)

- Quantization: INT8 (post-training or QAT), FP16/bfloat16; calibrate with real data.

- Pruning: structured/channel pruning to preserve speedups on target HW.

- Distillation: train a compact student from a large teacher.

- Fusion: conv+BN+act fusion; operator folding before export.

- Resolution/anchors: scale input, reduce anchors/classes for throughput.
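To make "calibrate with real data" concrete, this NumPy sketch shows the simplest INT8 scheme: symmetric, per-tensor quantization whose scale comes from the maximum absolute value seen on calibration data. It is an illustration only; TensorRT and TFLite use more elaborate calibrators (e.g., entropy or percentile) and per-channel weight scales.

```python
import numpy as np

def calibrate_scale(calib_tensor, num_bits=8):
    """Symmetric per-tensor scale from the max |value| seen during calibration."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    amax = float(np.max(np.abs(calib_tensor)))
    return amax / qmax if amax > 0 else 1.0

def quantize(x, scale, num_bits=8):
    qmax = 2 ** (num_bits - 1) - 1
    # round to the nearest step and saturate; int8 storage assumes num_bits <= 8
    return np.clip(np.round(x / scale), -qmax, qmax).astype(np.int8)

def dequantize(q, scale):
    return q.astype(np.float32) * scale
```

Within the calibrated range the rounding error is at most scale / 2; values outside it saturate. This is why calibration data must cover real operating conditions: unrepresentative data gives a scale that either clips real activations or wastes resolution.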

Export via ONNX or native (TFLite, Core ML) and verify numerics after each step.

3) Runtimes & targets

- Mobile/Edge: TFLite(+NNAPI), Core ML, ONNX Runtime Mobile, MNN, ncnn.

- Embedded NVIDIA: TensorRT (FP16/INT8 with calibration; DLA cores on Xavier/Orin).

- x86/Server: ONNX Runtime, TensorRT, OpenVINO; batch & dynamic shapes.

- Browsers: WebGPU/WebGL via ONNX Runtime Web or tf.js for light tasks.

4) Edge pipelines (Jetson/mobile)

- Zero-copy I/O (DMA/Native buffers); GPU pre-processing (resize, letterbox, color convert).

- TensorRT: build engine with proper max workspace; use FP16 or INT8 with calibration cache.

- Schedule: pin threads, set power modes; cap FPS or use duty cycling to meet thermals.

- Post-proc: GPU NMS if possible; throttle visualizers to avoid CPU spikes.
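Letterboxing (from the pre-processing step above) is easy to get subtly wrong when mapping boxes back to the original frame. This plain-Python sketch covers only the coordinate math, assuming symmetric padding; the actual resize and pad would run on the GPU or in OpenCV, and the function names are illustrative.

```python
def letterbox_params(src_w, src_h, dst_w, dst_h):
    """Scale that fits the image inside dst without distortion, plus symmetric padding."""
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x = (dst_w - new_w) / 2
    pad_y = (dst_h - new_h) / 2
    return scale, pad_x, pad_y

def unletterbox_box(box, scale, pad_x, pad_y):
    """Map an [x1, y1, x2, y2] box from network-input coords back to the original image."""
    x1, y1, x2, y2 = box
    return [(x1 - pad_x) / scale, (y1 - pad_y) / scale,
            (x2 - pad_x) / scale, (y2 - pad_y) / scale]
```

For a 1280×720 frame and a 640×640 input, the scale is 0.5 with 140 px of padding above and below; forgetting to subtract that padding shifts every box vertically.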

5) Cloud pipelines

- Autoscale GPU workers; right-size instances to model memory.

- Batching for throughput (within SLA); async queues; warm pools to avoid cold starts.

- Streaming ingestion: chunked uploads, keyframe sampling; pre-decode near model.

- Observability: per-route latency, GPU util, VRAM, error rates; slow query logs.

6) Safety & monitoring

- Confidence calibration + abstain/escalate path.

- OOD/drift checks (feature stats, FPR@T for “unknown”).

- Canary/shadow deploys; gated rollouts with rollback.

- Periodic re-eval on frozen test + fresh drift set.

7) Deployment checklist

- Exported & verified model (ONNX/TFLite/TRT) matches reference.

- Meets latency/throughput on target device with real video.

- Quantization accuracy drop ≤ agreed budget (e.g., <1% mAP).

- Telemetry, health endpoints, and versioned configs enabled.

- Fallback (older model / cloud) defined for low-confidence cases.

Rule of thumb: optimize the whole path—I/O, pre-proc, model, post-proc, and transport—not just the network.

Computer Vision — Model Export & Acceleration

Start from a trained PyTorch model. Use representative inputs for tracing and calibration. Verify numerics after every hop.

1) Export PyTorch → ONNX

import torch
from torchvision import models

# Example model & sample
model = models.resnet18(weights=None)  # replace with your trained net
model.eval()
sample = torch.randn(1, 3, 224, 224)

torch.onnx.export(
    model, sample, "model.onnx",
    opset_version=17,
    input_names=["input"],
    output_names=["logits"],
    # keys are integer axis indices: batch (0), height (2), width (3)
    dynamic_axes={
        "input": {0: "N", 2: "H", 3: "W"},
        "logits": {0: "N"},
    },
)
print("Saved model.onnx")

Use dynamic_axes if your inference sizes vary. Keep the opset modern (e.g., 17/18).

2) Sanity-check ONNX with ONNX Runtime

import onnxruntime as ort
import numpy as np
import torch

ort_sess = ort.InferenceSession("model.onnx", providers=["CPUExecutionProvider"])
x = np.random.randn(1, 3, 224, 224).astype(np.float32)
y_onnx = ort_sess.run(["logits"], {"input": x})[0]

# (Optional) Compare to PyTorch numerically; `model` is the net exported above
with torch.no_grad():
    y_torch = model(torch.from_numpy(x)).numpy()
cos = (y_onnx * y_torch).sum() / (np.linalg.norm(y_onnx) * np.linalg.norm(y_torch))
print("Cosine similarity:", cos)
print("Max abs diff:", np.abs(y_onnx - y_torch).max())

Expect small differences from kernels and eps; big gaps mean an export/operator issue.

3) Build TensorRT engine (CLI)

# FP16 engine (--memPoolSize needs TensorRT 8.4+; older releases use --workspace=4096)
trtexec --onnx=model.onnx --saveEngine=model_fp16.plan --fp16 --memPoolSize=workspace:4096

# INT8 with calibration (supply a calibration cache or dataloader in Python)
trtexec --onnx=model.onnx --saveEngine=model_int8.plan --int8 \
    --calib=/path/to/calib.cache --memPoolSize=workspace:4096

For dynamic input sizes, pass --minShapes/--optShapes/--maxShapes when building the engine and --shapes when benchmarking. Verify perf & accuracy vs ONNX.

4) Optional: TensorRT from Python

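import tensorrt as trt

logger = trt.Logger(trt.Logger.INFO)
builder = trt.Builder(logger)
network = builder.create_network(1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH))
parser = trt.OnnxParser(network, logger)
with open("model.onnx", "rb") as f:
    if not parser.parse(f.read()):
        raise RuntimeError(str(parser.get_error(0)))

config = builder.create_builder_config()
config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, 4 << 30)  # 4 GB
config.set_flag(trt.BuilderFlag.FP16)
# Dynamic axes need an optimization profile; these min/opt/max shapes are examples
profile = builder.create_optimization_profile()
profile.set_shape("input", (1, 3, 224, 224), (1, 3, 224, 224), (8, 3, 448, 448))
config.add_optimization_profile(profile)
# build_serialized_network replaces the deprecated build_engine + serialize()
plan = builder.build_serialized_network(network, config)
with open("model_fp16.plan", "wb") as f:
    f.write(plan)

5) PyTorch → TFLite (via TensorFlow)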

Route A: ONNX → TensorFlow (e.g., onnx2tf) → TFLite.

# Convert ONNX to TensorFlow SavedModel
onnx2tf -i model.onnx -o tf_model

# Then convert SavedModel to TFLite (FP16 weights)
python - <<'PY'
import tensorflow as tf
converter = tf.lite.TFLiteConverter.from_saved_model("tf_model")
converter.optimizations = [tf.lite.Optimize.DEFAULT]  # enable size/speed opts
# Without this line, Optimize.DEFAULT alone yields dynamic-range INT8 weights, not FP16
converter.target_spec.supported_types = [tf.float16]
tflite_model = converter.convert()
with open("model_fp16.tflite", "wb") as f:
    f.write(tflite_model)
print("Saved model_fp16.tflite")
PY

For full-INT8, add a representative dataset function to calibrate activations.

6) Verify TFLite numerics

import numpy as np, tensorflow as tf

interpreter = tf.lite.Interpreter(model_path="model_fp16.tflite")
interpreter.allocate_tensors()
inp = interpreter.get_input_details()[0]
out = interpreter.get_output_details()[0]

x = np.random.randn(1, 224, 224, 3).astype(np.float32)  # NHWC layout after onnx2tf
interpreter.set_tensor(inp["index"], x)
interpreter.invoke()
y = interpreter.get_tensor(out["index"])
print("TFLite output shape:", y.shape)

7) Export & Deployment Checklist

- Use representative inputs for tracing and (INT8) calibration.

- Keep an eval set to measure accuracy deltas after each hop (ONNX → TRT/TFLite).

- Lock opset and runtime versions in CI to avoid silent drifts.

- Benchmark full pipeline (I/O + pre/post) on the actual device.

- Document exact flags (e.g., trtexec shapes, precision, workspace) for reproducibility.

Computer Vision — Learning & Wrap-Up

Why Study Computer Vision

Understanding How Machines Perceive the Visual World

Exploring the Foundations of Image Processing and Deep Learning

Driving Innovation in a Range of Applications

Addressing Ethical, Security, and Privacy Concerns

Preparing for Careers in AI, Robotics, and Digital Innovation

Vision Metrics Cheatsheet (IoU • mAP • mIoU • PQ)

A quick visual refresher of how common metrics relate across detection, semantic, and panoptic tasks.

Explore World-Class Resources to Learn and Apply Computer Vision

To deepen your understanding of computer vision and its transformative impact, we highlight three authoritative resources that offer high-quality, accessible pathways into the field. The Stanford Car Dataset and Computer Vision Tutorial introduces learners to fundamental concepts and annotated datasets through a structured academic lens. The Computer Vision Foundation connects you to cutting-edge research, real-world applications, and conferences that shape the future of visual intelligence. Meanwhile, PyTorch’s Official Computer Vision Tutorials provide hands-on, beginner-friendly coding environments and pre-trained model zoos, ideal for experimenting with deep learning workflows. Together, these resources form a powerful learning triad—offering theory, discovery, and practice for students, educators, and innovators alike.

Resource 1: Explore Stanford’s Classic Overview of Computer Vision and Its Applications

Stanford University has long been a pioneer in the development and teaching of computer vision, offering foundational resources that have educated generations of students, researchers, and practitioners. Among its most influential contributions is the Stanford Cars Dataset—a meticulously curated benchmark that has become a gold standard in fine-grained image classification and object recognition. The dataset contains over 16,000 images of 196 classes of cars, spanning makes, models, and years. These images are annotated with labels for training and testing, allowing researchers to evaluate how well computer vision models can distinguish subtle differences among visually similar objects.

This digital illustration highlights the essence of the Stanford Cars dataset, showcasing a red sports car, yellow hatchback, and gray sedan rendered in a semi-realistic art style. Positioned against a grid-like background with sketch overlays, the image reflects the diversity of vehicle classes captured in the dataset and the structured approach used for training computer vision models in fine-grained image classification tasks.

This stylized digital illustration presents key concepts from Stanford’s Computer Vision overview. A surveillance camera and two sedans—one highlighted for object detection and the other representing autonomous driving—are arranged around the bold label “Computer Vision.” Dashed boxes and grid lines visually emphasize these core capabilities, highlighting how computer vision applies to real-world scenarios in security and self-driving vehicles.

Students and professionals benefit from the course’s combination of theoretical lectures, coding assignments, and open datasets. The course walks learners through the intricacies of loss functions, activation layers, optimization methods like stochastic gradient descent, and performance evaluation metrics. The curriculum also introduces cutting-edge techniques such as data augmentation, transfer learning, and the use of pre-trained models, which are essential for building robust computer vision systems today.

This educational visual presents a simplified flat-style diagram of a red sedan within a dotted bounding box, placed against a beige grid. The image includes text labels—“Computer Vision,” “Stanford Car Dataset,” and “Object Detection”—linked by layout to a monitor showing the same car image. It visually explains the process of object recognition in machine learning using annotated car datasets like Stanford’s.

The Stanford Car Dataset and CV Tutorial together highlight the importance of not just designing accurate models, but also understanding how models interpret nuanced visual cues in high-dimensional data. These resources are routinely referenced in academic papers and serve as the foundation for countless graduate theses and industrial R&D efforts. Moreover, they provide a practical bridge between low-level image processing and high-level AI systems that power autonomous vehicles, intelligent robots, and real-time video analysis.

By exploring Stanford’s classic contributions, learners gain not only access to invaluable tools and datasets but also an appreciation for the evolving challenges in computer vision—ranging from generalization and domain adaptation to fairness and interpretability. Whether preparing for university coursework, contributing to open-source projects, or innovating in industry, these resources equip students with both the technical depth and strategic perspective needed to excel in one of the most dynamic fields in modern AI.

Resource 2: Dive into the Latest Advances and Real-World CV Applications at the Computer Vision Foundation

The Computer Vision Foundation (CVF) is one of the premier global platforms advancing the study and dissemination of cutting-edge research in computer vision. It serves as a hub for academic papers, datasets, and technological demonstrations presented at the world’s top conferences, notably the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), the International Conference on Computer Vision (ICCV), and the European Conference on Computer Vision (ECCV).

This digital artwork captures the essence of cutting-edge research supported by the Computer Vision Foundation. It uses abstract forms and vibrant contrasts—such as a silhouette head with an embedded eye, a desktop screen showing labeled vehicle images, and symbolic representations of AI and neural networks—to evoke the intersection of perception, data, and deep learning. The scene symbolizes how vision algorithms and research shape future technologies in autonomy, analytics, and augmented cognition.

For students, educators, and professionals alike, CVF offers an invaluable resource to explore how the theoretical foundations of deep learning and supervised learning evolve into practical, high-impact applications. Research hosted on the site covers a wide spectrum of topics—from 3D scene reconstruction and semantic segmentation to object detection and human pose estimation—often backed by open-source code and benchmark datasets. This makes it an ideal platform for those pursuing university projects or building prototypes based on recent breakthroughs.

One of CVF’s distinctive strengths is the sheer scale and visibility of its community. Leading labs and companies from around the world submit papers to CVPR and ICCV, ensuring that the content reflects both academic rigor and real-world relevance. For example, recent CVPR proceedings feature developments in autonomous vehicle vision systems, medical image analysis, and even vision-based robotics for agriculture. Each paper not only explains the methodology but often provides experimental results and ablation studies, giving readers an in-depth understanding of how vision algorithms are evaluated and optimized.

This modern digital artwork portrays a computer vision researcher, illustrated with bold lines and vibrant colors, interacting with an on-screen interface that highlights a red car enclosed in a bounding box labeled “CAR.” The background combines geometric circuitry patterns and a dynamic orange-blue contrast, evoking the intersection of AI, engineering, and visual technology. The scene captures a moment of insight and precision, celebrating the role of human expertise in developing intelligent visual recognition systems.

The site also emphasizes transparency and reproducibility—core values in today’s AI research ecosystem. Students accessing CVF materials will find links to GitHub repositories, pretrained model files, and detailed dataset annotations, allowing them to replicate experiments or build upon published work. This facilitates active learning and critical thinking, especially in university courses focusing on robotics, data science, or expert systems.

Educators can use CVF content to supplement lectures with real-world case studies or assign recent papers as reading materials to inspire discussion about ethics, algorithmic fairness, and technological frontiers. Meanwhile, industry practitioners and startup teams consult the CVF library to scout emerging methods that might soon shape autonomous drones, visual inspection tools, or augmented reality interfaces.

A stylized digital illustration depicts a female scientist in a retro-futuristic lab setting, examining visual data from a screen displaying a labeled image of an orange car marked “SAT-CAR.” The screen features bounding boxes and line charts, representing object recognition and data interpretation. In the background, abstract circuit patterns and a futuristic cityscape symbolize the integration of human insight and machine learning. This scene highlights Stanford’s contributions to advancing computer vision through datasets and tutorials.

By diving into the Computer Vision Foundation’s resources, learners not only keep pace with the field’s most recent innovations but also participate in the broader scientific dialogue shaping the future of visual intelligence. Whether exploring foundational topics or aspiring to contribute original research, CVF remains a gateway to excellence in computer vision.

Resource 3: Explore Hands‑On Learning with PyTorch’s Computer Vision Tutorials

The PyTorch Computer Vision Tutorials offer a dynamic and practical entry point for students, educators, and developers seeking to deepen their understanding of visual recognition tasks through modern deep learning techniques. Developed by the creators of the PyTorch framework—one of the most widely adopted platforms for machine learning and AI research—these tutorials bridge foundational concepts with hands-on coding experiences, allowing learners to explore how real-world image classification and object detection models are built, trained, and deployed.

These resources feature step-by-step guidance for tasks such as training neural networks on the CIFAR-10 dataset, implementing transfer learning, using data augmentation to improve model generalization, and deploying models to production-ready pipelines. Learners gain exposure to essential modules such as convolutional neural networks (CNNs), loss functions, optimizers, and GPU acceleration—all in an interactive environment using Python and PyTorch.

A key strength of PyTorch’s educational materials lies in their integration with pre-trained model zoos, which provide ready-to-use architectures like ResNet, AlexNet, and VGG. This allows students to build sophisticated solutions without starting from scratch, fostering faster experimentation and insight. The tutorials also support visualization tools like TensorBoard and Grad-CAM, enabling a clearer understanding of what the model “sees” when interpreting input data.

This modern flat-style digital illustration presents an educational interface for PyTorch’s official computer vision tutorials. It features a computer monitor screen with organized tutorial modules labeled “Neural Networks,” “Datasets,” and “Tensors,” alongside familiar PyTorch syntax like import torch and transform. The image conveys the hands-on and modular nature of PyTorch’s learning environment, aimed at students and developers working on visual AI systems.

For educators and curriculum designers, PyTorch’s modular approach and Jupyter Notebook compatibility make it easy to embed into classroom environments, online courses, or AI bootcamps. Each tutorial is structured to reinforce theoretical knowledge with coding practice, making abstract ideas tangible and testable.

Ultimately, the PyTorch Computer Vision Tutorials stand out not only as instructional materials but as a launchpad for creative innovation. Whether you aim to develop smarter autonomous vehicles, healthcare diagnostics, or intelligent retail systems, these hands-on guides give you the tools to bring your ideas to life.

A flat-design digital illustration shows a young woman enthusiastically coding a convolutional neural network using PyTorch. She sits at a desk with an open notebook, while a diagram on the wall behind her visualizes the Conv-ReLU pipeline in deep learning. This image emphasizes the hands-on, learner-friendly nature of PyTorch’s tutorials and model zoos for mastering computer vision techniques.

When Vision Breaks: Shift, OOD & Safety

Computer vision works in the lab, then meets the messy world: lighting changes, cameras age, users behave in new ways. This section shows how to anticipate those shifts, catch out-of-distribution (OOD) inputs, and keep systems safe once deployed.

Types of Shift

- Covariate shift: the input distribution P(x) changes (new sensors, lighting, viewpoints) while the labeling rule P(y|x) stays the same.

- Label shift: class frequencies P(y) change (rare defects become common after a process tweak) while each class still looks the same.

- Concept shift: the mapping P(y|x) itself moves (new product lines, new road markings, updated uniforms).

Detecting Drift in the Wild

Compare recent data windows against a trusted baseline: class balance, confidence histograms, feature/embedding distances, and simple summary stats. Keep a small canary set from critical conditions and run it before every release. For distributed systems, surface these stats from edge devices through backends built on internet & web technologies.
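One simple way to compare a recent window against the baseline is the population stability index (PSI) over a 1-D statistic such as top-1 confidence. This NumPy sketch is one option among many (KS tests or embedding distances are alternatives); bins are placed at baseline quantiles, and alert thresholds such as 0.1 or 0.25 are common heuristics rather than standards.

```python
import numpy as np

def population_stability_index(baseline, recent, n_bins=10, eps=1e-6):
    """PSI between two 1-D samples, using bins at the baseline's quantiles."""
    baseline = np.asarray(baseline, dtype=float)
    recent = np.asarray(recent, dtype=float)
    # interior edges at baseline quantiles, so each baseline bin holds ~1/n_bins of the data
    edges = np.quantile(baseline, np.linspace(0, 1, n_bins + 1)[1:-1])
    b = np.bincount(np.digitize(baseline, edges), minlength=n_bins) / len(baseline)
    r = np.bincount(np.digitize(recent, edges), minlength=n_bins) / len(recent)
    b = np.clip(b, eps, None)  # avoid log(0) for empty bins
    r = np.clip(r, eps, None)
    return float(np.sum((r - b) * np.log(r / b)))
```

Compute it per camera or per site, not only fleet-wide, so a single drifting device is not averaged away.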

OOD Detection Playbook

Start with max-softmax probability (MSP) as a baseline. Improve with temperature scaling or energy-based scores. Add simple input checks (size, aspect, blur, exposure) and reject/route anomalous frames. Where latency allows, use a light consistency check (e.g., two augmentations, same prediction) to flag fragile cases. See foundations in supervised learning and calibration.
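The MSP baseline and the energy score both come straight from the logits. In this NumPy sketch, higher scores mean "more in-distribution"; the threshold is a placeholder you would choose on in-distribution validation data (for example, to keep 95% of known-good frames).

```python
import numpy as np

def msp_score(logits, T=1.0):
    """Max softmax probability; lower suggests OOD."""
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return (e / e.sum(axis=1, keepdims=True)).max(axis=1)

def energy_score(logits, T=1.0):
    """Negative energy, T * logsumexp(logits / T); lower suggests OOD."""
    z = logits / T
    m = z.max(axis=1, keepdims=True)
    return T * (m[:, 0] + np.log(np.exp(z - m).sum(axis=1)))

def flag_ood(scores, threshold):
    """True = route the frame to review or a fallback path."""
    return scores < threshold
```

Unlike MSP, the energy score keeps the overall logit magnitude instead of normalizing it away, which is often why it separates in- and out-of-distribution inputs better.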

Hard-Negative Mining & Active Learning

Send low-confidence or novel samples to review; prioritize borderline false positives/negatives. Fold reviewed items back via scheduled re-training with clear data lineage. Pair this with targeted augmentation and domain randomization. In many pipelines, this loop runs alongside deep learning data curation and device-specific tests.
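One common way to pick "borderline" samples for review is margin sampling: rank unlabeled samples by the gap between their top-2 class probabilities and send the smallest gaps first. This NumPy sketch is that technique, not the only option (entropy or ensemble disagreement are alternatives); the review budget is an assumed parameter.

```python
import numpy as np

def select_for_review(probs, budget):
    """Margin sampling: indices of the samples whose top-2 probabilities are closest."""
    top2 = np.sort(probs, axis=1)[:, -2:]  # ascending, so column 1 is the top-1
    margin = top2[:, 1] - top2[:, 0]
    return np.argsort(margin)[:budget]  # smallest margins first
```

Mixing in a few random samples each round helps keep the labeled set from drifting toward only hard edge cases.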

Production Monitoring & Guardrails

Track latency, throughput, error codes, drift alerts, and per-class performance. Define “stop-ship” guardrails (e.g., mAP or recall below threshold on the canary set) and enable safe rollback. In embodied settings, couple perception with robotics & autonomous systems failsafes (e.g., slowdown/stop on uncertainty spikes). Deployment choices often hinge on cloud computing vs. edge trade-offs.

From Pixels to Pose: A Mini-Primer on 3D Vision

Beyond classifying pixels, many real applications need geometry: where am I, how far is that object, what is the camera’s pose? This primer sketches the core ideas that connect images to 3D structure and motion.

Pinhole Camera Model

Images are projections of 3D points through a virtual pinhole. Intrinsic parameters (focal length, principal point) describe the camera; extrinsics describe its position and orientation. Calibrating both is the first step toward reliable measurement and control in robotics & autonomous systems.
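The pinhole model fits in a few lines: extrinsics (R, t) move world points into the camera frame, intrinsics K map them to homogeneous pixel coordinates, and a perspective divide gives pixels. This NumPy sketch ignores lens distortion, which a real calibration would also model; the example K values are assumptions.

```python
import numpy as np

def project_points(X_world, K, R, t):
    """Project (N, 3) world points to (N, 2) pixels: x ~ K [R | t] X."""
    X_cam = X_world @ R.T + t  # world -> camera frame (extrinsics)
    in_front = X_cam[:, 2] > 0  # points behind the camera are not visible
    x = X_cam @ K.T  # camera -> homogeneous pixel coordinates (intrinsics)
    uv = x[:, :2] / x[:, 2:3]  # perspective divide
    return uv, in_front
```

With focal length 800 px and principal point (320, 240), a point 5 m straight ahead lands on the principal point, and moving it 1 m sideways shifts it by 800 · 1 / 5 = 160 px.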

Epipolar Geometry & Stereo Depth

With two calibrated views, corresponding points lie on epipolar lines. Estimating disparity across the image yields dense depth maps useful for navigation, mapping, and inspection. Stereo pairs also help validate monocular predictors in safety-critical pipelines.
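For a rectified stereo pair, depth follows from disparity as Z = f · B / d, with focal length f in pixels and baseline B in meters. This NumPy sketch marks non-positive disparities (no match) as infinite depth, a convention assumed here.

```python
import numpy as np

def disparity_to_depth(disparity, focal_px, baseline_m, min_disp=1e-6):
    """Rectified stereo: Z = f * B / d; invalid (<= min_disp) disparities become inf."""
    d = np.asarray(disparity, dtype=float)
    depth = np.full(d.shape, np.inf)
    valid = d > min_disp
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth
```

Because Z is inversely proportional to d, a fixed disparity error causes a depth error that grows roughly with Z², which is why stereo range accuracy degrades quickly with distance.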

Pose from Points (PnP) & Triangulation

Given 2D–3D correspondences, Perspective-n-Point (PnP) recovers the camera pose; given multi-view 2D points, triangulation recovers 3D structure. These building blocks anchor tasks like fiducial tracking, AR overlays, and UAV landing using satellite-guided navigation cues.
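Triangulation can be sketched with the standard linear (DLT) method: each view contributes two linear constraints on the homogeneous 3D point, and the SVD gives the least-squares solution. This NumPy version assumes known 3×4 projection matrices and noise-free pixels; with real data you would typically refine the result by minimizing reprojection error.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear triangulation of one point from two 3x4 projection matrices
    and its pixel coordinates x1 = (u1, v1), x2 = (u2, v2)."""
    # u * (P[2] . X) - (P[0] . X) = 0 and v * (P[2] . X) - (P[1] . X) = 0 for each view
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]  # right singular vector minimizing ||A X||
    return X[:3] / X[3]  # homogeneous -> Euclidean
```

PnP solves the complementary problem (known 3D points, unknown pose); OpenCV provides both as cv2.triangulatePoints and cv2.solvePnP.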

Monocular Depth, SfM & Visual SLAM

Modern deep learning models infer depth from a single image using learned priors, while classical Structure-from-Motion (SfM) and Visual SLAM estimate camera motion and sparse/dense maps over time. Hybrid systems fuse learned features with geometric solvers for robust tracking under motion blur and low texture.

Sensor Fusion (Camera + LiDAR/IMU)

Cameras provide rich semantics; LiDAR supplies precise range; IMUs stabilize motion estimates. Fusing these streams improves accuracy and resilience across weather and lighting. Fusion choices depend on compute, power, and latency budgets—often the deciding factors in edge vs. cloud deployment.

Computer Vision: Frequently Asked Questions

These questions and answers highlight what computer vision is, how it works technically, and how it connects to real-world applications, ethics, and future study or career paths.

1. What is computer vision, and how does it relate to Artificial Intelligence?

Answer: Computer vision is a field of Artificial Intelligence focused on enabling machines to interpret and understand visual information from the world, such as images and videos. It involves tasks like detecting objects, recognising faces, segmenting regions, estimating motion, and understanding scenes. Computer vision relies on AI and machine learning, especially deep learning, to learn patterns from large datasets of visual examples, and it serves as a crucial bridge between raw visual data and intelligent decision-making in applications like robotics, autonomous driving, healthcare imaging, and security.

2. How is computer vision different from traditional image processing?

Answer: Traditional image processing focuses on manipulating images at the pixel level using mathematical operations such as filtering, enhancement, compression, and edge detection. Computer vision goes further by trying to interpret what is present in an image or video and assign semantic meaning, such as identifying objects, activities, or relationships. While image processing techniques are often used as building blocks, computer vision combines them with machine learning and AI models to recognise patterns, make predictions, and support decisions based on visual inputs.

3. What are some common computer vision tasks and applications?

Answer: Common computer vision tasks include image classification, object detection, semantic and instance segmentation, facial recognition, optical character recognition (OCR), pose estimation, and tracking objects in video. These tasks support applications such as autonomous vehicles detecting pedestrians and road signs, medical imaging systems assisting with diagnosis, quality inspection in manufacturing, biometric security systems, augmented reality overlays, retail analytics, and environmental monitoring using satellite images or drones.

4. Why are deep learning and convolutional neural networks important in computer vision?

Answer: Deep learning, and in particular convolutional neural networks (CNNs), have transformed computer vision by allowing models to automatically learn hierarchical visual features directly from raw pixels. Instead of manually designing features, CNNs learn edges, textures, shapes, and high-level structures as layers of a deep network are trained on large image datasets. This approach has led to major improvements in accuracy for tasks like image classification, object detection, and semantic segmentation, and it underpins many modern computer vision systems deployed in industry and research.

5. What mathematical and programming skills are useful for studying computer vision at university?

Answer: Studying computer vision benefits from a foundation in linear algebra (for vectors, matrices, transformations, and convolutions), calculus and optimisation (for training neural networks), probability and statistics (for modelling uncertainty and evaluating models), and basic geometry (for understanding camera models and projections). On the programming side, skills in Python, familiarity with numerical libraries such as NumPy and OpenCV, and experience with deep learning frameworks like TensorFlow or PyTorch are often important for implementing and experimenting with vision algorithms and models.

6. How are datasets used in computer vision, and why are they so important?

Answer: Datasets are central to computer vision because most modern methods, especially deep learning models, are data-driven. Large collections of labelled images or videos are used to train models to recognise objects, scenes, or actions. Well-designed datasets expose models to a variety of conditions, such as changes in lighting, viewpoint, and background, helping them generalise to new examples. Benchmark datasets also allow fair comparison between algorithms. However, dataset limitations can introduce biases, so careful curation, augmentation, and evaluation are important parts of responsible computer vision practice.

7. What ethical and privacy concerns arise in computer vision applications?

Answer: Ethical and privacy concerns in computer vision include the risk of intrusive surveillance, misuse of facial recognition, biased performance across different demographic groups, lack of consent for image collection, and potential misidentification in security or law-enforcement contexts. Responsible computer vision work involves respecting privacy laws and social norms, minimising unnecessary data collection, ensuring transparency about how images are used, testing models for fairness and robustness, and keeping a human in the loop for high-stakes decisions. These issues are increasingly discussed in AI ethics and policy courses as well as technical modules.

8. What kinds of careers can computer vision skills lead to?

Answer: Computer vision skills can lead to careers as computer vision engineers, machine learning engineers, AI researchers, robotics developers, autonomous systems engineers, and specialists in fields such as medical imaging, remote sensing, and augmented or virtual reality. Graduates may work in technology companies, automotive firms, healthcare and biomedical sectors, manufacturing and quality control, security and defence, or research institutions. Strong foundations in AI, data, and software engineering also give flexibility to move between broader AI and data science roles.

Computer Vision — Conclusion

Computer Vision turns pixels into decisions. What began with handcrafted filters has grown into end-to-end systems that learn from data, quantify uncertainty, and run efficiently on edge and cloud. On this page you've moved from algorithms to practice: disciplined data and annotation, leakage-safe splits, task-appropriate metrics, and production-grade deployment.

Impact spans autonomous mobility, medical imaging, manufacturing inspection, agriculture, geospatial analysis, and more. In real products, success comes from systems thinking: robust datasets, clear evaluation (IoU, AP/mAP, mIoU, PQ), calibrated confidence with abstain paths, monitoring for drift/OOD, and careful runtime choices (quantization, distillation, TensorRT/TFLite/ONNX).
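IoU underpins the detection metrics listed above: a prediction usually counts as a true positive only if it overlaps an unmatched ground-truth box above an IoU threshold. The sketch below computes box IoU and a simplified greedy matching at a single threshold of 0.5 to get precision and recall. It is an illustration of the idea, not a replacement for an official evaluator such as the COCO toolkit, and it ignores class labels and crowd regions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(preds: List[Tuple[float, Box]], gts: List[Box],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Greedy matching: highest-scoring predictions claim ground-truth boxes first.
    Each ground-truth box is matched at most once, so duplicates become false positives."""
    matched = set()
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, g in enumerate(gts):
            if j in matched:
                continue
            iou = box_iou(box, g)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall

print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175 ≈ 0.143

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (1, 1, 10, 10)),    # IoU 0.81 with the first GT -> true positive
         (0.8, (50, 50, 60, 60)),  # no overlap -> false positive
         (0.6, (0, 0, 10, 10))]    # duplicate of an already-matched GT -> false positive
print(precision_recall(preds, gts))  # precision 1/3, recall 1/2
```

Average precision (AP) summarizes how precision varies as recall grows while the confidence threshold is swept; COCO-style mAP additionally averages AP over classes and over IoU thresholds from 0.50 to 0.95.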

Responsible CV by design.

- Privacy & consent: minimize retention, process on-device when feasible, document data flows.

- Fairness & bias: measure per-class/per-slice performance; improve long-tail coverage with targeted data.

- Transparency: provide Model/Data Cards, versioned configs, and audit trails for decisions and updates.

- Safety: define escalation for low-confidence outputs; test under corruptions and environmental extremes.
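One way to implement the escalation path above is to abstain whenever calibrated confidence falls below a threshold. The sketch below uses temperature scaling, a common post-hoc calibration method, where the temperature is assumed to have been fit on a held-out validation set. The value 1.5, the 0.8 threshold, and the defect labels are hypothetical placeholders; in practice the threshold is chosen from the cost of errors versus the cost of human review.

```python
import math
from typing import List, Optional, Tuple

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; T > 1 softens over-confident scores."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def predict_or_abstain(logits: List[float], labels: List[str],
                       temperature: float = 1.5,
                       threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """Return (label, confidence), or (None, confidence) to escalate to a human."""
    probs = softmax(logits, temperature)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]  # low confidence: abstain and escalate
    return labels[best], probs[best]

labels = ["ok", "scratch", "dent"]  # hypothetical defect classes
print(predict_or_abstain([6.0, 1.0, 0.5], labels))  # confident -> ("ok", ~0.94)
print(predict_or_abstain([2.0, 1.8, 0.2], labels))  # ambiguous -> (None, ~0.46)
```

Logging the abstention rate per slice also gives an early signal of drift: a sudden rise in abstentions often means inputs no longer look like the training data.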

Where to go next

- Build or audit a pipeline with the Practical Vision Pipeline checklist.

- Report honest results using Vision Metrics that Matter and add per-class/slice tables.

- Harden for the real world with Evaluation & Error Analysis and OOD checks.

- Ship confidently via Edge vs Cloud Deployment and the Runtime Export Guide.

Computer Vision – Review Questions and Answers:

1. What is computer vision and why is it a vital component of modern IT?

Answer: Computer vision is a field of artificial intelligence that enables computers to interpret and understand visual data from the world. It uses algorithms and deep learning models to process images and videos, allowing systems to recognize objects, detect patterns, and make decisions based on visual input. This capability is vital in modern IT as it supports applications ranging from autonomous vehicles to medical diagnostics and security systems. By automating complex visual tasks, computer vision enhances efficiency and drives innovation across multiple industries.

2. How do deep learning techniques enhance the performance of computer vision systems?

Answer: Deep learning techniques, particularly convolutional neural networks (CNNs), have substantially improved the accuracy of computer vision systems. They learn hierarchical features directly from raw pixels, from edges and textures in early layers to object parts and whole objects in deeper ones, which reduces the need for manual feature engineering. When trained on varied data, often expanded with augmentation, these models become more robust to changes in lighting, scale, and orientation, which are common challenges in image analysis. As a result, deep learning has markedly improved tasks such as object detection, image classification, and segmentation, making computer vision applications more reliable and scalable.

3. What are the primary challenges faced by computer vision applications in real-world scenarios?

Answer: Computer vision applications face challenges such as varying lighting conditions, occlusions, and diverse object orientations that can degrade performance. Additionally, high computational demands and the need for large annotated datasets present significant hurdles for developing robust models. These challenges require sophisticated algorithms and powerful hardware to achieve real-time processing and high accuracy. Overcoming these issues is essential for deploying computer vision solutions in dynamic environments like autonomous driving or surveillance.

4. How is image processing used to extract useful information in computer vision systems?

Answer: Image processing involves a series of techniques to enhance and analyze digital images, extracting meaningful information for further analysis. Techniques such as filtering, edge detection, and morphological operations are applied to clean and segment images, highlighting features of interest. This preprocessing is crucial for reducing noise and improving the accuracy of subsequent tasks like object recognition and classification. By transforming raw images into a more analyzable format, image processing lays the foundation for effective computer vision applications.
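The filtering, edge-detection, and morphology steps described above can be sketched in a few lines. The example below applies the standard 3x3 Sobel kernels to estimate gradient magnitude (strong where intensity changes, as at an edge) and performs binary erosion with a 3x3 square, which removes small noise blobs from a mask. It uses nested lists and leaves border pixels at 0 purely for simplicity; libraries such as OpenCV provide optimized versions with configurable border handling.

```python
from typing import List

Image = List[List[float]]

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]

def filter3x3(img: Image, kernel: List[List[int]]) -> Image:
    """Apply a 3x3 kernel (cross-correlation, as most CV libraries do) to interior pixels.
    Border pixels are left at 0 for simplicity."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = sum(kernel[i][j] * img[r + i - 1][c + j - 1]
                            for i in range(3) for j in range(3))
    return out

def sobel_magnitude(img: Image) -> Image:
    """Gradient magnitude sqrt(gx^2 + gy^2): large values mark edges."""
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    return [[(x * x + y * y) ** 0.5 for x, y in zip(rx, ry)] for rx, ry in zip(gx, gy)]

def erode(mask: List[List[int]]) -> List[List[int]]:
    """Binary erosion with a 3x3 square: a pixel stays 1 only if its whole
    neighbourhood is 1, which strips thin noise and shrinks blobs. Borders become 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = int(all(mask[r + i][c + j] for i in (-1, 0, 1) for j in (-1, 0, 1)))
    return out

# A vertical step edge: dark on the left, bright on the right
step = [[0, 0, 0, 10, 10] for _ in range(4)]
print(sobel_magnitude(step)[1])  # [0.0, 0, 40.0, 40.0, 0.0]: response only near the edge

blob = [[0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0]]
print(erode(blob))  # only the centre pixel survives
```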

5. In what ways does computer vision contribute to advancements in automation and robotics?

Answer: Computer vision contributes significantly to automation and robotics by enabling machines to perceive and interpret their environment. It allows robots to navigate complex spaces, recognize objects, and perform precise tasks with minimal human intervention. This technology is integral to applications such as robotic surgery, automated manufacturing, and warehouse logistics. By integrating computer vision, automation systems become more adaptable, efficient, and capable of operating in unstructured environments.

6. What role does data annotation play in training computer vision models, and what challenges are associated with it?

Answer: Data annotation is the process of labeling images with metadata such as object boundaries, classifications, and key points, which is crucial for training supervised computer vision models. Accurate annotations enable models to learn from examples and improve their ability to generalize to new data. However, the annotation process is often time-consuming, expensive, and prone to human error, making it a significant bottleneck in developing high-quality datasets. Common mitigations include semi-automated (model-assisted) annotation tools and crowdsourcing to speed up labeling, paired with quality checks such as reviewer audits and inter-annotator agreement to catch errors.
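Two of the quality checks mentioned above are easy to automate. The sketch below validates individual box annotations (known label, non-empty box inside the image) and flags objects where two annotators disagree on the label or where their boxes overlap with IoU below a threshold. The label set, the 0.7 agreement threshold, and the assumption that both annotators' lists describe the same objects in the same order are all hypothetical simplifications; real tools match boxes between annotators before comparing them.

```python
from typing import Dict, List, Sequence

ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}  # hypothetical label taxonomy

def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def validate_annotation(ann: Dict, img_w: int, img_h: int) -> List[str]:
    """Return the problems found in one box annotation; an empty list means it passes."""
    problems = []
    if ann["label"] not in ALLOWED_LABELS:
        problems.append(f"unknown label {ann['label']!r}")
    x0, y0, x1, y1 = ann["box"]
    if not (0 <= x0 < x1 <= img_w and 0 <= y0 < y1 <= img_h):
        problems.append(f"box {ann['box']} is empty or outside the {img_w}x{img_h} image")
    return problems

def flag_disagreements(anns_a: List[Dict], anns_b: List[Dict],
                       min_iou: float = 0.7) -> List[int]:
    """Indices where two annotators disagree on label or box placement.
    Assumes both lists describe the same objects in the same order."""
    return [i for i, (a, b) in enumerate(zip(anns_a, anns_b))
            if a["label"] != b["label"] or box_iou(a["box"], b["box"]) < min_iou]

print(validate_annotation({"label": "car", "box": (10, 20, 50, 60)}, 100, 100))    # []
print(validate_annotation({"label": "truck", "box": (90, 10, 120, 40)}, 100, 100))  # two problems

annotator_a = [{"label": "car", "box": (10, 20, 50, 60)},
               {"label": "pedestrian", "box": (0, 0, 10, 30)}]
annotator_b = [{"label": "car", "box": (12, 20, 50, 60)},
               {"label": "cyclist", "box": (0, 0, 10, 30)}]
print(flag_disagreements(annotator_a, annotator_b))  # [1]: label mismatch on the second object
```

Flagged items can be routed back for review rather than discarded, which keeps hard but valid examples in the dataset.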

7. How does computer vision integrate with other IT domains to drive digital transformation?

Answer: Computer vision integrates with domains like big data analytics, cloud computing, and the Internet of Things (IoT) to provide comprehensive solutions that enhance digital transformation. By processing and analyzing vast amounts of visual data, computer vision contributes to smarter decision-making, real-time monitoring, and predictive maintenance. This integration enables organizations to optimize operations, enhance customer experiences, and develop innovative products and services. The convergence of computer vision with other IT fields drives efficiency and innovation across various sectors, fueling overall digital transformation.

8. What are some common applications of computer vision in everyday technology?

Answer: Computer vision is widely used in everyday technology, with applications including facial recognition on smartphones, automated license plate readers, and image search engines. It also plays a critical role in augmented reality, where real-time image processing overlays digital information onto the physical world. In retail, computer vision is used for inventory management and personalized advertising, while in healthcare, it aids in diagnostics through medical imaging analysis. These applications illustrate how computer vision improves convenience, security, and efficiency in daily life.

9. How does the scalability of computer vision systems impact their deployment in large-scale IT infrastructures?

Answer: Scalability in computer vision systems is essential for handling large volumes of visual data and supporting real-time applications in expansive IT infrastructures. As datasets and user demands grow, scalable architectures let systems keep latency and throughput within targets without a proportional rise in computational cost. Techniques such as cloud computing, parallel processing, and optimized neural network architectures enable systems to scale efficiently. This scalability is critical for deploying computer vision solutions in areas such as surveillance networks, smart cities, and industrial automation.

10. What future trends in computer vision are likely to shape the IT landscape in the coming years?

Answer: Future trends in computer vision include advancements in deep learning architectures, increased use of transfer learning, and the integration of multimodal data processing. Emerging technologies such as edge computing and 5G will enable faster, real-time analysis of visual data at scale. These trends are expected to drive innovations in areas like autonomous systems, personalized healthcare, and enhanced cybersecurity. As computer vision continues to evolve, it will play an increasingly critical role in shaping the future of IT and digital transformation.

Computer Vision – Thought-Provoking Questions and Answers

1. How might the evolution of computer vision redefine the boundaries of human-computer interaction?

Answer: The evolution of computer vision is set to transform human-computer interaction by enabling more natural and intuitive interfaces that rely on gesture recognition, facial expressions, and real-time visual feedback. This technology could lead to systems that understand and respond to human emotions and intentions, creating a more seamless integration between users and devices. Such advancements would allow for touchless interactions and personalized experiences, enhancing accessibility and convenience in both consumer electronics and professional applications. As computer vision becomes more sophisticated, it may blur the lines between the digital and physical worlds, offering transformative ways to interact with technology.

In addition, these changes could significantly impact industries such as healthcare, where computer vision-driven interfaces could assist patients with disabilities, and retail, where personalized shopping experiences could be enhanced through visual analytics. The redefinition of interaction boundaries might also lead to new ethical and privacy considerations, as the collection and interpretation of visual data become more pervasive. Balancing innovation with responsible use will be key to harnessing the full potential of advanced human-computer interactions.

2. What are the potential ethical implications of deploying computer vision in surveillance and public spaces?

Answer: Deploying computer vision in surveillance and public spaces raises significant ethical implications related to privacy, consent, and potential misuse of data. The technology’s ability to continuously monitor and analyze visual information can lead to mass data collection, often without the explicit knowledge or consent of individuals. This level of surveillance can result in a loss of anonymity and may be exploited for unauthorized tracking or profiling, leading to potential abuses of power. Ensuring that such systems are used responsibly and transparently is critical for maintaining public trust and protecting individual rights.

Moreover, ethical considerations must include the potential for bias in computer vision algorithms, which can disproportionately affect certain groups and lead to unfair treatment. Establishing robust regulatory frameworks and ethical guidelines is essential to mitigate these risks and ensure that surveillance technologies are implemented in a manner that respects human dignity and privacy. A multidisciplinary approach involving technologists, ethicists, policymakers, and community representatives is necessary to address these complex issues.

3. How might computer vision technologies impact the future of autonomous vehicles and transportation systems?